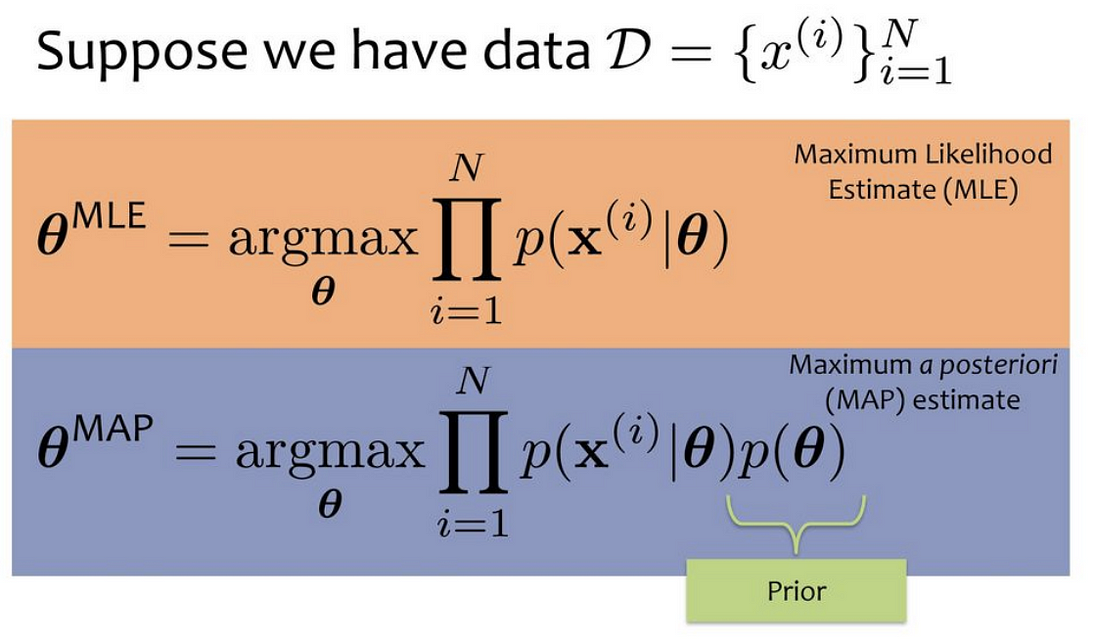

A Brief History: Who Developed It? Maximum Likelihood Estimation (MLE) and Maximum A Posteriori (MAP) Learning are cornerstone techniques in statistical learning. Ronald Fisher introduced MLE in the early 20th century as a tool for statistical estimation. MAP, building on …

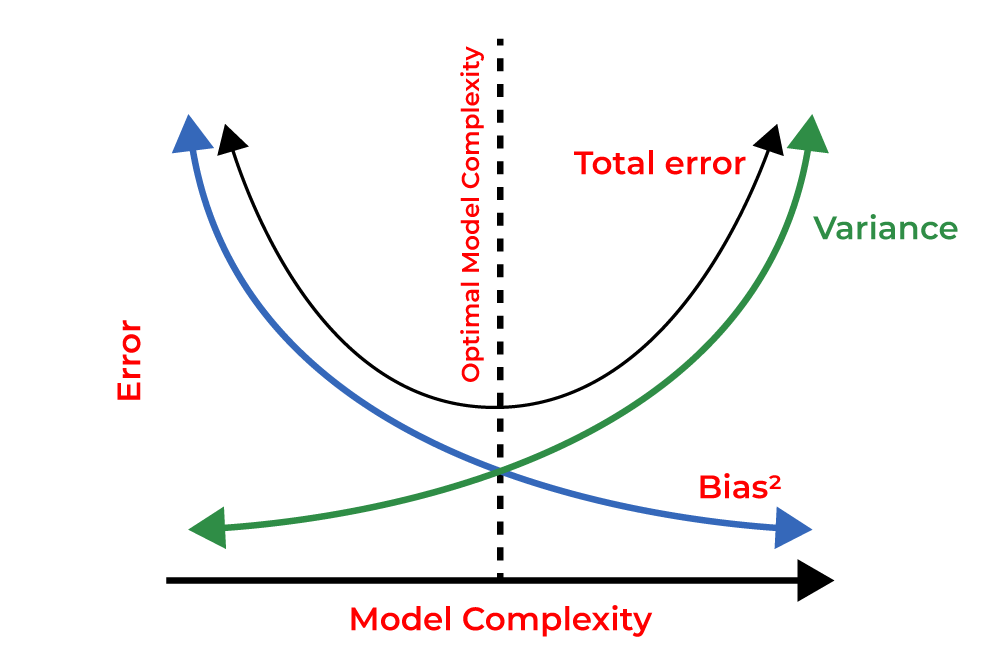

A Brief History: Who Developed Regularization? Regularization, a key technique in machine learning, originated in statistics and mathematics as a way to address overfitting in predictive models. Popularized in the 1980s, it became central to regression analysis and neural networks. Researchers like Andrew …

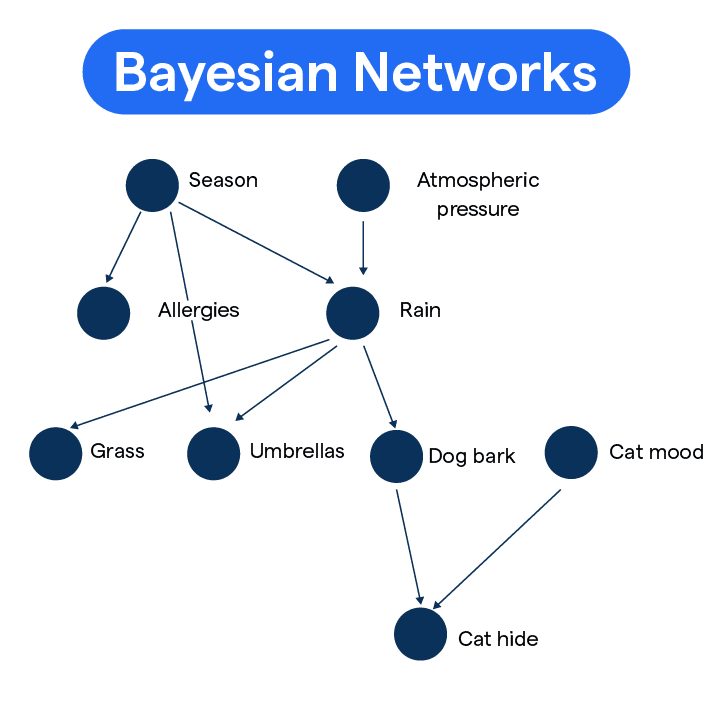

A Brief History of This Tool Bayesian Networks, introduced by Judea Pearl in the 1980s, revolutionized the modeling of uncertainty in complex systems. His work integrated probability theory and graph theory to address challenges in reasoning under uncertainty. This tool …

A Brief History: Who Developed It? The Completeness Score, a clustering evaluation metric, was introduced to measure whether all members of a true class end up in the same cluster. It builds on earlier measures such as the Rand Index and Mutual Information Score. These earlier methods laid the groundwork for …

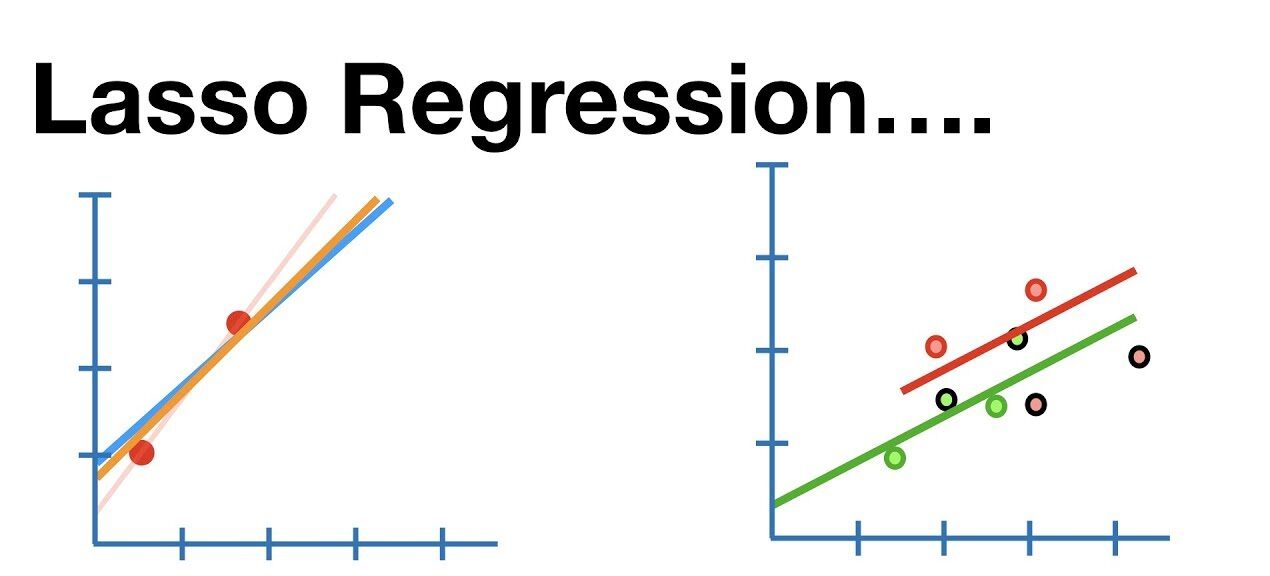

A Brief History: Who Developed Lasso Regularization? Lasso regularization, short for Least Absolute Shrinkage and Selection Operator, was introduced by Robert Tibshirani in 1996 to address the challenges of feature selection and overfitting in regression models. Developed within the …

A Brief History: Who Developed It? K-Means clustering was introduced in the 1950s by Stuart Lloyd for signal processing, and James MacQueen coined the name "k-means" in 1967 while applying it to data analysis. Today, it is a cornerstone in machine learning clustering …

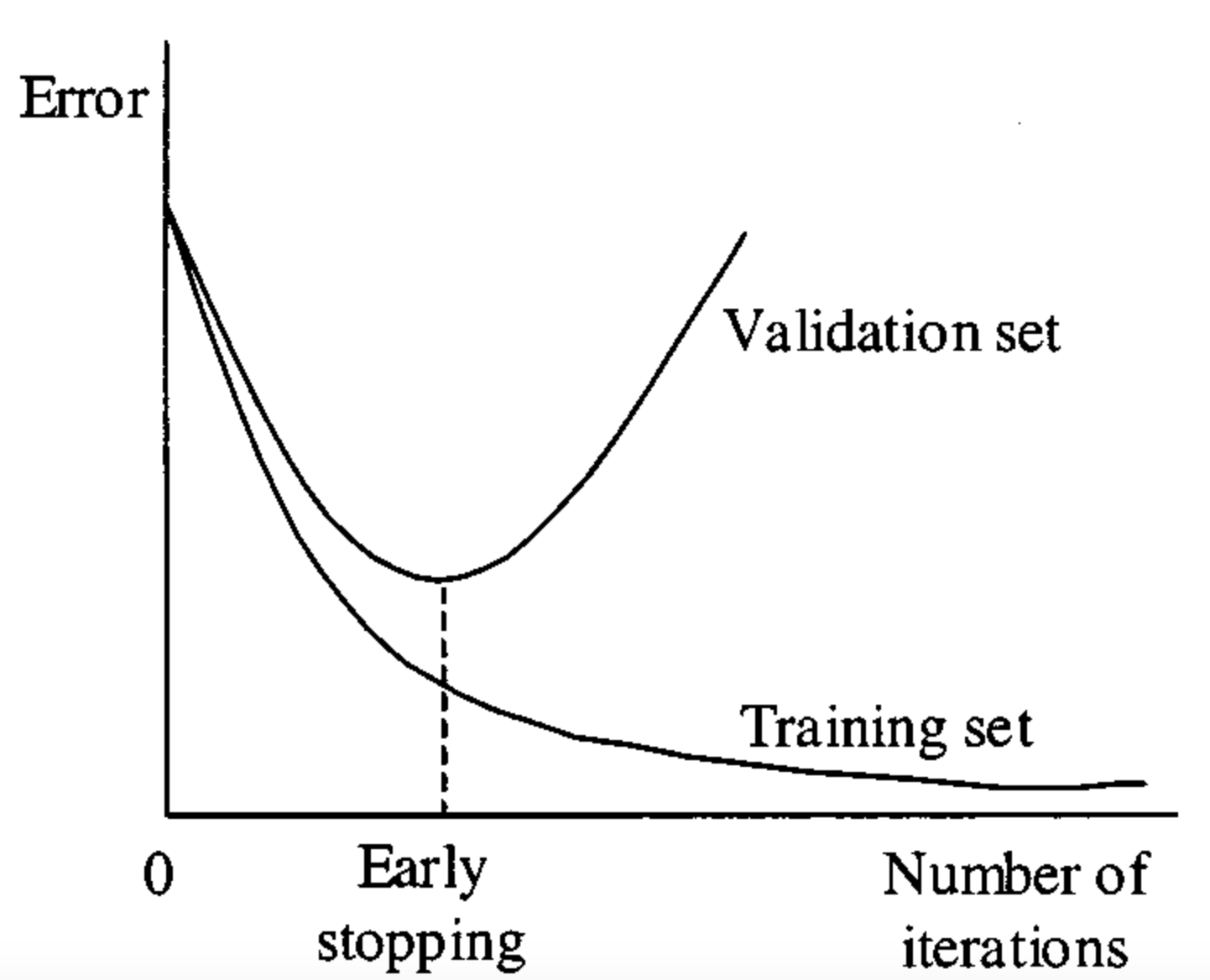

A Brief History: Who Developed Early Stopping? Early stopping emerged in the field of neural networks and statistical learning during the late 20th century. It was developed to address the challenge of overfitting in iterative optimization algorithms. Though not attributed …

A Brief History of Generative Gaussian Mixtures Generative Gaussian Mixtures, rooted in probability and statistics, trace back to Carl Friedrich Gauss’s pioneering work on Gaussian distributions. These principles were later integrated into machine learning algorithms, such as Expectation-Maximization, to advance …

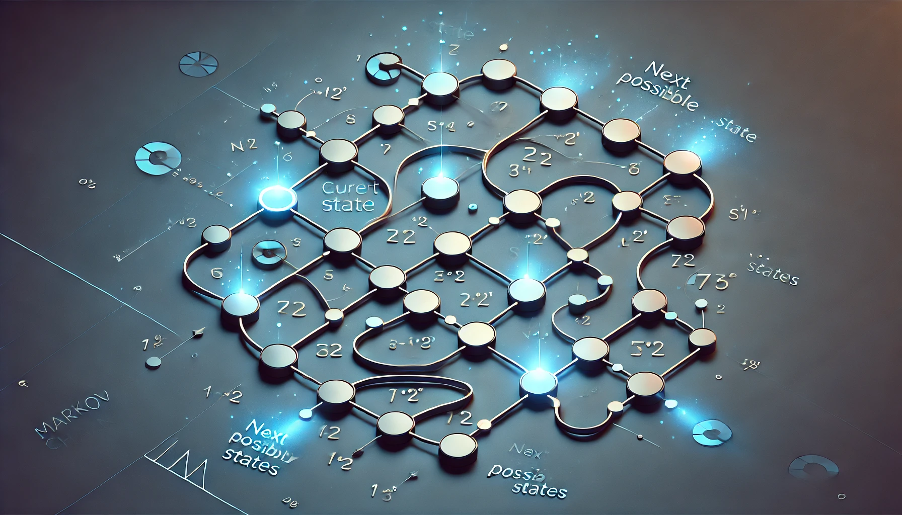

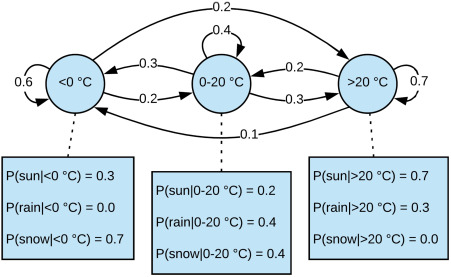

A Brief History of This Tool Markov Chains, introduced by Russian mathematician Andrey Markov in 1906, revolutionized stochastic modeling. His work on probability transitions provided a framework for analyzing dynamic systems. Today, Markov Chains are foundational in machine learning, financial …

A Brief History: Who Developed Hidden Markov Models (HMMs)? Hidden Markov Models were introduced in the 1960s by Leonard E. Baum and colleagues. These models have become pivotal in various industries, from speech recognition to bioinformatics, thanks to their adaptability …