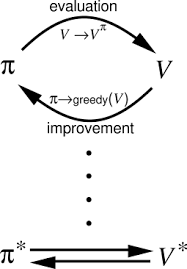

Policy iteration, rooted in Richard Bellman's 1950s work on dynamic programming, formalised by Ronald Howard in 1960, and popularised in Reinforcement Learning by Andrew Barto and Richard Sutton, is a fundamental method for optimising decision-making strategies. It alternates between evaluating the current policy and improving it greedily against the resulting value estimates, and for finite Markov decision processes it converges to an optimal policy in a finite number of iterations.
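The evaluate-then-improve loop can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `policy_iteration`, the array layout (`P` as actions × states × next states, `R` as states × actions), and the two-state toy problem are all assumptions made for the example.

```python
import numpy as np

def policy_iteration(P, R, gamma=0.9, max_iters=1000):
    """Tabular policy iteration (illustrative sketch).

    P: (A, S, S) array, P[a, s, t] = probability of moving s -> t under action a.
    R: (S, A) array of expected immediate rewards.
    """
    n_actions, n_states, _ = P.shape
    states = np.arange(n_states)
    policy = np.zeros(n_states, dtype=int)  # start with action 0 everywhere
    for _ in range(max_iters):
        # Policy evaluation: solve (I - gamma * P_pi) V = R_pi exactly.
        P_pi = P[policy, states]            # (S, S) transitions under the policy
        R_pi = R[states, policy]            # (S,) rewards under the policy
        V = np.linalg.solve(np.eye(n_states) - gamma * P_pi, R_pi)
        # Policy improvement: act greedily with respect to V.
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        new_policy = Q.argmax(axis=1)
        if np.array_equal(new_policy, policy):
            break                           # policy is stable, so it is optimal
        policy = new_policy
    return policy, V

# Hypothetical toy problem: action 0 stays put, action 1 switches state,
# and only staying in state 1 earns a reward of 1.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],
              [[0.0, 1.0], [1.0, 0.0]]])
R = np.array([[0.0, 0.0],
              [1.0, 0.0]])
policy, V = policy_iteration(P, R)
print(policy, V)  # policy [1 0], values approximately [9, 10]
```

Solving the linear system gives an exact evaluation step, which is practical only for small state spaces; larger problems typically replace it with a few sweeps of iterative evaluation.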

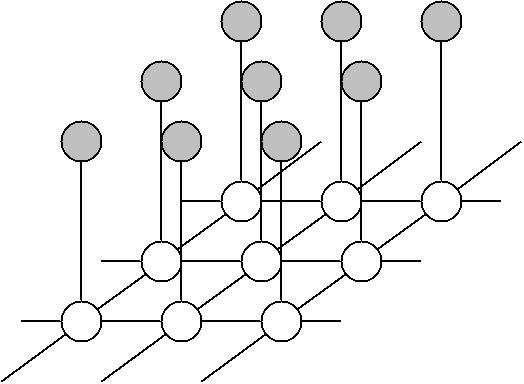

Markov Random Fields (MRFs), which build on Andrey Markov’s early 20th-century work and were formalised by Julian Besag in the 1970s, are undirected probabilistic graphical models in which each variable depends directly only on its neighbours in the graph. Widely used in image processing, natural language processing, and environmental modelling, MRFs capture local contextual dependencies within structured data.
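To make the idea of contextual dependencies concrete, here is a small sketch of a pairwise MRF on a pixel grid, used to clean up a noisy binary image. The Ising-style energy, the weights `beta` and `eta`, the function names, and the choice of iterated conditional modes (ICM) as the optimiser are all assumptions for illustration; many other MRF formulations and inference methods exist.

```python
import numpy as np

def energy(x, y, beta=1.0, eta=1.0):
    """Energy of labelling x (values in {-1, +1}) given the noisy observation y."""
    # Pairwise term: rewards agreement between horizontal and vertical neighbours.
    pair = np.sum(x[:, :-1] * x[:, 1:]) + np.sum(x[:-1, :] * x[1:, :])
    # Unary term: rewards agreement between each label and its observed pixel.
    return -beta * pair - eta * np.sum(x * y)

def icm_denoise(y, beta=1.0, eta=1.0, sweeps=5):
    """Iterated conditional modes: greedily set each pixel to its locally best label."""
    x = y.copy()
    rows, cols = x.shape
    for _ in range(sweeps):
        for i in range(rows):
            for j in range(cols):
                nb = 0  # sum of the labels of the 4-connected neighbours
                if i > 0:
                    nb += x[i - 1, j]
                if i < rows - 1:
                    nb += x[i + 1, j]
                if j > 0:
                    nb += x[i, j - 1]
                if j < cols - 1:
                    nb += x[i, j + 1]
                field = beta * nb + eta * y[i, j]
                if field > 0:
                    x[i, j] = 1
                elif field < 0:
                    x[i, j] = -1
                # On a tie the current label is kept, so the energy never increases.
    return x

# Hypothetical test image: left half +1, right half -1, with 10% of pixels flipped.
rng = np.random.default_rng(0)
clean = np.ones((16, 16), dtype=int)
clean[:, 8:] = -1
flip = rng.random(clean.shape) < 0.1
noisy = np.where(flip, -clean, clean)
restored = icm_denoise(noisy)
print(energy(noisy, noisy), energy(restored, noisy))
```

ICM only finds a local minimum of the energy, but each pixel update depends solely on its four neighbours and its own observation, which is exactly the local dependency structure an MRF encodes.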

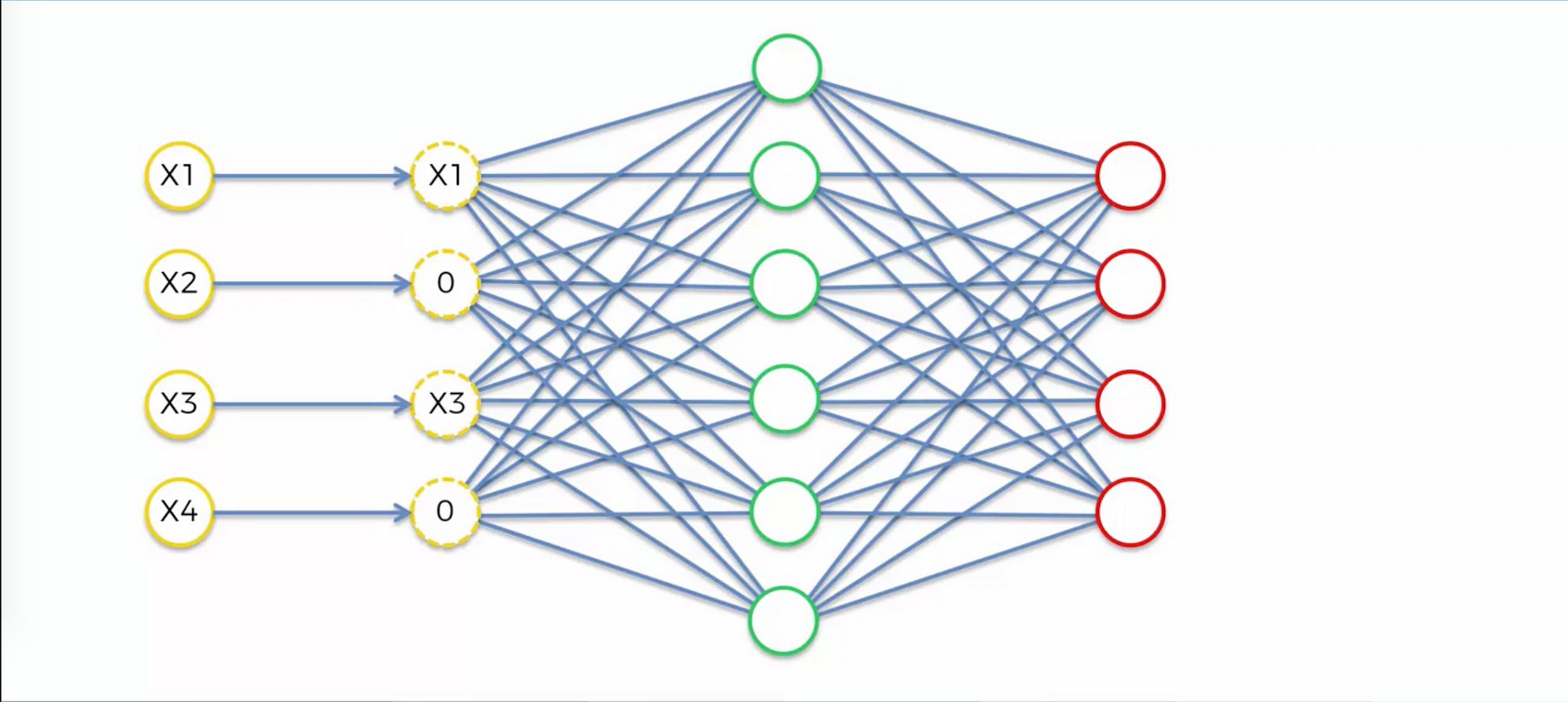

Denoising autoencoders, introduced in 2008 by Pascal Vincent and colleagues, are neural networks trained to reconstruct clean data from deliberately corrupted inputs. Widely used in medical imaging, satellite data analysis, and speech processing, they improve data quality and learn features that are robust to noise.
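The training objective can be written compactly. The notation here is an assumption chosen for illustration: $x$ is a clean input, $q$ is a corruption process (for example additive Gaussian noise or random masking), $f_{\theta}$ is the encoder, $g_{\theta'}$ is the decoder, and $L$ is a reconstruction loss such as squared error.

$$
\tilde{x} \sim q(\tilde{x} \mid x), \qquad
\min_{\theta,\, \theta'} \; \mathbb{E}_{x,\, \tilde{x}} \Big[ L\big(x,\; g_{\theta'}(f_{\theta}(\tilde{x}))\big) \Big]
$$

The key point is that the network sees the corrupted $\tilde{x}$ but is scored against the clean $x$, so it cannot succeed by simply copying its input.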

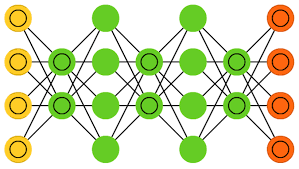

Deep Belief Networks (DBNs), introduced in 2006 by Geoffrey Hinton and colleagues, advanced unsupervised learning by stacking restricted Boltzmann machines and training them greedily, one layer at a time, to extract hierarchical features. Widely used in industries like healthcare, finance, and transport, DBNs support tasks such as image recognition, natural language processing, and time-series prediction.