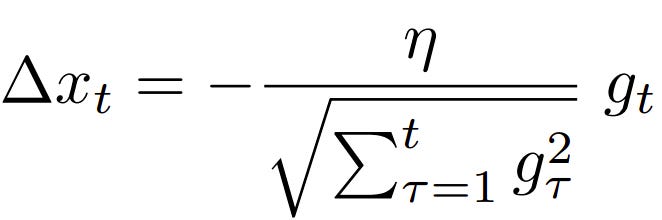

AdaDelta is an adaptive learning rate optimisation algorithm introduced by Matthew D. Zeiler in 2012 as an extension of AdaGrad. It replaces AdaGrad's ever-growing sum of squared gradients with a decaying average, which stops the effective learning rate from shrinking towards zero, and it removes the need to hand-tune a global learning rate.
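The AdaDelta update rule can be sketched for a single scalar parameter as follows. This is a minimal illustration rather than a reference implementation: the function name `adadelta_step`, the toy loss `(x - 3)^2`, and the values `rho=0.95` and `eps=1e-6` are assumptions chosen for the example.

```python
import math

def adadelta_step(x, grad, state, rho=0.95, eps=1e-6):
    """Apply one AdaDelta update to a scalar parameter x.

    state is a pair (running average of squared gradients,
                     running average of squared updates).
    """
    avg_sq_grad, avg_sq_delta = state
    # Decaying average of squared gradients (instead of AdaGrad's full sum).
    avg_sq_grad = rho * avg_sq_grad + (1 - rho) * grad ** 2
    # Step size is the ratio of the two RMS terms; no global learning rate.
    delta = -math.sqrt(avg_sq_delta + eps) / math.sqrt(avg_sq_grad + eps) * grad
    # Decaying average of squared updates, used by the next step.
    avg_sq_delta = rho * avg_sq_delta + (1 - rho) * delta ** 2
    return x + delta, (avg_sq_grad, avg_sq_delta)

# Hypothetical toy problem: minimise (x - 3)^2 starting from x = 0.
def loss(x):
    return (x - 3.0) ** 2

def loss_grad(x):
    return 2.0 * (x - 3.0)

x = 0.0
state = (0.0, 0.0)
for _ in range(200):
    x, state = adadelta_step(x, loss_grad(x), state)

print(x, loss(x))
```

With these settings the early steps are small, so the loop moves `x` towards the minimum gradually; the loss after 200 steps is lower than at the start, but the parameter has not yet converged.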

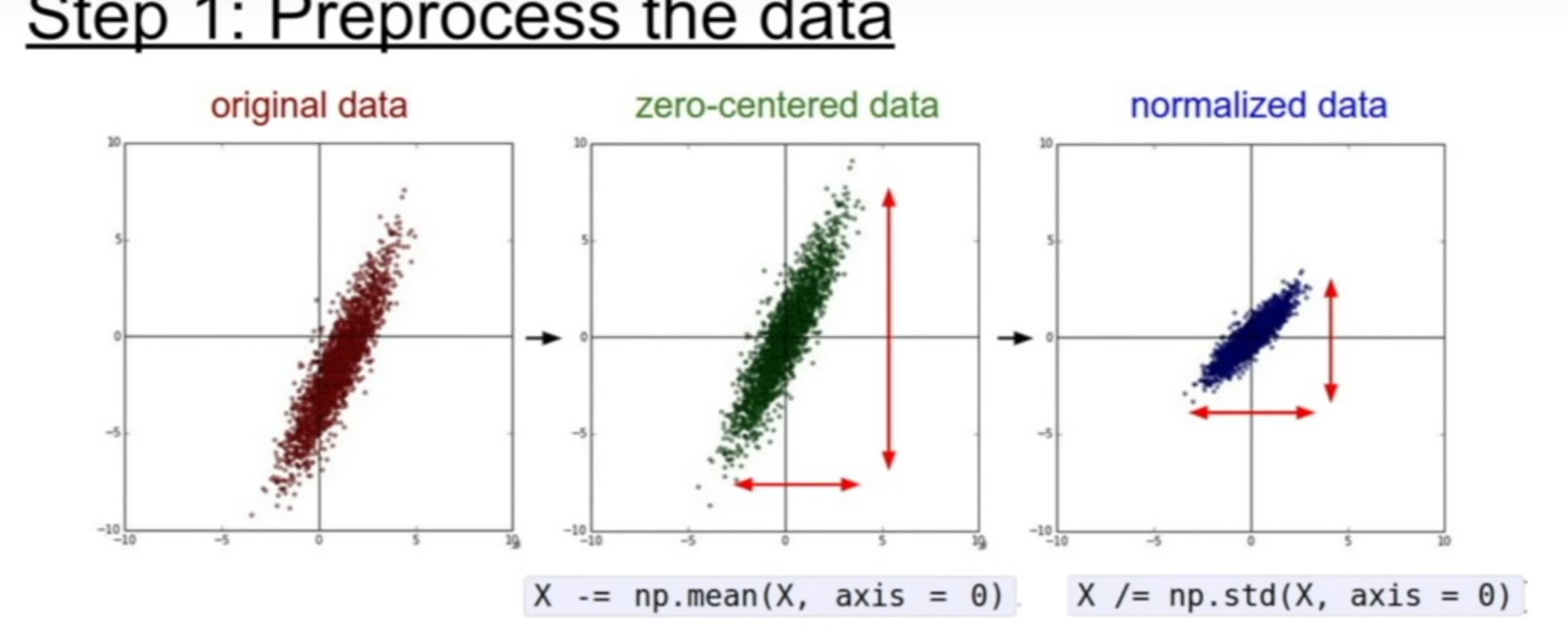

Zero-centring and whitening are fundamental pre-processing techniques in machine learning. Zero-centring subtracts each feature's mean so the data are centred on zero, and whitening then removes correlations between features and rescales them to unit variance. These steps can speed up training and often improve model accuracy, and they are used across industries such as health, environment, and transport in Australia.
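One common way to carry out both steps is PCA whitening, sketched below with NumPy. The function names, the small `eps` added for numerical stability, and the synthetic data are assumptions for illustration; other whitening variants (such as ZCA) differ only in the final rotation.

```python
import numpy as np

def zero_centre(X):
    """Subtract the per-feature mean so each column has mean zero."""
    return X - X.mean(axis=0)

def pca_whiten(X, eps=1e-8):
    """Zero-centre X, then decorrelate and rescale features to unit variance."""
    Xc = zero_centre(X)
    # Sample covariance matrix of the centred data (features x features).
    cov = Xc.T @ Xc / (Xc.shape[0] - 1)
    # Eigendecomposition of the symmetric covariance matrix.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Rotate onto the principal axes, then divide by each axis's std deviation.
    return Xc @ eigvecs / np.sqrt(eigvals + eps)

# Synthetic correlated data with a non-zero mean (illustrative only).
rng = np.random.default_rng(0)
mixing = np.array([[2.0, 0.5, 0.0],
                   [0.0, 1.0, 0.3],
                   [0.0, 0.0, 0.5]])
X = rng.normal(size=(500, 3)) @ mixing + 5.0

X_white = pca_whiten(X)
print(np.round(np.cov(X_white, rowvar=False), 3))  # close to the identity matrix
```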

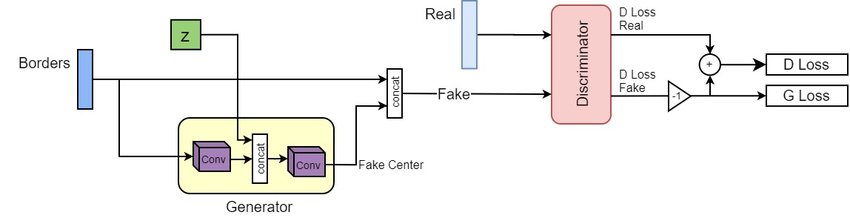

The Wasserstein GAN (WGAN), introduced in 2017 by Martin Arjovsky, Soumith Chintala, and Léon Bottou, replaces the original GAN objective with an approximation of the Wasserstein (earth mover's) distance, which makes training more stable and reduces mode collapse. Its applications range from generating realistic images to synthetic data creation, with impacts globally and in Australia.

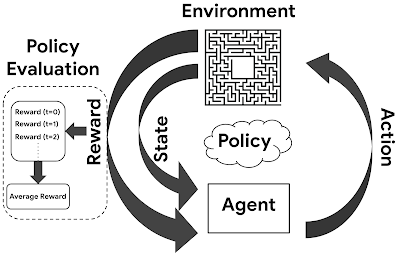

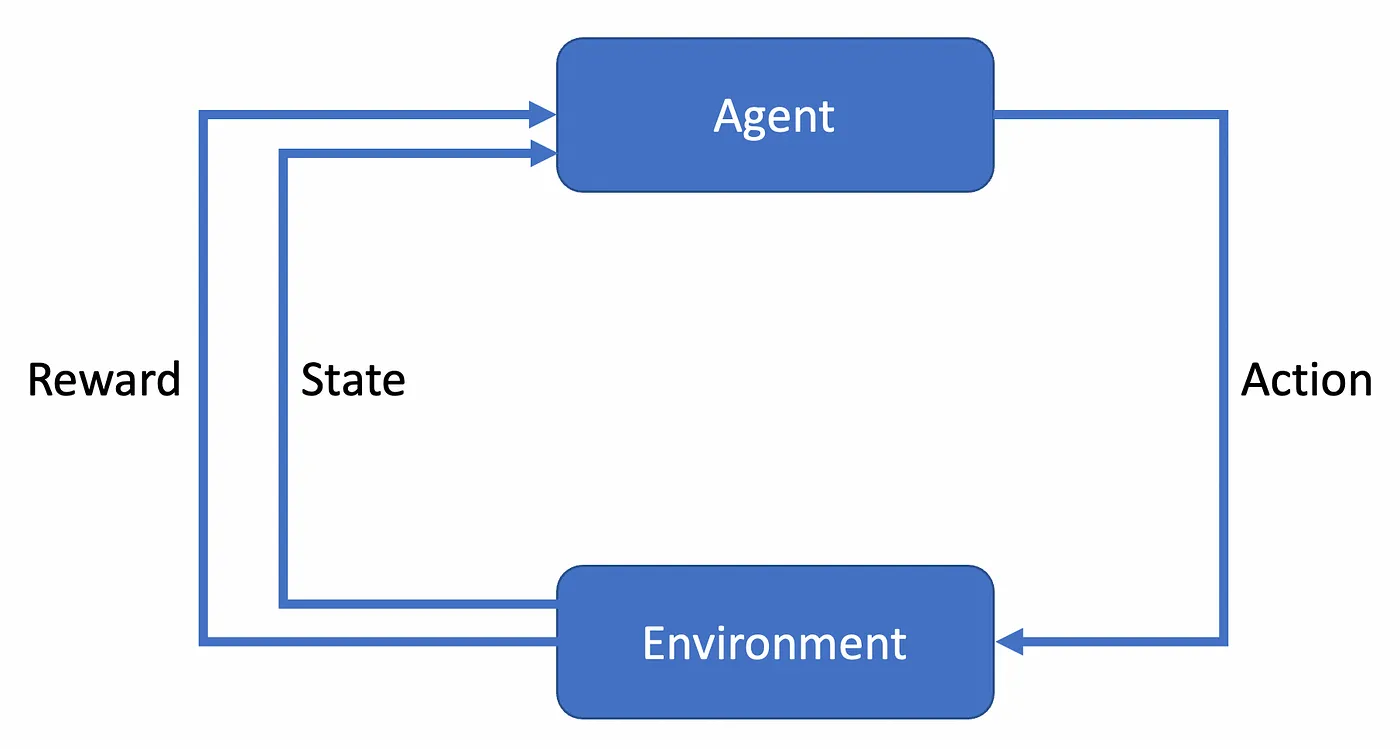

In machine learning, a “policy” is a concept from Reinforcement Learning: a mapping from each state an agent observes to the action it takes, or to a probability distribution over actions. Learning a good policy is what makes an agent's decisions efficient and adaptable, with applications in autonomous vehicles, audits, and resource allocation in Australia.
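As a minimal sketch, a policy over a small discrete problem can be written as a function from state to action. The example below assumes a hypothetical table of action values (`q_values`) for a traffic-light scenario and shows both a greedy policy and an epsilon-greedy variant that occasionally explores; all names and numbers are illustrative.

```python
import random

def greedy_policy(q_values, state):
    """Deterministic policy: pick the action with the highest estimated value."""
    actions = q_values[state]
    return max(actions, key=actions.get)

def epsilon_greedy_policy(q_values, state, epsilon, rng):
    """Stochastic policy: explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.choice(sorted(q_values[state]))
    return greedy_policy(q_values, state)

# Hypothetical action-value estimates for two states.
q_values = {
    "red_light": {"stop": 1.0, "go": -5.0},
    "green_light": {"stop": -0.5, "go": 1.0},
}

rng = random.Random(0)
print(greedy_policy(q_values, "red_light"))
print(epsilon_greedy_policy(q_values, "green_light", epsilon=0.1, rng=rng))
```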

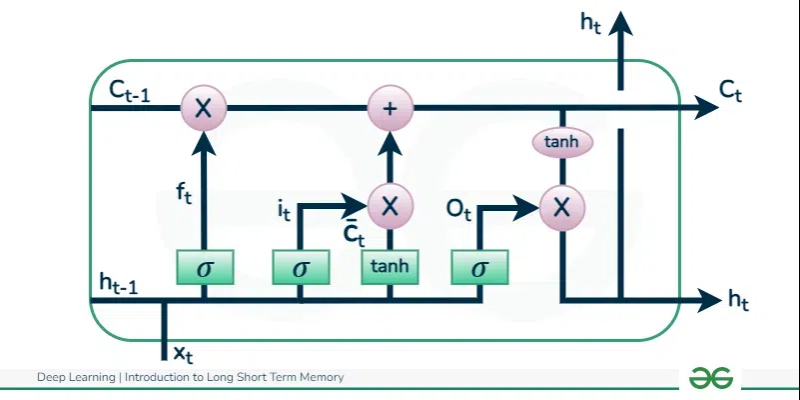

Long Short-Term Memory (LSTM) networks, introduced in 1997 by Sepp Hochreiter and Jürgen Schmidhuber, changed sequential data modelling by using gated memory cells to overcome the vanishing gradient problem that limits traditional RNNs. With applications in speech recognition, NLP, and time-series analysis, LSTMs have become a standard tool for handling long-term dependencies in data.

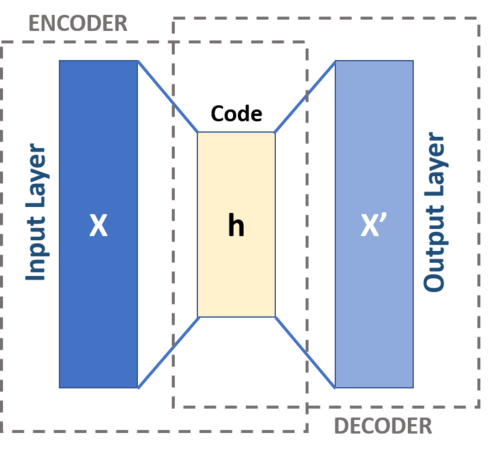

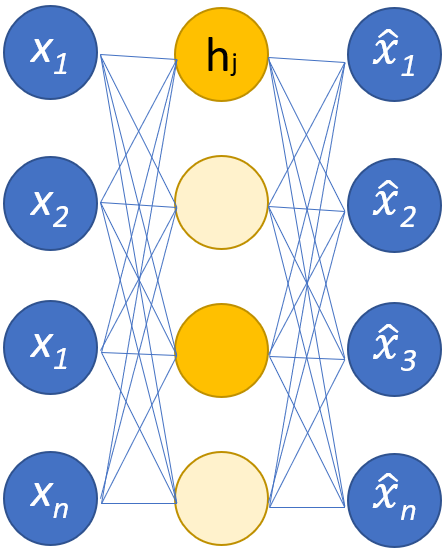

Autoencoders, introduced in the 1980s by researchers like Geoffrey Hinton, are neural networks that compress and reconstruct data, enabling dimensionality reduction, noise removal, and anomaly detection. They are essential for unsupervised learning tasks, with applications in data compression, image processing, and recommender systems.

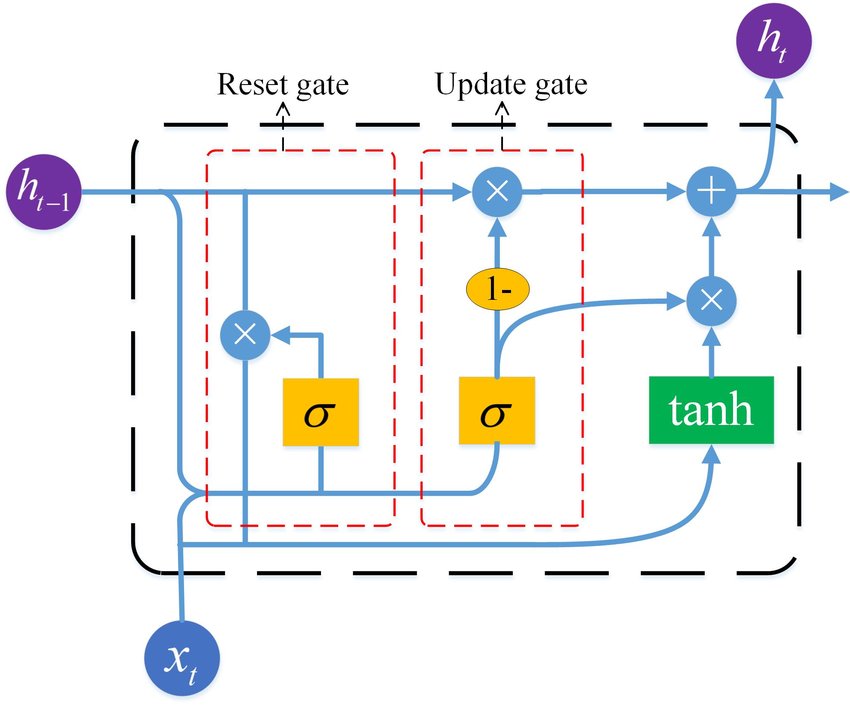

Gated Recurrent Units (GRUs), introduced in 2014 by Kyunghyun Cho and his team, are streamlined alternatives to LSTMs, designed for handling sequential data with greater computational efficiency. GRUs excel in tasks like speech recognition, time-series prediction, and natural language processing, making them ideal for real-time applications.

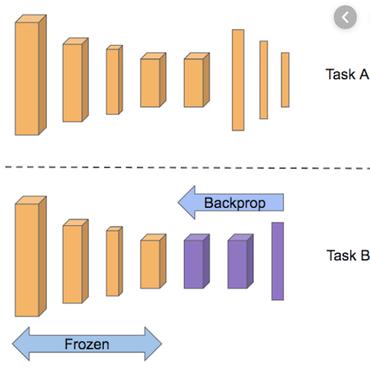

Transfer learning, popularised in the 2010s through deep learning advancements and models pre-trained on large datasets like ImageNet, enables models trained on one task to be adapted for related tasks. It addresses challenges like data scarcity and high training costs, with applications in healthcare, finance, and government.

Sparse autoencoders, popularised in the 2000s and early 2010s by researchers like Andrew Ng, are autoencoders trained with a sparsity penalty so that only a few hidden units are active for any given input, which encourages them to extract essential features from high-dimensional data. They are applied in feature engineering, image compression, and pattern recognition, benefiting industries such as healthcare, finance, and government analytics.

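The SARSA algorithm, introduced by Gavin Rummery and Mahesan Niranjan in 1994 and later named by Richard Sutton, is an on-policy reinforcement learning method: it updates its action-value estimates from each (state, action, reward, next state, next action) transition, using the action the current policy actually takes next. Because its estimates account for the cost of exploratory actions, it tends to learn more cautious behaviour than Q-learning, which suits dynamic and complex environments such as traffic systems and rescue operations.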
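The SARSA update for a single transition can be sketched as below. The tabular `Q` dictionary, the function name `sarsa_update`, and the values of `alpha` (step size) and `gamma` (discount factor) are assumptions for illustration.

```python
from collections import defaultdict

def sarsa_update(Q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9, done=False):
    """Update Q[(state, action)] from one (s, a, r, s', a') transition."""
    # On-policy target: uses the action actually chosen in the next state.
    next_value = 0.0 if done else Q[(next_state, next_action)]
    td_target = reward + gamma * next_value
    # Move the current estimate a fraction alpha towards the target.
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]

# Hypothetical transition: from state 0, take "right", receive reward 1,
# land in state 1, where the policy chooses "right" again.
Q = defaultdict(float)
Q[(1, "right")] = 2.0
new_value = sarsa_update(Q, 0, "right", 1.0, 1, "right")
print(new_value)
```

Q-learning would differ only in the target, using the maximum value over next actions instead of the value of the action the policy actually selected.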