A Brief History of This Tool: Who Developed It?

The Wasserstein GAN (WGAN) was introduced in 2017 by Martin Arjovsky, Soumith Chintala, and Léon Bottou in the paper "Wasserstein GAN". It was designed to address the training instability of traditional GANs (Generative Adversarial Networks) by changing how the gap between real and generated data is measured. WGAN became a significant milestone in machine learning, making generative models more reliable to train.

What Is It?

Imagine baking the perfect loaf of bread. Traditional GANs are like baking without a thermometer: you keep guessing whether the oven is at the right temperature, and sometimes it works, but often it doesn’t. WGAN, on the other hand, gives you a thermometer, a reading that tells you how far you are from the ideal temperature so you can adjust with confidence.

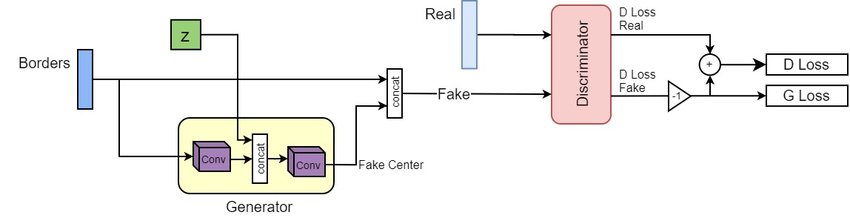

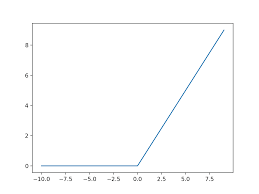

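WGAN redefines the loss function in GANs, replacing the Jensen-Shannon divergence, which behaves poorly when the real and generated distributions barely overlap, with the Wasserstein distance (Earth Mover’s Distance). The Wasserstein distance keeps changing smoothly as the generated distribution moves closer to the real one, so the generator continues to receive useful gradients even when a standard discriminator would saturate. To estimate it, WGAN replaces the discriminator with a critic that outputs an unbounded score rather than a probability; the critic must stay Lipschitz-continuous, which the original paper enforced by clipping its weights to a small range.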
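To see why this change matters, the sketch below compares the two measures on a toy example: a "real" distribution with all its mass at position 0 and a "generated" one with all its mass at position 1, 3 or 6. The helper names (`wasserstein_1d`, `js_divergence`, `point_mass`) are hypothetical, and the sorting shortcut used for the Wasserstein distance only holds for one-dimensional, equal-size, equally weighted samples.

```python
import math

def wasserstein_1d(xs, ys):
    """W1 (Earth Mover's) distance between two equal-size, equally weighted 1-D samples."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal-size samples")
    # In 1-D, the optimal transport plan matches the sorted values pairwise.
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    def kl(a, b):
        # Terms with a_i = 0 contribute nothing; b_i > 0 whenever a_i > 0 because b is a mixture.
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def point_mass(position, n_bins=10):
    """Histogram with all probability mass in a single bin."""
    return [1.0 if i == position else 0.0 for i in range(n_bins)]

real = point_mass(0)
for shift in (1, 3, 6):
    fake = point_mass(shift)
    js = js_divergence(real, fake)
    w1 = wasserstein_1d([0.0], [float(shift)])
    print(f"shift={shift}: JS={js:.4f}, W1={w1:.1f}")
# shift=1: JS=0.6931, W1=1.0
# shift=3: JS=0.6931, W1=3.0
# shift=6: JS=0.6931, W1=6.0
```

The Jensen-Shannon divergence stays at log 2 (about 0.693) however far apart the two distributions are, so it tells the generator nothing about which way to move; the Wasserstein distance grows with the shift, which is the property WGAN relies on.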

Why Is It Being Used? What Challenges Are Being Addressed?

Traditional GANs, while revolutionary, often suffer from:

- Mode Collapse: The generator produces limited variations, losing diversity in the generated data.

- Training Instability: Models often fail to converge, partly because the generator’s gradients vanish once the discriminator becomes too confident.

- Evaluation Challenges: The loss function in GANs provides little insight into training progress.

WGAN tackles these issues by introducing a loss metric that is more stable and that tracks sample quality, making training more consistent and its progress easier to interpret.
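The loop below is a minimal sketch of the original WGAN training recipe on a deliberately tiny, hypothetical problem: real data are drawn from a normal distribution centred at 3, the generator can only shift standard normal noise by a learnable offset `theta`, and the critic is a single weight `w` (f(x) = w·x) that is clipped after every update. It uses plain gradient descent with hand-derived gradients instead of the neural networks and RMSProp optimiser of the paper, so treat it as an illustration of the update structure (several critic steps per generator step, weight clipping, and the sign of each loss), not as a reference implementation. Because a linear critic can only compare means, its negated loss rescaled by 1/`clip` is a rough estimate of the distance |3 − `theta`|.

```python
import random
import statistics

def train_toy_wgan(real_mean=3.0, n_gen_steps=800, n_critic=5, clip=0.01,
                   lr_critic=0.05, lr_gen=1.0, batch=64, seed=0):
    """Toy 1-D WGAN with weight clipping (hypothetical setup; plain SGD, not RMSProp).

    Real data:  x ~ N(real_mean, 1)
    Generator:  g(z) = z + theta, z ~ N(0, 1)   (single parameter theta)
    Critic:     f(x) = w * x                     (single weight w, clipped to [-clip, clip])
    """
    rng = random.Random(seed)
    theta, w = 0.0, 0.0
    distance_estimates = []
    for _ in range(n_gen_steps):
        # Train the critic several times per generator step.
        for _ in range(n_critic):
            real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
            fake = [rng.gauss(0.0, 1.0) + theta for _ in range(batch)]
            gap = statistics.fmean(real) - statistics.fmean(fake)
            # Critic loss = mean f(fake) - mean f(real) = -w * gap, so d(loss)/dw = -gap.
            w -= lr_critic * (-gap)
            # Weight clipping keeps f Lipschitz (here |f'(x)| = |w| <= clip).
            w = max(-clip, min(clip, w))
        # Negated critic loss on the last batch, rescaled by 1/clip: rough estimate of W1.
        distance_estimates.append(w * gap / clip)
        # Generator loss = -mean f(g(z)) = -w * (mean(z) + theta), so d(loss)/dtheta = -w.
        theta -= lr_gen * (-w)
    return theta, w, distance_estimates

theta, w, estimates = train_toy_wgan()
print(f"learned theta = {theta:.2f}")
print(f"distance estimate: start {estimates[0]:.2f}, end {estimates[-1]:.2f}")
```

With these settings `theta` should drift towards 3 while the distance estimate shrinks from roughly 3 towards 0, which is the "readable progress signal" the WGAN loss provides; the exact values depend on the random seed.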

Global Impact: According to the State of AI 2023 report, WGAN has been applied in 25% of generative AI projects worldwide, significantly enhancing model performance in image synthesis, video generation, and anomaly detection.

Australia: The CSIRO’s Data61 division leveraged WGAN to enhance synthetic data generation for secure research collaborations, reducing data breaches by 15% in sensitive projects.

How Is It Being Used?

WGAN is employed across a variety of applications:

- Generating High-Quality Images: Produces realistic images for industries like fashion, advertising, and entertainment.

- Synthetic Data Creation: Generates anonymised data for research and development while preserving privacy.

- Anomaly Detection: Identifies irregular patterns in datasets, improving fraud detection and predictive maintenance.

Different Types

Over time, WGAN has evolved into several variants:

- WGAN-GP (Gradient Penalty): Replaces the original WGAN’s weight clipping with a penalty on the norm of the critic’s gradient, leading to smoother training.

- CWGAN (Conditional WGAN): Enables conditional data generation, tailoring outputs to specific labels or categories.
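The gradient penalty in WGAN-GP enforces the Lipschitz constraint softly: at random points interpolated between real and generated samples, the critic’s gradient norm is pushed towards 1. The sketch below only computes that critic loss. It assumes a hypothetical one-dimensional critic f(x) = a·x + b·x² whose input gradient can be written by hand; real implementations obtain the gradient through automatic differentiation in a framework such as PyTorch or TensorFlow. The default λ = 10 follows the WGAN-GP paper (Gulrajani et al., 2017).

```python
import random

def critic(x, a, b):
    """Hypothetical 1-D critic f(x) = a*x + b*x**2."""
    return a * x + b * x * x

def critic_input_grad(x, a, b):
    """df/dx for the critic above, derived by hand (real code would use autograd)."""
    return a + 2.0 * b * x

def wgan_gp_critic_loss(real, fake, a, b, lam=10.0, rng=None):
    """Critic loss = mean f(fake) - mean f(real) + lam * mean((|f'(x_hat)| - 1)**2)."""
    if rng is None:
        rng = random.Random(0)
    n = len(real)
    wasserstein_term = (sum(critic(x, a, b) for x in fake)
                        - sum(critic(x, a, b) for x in real)) / n
    penalty = 0.0
    for x_real, x_fake in zip(real, fake):
        eps = rng.random()                            # eps ~ U[0, 1)
        x_hat = eps * x_real + (1.0 - eps) * x_fake   # point between a real and a fake sample
        grad_norm = abs(critic_input_grad(x_hat, a, b))  # in 1-D the L2 norm is |f'(x_hat)|
        penalty += (grad_norm - 1.0) ** 2
    return wasserstein_term + lam * penalty / n

real, fake = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
print(wgan_gp_critic_loss(real, fake, a=1.0, b=0.0))  # slope 1 everywhere: no penalty -> -2.0
print(wgan_gp_critic_loss(real, fake, a=3.0, b=0.0))  # slope 3: penalty 10 * (3 - 1)**2 added -> 34.0
```

Because the constraint is a penalty rather than a hard clip, the critic’s weights are not squeezed into a small box, which is the main reason WGAN-GP trains more smoothly than weight clipping.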

Different Features

Key features of WGAN include:

- Stability: Significantly reduces training instabilities common in traditional GANs.

- Enhanced Loss Function: Utilises Wasserstein distance, providing meaningful feedback on generator progress.

- Diversity: Reduces the risk of mode collapse, encouraging more diverse data generation.
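For readers who want the formula behind the enhanced loss function, the WGAN paper relies on the Kantorovich-Rubinstein duality, which writes the Wasserstein-1 distance between the real distribution and the generator’s distribution as a supremum over 1-Lipschitz functions f. WGAN approximates f with a critic f_w and trains the critic and the generator g_θ by minimising the two losses below. The symbols are notation chosen here for illustration rather than taken from the article.

```latex
\begin{aligned}
W(\mathbb{P}_r, \mathbb{P}_g) &= \sup_{\lVert f \rVert_L \le 1} \Big( \mathbb{E}_{x \sim \mathbb{P}_r}[f(x)] - \mathbb{E}_{x \sim \mathbb{P}_g}[f(x)] \Big) \\
\mathcal{L}_{\text{critic}} &= \mathbb{E}_{z \sim p(z)}\big[f_w(g_\theta(z))\big] - \mathbb{E}_{x \sim \mathbb{P}_r}\big[f_w(x)\big] \\
\mathcal{L}_{\text{gen}} &= -\,\mathbb{E}_{z \sim p(z)}\big[f_w(g_\theta(z))\big]
\end{aligned}
```

Weight clipping (original WGAN) or a gradient penalty (WGAN-GP) is what keeps f_w approximately Lipschitz, so that minimising the critic loss approximates the supremum up to a constant scale factor.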

Different Software and Tools for WGAN

Several tools facilitate the implementation of WGAN:

- TensorFlow and PyTorch: Popular frameworks for developing and training WGANs.

- Keras: Simplifies WGAN implementation through its high-level API; the official Keras code examples include a WGAN-GP implementation.

- NVIDIA CUDA and cuDNN: GPU-optimised libraries that TensorFlow and PyTorch rely on to accelerate WGAN training.

Industry Application Examples in Australian Governmental Agencies

- CSIRO Data61:

- Use Case: Generating anonymised datasets for collaborative AI research.

- Impact: Improved privacy compliance and data security by 15%.

- Australian Taxation Office (ATO):

- Use Case: Identifying anomalies in financial transactions for fraud detection.

- Impact: Reduced fraud detection time by 30%.

- Department of Defence:

- Use Case: Generating synthetic training data for autonomous systems.

- Impact: Enhanced the reliability of defence AI models.

How interested are you in uncovering even more about this topic? Our next article dives deeper into [insert next topic], unravelling insights you won’t want to miss. Stay curious and take the next step with us!