A Brief History of This Tool: Who Developed It?

Transfer learning as a concept emerged from the broader field of machine learning, and its use in neural networks gained traction with the rise of deep learning in the 2010s. Researchers such as Andrew Ng helped popularise it, and large labelled datasets like ImageNet showed that models pre-trained on them could significantly reduce training time and improve accuracy on new tasks.

What Is It?

Think of transfer learning as borrowing a skill you already have and applying it to a new task. For example, if you know how to ride a bike, learning to ride a motorcycle becomes much easier. In machine learning, transfer learning allows a model trained on one task to be adapted for a different but related task. This reduces the need for extensive data and computation, making AI development faster and more efficient.

Why Is It Being Used? What Challenges Are Being Addressed?

Transfer learning addresses several critical challenges:

- Data Scarcity: Many organisations lack the extensive datasets needed to train deep learning models from scratch.

- High Training Costs: Training complex models can be computationally expensive and time-consuming.

- Specialised Tasks: Pre-trained models can be fine-tuned for niche applications, so that even small datasets can yield meaningful results.

These benefits make transfer learning invaluable for industries like healthcare, finance, and government.

How Is It Being Used?

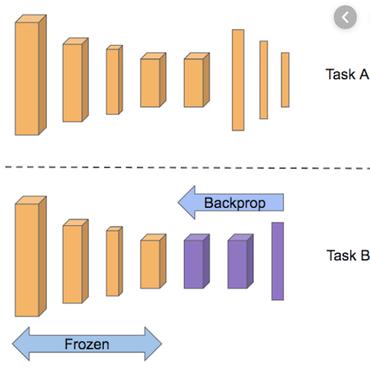

Transfer learning typically involves three steps:

- Select a Pre-trained Model: Use a model trained on a large dataset, like ImageNet.

- Fine-Tune the Model: Adapt it to the target task by re-training on a smaller dataset.

- Apply the Model: Use the fine-tuned model for predictions or classifications.
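The three steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not how any particular framework implements it: the "pre-trained" weights, the tiny target dataset, and the function names (`extract_features`, `fine_tune`, `predict_proba`) are all made up for the example. The pre-trained part stays fixed, and only a small new classification head is trained on the target data.

```python
import math

# Step 1: "select a pre-trained model". These hypothetical weights stand in
# for a feature extractor already trained on a large source dataset.
PRETRAINED_WEIGHTS = [[1.0, 1.0], [1.0, -1.0]]


def extract_features(x):
    """Frozen feature extractor: its weights are never updated below."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(head, x):
    """Probability of class 1 from the frozen features plus the trained head."""
    w, b = head
    feats = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b)


def log_loss(head, data):
    """Average cross-entropy of the head on a labelled dataset."""
    total = 0.0
    for x, y in data:
        p = predict_proba(head, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune(data, lr=0.5, epochs=200):
    """Step 2: train only a new logistic-regression head on the target data."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            feats = extract_features(x)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b) - y
            grad_w = [g + err * f for g, f in zip(grad_w, feats)]
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


# A tiny, made-up target dataset: label 1 when x0 + x1 > 0.
target_data = [([1.0, 1.0], 1), ([2.0, 0.5], 1),
               ([-1.0, -1.0], 0), ([-0.5, -2.0], 0)]

untrained_head = ([0.0, 0.0], 0.0)
head = fine_tune(target_data)

# Step 3: apply the fine-tuned model to unseen inputs.
predictions = [int(predict_proba(head, x) > 0.5)
               for x in ([3.0, 1.0], [-2.0, -1.0])]
print(predictions)
```

In a real project the feature extractor would be a deep network loaded from a model library rather than a hand-written function, but the division of labour is the same: reuse what was learned on the large dataset and train only what the new task needs.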

Common applications include:

- Image Recognition: For niche industries such as medical diagnostics or industrial inspections.

- Text Analysis: In natural language processing tasks like sentiment analysis and text classification.

- Time-Series Forecasting: For financial or operational data trends.

Different Types

Transfer learning can take various forms:

- Domain Adaptation: Adapting a model trained in one domain (e.g., medical imaging) to another domain where the data looks different.

- Task Transfer: Using a model trained for one task (e.g., object detection) for a related task (e.g., image segmentation).

- Multi-task Learning: Training a model on multiple tasks simultaneously to improve performance on all.

Different Features

Key features of transfer learning include:

- Pre-trained Models: Common starting points include models like ResNet, BERT, or GPT.

- Layer Freezing: Keeping the weights of a model's earlier layers fixed and fine-tuning only the later layers.

- Reduced Training Time: Leverages existing knowledge to reduce computation.
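To make layer freezing and the resulting saving concrete, here is a minimal sketch in plain Python. The `Layer` class, the layer names, the weights, and the gradients are all hypothetical; real frameworks expose their own mechanisms for marking parameters as non-trainable. The idea it shows is simply that a frozen layer is skipped during the update step, so only a fraction of the parameters is ever changed.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weights: list[float]
    trainable: bool = True


def freeze_early_layers(layers, keep_trainable=1):
    """Freeze every layer except the last `keep_trainable` ones."""
    cutoff = len(layers) - keep_trainable
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff


def sgd_step(layers, grads, lr=0.1):
    """Apply one gradient step, skipping frozen layers."""
    for layer in layers:
        if layer.trainable:
            layer.weights = [w - lr * g
                             for w, g in zip(layer.weights, grads[layer.name])]


def count_params(layers):
    """Return (trainable, total) parameter counts."""
    total = sum(len(layer.weights) for layer in layers)
    trainable = sum(len(layer.weights) for layer in layers if layer.trainable)
    return trainable, total


# A toy "pre-trained" model: two early layers plus a new task-specific head.
model = [
    Layer("conv1", [0.2, -0.1, 0.4, 0.3]),
    Layer("conv2", [0.5, 0.1, -0.3, 0.2]),
    Layer("head", [0.0, 0.0]),
]
# Made-up gradients, one per weight, just to drive a single update.
grads = {layer.name: [1.0] * len(layer.weights) for layer in model}

freeze_early_layers(model, keep_trainable=1)
sgd_step(model, grads)
trainable, total = count_params(model)
print(f"updated {trainable} of {total} parameters")
```

Raising `keep_trainable` unfreezes more of the later layers, which is a common trade-off: more capacity to adapt to the new task, at the cost of more computation and a greater need for data.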

Different Software and Tools for Transfer Learning

Tools supporting transfer learning include:

- TensorFlow: Pre-trained models available via TensorFlow Hub.

- PyTorch: Torchvision offers access to pre-trained models.

- Keras: Includes pre-built models like VGG16 and MobileNet.

- Hugging Face: Its Transformers library and model hub provide pre-trained NLP models like BERT and GPT.

Three Industry Application Examples in Australian Government Agencies

- Department of Health:

  - Use Case: Using transfer learning for medical imaging diagnostics, enabling accurate detection of anomalies in X-rays and MRIs with limited training data.

- Australian Bureau of Statistics (ABS):

  - Use Case: Employing transfer learning for text classification tasks, such as categorising census responses or automating survey analysis.

- Department of Agriculture:

  - Use Case: Leveraging transfer learning for crop monitoring, using satellite imagery to predict yield and identify pests.
