A Brief History of Rewards: Who Developed the Idea?

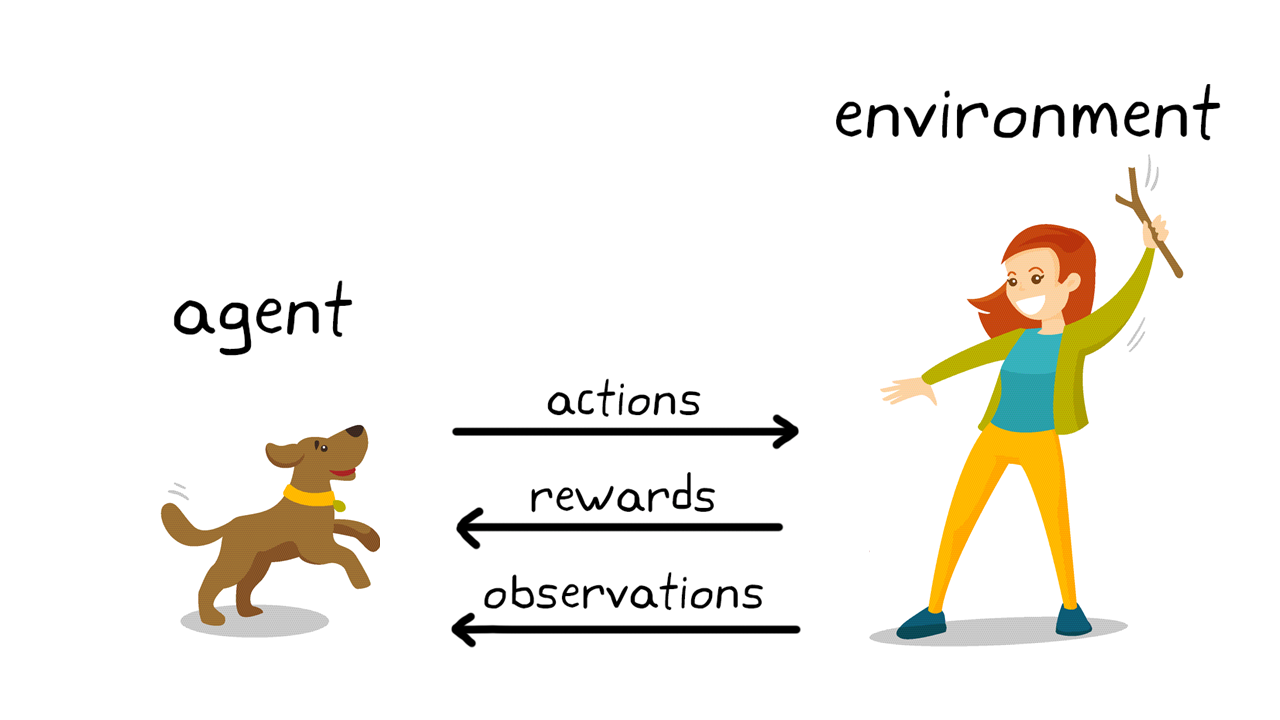

The concept of “rewards” in machine learning originates from behavioural psychology, specifically the mid-20th-century work of B.F. Skinner on operant conditioning, which studied how reinforcement shapes animal behaviour. By the 1980s, researchers such as Richard Sutton and Andrew Barto had adapted the idea for computational purposes, making a numerical reward signal the core of Reinforcement Learning (RL). Today, rewards are the backbone of RL algorithms and underpin a wide range of AI applications.

What Are Rewards in Machine Learning?

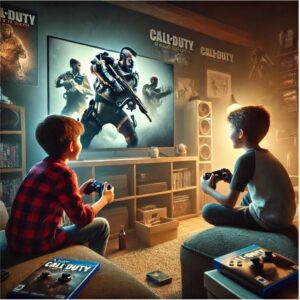

Imagine playing a video game where you earn points for good moves and unlock bonuses for completing levels. In machine learning, rewards serve a similar purpose: a reward is a numerical signal the agent receives after an action, indicating how much that action helped achieve a specific objective. By reinforcing successful actions, rewards guide AI agents to make better decisions over time.

Why Are Rewards Used? What Challenges Do They Address?

Rewards play a vital role in problems where the correct action cannot be labelled in advance and must be discovered through trial and error, which is where traditional algorithms struggle. Key benefits include:

- Guidance in Decision-Making: Help AI agents understand the consequences of their actions.

- Optimising Performance: Encourage behaviours that yield the best outcomes.

- Handling Uncertainty: Teach systems to operate effectively in dynamic, unpredictable environments.

Without rewards, AI agents lack the structured feedback necessary to refine their actions and improve outcomes.

How Are Rewards Used?

Rewards are implemented in reinforcement learning systems through the following steps:

- Defining Objectives: Identify the desired outcome, such as minimising delivery time or maximising energy efficiency.

- Assigning Rewards: Provide positive signals for desirable actions and penalties for undesirable ones.

- Iterative Learning: The AI agent refines its strategy by maximising cumulative rewards over time.

Example: In a traffic management system, an AI agent adjusts traffic light timings. Rewards are given when the system reduces overall congestion and wait times.
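The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a real traffic controller: the `simulated_wait` function, the candidate green-light durations and the learning settings are all made-up assumptions, and the learner is a simple epsilon-greedy bandit, one of the most basic forms of reward-driven learning.

```python
import random

# Hypothetical green-light durations (seconds) the agent can choose from.
ACTIONS = [20, 40, 60]

def simulated_wait(green_seconds, rng):
    """Toy stand-in for a traffic simulator (assumed: waits are lowest near 40 s)."""
    noise = rng.uniform(-2.0, 2.0)
    return abs(green_seconds - 40) + 10 + noise

def reward(wait_seconds):
    """Assigning rewards: shorter waits earn a higher (less negative) reward."""
    return -wait_seconds

def train(episodes=500, epsilon=0.1, seed=0):
    """Iterative learning: keep a running average reward for each action."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}
    count = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        # Explore occasionally, otherwise pick the best-known action.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: value[a])
        r = reward(simulated_wait(action, rng))
        count[action] += 1
        value[action] += (r - value[action]) / count[action]
    return value

values = train()
best = max(values, key=values.get)
print(best)  # the duration with the highest average reward
```

In this toy setup the objective (short waits) is encoded entirely in `reward`, so changing the objective means changing that one function while the learning loop stays the same.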

Different Types of Rewards

Rewards can be tailored to specific tasks using these types:

- Immediate Rewards: Provided directly after an action (e.g., earning points in a game).

- Delayed Rewards: Delivered after a series of actions (e.g., winning a game after completing multiple levels).

- Shaped Rewards: Include intermediate rewards to guide the learning process toward achieving long-term goals.
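To compare these reward types, it helps to look at the discounted return, the quantity an RL agent usually tries to maximise: each reward is multiplied by a discount factor (commonly written gamma) once for every step of delay, then summed. The sketch below uses three hypothetical four-step episodes and an illustrative gamma of 0.5; the numbers are invented purely to show the effect.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each discounted by gamma once per step of delay."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total

# Hypothetical 4-step episodes, each paying out 4 points in total.
immediate = [1.0, 1.0, 1.0, 1.0]   # a point after every action
delayed   = [0.0, 0.0, 0.0, 4.0]   # everything paid at the very end
shaped    = [0.5, 0.5, 0.5, 2.5]   # small progress bonuses plus a final payoff

gamma = 0.5
print(discounted_return(immediate, gamma))  # 1.875
print(discounted_return(delayed, gamma))    # 0.5
print(discounted_return(shaped, gamma))     # 1.1875
```

Although all three episodes pay out the same undiscounted total, the delayed version gives the agent the weakest early signal. Shaped rewards narrow that gap, but intermediate bonuses need care, because an agent may learn to collect them without ever reaching the real goal.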

Key Features of Rewards

- Customisability: Tailored to align with specific tasks or objectives.

- Scalability: Applicable to small-scale systems or large, complex problems.

- Feedback Loops: Enable iterative improvement of AI performance.

Popular Tools for Implementing Rewards

Several tools and libraries facilitate the implementation of reward systems in machine learning:

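- OpenAI Gym (now maintained as Gymnasium by the Farama Foundation): Provides a standard environment interface that simplifies designing and testing reward-based learning models.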

- Unity ML-Agents: Allows for creating complex virtual environments with custom rewards.

- RLlib (Ray): A scalable library for reinforcement learning, enabling advanced reward implementations.
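These libraries share a common pattern: an environment object returns a reward every time the agent takes a step. The sketch below imitates the calling convention used by Gymnasium, the maintained successor to OpenAI Gym (`reset()` returns an observation and an info dictionary; `step()` returns observation, reward, terminated, truncated and info), but it is written in plain Python and does not import any library. The `LineWorld` class and its reward values are hypothetical, chosen only for illustration.

```python
class LineWorld:
    """Toy environment: walk right from position 0 to reach position 3.

    Hypothetical example that mirrors the Gymnasium-style interface
    without depending on the library itself.
    """

    GOAL = 3
    MAX_STEPS = 10

    def reset(self):
        self.position = 0
        self.steps = 0
        return self.position, {}  # (observation, info)

    def step(self, action):
        # action: 0 = move left, 1 = move right
        move = 1 if action == 1 else -1
        self.position = max(0, self.position + move)
        self.steps += 1
        terminated = self.position == self.GOAL
        truncated = self.steps >= self.MAX_STEPS and not terminated
        # Custom reward: +1 for reaching the goal, small penalty per other step.
        reward = 1.0 if terminated else -0.1
        return self.position, reward, terminated, truncated, {}

env = LineWorld()
obs, info = env.reset()
total_reward = 0.0
done = False
while not done:
    obs, reward, terminated, truncated, info = env.step(1)  # always move right
    total_reward += reward
    done = terminated or truncated

print(obs, round(total_reward, 2))
```

Because the reward logic lives inside `step()`, swapping in a different reward scheme (for example, removing the per-step penalty) does not require any change to the agent loop.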

Applications of Reward Systems in Australian Governmental Agencies

Rewards have proven effective in various Australian sectors:

- Transport for NSW:

  - Use Case: Optimising traffic light timings using reinforcement learning rewards.

  - Impact: Reduced commuter wait times by 20%, saving over 50,000 hours annually.

- Australian Energy Market Operator (AEMO):

  - Use Case: Encouraging optimal energy usage in smart grids through reward-based systems.

  - Impact: Achieved a 10% reduction in energy usage during peak hours.

- Australian Taxation Office (ATO):

  - Use Case: Enhancing compliance through gamified training programs using reward systems.

  - Impact: Increased employee participation in training by 35%.

Conclusion

Rewards are fundamental to reinforcement learning, providing the structured feedback needed to optimise AI decision-making. From traffic management to energy efficiency, their applications demonstrate significant real-world benefits across Australian governmental agencies. With tools like OpenAI Gym and Unity ML-Agents, implementing reward systems is accessible and transformative for modern AI systems.
