A Brief History of This Tool: Who Developed It?

The Gated Recurrent Unit (GRU) was introduced in 2014 by Kyunghyun Cho and colleagues as part of their work on neural machine translation. GRU was designed as a streamlined alternative to Long Short-Term Memory (LSTM) networks. It keeps much of their effectiveness while simplifying the structure, which makes it faster to compute and easier to implement.

What Is It?

Imagine a GRU as a well-organised backpack that you repack at every step of a journey. At each stop it decides which items to throw out, which to keep for later, and how much of the new stuff to add, so the essentials always stay within reach. Technically, a GRU is a type of Recurrent Neural Network (RNN) that carries a single hidden state through a sequence and uses gates to update it. This design lets it handle sequential data with lower computational requirements than an LSTM.

Why Is It Being Used? What Challenges Are Being Addressed?

Like LSTM, GRU mitigates the vanishing gradient problem, which limits the ability of traditional RNNs to learn long-term dependencies. GRU is often preferred when computational efficiency is critical. Because it has fewer parameters, it typically trains and runs faster, and on many tasks it performs comparably to LSTM. This makes it well suited to tasks like real-time speech recognition, stock market prediction, and chatbots.
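One way to see why gating helps is to differentiate the GRU state update. The sketch below follows the convention of Cho et al., in which the new hidden state is a blend of the previous state h_{t-1} and a candidate state h̃_t, weighted by the update gate z_t. Note that some references swap the roles of z_t and 1 - z_t.

```latex
z_t = \sigma(W_z x_t + U_z h_{t-1} + b_z), \qquad
h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t

\frac{\partial h_t}{\partial h_{t-1}}
  = \operatorname{diag}(z_t)
  + \operatorname{diag}(1 - z_t)\,\frac{\partial \tilde{h}_t}{\partial h_{t-1}}
  + \operatorname{diag}(h_{t-1} - \tilde{h}_t)\,\operatorname{diag}\big(z_t \odot (1 - z_t)\big)\,U_z
```

When z_t is close to 1, both 1 - z_t and z_t ⊙ (1 - z_t) are close to 0. The Jacobian is then close to the identity, so a gradient flowing back through that step is barely attenuated. A plain RNN, h_t = tanh(W x_t + U h_{t-1} + b), has no such path. Its Jacobian, diag(1 - h_t ⊙ h_t) U, multiplies the gradient at every step, which can shrink it exponentially over long sequences.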

How Is It Being Used?

GRUs are widely applied in fields where sequential data is key:

- Speech-to-Text: GRU models can power real-time transcription tools.

- Time-Series Prediction: Used for forecasting trends in financial markets or weather patterns.

- Natural Language Processing (NLP): GRU networks are employed in chatbots and text generation.

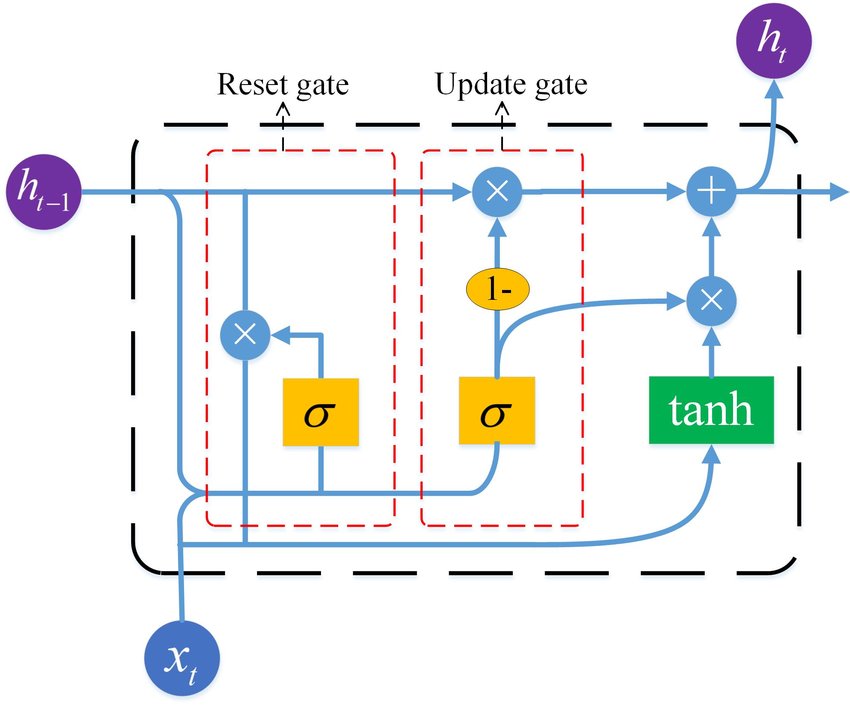

At each time step, a GRU combines the current input with its previous hidden state through two gates, the reset gate and the update gate. Together, these gates control how information flows from one step to the next.
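The two gates can be sketched in a few lines of NumPy. This is a minimal, illustrative forward step only, with no training. It assumes the convention of Cho et al., in which an update gate close to 1 keeps the previous state (some references swap z and 1 - z). The helper names `init_gru_params` and `gru_step`, along with the random initialisation, are hypothetical choices made for this example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: random weights for the reset (r), update (z)
    and candidate (h) blocks, each as (W input, U recurrent, b bias)."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("r", "z", "h"):
        W = rng.normal(0.0, 0.1, size=(hidden_size, input_size))
        U = rng.normal(0.0, 0.1, size=(hidden_size, hidden_size))
        b = np.zeros(hidden_size)
        params[name] = (W, U, b)
    return params

def gru_step(x_t, h_prev, params):
    """One GRU time step; an update gate near 1 keeps h_prev (Cho et al. convention)."""
    W_r, U_r, b_r = params["r"]
    W_z, U_z, b_z = params["z"]
    W_h, U_h, b_h = params["h"]
    r = sigmoid(W_r @ x_t + U_r @ h_prev + b_r)              # reset gate
    z = sigmoid(W_z @ x_t + U_z @ h_prev + b_z)              # update gate
    h_tilde = np.tanh(W_h @ x_t + U_h @ (r * h_prev) + b_h)  # candidate state
    return z * h_prev + (1.0 - z) * h_tilde                  # blend old and new

# Run a toy sequence of 5 time steps with 3 features each.
params = init_gru_params(input_size=3, hidden_size=4)
sequence = np.random.default_rng(1).normal(size=(5, 3))
h = np.zeros(4)
for x_t in sequence:
    h = gru_step(x_t, h, params)
print(h.shape)  # (4,)
```

The reset gate only affects the candidate state, while the update gate decides how much of that candidate replaces the old state. That split is why a GRU needs just these two gates.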

Different Types

While GRU itself is a streamlined architecture, it can be adapted in various configurations, such as:

- Bidirectional GRU: Processes data in both forward and backward directions for better context understanding.

- Stacked GRU: Incorporates multiple GRU layers to capture complex dependencies in sequential data.
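As one concrete illustration, PyTorch's `torch.nn.GRU` exposes both configurations as constructor arguments: `num_layers` stacks GRU layers, and `bidirectional=True` adds a backward pass. The sizes below are arbitrary toy values, not recommendations.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

batch_size, seq_len, input_size, hidden_size = 8, 20, 16, 32

# A stacked (2-layer) and bidirectional GRU in a single module.
gru = nn.GRU(
    input_size=input_size,
    hidden_size=hidden_size,
    num_layers=2,        # stacked: layer 2 consumes layer 1's output sequence
    bidirectional=True,  # each layer runs forward and backward over the sequence
    batch_first=True,    # input/output tensors shaped (batch, seq, feature)
)

x = torch.randn(batch_size, seq_len, input_size)
output, h_n = gru(x)

# Forward and backward hidden states are concatenated at every time step.
print(output.shape)  # torch.Size([8, 20, 64])
# One final hidden state per (layer, direction) pair; batch_first does not apply here.
print(h_n.shape)     # torch.Size([4, 8, 32])
```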

Different Features

GRU features include:

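- Reset Gate: Controls how much of the previous hidden state is used when computing the new candidate state, effectively deciding how much past information to ignore.

- Update Gate: Decides how much of the previous hidden state to carry forward and how much to replace with the new candidate state.

- Fewer Parameters: GRU has three sets of gate weights where LSTM has four, so it has roughly 25% fewer parameters for the same layer sizes. This typically results in faster computation.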
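The "Fewer Parameters" point can be checked with a little arithmetic. This sketch assumes PyTorch's layout, in which each gate block has an input weight matrix, a recurrent weight matrix, and two bias vectors. Other frameworks lay out biases slightly differently (Keras's LSTM, for example, uses one bias per gate), so exact totals vary a little, but the ratio stays close to 3:4. The function names are hypothetical helpers.

```python
def gru_param_count(input_size: int, hidden_size: int) -> int:
    # Hypothetical helper. Three gate blocks (reset, update, candidate), each with
    # a (hidden x input) weight, a (hidden x hidden) weight and two bias vectors.
    return 3 * hidden_size * (input_size + hidden_size + 2)

def lstm_param_count(input_size: int, hidden_size: int) -> int:
    # Hypothetical helper. Four gate blocks (input, forget, cell, output), same layout.
    return 4 * hidden_size * (input_size + hidden_size + 2)

gru = gru_param_count(input_size=128, hidden_size=256)
lstm = lstm_param_count(input_size=128, hidden_size=256)
print(gru, lstm, gru / lstm)  # 296448 395264 0.75
```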

Different Software and Tools for GRU

Developers can implement GRU using various tools and frameworks, such as:

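- TensorFlow: Provides a built-in GRU layer (tf.keras.layers.GRU) that integrates with the rest of the TensorFlow ecosystem.

- PyTorch: Offers torch.nn.GRU for full sequence layers and torch.nn.GRUCell for building custom step-by-step recurrent loops.

- Keras: Simplifies GRU implementation with a high-level API (keras.layers.GRU).

- Scikit-Learn: Does not include GRU or other recurrent layers. It is typically used alongside the frameworks above for preprocessing, splitting data, and evaluating models.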

Three Industry Application Examples in Australian Government Agencies

- Bureau of Meteorology:
  - Use Case: Leveraging GRU models for precise weather prediction by analysing historical data trends.

- Department of Health:
  - Use Case: Using GRU to monitor and forecast disease outbreaks based on sequential data inputs like hospital admissions and case reports.

- Transport for NSW:
  - Use Case: Applying GRU to analyse commuter patterns and optimise public transport schedules in real time.
