A Brief History of This Tool: Who Developed It?

Denoising autoencoders were introduced in 2008 by Pascal Vincent, Hugo Larochelle, Yoshua Bengio, and Pierre-Antoine Manzagol as part of their research on improving data representation in unsupervised learning. The goal was to develop a model that learns robust representations by being trained to recover the clean input from a deliberately corrupted copy, so that the learned features capture the data's key structure rather than the noise.

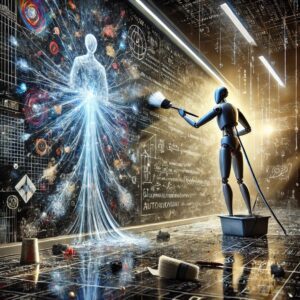

What Is It?

Think of a denoising autoencoder as a digital janitor. Imagine a messy blackboard covered in scribbles (noise) where meaningful text (data) is hidden. The denoising autoencoder learns to clean the blackboard, revealing only the meaningful content. More precisely, it is a type of neural network trained to reconstruct clean data from noisy input, which makes it useful for data pre-processing and feature extraction.
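For readers who prefer symbols, the training objective is commonly written along the following lines. The notation is an assumption introduced here for illustration: $q$ is the corruption process, $f_{\theta}$ the encoder, $g_{\theta'}$ the decoder, and $L$ a reconstruction loss such as squared error.

```latex
\tilde{x} \sim q(\tilde{x} \mid x), \qquad
\min_{\theta,\,\theta'} \;
\mathbb{E}_{x,\,\tilde{x}}
\left[ L\big(x,\; g_{\theta'}(f_{\theta}(\tilde{x}))\big) \right]
```

The point to notice is that the loss compares the reconstruction with the clean $x$, not with the corrupted $\tilde{x}$ that the network actually sees.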

Why Is It Being Used? What Challenges Are Being Addressed?

Denoising autoencoders address key challenges, such as:

- Noisy Data: Removing irrelevant noise from data inputs (e.g., errors in scanned documents, corrupted audio, or blurred images).

- Feature Extraction: Learning meaningful representations of data for downstream tasks like classification or clustering.

- Robustness: Improving the model’s ability to generalise by training on noisy data.

These properties make denoising autoencoders invaluable for tasks like medical imaging, satellite data analysis, and speech enhancement.

How Is It Being Used?

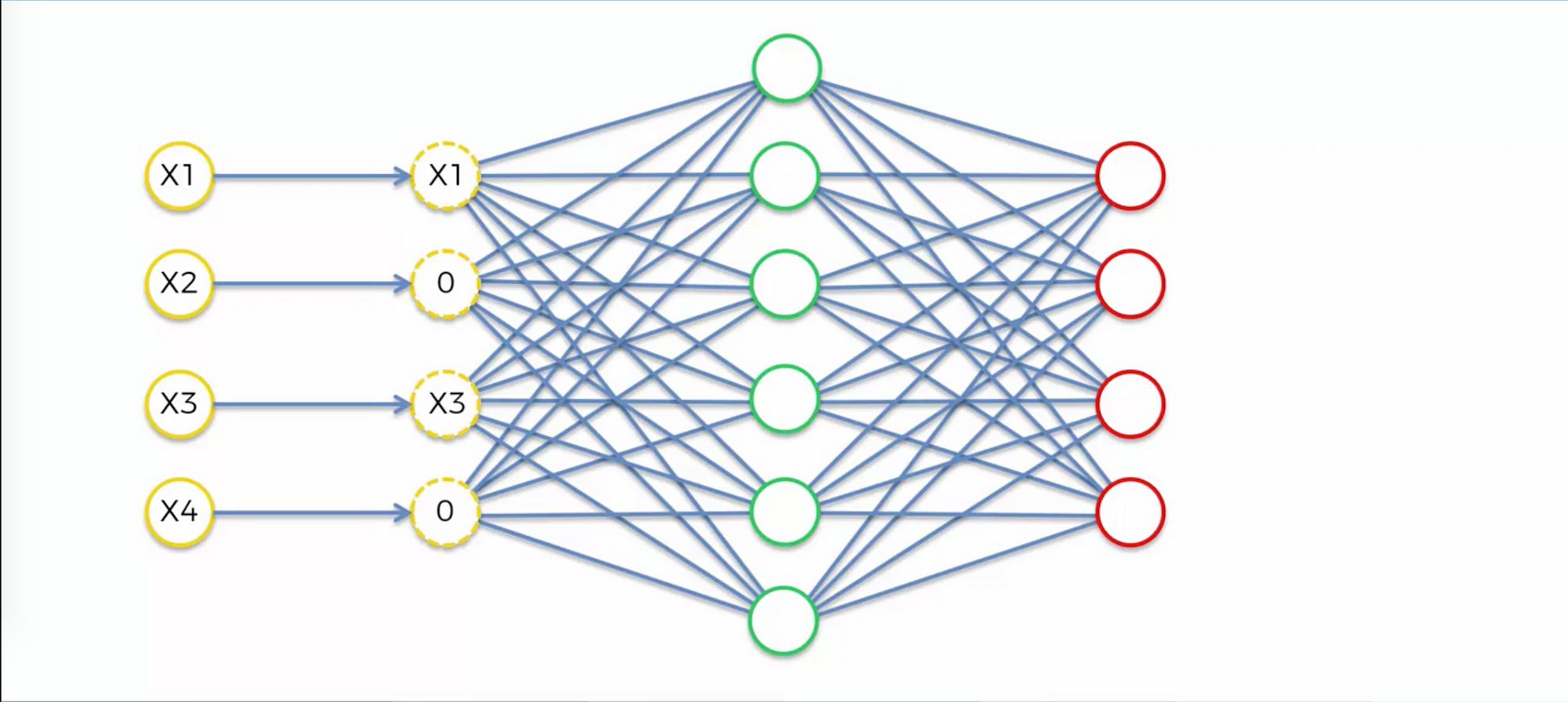

Denoising autoencoders work by:

- Adding Noise to Input Data: A controlled amount of noise is introduced to the data.

- Training the Autoencoder: The model learns to reconstruct the original clean data from the noisy input.

- Applying the Model: Once trained, the model takes new noisy inputs and outputs an estimate of the underlying clean data.
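The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a production model: the toy data, the Gaussian noise level, the learning rate, and the names `sample_clean`, `corrupt`, `train_dae`, and `denoise` are all made up for this example, and the encoder and decoder are kept linear for brevity (real models usually add non-linear activations and are built with a framework such as those listed later in this article).

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy "clean" data (an assumption for this sketch): 8-dimensional points
# that lie on a 2-D linear subspace.
mixing = rng.normal(size=(2, 8))


def sample_clean(n):
    return rng.normal(size=(n, 2)) @ mixing


def corrupt(x, noise_std=0.5):
    """Step 1: add zero-mean Gaussian noise to the input."""
    return x + rng.normal(scale=noise_std, size=x.shape)


def train_dae(x_clean, hidden=2, lr=0.01, epochs=3000, noise_std=0.5):
    """Step 2: fit weights so that noisy inputs map back to the clean targets."""
    n, d = x_clean.shape
    w_enc = rng.normal(scale=0.1, size=(d, hidden))
    w_dec = rng.normal(scale=0.1, size=(hidden, d))
    for _ in range(epochs):
        x_noisy = corrupt(x_clean, noise_std)  # fresh noise on every pass
        code = x_noisy @ w_enc                 # encoder (linear for brevity)
        x_hat = code @ w_dec                   # decoder
        # Gradient of the loss 0.5 * sum(squared error) / n with respect to x_hat.
        # The target is the CLEAN data, not the noisy input.
        err = (x_hat - x_clean) / n
        grad_dec = code.T @ err
        grad_enc = x_noisy.T @ (err @ w_dec.T)
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec


def denoise(x_noisy, w_enc, w_dec):
    """Step 3: apply the trained model to new noisy inputs."""
    return (x_noisy @ w_enc) @ w_dec


w_enc, w_dec = train_dae(sample_clean(500))

# Evaluate on data the model has not seen during training.
x_test = sample_clean(200)
x_test_noisy = corrupt(x_test)
noisy_mse = np.mean((x_test_noisy - x_test) ** 2)
denoised_mse = np.mean((denoise(x_test_noisy, w_enc, w_dec) - x_test) ** 2)
print(f"noisy MSE: {noisy_mse:.3f}, denoised MSE: {denoised_mse:.3f}")
```

Comparing the two printed errors shows how much closer the reconstruction is to the clean test data than the noisy input was. Resampling the noise on every pass is a design choice: the model never sees the same corrupted copy twice, which discourages it from memorising particular noise patterns.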

Applications include:

- Image Denoising: Enhancing photos by removing grain, blur, or artefacts.

- Speech Processing: Reducing background noise in audio recordings.

- Document Scanning: Cleaning scanned text or images for better readability.
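These applications differ partly in what the corruption looks like, and the noise added during training is usually chosen to resemble it. Below is a minimal sketch of three commonly used corruption functions: additive Gaussian noise, masking noise, and salt-and-pepper noise. The function names, parameters, and any pairing of a noise type with a particular application (for example, salt-and-pepper noise for speckled scans) are illustrative assumptions rather than something this article specifies.

```python
import numpy as np


def add_gaussian_noise(x, std, rng):
    """Add zero-mean Gaussian noise, a rough stand-in for grain or hiss."""
    return x + rng.normal(scale=std, size=x.shape)


def add_masking_noise(x, drop_prob, rng):
    """Set each element to zero with probability drop_prob (missing values)."""
    keep = rng.random(x.shape) >= drop_prob
    return x * keep


def add_salt_and_pepper(x, flip_prob, rng, low=0.0, high=1.0):
    """Force a fraction flip_prob of elements to the minimum or maximum value."""
    out = x.copy()
    u = rng.random(x.shape)
    out[u < flip_prob / 2] = low                         # "pepper"
    out[(u >= flip_prob / 2) & (u < flip_prob)] = high   # "salt"
    return out


rng = np.random.default_rng(0)
image = rng.random((4, 4))  # hypothetical 4x4 image with values in [0, 1)

grainy = add_gaussian_noise(image, std=0.1, rng=rng)
masked = add_masking_noise(image, drop_prob=0.3, rng=rng)
speckled = add_salt_and_pepper(image, flip_prob=0.2, rng=rng)
```

Each function returns a corrupted copy and leaves the original array untouched, so the clean data remains available as the training target.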

Different Types

Variants of denoising autoencoders include:

- Convolutional Denoising Autoencoders: Tailored for image data, leveraging convolutional layers for spatial feature extraction.

- Recurrent Denoising Autoencoders: Ideal for sequential data like time series or audio.

- Stacked Denoising Autoencoders: Use multiple layers to capture hierarchical features, making them effective for complex datasets.
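To make the stacked variant more concrete, here is a minimal NumPy sketch of greedy layer-wise training, the scheme usually associated with stacked denoising autoencoders: each layer is trained as its own small denoising autoencoder, and its clean (uncorrupted) codes become the input to the next layer. The layer sizes, noise level, learning rate, random toy data, and the names `train_dae_layer`, `encode`, and `train_stacked` are assumptions made for this illustration.

```python
import numpy as np


def train_dae_layer(x, hidden, rng, noise_std=0.1, lr=0.02, epochs=300):
    """Train one denoising layer (tanh encoder, linear decoder); return the encoder."""
    n, d = x.shape
    w = rng.normal(scale=0.1, size=(d, hidden))
    b = np.zeros(hidden)
    v = rng.normal(scale=0.1, size=(hidden, d))
    c = np.zeros(d)
    for _ in range(epochs):
        x_noisy = x + rng.normal(scale=noise_std, size=x.shape)
        h = np.tanh(x_noisy @ w + b)       # encode the corrupted input
        x_hat = h @ v + c                  # decode
        err = (x_hat - x) / n              # compare with the clean input
        dh = (err @ v.T) * (1.0 - h ** 2)  # backpropagate through tanh
        v -= lr * (h.T @ err)
        c -= lr * err.sum(axis=0)
        w -= lr * (x_noisy.T @ dh)
        b -= lr * dh.sum(axis=0)
    return w, b                            # the decoder is discarded after training


def encode(x, w, b):
    return np.tanh(x @ w + b)


def train_stacked(x, layer_sizes, rng):
    """Greedy layer-wise training: each layer learns from the previous layer's codes."""
    layers = []
    current = x
    for size in layer_sizes:
        w, b = train_dae_layer(current, size, rng)
        layers.append((w, b))
        current = encode(current, w, b)    # clean codes feed the next layer
    return layers, current


rng = np.random.default_rng(0)
data = rng.normal(size=(200, 16))          # hypothetical dataset: 200 samples, 16 features
layers, features = train_stacked(data, layer_sizes=[8, 4], rng=rng)
print(features.shape)
```

The final `features` array is the deepest representation, which could then be passed to a classifier or clustering step. In practice the whole stack is often fine-tuned end to end afterwards, which this sketch omits.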

Different Features

Key features of denoising autoencoders include:

- Noise Robustness: They are trained to perform well even with significant data corruption.

- Latent Space Representation: They extract compressed, meaningful data representations.

- Versatility: They work with various data types, from images to audio and text.

Different Software and Tools for Denoising Autoencoders

Denoising autoencoders can be implemented using popular tools like:

- TensorFlow: Provides a large library for building and training neural networks.

- PyTorch: Offers flexibility for creating custom denoising architectures.

- Keras: Simplifies the development of denoising autoencoders with pre-built layers.

- OpenCV: Provides additional tools for pre-processing noisy image data.

Three Application Examples in Australian Government Agencies

- Department of Health:

  - Use Case: Using denoising autoencoders to enhance medical imaging by removing artefacts, leading to better diagnostic accuracy.

- Australian Bureau of Statistics (ABS):

  - Use Case: Leveraging autoencoders to clean and preprocess large-scale survey data for improved analysis.

- Geoscience Australia:

  - Use Case: Applying denoising techniques to satellite images to detect subtle geological features and monitor land-use changes.
