A Brief History of This Tool: Who Developed It?

Autoencoders, a type of artificial neural network, date back to the 1980s, when researchers including David Rumelhart and Geoffrey Hinton studied networks trained to reproduce their own inputs. They were first used for dimensionality reduction and feature extraction, and they became much more popular as deep learning advanced, notably after Hinton and Salakhutdinov's 2006 work on training deep autoencoders. Today, autoencoders are used in applications ranging from data compression to anomaly detection.

What Is It?

Imagine an autoencoder as a photocopier that must reproduce an image using only a limited amount of ink, so it learns to spend that ink on the details that matter most. It compresses the input data into a smaller representation (encoding) and then reconstructs the input from that representation as closely as possible (decoding). Because the compressed form cannot hold everything, training forces the network to capture the data's essential features.
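In symbols, the encode-then-decode process can be written as follows. The notation is a common convention chosen for illustration, not taken from a particular source.

```latex
\begin{aligned}
\mathbf{z} &= f_\theta(\mathbf{x}), \qquad \hat{\mathbf{x}} = g_\phi(\mathbf{z}), \qquad \mathbf{x} \in \mathbb{R}^{n},\ \mathbf{z} \in \mathbb{R}^{k},\ k < n \\
(\theta^{*}, \phi^{*}) &= \arg\min_{\theta,\,\phi}\ \frac{1}{N}\sum_{i=1}^{N} \mathcal{L}\big(\mathbf{x}_i,\ g_\phi(f_\theta(\mathbf{x}_i))\big)
\end{aligned}
```

Here $f_\theta$ is the encoder, $g_\phi$ the decoder, $N$ the number of training examples and $\mathcal{L}$ a reconstruction loss such as mean squared error. The condition $k < n$ is what forces compression; some variants, such as sparse and denoising autoencoders, can relax it.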

Why Is It Being Used? What Challenges Are Being Addressed?

Autoencoders solve several challenges in machine learning:

- Dimensionality Reduction: Compressing high-dimensional data into compact, lower-dimensional representations.

- Noise Removal: Extracting clean signals from noisy data.

- Anomaly Detection: Identifying patterns that deviate from the norm, such as fraudulent transactions.

Because the training target is the input itself, autoencoders do not depend on labelled datasets. This makes them well suited to unsupervised learning tasks.
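The anomaly-detection idea works like this: fit a model on normal data only, then flag any input it reconstructs poorly. The sketch below illustrates this under several assumptions. To keep it short, it swaps in a linear autoencoder solved in closed form with an SVD. For mean-squared error, the best linear autoencoder spans the same subspace as PCA, and a trained nonlinear autoencoder would slot in the same way. The synthetic data, the 2-dimensional code and the 99th-percentile threshold are all illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "normal" data: 5 features that are noisy mixtures of 2 hidden factors.
mixing = rng.normal(size=(2, 5))
normal = rng.normal(size=(500, 2)) @ mixing + 0.05 * rng.normal(size=(500, 5))

# Linear autoencoder fitted in closed form (equivalent to PCA under MSE loss).
mean = normal.mean(axis=0)
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
W = vt[:2].T  # 5x2 encoder weights; the decoder uses W.T (tied weights)

def reconstruct(x):
    z = (x - mean) @ W      # encode: 5 features -> 2-dim code
    return z @ W.T + mean   # decode: 2-dim code -> 5 features

def anomaly_score(x):
    # Per-row mean squared reconstruction error.
    return np.mean((x - reconstruct(x)) ** 2, axis=1)

# Threshold: 99th percentile of scores on the normal (training) data.
threshold = np.percentile(anomaly_score(normal), 99)

# An input that breaks the correlations learned from normal data.
odd = normal[:1] + np.array([[3.0, -3.0, 0.0, 0.0, 0.0]])
print(anomaly_score(odd) > threshold)
```

The model sees no labels. Inputs that fall outside the learned structure are what produce large reconstruction errors.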

How Is It Being Used?

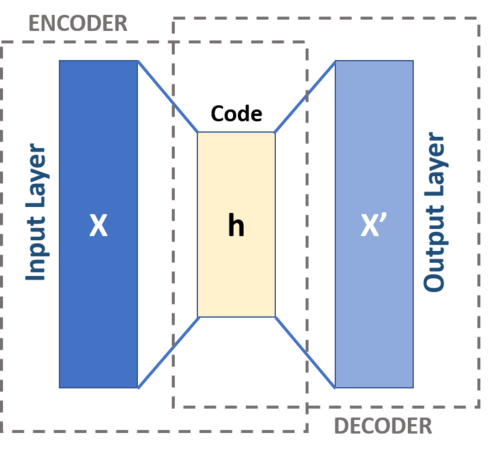

Autoencoders function in two main stages:

- Encoder: Compresses the input into a latent representation with fewer dimensions than the input.

- Decoder: Reconstructs the input from this compressed representation.
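The two stages can be sketched in plain NumPy. This is a minimal illustration, not any library's API. The class name `TinyAutoencoder`, the tanh encoder, the linear decoder, the layer sizes and the learning rate are all hypothetical choices made to keep the example short. The network is trained with full-batch gradient descent on mean-squared reconstruction error.

```python
import numpy as np

class TinyAutoencoder:
    """Hypothetical one-hidden-layer autoencoder: tanh encoder, linear decoder."""

    def __init__(self, n_in, n_latent, seed=0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(scale=0.1, size=(n_in, n_latent))
        self.b_enc = np.zeros(n_latent)
        self.W_dec = rng.normal(scale=0.1, size=(n_latent, n_in))
        self.b_dec = np.zeros(n_in)

    def encode(self, x):
        # Encoder: compress each row of x into an n_latent-dimensional code.
        return np.tanh(x @ self.W_enc + self.b_enc)

    def decode(self, z):
        # Decoder: map the code back to the original number of features.
        return z @ self.W_dec + self.b_dec

    def loss(self, x):
        return np.mean((self.decode(self.encode(x)) - x) ** 2)

    def train_step(self, x, lr=0.1):
        z = self.encode(x)
        x_hat = self.decode(z)
        grad_out = 2.0 * (x_hat - x) / x.size      # d(MSE)/d(x_hat)
        grad_W_dec = z.T @ grad_out                # backprop through linear decoder
        grad_b_dec = grad_out.sum(axis=0)
        grad_pre = (grad_out @ self.W_dec.T) * (1.0 - z ** 2)  # through tanh
        grad_W_enc = x.T @ grad_pre
        grad_b_enc = grad_pre.sum(axis=0)
        # Plain gradient-descent update.
        self.W_dec -= lr * grad_W_dec
        self.b_dec -= lr * grad_b_dec
        self.W_enc -= lr * grad_W_enc
        self.b_enc -= lr * grad_b_enc

# Usage: 6 features generated from 2 underlying factors (synthetic data).
rng = np.random.default_rng(1)
data = 0.5 * (rng.normal(size=(200, 2)) @ rng.normal(size=(2, 6)))
ae = TinyAutoencoder(n_in=6, n_latent=2)
before = ae.loss(data)
for _ in range(2000):
    ae.train_step(data, lr=0.1)
after = ae.loss(data)
codes = ae.encode(data)  # shape (200, 2): the compressed representation
print(before, after)
```

In practice a framework computes these gradients automatically. Writing them out here simply shows that the encoder and decoder are trained jointly against one reconstruction objective.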

Applications include:

- Data Compression: Reducing storage requirements while preserving key information.

- Image Processing: Enhancing or denoising images for medical or security purposes.

- Recommender Systems: Learning user preferences for more accurate recommendations.

Different Types

Autoencoders come in various types tailored to specific tasks:

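- Vanilla Autoencoders: The basic architecture, an encoder and decoder trained only to minimise reconstruction error through a narrow latent layer.

- Sparse Autoencoders: Add a sparsity penalty so that only a few latent units are active for any given input, encouraging the model to learn distinctive features.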

- Denoising Autoencoders: Train the model to reconstruct clean data from noisy inputs.

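- Variational Autoencoders (VAE): Learn a probability distribution over the latent space rather than a single code per input. Sampling from that distribution generates new data points resembling the training set, which is why VAEs are widely used in generative tasks.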
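The variants differ mainly in how they change the training objective. The sketch below expresses each one as a loss function in NumPy. To keep it self-contained, it uses hypothetical fixed linear weights (`W_enc`, `W_dec`, `W_logvar`). The noise level and sparsity weight are illustrative, and the relative scaling of the two VAE terms is simplified. In practice these losses would be minimised with a framework. For the VAE, the sketch shows the reparameterisation trick and the closed-form KL divergence between a diagonal Gaussian and a standard normal.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))               # batch of 8 samples with 4 features
W_enc = 0.5 * rng.normal(size=(4, 2))     # hypothetical encoder weights (2-dim code)
W_dec = 0.5 * rng.normal(size=(2, 4))     # hypothetical decoder weights
W_logvar = 0.1 * rng.normal(size=(4, 2))  # hypothetical VAE log-variance head

def encode(v):
    return v @ W_enc

def decode(z):
    return z @ W_dec

def mse(a, b):
    return np.mean((a - b) ** 2)

def vanilla_loss(x):
    # Plain reconstruction of the input from its own code.
    return mse(x, decode(encode(x)))

def sparse_loss(x, lam=0.01):
    # Reconstruction plus an L1 penalty that pushes code values towards zero.
    z = encode(x)
    return mse(x, decode(z)) + lam * np.mean(np.abs(z))

def denoising_loss(x, noise_std=0.3):
    # Encode a corrupted copy, but compare the output with the clean input.
    x_noisy = x + noise_std * rng.normal(size=x.shape)
    return mse(x, decode(encode(x_noisy)))

def kl_to_standard_normal(mu, log_var):
    # KL(N(mu, sigma^2) || N(0, 1)), summed over latent dims, averaged over the batch.
    return np.mean(-0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=1))

def vae_loss(x):
    # The encoder outputs a mean and a log-variance for each latent dimension.
    mu, log_var = encode(x), x @ W_logvar
    # Reparameterisation trick: z is a deterministic function of mu, log_var and noise.
    z = mu + np.exp(0.5 * log_var) * rng.normal(size=mu.shape)
    return mse(x, decode(z)) + kl_to_standard_normal(mu, log_var)

for name, fn in [("vanilla", vanilla_loss), ("sparse", sparse_loss),
                 ("denoising", denoising_loss), ("vae", vae_loss)]:
    print(name, round(float(fn(x)), 4))
```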

Different Features

Key features of autoencoders include:

- Latent Space Representation: A compressed, meaningful abstraction of the input data.

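- Reconstruction Loss: Measures the difference between the input and the reconstructed output (commonly mean squared error, or binary cross-entropy for inputs scaled to [0, 1]). Training works by minimising this loss.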

- Flexibility: Can adapt to various input types, including images, text, and numerical data.
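Two common reconstruction losses are shown below for a single input $\mathbf{x}$ with $n$ features and its reconstruction $\hat{\mathbf{x}}$. Which one fits depends on the data; binary cross-entropy assumes values in $[0, 1]$.

```latex
\begin{aligned}
\mathcal{L}_{\text{MSE}}(\mathbf{x}, \hat{\mathbf{x}}) &= \frac{1}{n}\sum_{j=1}^{n} \left(x_j - \hat{x}_j\right)^2 \\
\mathcal{L}_{\text{BCE}}(\mathbf{x}, \hat{\mathbf{x}}) &= -\frac{1}{n}\sum_{j=1}^{n} \left[x_j \log \hat{x}_j + (1 - x_j)\log\left(1 - \hat{x}_j\right)\right]
\end{aligned}
```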

Different Software and Tools for Autoencoders

Several tools make it easier to implement autoencoders:

- TensorFlow: Offers robust libraries for designing custom autoencoders.

- PyTorch: Known for its flexibility and dynamic computation graphs.

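- Keras: A high-level API, bundled with TensorFlow as tf.keras, whose pre-built layers allow quick implementation.

- Scikit-Learn: Has no dedicated autoencoder class, but its MLPRegressor can be trained to reproduce its own input, which can be enough for small-scale experiments.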
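The scikit-learn route can be sketched roughly as follows: `MLPRegressor` is trained to predict its own input through a 2-unit hidden layer. The synthetic data, layer size and settings are assumptions. `MLPRegressor` has no encode method, so the code is computed by hand from the fitted `coefs_` and `intercepts_` attributes. That step relies on the identity activation used here; with a nonlinear activation, the same function would have to be applied after the affine step.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(0)
# 300 samples with 6 features driven by 2 hidden factors (synthetic data).
X = rng.normal(size=(300, 2)) @ rng.normal(size=(2, 6))

# Fit the network to map X back to X through a 2-unit bottleneck.
ae = MLPRegressor(hidden_layer_sizes=(2,), activation="identity",
                  max_iter=2000, random_state=0)
ae.fit(X, X)

X_hat = ae.predict(X)
# With the identity activation, the hidden layer is an affine map of the input.
codes = X @ ae.coefs_[0] + ae.intercepts_[0]
print(codes.shape, np.mean((X - X_hat) ** 2))
```

For anything beyond small experiments, a deep-learning framework such as TensorFlow/Keras or PyTorch gives direct access to the encoder and decoder.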

Three Application Examples in Australian Government Agencies

- Department of Home Affairs:
  - Use Case: Using autoencoders for anomaly detection in visa application patterns, identifying irregular activities.

- Australian Bureau of Statistics (ABS):
  - Use Case: Employing autoencoders for data compression to handle large-scale census data efficiently.

- Bureau of Meteorology:
  - Use Case: Utilising autoencoders for noise reduction in weather satellite images, improving forecasting accuracy.