A Brief History of Gradient Perturbation

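Gradient perturbation has evolved significantly, drawing on advances in both privacy-preserving technology and machine learning optimisation. Its optimisation roots go back to gradient descent, proposed by Augustin-Louis Cauchy in 1847, and to stochastic approximation (Robbins and Monro, 1951). Its privacy foundations come from differential privacy, formalised by Cynthia Dwork and colleagues in 2006. The two strands converged in differentially private stochastic gradient descent (DP-SGD), popularised for deep learning by Abadi et al. in 2016. Today, gradient perturbation plays a critical role in protecting sensitive data in AI models.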

What Is Gradient Perturbation?

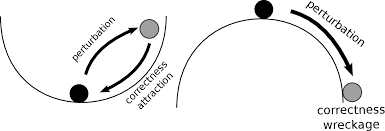

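Gradient perturbation can be compared to a chef adding a pinch of spice to a dish: it introduces a carefully controlled amount of random noise into a machine learning model's gradient updates during training. Each example's gradient is usually clipped to a maximum size first, so that no single record can noticeably influence the trained model. This protects individual records while keeping the cost to model accuracy modest and tunable.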
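One widely used formalisation is the DP-SGD update of Abadi et al. (2016), sketched below. The symbols are introduced here for illustration: g_i is the gradient for example i in a batch B, C is the clipping norm, σ is the noise multiplier, η is the learning rate and θ denotes the model parameters.

```latex
\bar{g}_i = g_i \cdot \min\!\left(1,\ \frac{C}{\lVert g_i \rVert_2}\right)
\qquad
\tilde{g} = \frac{1}{|B|}\left(\sum_{i \in B} \bar{g}_i + \mathcal{N}\!\left(0,\ \sigma^2 C^2 \mathbf{I}\right)\right)
\qquad
\theta \leftarrow \theta - \eta\,\tilde{g}
```

Clipping bounds each example's contribution to the sum at C, which is what allows Gaussian noise with standard deviation σC to mask the presence or absence of any single record.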

Why Is It Being Used? What Challenges Does It Address?

Gradient perturbation tackles key challenges in AI and machine learning:

- Data Privacy Protection: Helps organisations meet their obligations under data protection laws such as the EU's GDPR and Australia's Privacy Act 1988.

- Privacy Attack Mitigation: Limits how much an attacker can learn about individual training records from a trained model, for example through membership inference or model inversion attacks.

- Trust in AI Systems: Builds confidence in AI adoption by reducing the privacy risks of training models on personal data.

How Is Gradient Perturbation Used?

Gradient perturbation is implemented during the training of machine learning models. Key applications include:

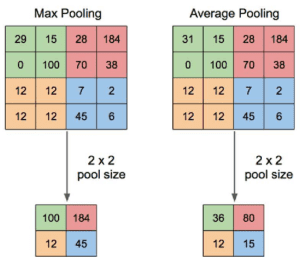

- Noise Injection: Clips each example's gradient and adds calibrated random noise at every step of the optimisation process, so the updates reveal little about any individual record.

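- Federated Learning: Noise is added to model updates, either by each participant before sharing or by the server during aggregation, so organisations can train a model collaboratively without exposing raw data.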

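- Differential Privacy Mechanisms: Calibrating the noise to the clipping bound yields a formal (ε, δ)-differential privacy guarantee, and a privacy accountant tracks how much of the privacy budget is spent over the course of training.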
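The noise-injection step can be sketched in plain Python. This is a minimal, dependency-free illustration that assumes per-example gradients are already available as lists of floats; the function names (`clip_gradient`, `noisy_mean_gradient`, `dp_sgd_step`) are hypothetical, and the sketch deliberately omits privacy accounting and the random batch sampling that formal DP-SGD guarantees rely on.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def clip_gradient(grad: Sequence[float], clip_norm: float) -> Vector:
    """Scale a gradient down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in grad]


def noisy_mean_gradient(
    per_example_grads: Sequence[Sequence[float]],
    clip_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> Vector:
    """Clip each per-example gradient, sum them, add Gaussian noise, then average."""
    total = [0.0] * len(per_example_grads[0])
    for grad in per_example_grads:
        for j, x in enumerate(clip_gradient(grad, clip_norm)):
            total[j] += x
    # The noise standard deviation is tied to the clipping bound (sigma * C).
    std = noise_multiplier * clip_norm
    noisy_total = [t + rng.gauss(0.0, std) for t in total]
    return [t / len(per_example_grads) for t in noisy_total]


def dp_sgd_step(
    params: Sequence[float],
    per_example_grads: Sequence[Sequence[float]],
    lr: float,
    clip_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> Vector:
    """One gradient-perturbed SGD update: params - lr * noisy mean gradient."""
    g = noisy_mean_gradient(per_example_grads, clip_norm, noise_multiplier, rng)
    return [p - lr * gj for p, gj in zip(params, g)]


if __name__ == "__main__":
    rng = random.Random(42)
    params = [0.0, 0.0]
    grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]  # toy per-example gradients
    print(dp_sgd_step(params, grads, lr=0.1, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

With `noise_multiplier=0` the step reduces to clipped, non-private SGD, which is a handy sanity check. For the toy gradients above, the first and third gradients are scaled down to norm 1 while the second (norm 0.5) is left unchanged.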

Different Types of Gradient Perturbation

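- Gaussian Noise Perturbation: Adds Gaussian noise calibrated to the L2 sensitivity of the clipped gradients. It provides (ε, δ)-differential privacy and is the noise used in standard DP-SGD, offering a good balance between privacy and model utility.

- Laplacian Noise Perturbation: Adds Laplace noise calibrated to the L1 sensitivity, giving pure ε-differential privacy (δ = 0). This suits highly sensitive datasets where no failure probability is acceptable, although L1 sensitivity can be much larger than L2 sensitivity for high-dimensional gradients.

- Adaptive Perturbation: Dynamically adjusts the noise level or clipping threshold during training based on the sensitivity of gradients (for example, their observed norms), so that less utility is lost for the same privacy budget.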
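To make the difference between the Gaussian and Laplacian variants concrete, here is a sketch using the classic textbook calibrations (as in Dwork and Roth's textbook on differential privacy): the Gaussian mechanism with σ = √(2 ln(1.25/δ)) · Δ₂/ε, stated for 0 < ε < 1, and the Laplace mechanism with scale b = Δ₁/ε. Each function privatises a single sum of clipped per-example gradients; the function names are hypothetical, adaptive perturbation is not shown, and real training libraries use tighter accountants to track privacy across many steps.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def l1_norm(v: Sequence[float]) -> float:
    return sum(abs(x) for x in v)


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def clipped_sum(grads: Sequence[Sequence[float]], clip_norm: float,
                norm: Callable[[Sequence[float]], float]) -> Vector:
    """Sum per-example gradients after scaling each so that norm(g) <= clip_norm."""
    total = [0.0] * len(grads[0])
    for g in grads:
        n = norm(g)
        scale = min(1.0, clip_norm / n) if n > 0 else 1.0
        for j, x in enumerate(g):
            total[j] += x * scale
    return total


def gaussian_sigma(l2_sensitivity: float, epsilon: float, delta: float) -> float:
    """Classic Gaussian-mechanism std for (epsilon, delta)-DP, stated for 0 < epsilon < 1."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("classic bound needs 0 < epsilon < 1 and 0 < delta < 1")
    return math.sqrt(2 * math.log(1.25 / delta)) * l2_sensitivity / epsilon


def laplace_scale(l1_sensitivity: float, epsilon: float) -> float:
    """Laplace-mechanism scale b for pure epsilon-DP."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return l1_sensitivity / epsilon


def sample_laplace(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def gaussian_noisy_sum(grads, clip_norm, epsilon, delta, rng) -> Vector:
    # With L2 clipping, adding or removing one example moves the sum by at most clip_norm (L2).
    sigma = gaussian_sigma(clip_norm, epsilon, delta)
    return [t + rng.gauss(0.0, sigma) for t in clipped_sum(grads, clip_norm, l2_norm)]


def laplace_noisy_sum(grads, clip_norm, epsilon, rng) -> Vector:
    # With L1 clipping, the same change is bounded by clip_norm in L1 norm.
    b = laplace_scale(clip_norm, epsilon)
    return [t + sample_laplace(b, rng) for t in clipped_sum(grads, clip_norm, l1_norm)]


if __name__ == "__main__":
    rng = random.Random(7)
    grads = [[0.5, -1.5], [2.0, 0.1], [-0.2, 0.3]]  # toy per-example gradients
    print("Gaussian sigma:", gaussian_sigma(1.0, epsilon=0.5, delta=1e-5))
    print("Laplace scale:", laplace_scale(1.0, epsilon=0.5))
    print(gaussian_noisy_sum(grads, 1.0, 0.5, 1e-5, rng))
    print(laplace_noisy_sum(grads, 1.0, 0.5, rng))
```

With C = 1, ε = 0.5 and δ = 10⁻⁵, the Gaussian standard deviation works out to roughly 9.69, while the Laplace scale is 2.0. The two are not directly comparable: the Laplace version forces each gradient into a much smaller L1 ball, which discards more signal as the number of parameters grows, and the classic Gaussian bound is loose compared with the accountants used in practice.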

Features of Gradient Perturbation

Gradient perturbation is valued for its:

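- Scalable Privacy Solutions: Works with datasets and models of varying size and complexity; larger datasets generally retain more accuracy at a given privacy level, because the added noise is averaged over more examples.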

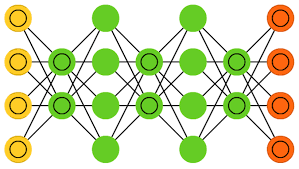

- Integration with AI Algorithms: Compatible with gradient-based optimisers like Stochastic Gradient Descent (SGD).

- Customisable Privacy Levels: The privacy budget (ε, δ), clipping norm and noise multiplier can be tuned to meet specific privacy needs and regulatory requirements.
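Because the change is confined to how the gradient is computed, the rest of an SGD training loop stays the same, and the noise multiplier acts as the privacy dial. The sketch below fits a one-parameter linear model y ≈ w · x on synthetic data. `train_linear` and its parameters are hypothetical; batches are formed by shuffling rather than the Poisson sampling assumed by formal DP-SGD analyses, and no ε is computed.

```python
import random
from typing import Sequence


def train_linear(
    xs: Sequence[float],
    ys: Sequence[float],
    epochs: int,
    lr: float,
    batch_size: int,
    clip_norm: float,
    noise_multiplier: float,
    seed: int = 0,
) -> float:
    """Fit y ~ w * x by mini-batch SGD with clipped, noise-perturbed gradients.

    noise_multiplier=0 gives ordinary (clipped, non-private) SGD.
    """
    rng = random.Random(seed)
    w = 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            total = 0.0
            for i in batch:
                g = (w * xs[i] - ys[i]) * xs[i]  # gradient of 0.5 * (w*x - y)^2
                total += max(-clip_norm, min(clip_norm, g))  # clip to [-C, C]
            total += rng.gauss(0.0, noise_multiplier * clip_norm)  # perturb the sum
            w -= lr * total / len(batch)  # standard SGD update on the noisy mean
    return w


if __name__ == "__main__":
    data_rng = random.Random(1)
    xs = [data_rng.uniform(-1.0, 1.0) for _ in range(1000)]
    ys = [2.0 * x + data_rng.gauss(0.0, 0.1) for x in xs]  # true slope is 2
    for nm in (0.0, 1.1, 4.0):
        w = train_linear(xs, ys, epochs=5, lr=0.5, batch_size=50,
                         clip_norm=1.0, noise_multiplier=nm)
        print(f"noise_multiplier={nm}: w = {w:.3f}")
```

Running the script prints one slope estimate per noise level. Larger multipliers would yield a stronger privacy guarantee once accounted for, and you should typically see the estimate wander further from the true slope of 2.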

Tools Supporting Gradient Perturbation

Several tools and frameworks make implementing gradient perturbation accessible:

- TensorFlow Privacy: Provides differentially private optimisers, such as DP-SGD, for TensorFlow and Keras models.

- PySyft: Facilitates privacy-preserving AI development with noise injection capabilities.

- PyTorch Opacus: Specialises in applying differential privacy techniques to PyTorch models.

- IBM Differential Privacy Library (diffprivlib): An open-source, general-purpose library of differential privacy mechanisms and privacy-preserving machine learning models.

Applications of Gradient Perturbation in Australian Governmental Agencies

Gradient perturbation can support privacy-preserving AI across several Australian government sectors:

- Healthcare Data Analysis (Australian Institute of Health and Welfare, AIHW): Safeguards patient data during advanced analytics for public health programs.

- AI Models in Education (Department of Education): Protects sensitive student information while improving predictive models for educational outcomes.

- Energy Grid Optimisation (Australian Energy Market Operator, AEMO): Protects consumer and market participant data while AI is used to optimise energy supply and demand across Australia.

Conclusion

Gradient perturbation strikes a crucial balance between data privacy and model performance, supporting compliance with privacy laws and fostering trust in AI. Its applications in healthcare, education, and energy highlight its transformative potential. With tools like TensorFlow Privacy and PyTorch Opacus, adding gradient perturbation to an existing training pipeline typically requires only modest code changes.
