A Brief History of RMSProp

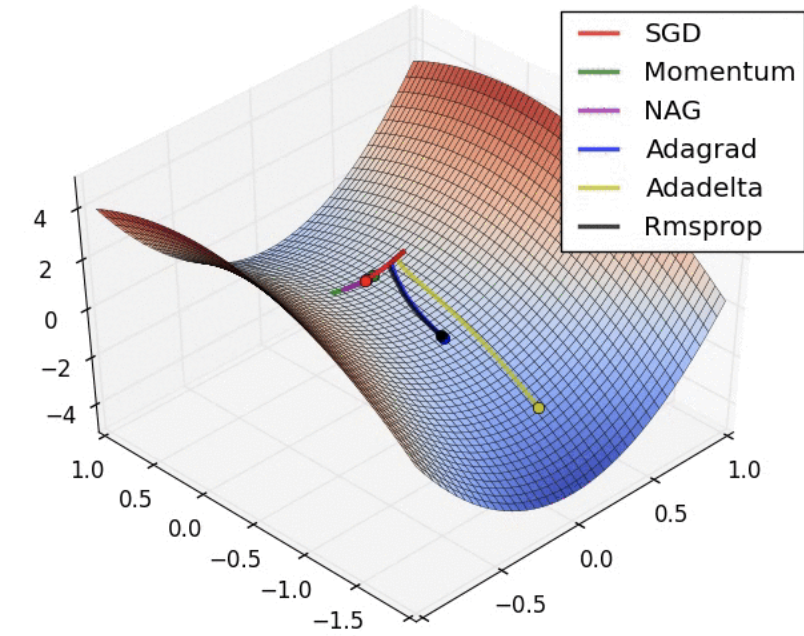

RMSProp (Root Mean Square Propagation) was introduced by Geoffrey Hinton in 2012 in his Coursera lecture series Neural Networks for Machine Learning, rather than in a formal paper. The optimiser adapts the effective learning rate of each parameter during training, with the aim of making the training of deep learning models more stable and efficient.

What Is RMSProp?

Imagine running on a winding path with varying terrain: RMSProp acts like customised shoes that adjust their grip based on the surface, helping you move efficiently. It is an adaptive gradient descent algorithm: each parameter’s step is scaled by a running average of its recent squared gradients, which often makes optimisation smoother and faster.
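
In symbols, one common statement of the update is sketched below. The notation is an assumption introduced here for illustration: $g_t$ is the gradient of the loss with respect to a parameter $\theta$ at step $t$, $v_t$ is a running average of squared gradients, $\rho$ is the decay rate (0.9 is a commonly used value), $\eta$ is the learning rate, and $\epsilon$ is a small constant for numerical stability.

$$
v_t = \rho\, v_{t-1} + (1 - \rho)\, g_t^2, \qquad
\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{v_t} + \epsilon}\, g_t
$$

Implementations can differ in details, such as whether $\epsilon$ is placed inside or outside the square root.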

Why Is It Used? What Challenges Does It Address?

RMSProp addresses critical challenges in deep learning optimisation:

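- Vanishing or Exploding Gradients: Dividing each gradient by a running root mean square of its recent values keeps step sizes in a similar range, which mitigates (but does not eliminate) the effect of very small or very large gradients.

- Flat Regions in Loss Functions: Where gradients stay small, the running average shrinks as well, so the effective step grows and training is less likely to stall.

- Faster Convergence: Often makes steadier progress than plain gradient descent when gradients are noisy, as in mini-batch training.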
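
The normalisation described in the list above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `rmsprop_step`, the toy loss, and the hyperparameter values are all chosen here for the example.

```python
import math

def rmsprop_step(params, grads, state, lr=0.01, rho=0.9, eps=1e-8):
    """Apply one RMSProp update in place and return the per-parameter steps."""
    steps = []
    for i, g in enumerate(grads):
        # Running average of squared gradients for parameter i.
        state[i] = rho * state[i] + (1.0 - rho) * g * g
        # Divide the gradient by its running root mean square.
        step = lr * g / (math.sqrt(state[i]) + eps)
        params[i] -= step
        steps.append(step)
    return steps

# Hypothetical toy loss f(x, y) = 0.5 * (1000 * x**2 + 0.001 * y**2):
# its two gradients differ in scale by a factor of a million.
def grad(p):
    return [1000.0 * p[0], 0.001 * p[1]]

params = [1.0, 1.0]
state = [0.0, 0.0]
first_steps = rmsprop_step(params, grad(params), state)
print(first_steps)
```

With the same learning rate, plain gradient descent would move the two parameters by 10 and 0.00001 on this first update. Here both steps come out at roughly 0.03, because each gradient is divided by its own running magnitude.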

How Is It Used?

RMSProp is widely implemented in deep learning frameworks. Here’s how it is typically applied:

- Define the Optimiser: Specify RMSProp as the optimisation algorithm, with adjustable hyperparameters such as the learning rate.

- Compile the Model: Integrate the optimiser into the neural network’s configuration alongside the loss function and metrics.

- Train the Model: Run the training process, leveraging RMSProp’s adaptive learning rate for efficient convergence.
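
As a rough illustration of these three steps, the sketch below uses the Keras API bundled with TensorFlow. The synthetic data, the single-layer model, and the hyperparameter values are assumptions made for the example, not recommendations.

```python
import numpy as np
import tensorflow as tf

tf.keras.utils.set_random_seed(0)  # make the run repeatable

# Synthetic regression data (purely illustrative).
x = np.random.rand(256, 3).astype("float32")
y = x @ np.array([[1.5], [-2.0], [0.5]], dtype="float32")

# 1. Define the optimiser with its main hyperparameters.
optimizer = tf.keras.optimizers.RMSprop(learning_rate=0.01, rho=0.9)

# 2. Compile the model with the optimiser, a loss function and a metric.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer=optimizer, loss="mse", metrics=["mae"])

# 3. Train the model; the returned history records the loss per epoch.
history = model.fit(x, y, epochs=20, batch_size=32, verbose=0)
print(history.history["loss"][0], history.history["loss"][-1])
```

In PyTorch the optimiser is instead passed the model parameters directly, and the training loop is written by hand.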

Different Types

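The basic algorithm has a few documented variants, such as a centred version and a version with momentum, and it is often combined with or built into other techniques, such as:

- Nesterov Momentum: Adds a look-ahead velocity term to the update, which can speed up progress along consistent gradient directions.

- Adam Optimiser: Combines an RMSProp-style running average of squared gradients with a running average of the gradients themselves, plus a bias correction for the early steps.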
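
To make the relationship with Adam concrete, here is a minimal sketch of one Adam update for a single scalar parameter. The function name `adam_step` is hypothetical, and the default values shown are the commonly quoted ones rather than anything taken from this article.

```python
import math

def adam_step(theta, g, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t counts steps starting at 1."""
    m = beta1 * m + (1.0 - beta1) * g      # momentum-style average of gradients
    v = beta2 * v + (1.0 - beta2) * g * g  # RMSProp-style average of squared gradients
    m_hat = m / (1.0 - beta1 ** t)         # bias correction for the zero initial state
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

theta, m, v = adam_step(theta=1.0, g=5.0, m=0.0, v=0.0, t=1)
print(theta)
```

The line that updates `v` is the part Adam shares with RMSProp. The average `m` and the two bias-correction lines are what Adam adds on top.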

Key Features of RMSProp

RMSProp is valued for the following features:

- Adaptive Learning Rates: Automatically adjusts the effective learning rate of each parameter.

- Memory Efficiency: Stores a single running average of squared gradients per parameter, weighted towards recent gradients, rather than the entire gradient history.

- Robust Performance: Copes well with non-stationary objectives, where the gradient distribution shifts during training, as it does when training neural networks.
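
The difference between tracking recent gradients and accumulating the whole history can be seen in a small sketch. The comparison with an AdaGrad-style cumulative sum is added here for illustration only, and the gradient sequence is made up.

```python
def running_average(grads, rho=0.9):
    """RMSProp-style exponentially decaying average of squared gradients."""
    v = 0.0
    for g in grads:
        v = rho * v + (1.0 - rho) * g * g
    return v

def cumulative_sum(grads):
    """AdaGrad-style sum of every squared gradient seen so far."""
    return sum(g * g for g in grads)

# Hypothetical sequence: 10 large gradients followed by 100 small ones.
grads = [10.0] * 10 + [0.1] * 100

v_recent = running_average(grads)
v_all = cumulative_sum(grads)
print(v_recent, v_all)
```

The running average ends close to 0.01, the square of the recent small gradients, so the effective step size recovers. The cumulative sum stays above 1000 because the early large gradients are never forgotten. Both approaches keep only one number per parameter.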

Popular Tools and Software Supporting RMSProp

RMSProp is integrated into leading machine learning frameworks:

- TensorFlow/Keras: Provides RMSProp as the tf.keras.optimizers.RMSprop class.

- PyTorch: Provides RMSProp as the torch.optim.RMSprop class.

- MXNet: Includes an RMSProp optimiser.

Applications of RMSProp in Australian Governmental Agencies

RMSProp drives innovation in various Australian public sectors:

Healthcare Analytics (AIHW):

- Application: Enhances patient outcome prediction models using large healthcare datasets.

Transportation Systems (Transport for NSW):

- Application: Optimises traffic flow prediction systems for real-time transport planning and scheduling.

Environmental Monitoring (CSIRO):

- Application: Refines AI models for forecasting climate change impacts, adapting to dynamic environmental datasets.

Key Statistics on RMSProp Adoption

- Global Adoption: According to a 2023 Statista report, RMSProp is among the top three optimisers used for training deep learning models worldwide.

- Local Impact (ANZ): The Australian Institute for Machine Learning (AIML) reported that adopting RMSProp reduced model training times by up to 25% in public-sector AI projects.

Conclusion

RMSProp helped popularise per-parameter adaptive learning rates, which improve stability and convergence in deep learning optimisation. Applied in Australian sectors such as healthcare, transport, and environmental monitoring, it continues to demonstrate its versatility and efficiency. Built-in support in frameworks such as TensorFlow and PyTorch makes it straightforward to adopt, and it remains a widely used optimiser in modern AI development.
