A Brief History: Who Developed It?

The concept of ensemble methods, including voting classifiers, emerged in the 1990s. Researchers like Thomas Dietterich and Leo Breiman pioneered the idea of combining multiple models to enhance predictive performance. Voting classifiers apply this principle by aggregating outputs from diverse models to improve decision-making accuracy.

What Is a Voting Classifier?

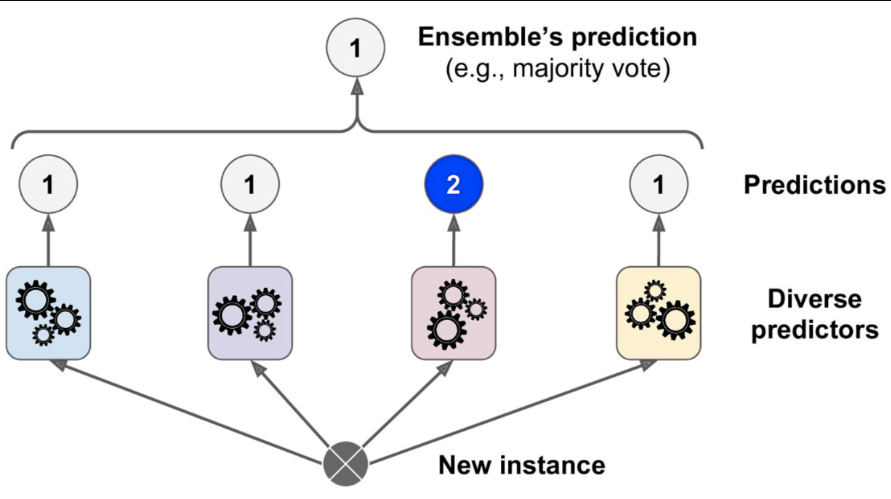

Imagine a talent competition where judges cast their votes to decide the winner. Each judge brings a unique perspective, but the final decision reflects their collective wisdom. Similarly, voting classifiers integrate predictions from various machine learning models, leveraging their combined strengths for better outcomes.

Why Are Voting Classifiers Used?

Voting classifiers address critical challenges in machine learning:

- Enhanced Accuracy: Improves predictions by combining the strengths of multiple algorithms.

- Model Diversity: Balances overfitting risks by leveraging varied models.

- Robust Performance: Reduces the impact of any single model's errors on noisy or complex datasets.

Without ensemble voting, individual models might struggle to generalise on challenging datasets, leading to suboptimal predictions.

How Are Voting Classifiers Used?

The workflow of a voting classifier includes the following steps:

Train Multiple Models: Train models such as decision trees, logistic regression, and support vector machines on the same dataset.

Select a Voting Strategy:

- Hard Voting: Predicts the class that receives the most votes from the individual models.

- Soft Voting: Averages the class probabilities predicted by each model and selects the class with the highest average.

Combine Predictions: Aggregate the individual outputs according to the chosen strategy to produce a single final prediction.

This approach draws on the complementary strengths of the individual models. It often outperforms any single model, provided the models make different kinds of errors.
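The workflow above can be sketched with scikit-learn's VotingClassifier. This is a minimal illustration rather than a recommended configuration: the synthetic dataset, the hyperparameters, and the estimator names ("tree", "logreg", "svm") are assumptions chosen for the example.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only for illustration (an assumption, not a real dataset).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Step 1: define the base models; all are trained on the same dataset.
estimators = [
    ("tree", DecisionTreeClassifier(max_depth=5, random_state=0)),
    ("logreg", LogisticRegression(max_iter=1000)),
    # probability=True lets the SVM output class probabilities for soft voting.
    ("svm", SVC(probability=True, random_state=0)),
]

# Step 2: select the voting strategy ("hard" or "soft").
ensemble = VotingClassifier(estimators=estimators, voting="soft")

# Step 3: fit every base model, then combine their predictions.
ensemble.fit(X_train, y_train)
predictions = ensemble.predict(X_test)
accuracy = ensemble.score(X_test, y_test)
print(f"Ensemble accuracy: {accuracy:.3f}")
```

Switching to voting="hard" makes the ensemble count predicted labels instead of averaging probabilities, in which case the SVM no longer needs probability=True.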

Different Types of Voting Classifiers

Voting classifiers fall into two types based on their strategy:

- Hard Voting: Each model casts one vote for its predicted class, and the class with the most votes wins.

- Soft Voting: Averages the predicted class probabilities, optionally weighted per model, and selects the most probable class.
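To see how the two strategies can disagree, here is a small hand-rolled sketch in plain Python. The probability values, the class labels, and the helper names hard_vote and soft_vote are hypothetical, made up purely to illustrate the arithmetic.

```python
from collections import Counter

classes = ["A", "B"]

# Hypothetical class probabilities from three models for one sample.
probabilities = [
    [0.90, 0.10],  # model 1 is very confident in A
    [0.40, 0.60],  # model 2 leans towards B
    [0.45, 0.55],  # model 3 leans slightly towards B
]


def hard_vote(probabilities, classes):
    """Each model votes for its most probable class; the majority wins."""
    votes = [classes[row.index(max(row))] for row in probabilities]
    return Counter(votes).most_common(1)[0][0]


def soft_vote(probabilities, classes, weights=None):
    """Average the probabilities (optionally weighted) and pick the top class."""
    if weights is None:
        weights = [1.0] * len(probabilities)
    total = sum(weights)
    averaged = [
        sum(w * row[i] for w, row in zip(weights, probabilities)) / total
        for i in range(len(classes))
    ]
    return classes[averaged.index(max(averaged))]


hard_result = hard_vote(probabilities, classes)
soft_result = soft_vote(probabilities, classes)
print(hard_result, soft_result)  # prints "B A"
```

Hard voting picks B because two of the three models prefer it. Soft voting picks A because model 1's high confidence lifts the average probability of A to about 0.58.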

Key Features of Voting Classifiers

Voting classifiers are valued for their unique features:

- Flexibility: Allows integration of diverse machine learning models.

- Customisable Voting Strategies: Supports both hard and soft voting approaches.

- Adaptability: Designed for classification tasks; the same idea extends to regression by averaging numeric predictions (for example, scikit-learn's VotingRegressor).

Popular Software and Tools for Voting Classifiers

Several tools simplify the implementation of voting classifiers:

- Scikit-learn (Python): Features the VotingClassifier for easy ensemble integration.

- Weka: Provides built-in support for creating voting ensembles.

- R Libraries: Packages like caretEnsemble simplify ensemble modelling with voting strategies.

Applications of Voting Classifiers in Australian Governmental Agencies

Voting classifiers can support decision-making across a range of Australian government functions:

Healthcare Analytics:

- Application: Combining predictions from multiple models to classify patient risk levels with high precision.

Traffic Management:

- Application: Forecasting congestion patterns using diverse predictive models to improve infrastructure planning.

Economic Analysis:

- Application: Aggregating outputs from economic simulations to comprehensively assess the impact of public policies.

Conclusion

Voting classifiers combine the strengths of multiple machine learning models to produce more robust predictions across diverse applications. Their flexibility makes them useful in fields like healthcare, transport, and economics. With accessible tools like Scikit-learn, Weka, and R libraries, voting classifiers provide a practical framework for tackling complex data challenges.
