A Brief History of This Tool: Who Developed It?

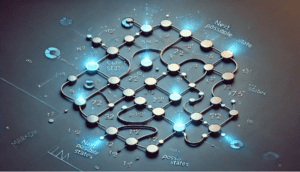

Deep Belief Networks (DBNs) were introduced in 2006 by Geoffrey Hinton and his colleagues Simon Osindero and Yee-Whye Teh in the paper "A Fast Learning Algorithm for Deep Belief Nets". The work was a milestone for unsupervised learning and probabilistic models: it showed that deep networks could be trained effectively one layer at a time, which helped lay the foundation for deep learning as we know it today and made it practical for machines to learn complex hierarchical structures in data.

What Is It?

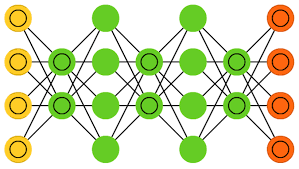

Imagine teaching a child to recognise objects. You start with simple shapes like circles and rectangles, then build on that understanding to recognise more complex objects like cars or animals. Similarly, DBNs are a stack of Restricted Boltzmann Machines (RBMs) that learn data features layer by layer, progressing from simple patterns to more abstract representations.

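At its core, a DBN is a generative probabilistic model. Its layers are first pretrained without labels (unsupervised feature learning), and the whole stack can then be fine-tuned with labelled data for a specific task (supervised fine-tuning).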
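The layer-by-layer idea can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function names `train_rbm` and `pretrain_dbn`, the toy binary data, the layer sizes, and the choice of one-step Contrastive Divergence on full batches are all assumptions made for the example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def train_rbm(data, n_hidden, epochs=20, lr=0.1, seed=0):
    """Train one Bernoulli RBM with CD-1 on full-batch data; return (W, b_vis, b_hid)."""
    rng = np.random.default_rng(seed)
    n_samples, n_visible = data.shape
    W = 0.01 * rng.standard_normal((n_visible, n_hidden))
    b_vis = np.zeros(n_visible)
    b_hid = np.zeros(n_hidden)
    for _ in range(epochs):
        # Positive phase: hidden probabilities given the data.
        h_prob = sigmoid(data @ W + b_hid)
        h_sample = (rng.random(h_prob.shape) < h_prob).astype(float)
        # Negative phase: one step down to the visible layer and back up.
        v_recon = sigmoid(h_sample @ W.T + b_vis)
        h_recon = sigmoid(v_recon @ W + b_hid)
        # CD-1 update: data-driven statistics minus reconstruction-driven statistics.
        W += lr * (data.T @ h_prob - v_recon.T @ h_recon) / n_samples
        b_vis += lr * (data - v_recon).mean(axis=0)
        b_hid += lr * (h_prob - h_recon).mean(axis=0)
    return W, b_vis, b_hid


def pretrain_dbn(data, layer_sizes):
    """Greedily train a stack of RBMs; each layer learns from the one below."""
    layers = []
    layer_input = data
    for i, n_hidden in enumerate(layer_sizes):
        W, b_vis, b_hid = train_rbm(layer_input, n_hidden, seed=i)
        layers.append((W, b_vis, b_hid))
        # The hidden activations become the "data" for the next RBM.
        layer_input = sigmoid(layer_input @ W + b_hid)
    return layers


rng = np.random.default_rng(42)
toy_data = (rng.random((64, 12)) > 0.5).astype(float)  # hypothetical binary inputs
dbn = pretrain_dbn(toy_data, layer_sizes=[8, 4])
print([W.shape for W, _, _ in dbn])  # [(12, 8), (8, 4)]
```

Each RBM only ever sees the output of the layer beneath it, which is what lets the stack move from simple patterns to more abstract ones without any labels.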

Why Is It Being Used? What Challenges Are Being Addressed?

DBNs excel at solving challenges in deep learning, such as:

- Efficient Feature Extraction: They reduce the need for manual feature engineering by automatically identifying relevant patterns in data.

- Data Representation: DBNs can learn hierarchical representations of data, making them ideal for image recognition, natural language processing, and time-series prediction.

- Initialisation for Deep Neural Networks: They provide a robust pretraining method, improving the training stability and convergence of deeper networks.
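To make the initialisation point concrete, the sketch below copies pretrained weights into a feed-forward classifier and adds a new output layer on top. It is only an illustration: the helper names `build_classifier` and `predict_proba` are hypothetical, the "pretrained" weights are random stand-ins, and the backpropagation step that would actually fine-tune the network is left out.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def build_classifier(pretrained_layers, n_classes):
    """Copy pretrained (W, b_hid) pairs into a feed-forward net and add a fresh output layer."""
    hidden = [(W.copy(), b.copy()) for W, b in pretrained_layers]
    top_width = hidden[-1][0].shape[1]
    # The output layer has no pretrained counterpart, so it starts at zero.
    output = (np.zeros((top_width, n_classes)), np.zeros(n_classes))
    return hidden, output


def predict_proba(x, hidden, output):
    """Forward pass: sigmoid hidden layers followed by a softmax output."""
    for W, b in hidden:
        x = sigmoid(x @ W + b)
    W_out, b_out = output
    return softmax(x @ W_out + b_out)


# Stand-in for weights produced by RBM pretraining (random here, for illustration only).
rng = np.random.default_rng(0)
pretrained = [
    (0.1 * rng.standard_normal((12, 8)), np.zeros(8)),
    (0.1 * rng.standard_normal((8, 4)), np.zeros(4)),
]
hidden, output = build_classifier(pretrained, n_classes=3)
probs = predict_proba(rng.random((5, 12)), hidden, output)
print(probs.shape)  # (5, 3)
```

Because the output layer starts at zero, every class gets the same probability before fine-tuning; supervised training then adjusts both the new layer and the pretrained ones.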

Global Impact: DBNs are widely used in industries like healthcare, finance, and autonomous systems. A report by Grand View Research highlights a 12.5% annual growth rate in AI applications using DBNs worldwide.

Australia: CSIRO and healthcare institutions use DBNs for early disease detection, achieving 15% better diagnostic accuracy in studies involving unstructured medical data.

How Is It Being Used?

DBNs are employed in several applications:

- Image Recognition: Understanding complex visual data for object detection and facial recognition.

- Natural Language Processing (NLP): Capturing word semantics for translation and sentiment analysis.

- Time-Series Prediction: Modelling sequential data for stock market predictions or weather forecasting.

Different Types

While DBNs themselves are a specific architecture, variations can arise based on:

- Pretraining Strategy: The RBMs can be trained with different algorithms, most commonly Contrastive Divergence (CD), which itself can be run with different numbers of sampling steps.

- Task-Specific Adaptations: Fine-tuning layers to handle specific data modalities such as text or audio.
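As a rough illustration of how pretraining strategies can differ, the sketch below computes the weight-gradient estimate for one RBM in two ways. The first is Contrastive Divergence with k sampling steps (CD-k). The second is Persistent Contrastive Divergence, a related algorithm that is not mentioned above and is included here only for comparison. The function names `gibbs_chain` and `weight_gradient`, the sizes, and the random data are assumptions for the example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gibbs_chain(v_start, W, b_vis, b_hid, k, rng):
    """Run k alternating Gibbs steps from v_start; return the final binary visible sample."""
    v = v_start
    for _ in range(k):
        h_prob = sigmoid(v @ W + b_hid)
        h = (rng.random(h_prob.shape) < h_prob).astype(float)
        v_prob = sigmoid(h @ W.T + b_vis)
        v = (rng.random(v_prob.shape) < v_prob).astype(float)
    return v


def weight_gradient(v_data, v_model, W, b_hid):
    """Gradient estimate for W: data statistics minus model statistics."""
    h_data = sigmoid(v_data @ W + b_hid)
    h_model = sigmoid(v_model @ W + b_hid)
    return (v_data.T @ h_data - v_model.T @ h_model) / v_data.shape[0]


rng = np.random.default_rng(0)
W = 0.01 * rng.standard_normal((6, 3))
b_vis, b_hid = np.zeros(6), np.zeros(3)
batch = (rng.random((10, 6)) > 0.5).astype(float)  # hypothetical binary mini-batch

# CD-k: restart the sampling chain at the data for every update.
v_cd = gibbs_chain(batch, W, b_vis, b_hid, k=1, rng=rng)
grad_cd = weight_gradient(batch, v_cd, W, b_hid)

# Persistent CD: keep one chain alive across updates instead of restarting it.
persistent = (rng.random((10, 6)) > 0.5).astype(float)
persistent = gibbs_chain(persistent, W, b_vis, b_hid, k=1, rng=rng)
grad_pcd = weight_gradient(batch, persistent, W, b_hid)

print(grad_cd.shape, grad_pcd.shape)  # (6, 3) (6, 3)
```

The only difference between the two strategies in this sketch is where the chain starts: at the current batch for CD-k, or at wherever the previous update left it for the persistent variant.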

Different Features

Key features of DBNs include:

- Layer-Wise Training: Each layer is trained individually on the output of the layer below it, which gives the next layer a good starting point for hierarchical learning.

- Generative Capability: DBNs can reconstruct inputs, making them suitable for tasks requiring data generation.

- Scalability: Because pretraining is unsupervised, DBNs can make use of large unlabelled datasets, and training one layer at a time keeps each stage manageable.
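The generative capability can be illustrated by passing an input up through the stack and back down again. The sketch below is a simplification: it uses deterministic probabilities on the way down rather than sampling, the weights are random stand-ins for a trained model, and the `reconstruct` helper is a hypothetical name.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def reconstruct(v, layers):
    """Pass v up through every layer, then back down using the transposed weights."""
    h = v
    for W, _, b_hid in layers:
        h = sigmoid(h @ W + b_hid)
    for W, b_vis, _ in reversed(layers):
        h = sigmoid(h @ W.T + b_vis)
    return h


# Random stand-ins for trained (W, b_vis, b_hid) triples; sizes are illustrative.
rng = np.random.default_rng(1)
sizes = [12, 8, 4]
layers = [
    (0.1 * rng.standard_normal((n_in, n_out)), np.zeros(n_in), np.zeros(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

v = (rng.random((5, 12)) > 0.5).astype(float)
v_recon = reconstruct(v, layers)
error = np.mean((v - v_recon) ** 2)  # mean squared reconstruction error
print(v_recon.shape, float(error))
```

With trained weights, a falling reconstruction error is a common, if rough, sign that the layers are capturing structure in the data.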

Different Software and Tools for DBNs

Popular tools for implementing DBNs include:

- TensorFlow and PyTorch: Provide the building blocks (tensor operations, automatic differentiation, GPU support) for building and training DBNs.

- Keras: Facilitates DBN development with a high-level layer API, which is most useful for the supervised fine-tuning stage.

- DeepLearnToolbox for MATLAB: A dedicated toolbox for deep learning and DBNs.

Industry Application Examples in Australian Governmental Agencies

- CSIRO (Data61):

  - Use Case: Analysing unstructured medical images for early disease detection.

  - Impact: Improved diagnostic accuracy by 15%.

- Australian Bureau of Statistics:

  - Use Case: Modelling population trends using census data.

  - Impact: Enhanced predictive models for policymaking.

- Transport for NSW:

  - Use Case: Time-series analysis of traffic patterns for congestion management.

  - Impact: Reduced peak-hour traffic delays by 10%.