A Brief History: Who Developed It?

The foundation for weight initialization traces back to the pioneers of artificial neural networks. In the early days, Frank Rosenblatt’s Perceptron introduced learnable weights, an early stepping stone toward modern networks. Later, researchers including Geoffrey Hinton, Yann LeCun, Xavier Glorot, and Kaiming He tackled challenges such as vanishing gradients. Their work led to initialization techniques that helped make deep networks trainable.

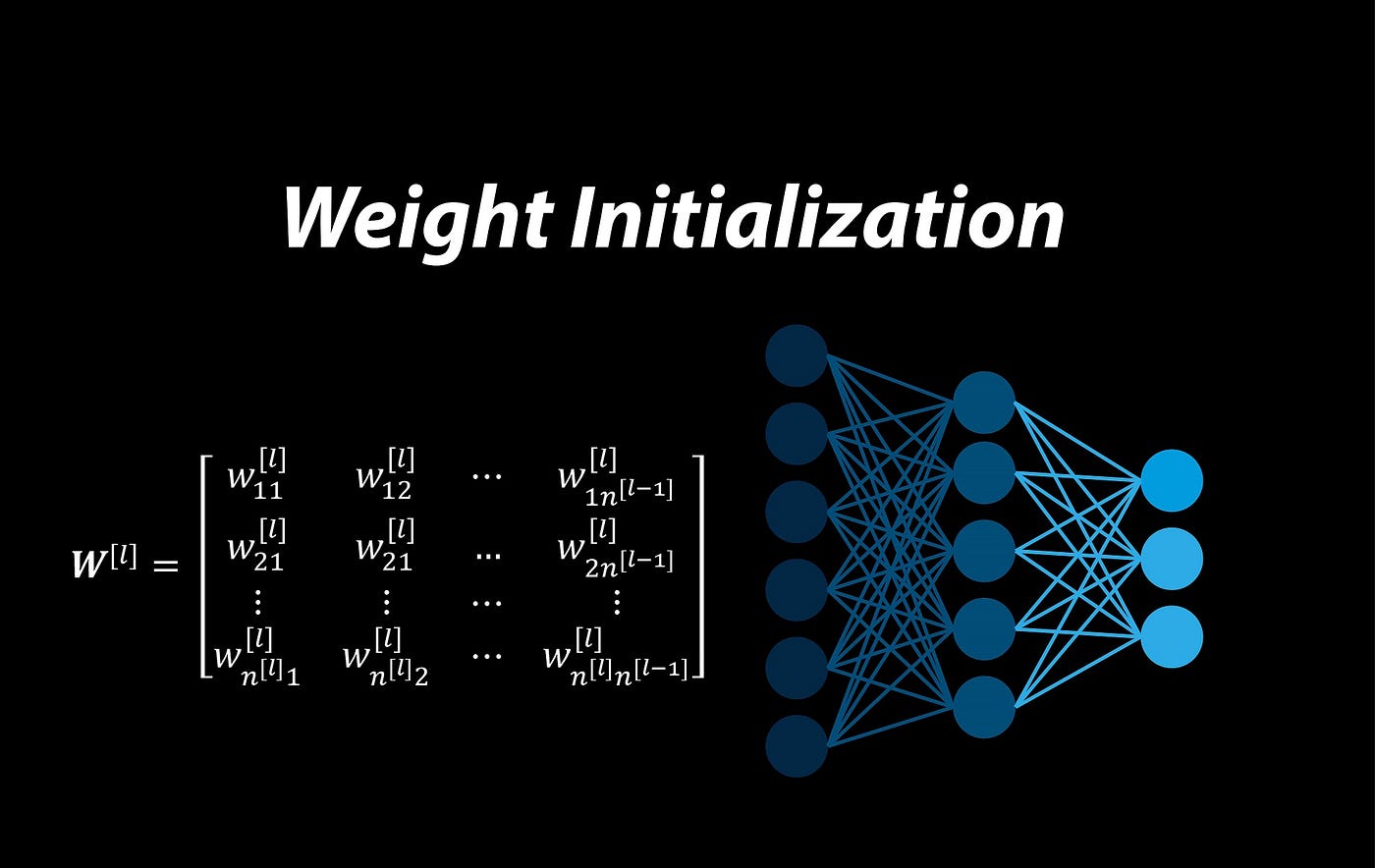

What Is Weight Initialization?

Weight initialization is akin to starting a painting with a well-prepared canvas and balanced brushes. It means assigning values to a neural network’s weights before training begins. These starting values strongly affect how efficiently the network learns and whether it converges to a good solution.

Why Is It Used? What Challenges Does It Address?

Imagine tuning an instrument before a symphony. Properly initialized weights ensure the network’s “instrument” is ready to play its best tune. Without proper initialization, training can become unstable, stall, or fail altogether.

Purpose of Weight Initialization:

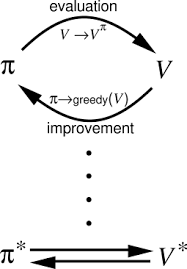

- Speeds up convergence.

- Reduces the risk of vanishing and exploding gradients.

- Improves training stability and, often, final accuracy.

Challenges Solved:

- Vanishing Gradients: Keeps gradients from shrinking to near zero, particularly in deep networks.

- Exploding Gradients: Prevents gradients from growing so large that they destabilize training.

- Slow Convergence: Helps the optimizer reach a good solution in fewer training steps.
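The sketch below makes the vanishing and exploding problems concrete. It is a minimal Python example using only the standard library. It pushes a random input through a deep stack of purely linear layers and reports how large the activations are at the end. The helper name forward_std, the depth of 30, and the width of 64 are illustrative assumptions. The sketch tracks forward activations. In a linear stack like this, backpropagated gradients pass through the same matrices (transposed), so they shrink or grow in the same way.

```python
import math
import random
import statistics


def forward_std(weight_std, depth=30, width=64, seed=0):
    """Push one random input through `depth` purely linear layers whose weights
    are drawn from N(0, weight_std**2); return the std of the final activations.
    No activation function is applied, to isolate the effect of weight scale."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        # A fresh width x width weight matrix for every layer
        w = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        # Matrix-vector product: each output is a weighted sum of all inputs
        x = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]
    return statistics.pstdev(x)


for std in (0.01, 1 / math.sqrt(64), 1.0):
    print(f"weight std {std:.3f}: activation std after 30 layers = {forward_std(std):.3e}")
```

**Weight std of 0.01.** Each layer multiplies the signal's scale by roughly 0.01 × √64 = 0.08, so the activations all but vanish.

**Weight std of 1.0.** The per-layer factor is roughly 8, so the activations explode.

**Weight std of 1/√64.** The per-layer factor stays near 1, which is the idea behind the normalized methods.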

How Is Weight Initialization Used?

Weight initialization strategies are deliberate and structured, much like packing for a hiking trip. You need the right balance of essentials—not too heavy, not too light—to ensure a smooth journey. Well-initialized weights act as a carefully packed backpack, enabling efficient training and seamless progress.

Strategies:

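- Random Initialization: Assigns small random values to weights. This breaks the symmetry between units so that each can learn something different.

- Normalized Methods: Techniques like Xavier Initialization or He Initialization scale the random weights according to each layer’s number of inputs and outputs (its fan-in and fan-out).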
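A short variance argument explains why these methods depend on layer size. It is a simplified sketch under stated assumptions:

- a dense layer has n_in inputs and n_out outputs (symbols introduced here for illustration);
- the weights are independent, zero-mean, and independent of the inputs.

Under these assumptions, the variance of each pre-activation grows with the number of inputs. Choosing Var(w) cancels that growth.

```latex
% One pre-activation of a dense layer with n_in inputs
y_i = \sum_{j=1}^{n_{\text{in}}} w_{ij} x_j
\quad\Rightarrow\quad
\operatorname{Var}(y_i) = n_{\text{in}} \, \operatorname{Var}(w) \, \mathbb{E}[x^2]

% Zero-mean inputs: E[x^2] = Var(x), so Var(y) = Var(x) requires
\operatorname{Var}(w) = \frac{1}{n_{\text{in}}}

% Xavier/Glorot: compromise between the forward (n_in) and backward (n_out) conditions
\operatorname{Var}(w) = \frac{2}{n_{\text{in}} + n_{\text{out}}}

% He/Kaiming: x = max(0, z) with z zero-mean and symmetric, so E[x^2] = Var(z)/2;
% keeping Var(y) = Var(z) then requires
\operatorname{Var}(w) = \frac{2}{n_{\text{in}}}
```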

Metaphor: Picture a tightrope walker setting off across a high wire. The pole they carry for balance is like weight initialization—it distributes weight evenly to prevent tipping over (exploding gradients) or losing momentum (vanishing gradients).

Different Types of Weight Initialization

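- Zero Initialization: Sets all weights to zero. Every unit in a layer then computes the same output and receives the same gradient update. The units never diverge from one another, so the network learns ineffectively.

- Random Initialization: Uses small random values with a fixed scale. Because that scale ignores layer size, signals can shrink or grow from layer to layer, so it may not suit deeper networks.

- Xavier (Glorot) Initialization: Draws weights with variance 2/(n_in + n_out), where n_in and n_out are the layer’s input and output counts. This balances variance across layers for smoother learning. It was designed with tanh and sigmoid activations in mind.

- He (Kaiming) Initialization: Draws weights with variance 2/n_in. This compensates for ReLU zeroing out roughly half of its inputs, which makes it well suited to deep ReLU-based networks.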
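The four schemes above can be sketched in plain Python using only the standard library. The function names are hypothetical and are not any library's API.

Each function returns a weight matrix as a list of rows, with one row per output unit and one column per input. This layout is an assumption chosen for illustration. Xavier is shown in its uniform form and He in its normal form, though both are also commonly used in the other variant.

```python
import math
import random

# Hypothetical helpers. Weight matrices are lists of rows: fan_out rows
# (one per output unit), each with fan_in columns (one per input).
# Pass random.Random(seed) as `rng` for reproducible draws; the default
# is the module-level generator.


def zero_init(fan_in, fan_out):
    """Zero initialization: every weight is 0.0, so all units start identical."""
    return [[0.0] * fan_in for _ in range(fan_out)]


def random_init(fan_in, fan_out, std=0.01, rng=random):
    """Small random Gaussian weights with a fixed std that ignores layer size."""
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def xavier_uniform_init(fan_in, fan_out, rng=random):
    """Xavier/Glorot uniform: U(-a, a) with a = sqrt(6 / (fan_in + fan_out)).
    U(-a, a) has variance a**2 / 3 = 2 / (fan_in + fan_out)."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


def he_normal_init(fan_in, fan_out, rng=random):
    """He/Kaiming normal: N(0, 2 / fan_in), intended for ReLU layers."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


# Usage: the sample variance of a He-initialized matrix should sit near 2 / fan_in
rng = random.Random(0)
w = he_normal_init(256, 128, rng=rng)
flat = [v for row in w for v in row]
sample_var = sum(v * v for v in flat) / len(flat)
print(f"He normal: sample variance {sample_var:.5f}, target {2 / 256:.5f}")
```

zero_init returns identical rows. That identical starting point is exactly the symmetry the random schemes break.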

Features of Effective Weight Initialization

- Adaptability: Scales with each layer’s size and suits the activation function in use.

- Efficiency: Reduces the number of training iterations needed to converge, speeding up learning.

- Stability: Prevents gradients from becoming unstable during backpropagation.
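The stability point can be illustrated by comparing Xavier and He scaling in a deep stack of ReLU layers. This is a minimal plain-Python sketch under assumed settings: 30 layers of width 64, no biases, and a Gaussian input. The helper relu_rms_after is hypothetical, not a library function.

```python
import math
import random


def relu_rms_after(depth, width, weight_std, seed=0):
    """Root-mean-square of the activations after `depth` dense ReLU layers
    (no biases) whose weights are drawn from N(0, weight_std**2)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        # Dense layer followed by ReLU
        x = [max(0.0, sum(w_ij * x_j for w_ij, x_j in zip(row, x))) for row in w]
    return math.sqrt(sum(v * v for v in x) / width)


width, depth = 64, 30
xavier_std = math.sqrt(2.0 / (width + width))  # Var(w) = 2 / (fan_in + fan_out)
he_std = math.sqrt(2.0 / width)                # Var(w) = 2 / fan_in

print(f"Xavier: RMS after {depth} ReLU layers = {relu_rms_after(depth, width, xavier_std):.3e}")
print(f"He:     RMS after {depth} ReLU layers = {relu_rms_after(depth, width, he_std):.3e}")
```

With Xavier scaling, each ReLU layer roughly halves the mean squared activation. The RMS therefore falls by about √2 per layer and ends up several orders of magnitude smaller than it started. He scaling doubles the weight variance to compensate, keeping the RMS near its starting scale. Both runs use the same seed, so the comparison is like for like.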

Tools and Software Supporting Weight Initialization

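- TensorFlow: Offers initializers for common methods in tf.keras.initializers, such as GlorotUniform and HeNormal.

- PyTorch: Includes built-in functions in torch.nn.init, such as xavier_uniform_ and kaiming_normal_.

- Keras: Lets you choose an initializer per layer through the kernel_initializer argument, for example kernel_initializer="he_normal".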

Industry Applications in Australian Government

- Healthcare Analytics: Good weight initialization helps diagnostic models train quickly and reliably.

- Transport Systems: Supports stable training of models for traffic management and predictive planning.

- Climate Monitoring: Helps models that analyze environmental data train efficiently.
