A Brief History of Adversarial Training: Who Developed It?

Adversarial training was developed to address the vulnerability of machine learning models to adversarial attacks. The concept gained prominence in 2014, when Ian Goodfellow, Jonathon Shlens, and Christian Szegedy introduced the fast gradient sign method (FGSM) and proposed training models on the adversarial examples it generates. This work laid the groundwork for resilient AI systems capable of withstanding manipulative inputs.

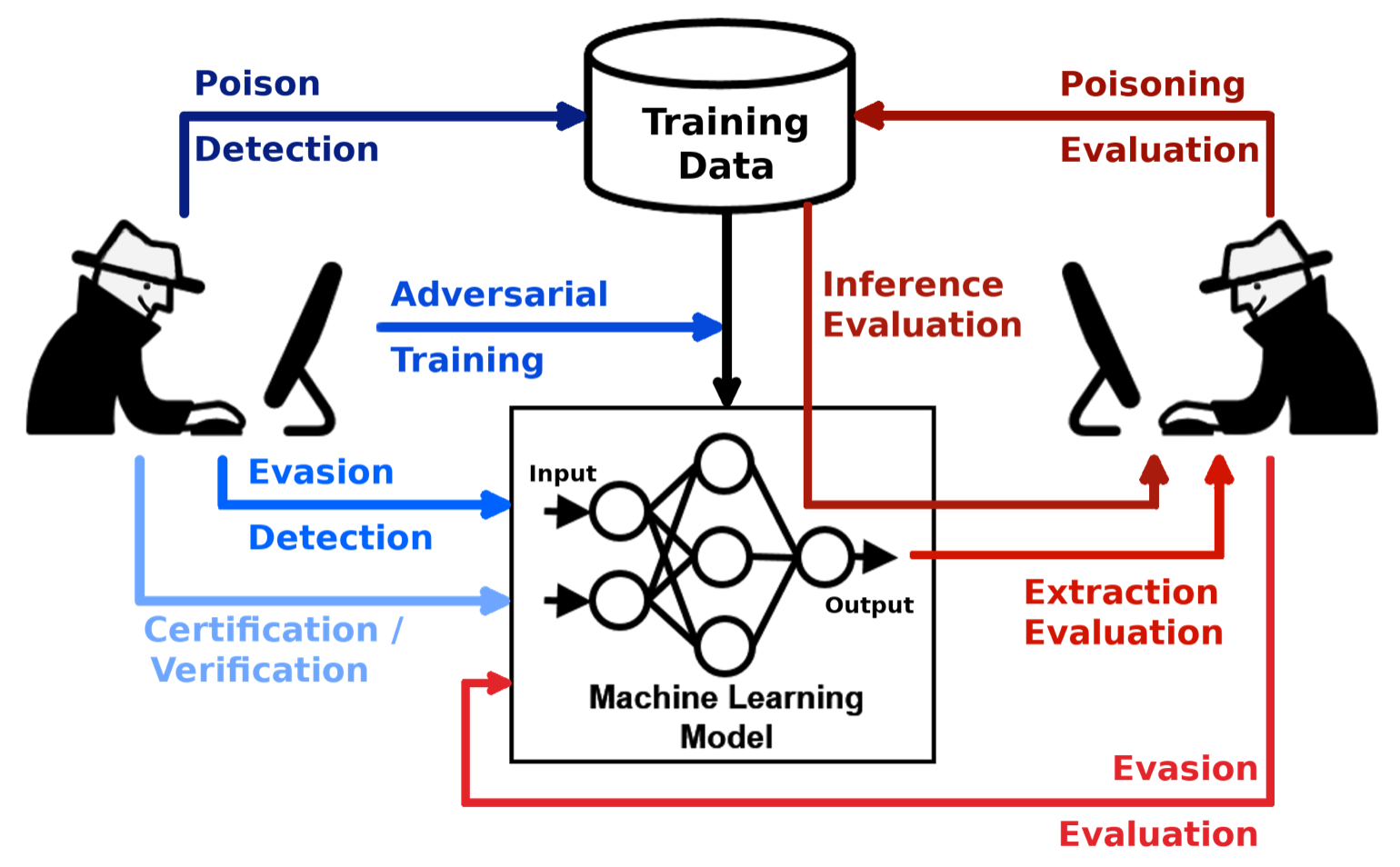

What Is Adversarial Training?

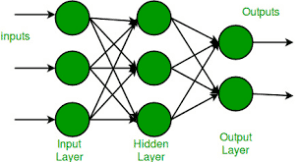

Adversarial training can be compared to a sparring session between a boxer and their coach. The coach deliberately tests the boxer with challenging punches, forcing them to anticipate and defend effectively. Similarly, in adversarial training, machine learning models are exposed to adversarial examples: inputs intentionally crafted to exploit model weaknesses, often through perturbations too small for a person to notice. Over time, the models learn to resist these attacks, which improves their overall robustness.
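To make the idea of an adversarial example concrete, here is a minimal sketch of the fast gradient sign method (FGSM) applied to a small logistic-regression model. The weights, input, and perturbation budget `eps` are hypothetical values chosen only for illustration, and a plain NumPy model stands in for whatever network would be attacked in practice.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """One-step FGSM against a logistic-regression model.

    x: (n, d) inputs, y: (n,) labels in {0, 1}, eps: L-infinity budget.
    """
    p = sigmoid(x @ w + b)
    # Gradient of the cross-entropy loss with respect to the input x.
    grad_x = (p - y)[:, None] * w[None, :]
    # Move every feature by eps in the direction that increases the loss.
    return x + eps * np.sign(grad_x)

# Hypothetical toy model and input, for illustration only.
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([[0.3, 0.1]])
y = np.array([1.0])

x_adv = fgsm(x, y, w, b, eps=0.3)
clean_score = sigmoid(x @ w + b)[0]
adv_score = sigmoid(x_adv @ w + b)[0]
print(f"clean p(y=1) = {clean_score:.3f}, adversarial p(y=1) = {adv_score:.3f}")
```

In this toy case, a shift of at most 0.3 per feature is enough to push the predicted probability for the true class below 0.5, so the model's decision flips even though the input has barely moved.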

Why Is Adversarial Training Being Used? What Challenges Are Being Addressed?

Why Use Adversarial Training?

- Enhancing Security: Safeguards AI systems from exploitation, such as deceiving facial recognition software.

- Improving Robustness: Prepares models to perform reliably even in adversarial environments.

- Boosting Trust: Ensures AI solutions can be deployed in sensitive areas like healthcare, finance, and autonomous systems.

Challenges Addressed

- Adversarial Vulnerabilities: Prevents malicious actors from manipulating model predictions.

- System Integrity: Strengthens models to ensure consistent and accurate performance under hostile conditions.

How Is It Being Used?

The adversarial training process involves:

- Generating Adversarial Examples: Creating inputs designed to expose weaknesses in the model.

- Incorporating Examples into Training: Adding these adversarial examples to the training dataset to improve model resilience.

- Iterative Learning: Continuously refining the model through repeated exposure to adversarial attacks.
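The three steps above can be sketched as a short training loop. This is an illustrative outline rather than a production recipe: it assumes a logistic-regression model, FGSM as the attack, and synthetic two-feature data, and the names `train`, `fgsm`, and `accuracy` are hypothetical helpers defined here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """One-step FGSM against a logistic-regression model."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad_x)

def accuracy(x, y, w, b):
    return float(np.mean((sigmoid(x @ w + b) > 0.5) == (y > 0.5)))

def train(x, y, eps, adversarial, epochs=300, lr=0.5):
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        # Step 1: generate adversarial examples against the current model.
        x_adv = fgsm(x, y, w, b, eps) if adversarial else x
        # Step 2: train on clean and adversarial inputs together.
        x_batch = np.concatenate([x, x_adv])
        y_batch = np.concatenate([y, y])
        p = sigmoid(x_batch @ w + b)
        grad_w = x_batch.T @ (p - y_batch) / len(y_batch)
        grad_b = np.mean(p - y_batch)
        w = w - lr * grad_w
        b = b - lr * grad_b
        # Step 3: repeat, so each round of attacks targets the updated weights.
    return w, b

# Synthetic data (an assumption): two noisy features that both track the label.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200).astype(float)
x = (2 * y - 1)[:, None] * np.array([1.0, 0.3]) + rng.normal(0.0, 0.5, size=(200, 2))
eps = 0.3

for name, adversarial in [("standard", False), ("adversarial", True)]:
    w, b = train(x, y, eps, adversarial)
    clean = accuracy(x, y, w, b)
    attacked = accuracy(fgsm(x, y, w, b, eps), y, w, b)
    print(f"{name:12s} clean={clean:.2f} under FGSM={attacked:.2f}")
```

The printed figures depend on the data, the seed, and the attack budget, so they are best read as a way to compare the two training modes rather than as benchmark results.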

Common Applications

- Financial Security: Fortifying fraud detection systems.

- Healthcare AI: Ensuring diagnostic models are accurate and secure.

- Autonomous Systems: Protecting navigation models from deceptive signals.

Different Types of Adversarial Training

- PGD-Based Training: Focuses on defending against strong Projected Gradient Descent attacks.

- Certified Robust Training: Provides guarantees for model performance under specific attack conditions.

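- Hybrid Approaches: Combines adversarial training with other defences such as defensive distillation. Defences that rely mainly on gradient masking are best treated with caution, since they can hide gradients from an attacker without making the model genuinely robust.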
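As a rough illustration of the first two approaches, the sketch below runs an L-infinity PGD attack on a toy logistic-regression model and compares it with an exact robustness certificate. The certificate shown holds only for linear models, where the worst-case margin has a closed form; certified training for deep networks relies on looser bounds that are not covered here. All weights, inputs, and the helper names `pgd` and `certified_margin` are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def pgd(x, y, w, b, eps, step=0.05, iters=20, seed=0):
    """L-infinity PGD against a logistic-regression model."""
    rng = np.random.default_rng(seed)
    # Random start inside the eps-ball around the clean input.
    x_adv = x + rng.uniform(-eps, eps, size=x.shape)
    for _ in range(iters):
        p = sigmoid(x_adv @ w + b)
        grad_x = (p - y)[:, None] * w[None, :]
        # Gradient-sign ascent step on the loss ...
        x_adv = x_adv + step * np.sign(grad_x)
        # ... followed by projection back onto the eps-ball.
        x_adv = np.clip(x_adv, x - eps, x + eps)
    return x_adv

def certified_margin(x, y, w, b, eps):
    """Exact worst-case signed margin of a linear model under L-infinity noise."""
    signed = (2 * y - 1) * (x @ w + b)
    return signed - eps * np.sum(np.abs(w))

# Hypothetical toy model and inputs, for illustration only.
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([[0.3, 0.1], [1.0, -0.5], [-0.8, 0.4]])
y = np.array([1.0, 1.0, 0.0])
eps = 0.3

x_adv = pgd(x, y, w, b, eps)
pgd_margin = (2 * y - 1) * (x_adv @ w + b)
lower_bound = certified_margin(x, y, w, b, eps)

print("margin after PGD:  ", np.round(pgd_margin, 3))
print("certified minimum: ", np.round(lower_bound, 3))
print("certified robust:  ", lower_bound > 0)
```

A positive certified margin means no perturbation within the budget can change the prediction for that point. With these toy values the second and third inputs are certified while the first is not, and PGD finds the worst case exactly because the model is linear.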

Features of Adversarial Training

- Proactive Defence: Teaches models to identify and resist attacks before deployment.

- Scalability: Suitable for various machine learning models and datasets.

- Enhanced Generalisation: Can act as a regulariser and improve performance beyond adversarial scenarios, although robust models often give up some accuracy on clean inputs.

Tools for Adversarial Training

- Adversarial Robustness Toolbox (ART): An open-source Python library, originally developed by IBM, for generating attacks and for training and testing robust models.

- CleverHans: A library for constructing adversarial examples and benchmarking defences against them.

- PyTorch and TensorFlow: Provide the automatic differentiation needed to generate adversarial examples and implement defences; dedicated attack and defence routines come from libraries built on top of them.

Industry Application Examples in Australian Governmental Agencies

Australian Taxation Office (ATO)

- Use Case: Fortifying fraud detection systems against adversarial manipulation.

- Impact: Reduced financial fraud and enhanced public trust in the taxation system.

Department of Home Affairs

- Use Case: Securing facial recognition systems in border control.

- Impact: Improved national security and reliable identity verification.

Australian Institute of Health and Welfare (AIHW)

- Use Case: Protecting healthcare diagnostic tools from adversarial interference.

- Impact: Ensures accurate diagnostics and maintains data integrity.

Official Statistics and Industry Impact

- Global: A 2022 Gartner report revealed that 35% of organisations using AI faced adversarial attacks, highlighting the need for robust defences (Source: Gartner, AI and Machine Learning Security Report, 2022).

- Australia: The Australian Cyber Security Centre (ACSC) reported increasing adversarial attacks in critical sectors like defence and healthcare, underscoring the importance of adversarial training.

References

- Goodfellow, I. J., Shlens, J., & Szegedy, C. (2014). Explaining and Harnessing Adversarial Examples. arXiv:1412.6572.

- Adversarial Robustness Toolbox Documentation.

- Gartner, AI and Machine Learning Security Report, 2022.

- Australian Cyber Security Centre (ACSC): Cyber Threat Reports.
