Imagine you’re baking a cake for a competition: you test the recipe multiple times at home before presenting the final version to the judges. The home trials are like the training set, where you perfect your recipe, while the competition is like the validation set, where the result is judged by someone who has never tasted it before. Similarly, in machine learning, training and validation sets help you check that your AI models perform reliably when faced with new, unseen data.

A Brief History of Training and Validation Sets

The practice of splitting data into training and validation sets stems from statistical methods developed in the 20th century. As machine learning algorithms advanced during the 1980s and 1990s, this approach became essential for checking that models were accurate and generalized across different datasets.

What Are Training and Validation Sets?

- Training Set: The portion of the data used to teach the model. It’s like testing your cake recipe repeatedly to refine it.

- Validation Set: A separate portion of the data, held back from training, used to evaluate the model’s performance on unseen examples. This shows whether the model generalizes to new data rather than memorizing the training data.

Together, they form the backbone of machine learning workflows, addressing problems like overfitting (too tailored to training data) and underfitting (failing to learn patterns).
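To make overfitting concrete, here is a minimal sketch using scikit-learn. The synthetic dataset, the noise level, and the choice of decision trees are all assumptions made for illustration, not part of any particular workflow. It compares an unconstrained tree with a depth-limited one on both sets.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with deliberately noisy labels (illustrative assumption).
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, flip_y=0.2, random_state=0
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# An unconstrained tree can keep splitting until it memorizes the training set.
deep_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
# A depth-limited tree is forced to learn coarser patterns.
shallow_tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)

deep_train_acc = deep_tree.score(X_train, y_train)
deep_val_acc = deep_tree.score(X_val, y_val)
shallow_train_acc = shallow_tree.score(X_train, y_train)
shallow_val_acc = shallow_tree.score(X_val, y_val)

print(f"deep tree:    train={deep_train_acc:.2f}  validation={deep_val_acc:.2f}")
print(f"shallow tree: train={shallow_train_acc:.2f}  validation={shallow_val_acc:.2f}")
```

A large gap between training and validation accuracy, as the deep tree tends to show here, is the usual symptom of overfitting. Low accuracy on both sets would point to underfitting instead.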

Why Are Training and Validation Sets Used?

These sets address critical challenges in data science and AI development:

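- Improving Generalization: Validating on unseen data shows whether the model works well beyond the training set, so weak models can be caught and corrected.

- Measuring Model Performance: Validation estimates the model’s accuracy on data it was not fitted to. If the same validation set is also used to tune the model, that estimate becomes optimistic, which is why a final held-out test set is often kept as well.

- Reducing Bias: A properly made split, with no overlap or leakage between the two sets, reduces the risk of a misleadingly optimistic evaluation.

For instance, in fraud detection, an improperly validated model could flag legitimate transactions as fraudulent, leading to customer dissatisfaction and financial loss.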

How Are Training and Validation Sets Used?

The process typically involves these steps:

- Splitting Data: Most commonly, 70-80% of data is used for training and 20-30% for validation.

- Training the Model: The model learns patterns and relationships from the training data.

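- Validating the Model: The validation set is used to score the model’s predictions and to guide adjustments to hyperparameters (settings chosen before training, such as the learning rate or tree depth).

Cross-validation techniques like K-Fold Cross-Validation are also popular, giving a more robust evaluation by rotating which part of the data serves as the validation set.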
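The three steps above can be sketched with scikit-learn's `train_test_split()`. This is a minimal illustration under stated assumptions: the Iris dataset, the logistic regression model, and the candidate values of `C` are picked only for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# Step 1: split the data, 80% for training and 20% for validation.
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Steps 2 and 3: train one model per candidate setting, then score each
# on the validation set, which the model never saw during fitting.
val_scores = {}
for C in (0.01, 0.1, 1.0, 10.0):  # hypothetical candidate values
    model = LogisticRegression(C=C, max_iter=1000)
    model.fit(X_train, y_train)
    val_scores[C] = model.score(X_val, y_val)

# Keep the setting with the highest validation accuracy.
best_C = max(val_scores, key=val_scores.get)
print(f"validation accuracy per C: {val_scores}")
print(f"selected C: {best_C}")
```

Because the validation set influenced the choice of `C`, its score for the selected model is no longer a fully independent estimate. A further held-out test set would be needed for that.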

Types of Validation Techniques

- Hold-Out Validation: A single split between training and validation sets.

- K-Fold Cross-Validation: Rotates validation and training data across multiple folds for comprehensive testing.

- Stratified Sampling: Keeps the proportion of each class label consistent across the training and validation sets, which matters most when some classes are rare.
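As a rough sketch of how the rotating and stratified techniques differ, the example below uses scikit-learn's `KFold` and `StratifiedKFold` on a toy imbalanced dataset. The 80/20 class ratio and the five folds are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Toy imbalanced labels: 80 samples of class 0 and 20 of class 1.
y = np.array([0] * 80 + [1] * 20)
X = np.arange(100).reshape(-1, 1)

# K-Fold: each sample lands in the validation fold exactly once.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
val_indices = [val_idx for _, val_idx in kfold.split(X)]
kfold_minority = [int(y[idx].sum()) for idx in val_indices]

# Stratified K-Fold: every fold keeps the overall 80/20 class ratio.
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
stratified_minority = [int(y[val_idx].sum()) for _, val_idx in skf.split(X, y)]

print("minority samples per fold (plain K-Fold):     ", kfold_minority)
print("minority samples per fold (stratified K-Fold):", stratified_minority)
```

With plain K-Fold the number of minority samples per fold can vary, while the stratified version spreads them evenly across the folds.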

Categories of Training and Validation

- Supervised Learning: Training involves labelled data, and validation checks prediction accuracy.

- Unsupervised Learning: Training uncovers patterns, and validation evaluates clustering or grouping quality.
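For the unsupervised case there are no labels to check predictions against, so clustering quality is usually measured with an internal metric. The sketch below uses the silhouette score as one such measure. The synthetic blobs, the use of K-Means, and the range of cluster counts are all assumptions made for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic unlabeled data; the true labels are discarded on purpose.
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)

# Fit K-Means for several candidate cluster counts and score each grouping.
scores = {}
for k in (2, 3, 4, 5):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
    # Silhouette ranges from -1 to 1; higher means tighter, better separated clusters.
    scores[k] = silhouette_score(X, labels)

best_k = max(scores, key=scores.get)
print({k: round(float(s), 3) for k, s in scores.items()})
print(f"cluster count with the highest silhouette score: {best_k}")
```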

Software and Tools for Training and Validation

Here are popular tools used in data pre-processing and machine learning workflows:

- Python Libraries:

  - Scikit-learn: Offers train_test_split() and cross-validation methods.

  - Pandas: Useful for data preparation and splitting.

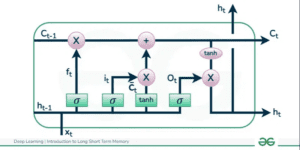

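- Deep Learning Frameworks:

  - TensorFlow/Keras: Built-in utilities for splitting and validation, such as the validation_split argument of model.fit().

  - PyTorch: Data loaders and validation support, including random_split() in torch.utils.data.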

- R Programming: Functions like sample.split() (from the caTools package) for data partitioning in statistical computing.
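As one example of splitting with Pandas alone, the sketch below draws a random 80% of the rows for training and keeps the remainder for validation. The tiny DataFrame and its column names are hypothetical, made up purely for illustration.

```python
import pandas as pd

# Hypothetical toy dataset with one feature column and one label column.
df = pd.DataFrame({"feature": range(10), "label": [0, 1] * 5})

# Randomly sample 80% of the rows for training (fixed seed for repeatability).
train_df = df.sample(frac=0.8, random_state=42)

# Everything not sampled becomes the validation set.
val_df = df.drop(train_df.index)

print(f"training rows: {len(train_df)}, validation rows: {len(val_df)}")
```

Dropping by index guarantees the two sets do not overlap, provided the DataFrame's index values are unique.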

Industry Applications in Australian Governmental Agencies

- Disease Prediction Models: The Australian Institute of Health and Welfare uses training and validation sets to ensure accuracy in disease outbreak forecasts.

- Satellite Data Analysis: Geoscience Australia applies these techniques for resource management and environmental monitoring.

- Traffic Flow Prediction: Transport for NSW trains predictive models for traffic optimization, validating them on unseen data to improve public transport efficiency.
