Origins of the Multilayer Perceptron (MLP)

The Multilayer Perceptron (MLP) grew out of the single-layer perceptron introduced by Frank Rosenblatt in 1958. Its potential was realised in the 1980s, when David Rumelhart, Geoffrey Hinton, and Ronald Williams popularised backpropagation, an algorithm that made it practical to train networks with hidden layers on non-linear problems and set the stage for modern machine learning.

Understanding the Multilayer Perceptron (MLP)

Imagine a Multilayer Perceptron as a sophisticated factory assembly line. Each worker (neuron) is trained to specialise in processing specific components (patterns). These components are passed along the line through various workstations (layers), each refining the product until the final output is polished and ready for delivery—whether it’s a classification, prediction, or decision.

Why MLPs Are Essential in Machine Learning

MLPs are indispensable in machine learning for these reasons:

- Solving Non-linear Problems: They can learn non-linear decision boundaries, such as XOR, that linear models like the single-layer perceptron cannot represent.

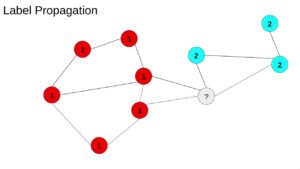

- Automated Feature Extraction: Hidden layers learn intermediate features from the input data during training, reducing the need for hand-crafted features.

- Versatility: From image recognition to natural language processing, MLPs adapt to diverse tasks.

Their multilayer structure allows them to unravel intricate relationships within data, making them a cornerstone of deep learning.
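To make the non-linearity point concrete, here is a minimal sketch of a tiny two-layer network that computes XOR, a function no single-layer perceptron can represent. The weights are hand-picked for illustration rather than learned, and the names `step` and `xor_mlp` are hypothetical.

```python
def step(z):
    """Threshold activation: fires (1) when the weighted sum is positive."""
    return 1 if z > 0 else 0


def xor_mlp(x1, x2):
    """XOR built from two hidden neurons and one output neuron.

    The weights and biases below are chosen by hand (an assumption for
    illustration), not produced by training.
    """
    h_or = step(x1 + x2 - 0.5)    # hidden neuron 1 behaves like OR
    h_and = step(x1 + x2 - 1.5)   # hidden neuron 2 behaves like AND
    # Output fires when OR is true and AND is false, which is XOR.
    return step(h_or - h_and - 0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_mlp(a, b))
```

The hidden layer turns the inputs into two simpler, linearly separable signals (OR and AND), and the output neuron combines them. That intermediate step is what a single layer lacks.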

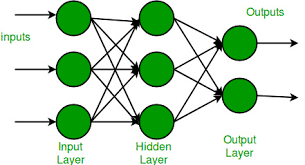

How MLPs Work: A Step-by-Step Overview

MLPs work like this:

- Input Layer: Accepts numerical features of data.

- Hidden Layers: Compute weighted sums of their inputs and apply activation functions to uncover patterns.

- Output Layer: Provides the final prediction or classification.

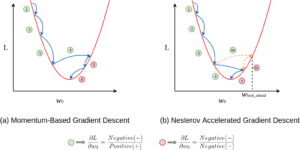

- Backpropagation: During training, propagates the prediction error backwards through the layers and adjusts the weights to minimise it, improving accuracy over repeated passes.
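The steps above can be sketched in a few lines of NumPy. This is an illustrative example under stated assumptions, not a reference implementation: the XOR data, the single `tanh` hidden layer, the cross-entropy loss, and the function name `train_xor` and its default hyperparameters are all choices made here for demonstration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(hidden=4, epochs=2000, lr=0.5, seed=0):
    """Train a one-hidden-layer MLP on XOR with full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    n = X.shape[0]

    # Randomly initialised weights, zero biases.
    W1 = rng.normal(0.0, 1.0, size=(2, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(0.0, 1.0, size=(hidden, 1))
    b2 = np.zeros((1, 1))

    losses = []
    for _ in range(epochs):
        # Forward pass: input layer -> hidden layer -> output layer.
        h = np.tanh(X @ W1 + b1)
        p = sigmoid(h @ W2 + b2)

        # Mean binary cross-entropy; eps guards against log(0).
        eps = 1e-12
        loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        losses.append(float(loss))

        # Backward pass: apply the chain rule from the output back to the input.
        dz2 = (p - y) / n                 # gradient at the output pre-activation
        dW2 = h.T @ dz2
        db2 = dz2.sum(axis=0, keepdims=True)
        dz1 = (dz2 @ W2.T) * (1 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
        dW1 = X.T @ dz1
        db1 = dz1.sum(axis=0, keepdims=True)

        # Gradient descent update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    # Predictions from the trained weights.
    predictions = sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2)
    return losses, predictions


if __name__ == "__main__":
    losses, predictions = train_xor()
    print("first loss:", losses[0], "last loss:", losses[-1])
    print(predictions.round(3).ravel())
```

Each loop iteration is one forward pass followed by one backward pass. The loss recorded at the end of training should be lower than at the start, though the exact values depend on the random seed.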

Types of MLPs: Shallow vs Deep

- Shallow MLPs: With a single hidden layer, these handle moderately complex tasks.

- Deep MLPs: Multiple hidden layers make them ideal for high-dimensional, intricate datasets.
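One way to see the difference between shallow and deep designs is to count trainable parameters. The helper below is a small sketch; `count_parameters` and the layer sizes used are hypothetical examples, and it assumes fully connected layers with one bias per neuron.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected MLP.

    layer_sizes lists the number of units per layer, from input to output,
    for example [4, 16, 3] for 4 inputs, one hidden layer of 16, 3 outputs.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out  # weights connecting the two layers
        total += n_out         # one bias per neuron in the receiving layer
    return total


if __name__ == "__main__":
    shallow = [4, 16, 3]          # one hidden layer
    deep = [4, 16, 16, 16, 3]     # three hidden layers
    print("shallow:", count_parameters(shallow))
    print("deep:", count_parameters(deep))
```

With these example sizes the shallow network has 131 parameters and the deep one has 675, so added depth buys capacity at the cost of more weights to train.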

Key Features of MLPs

Key features of MLPs include:

- Hidden Layers: Facilitate non-linear problem-solving.

- Activation Functions: Transform inputs non-linearly, allowing complex pattern detection.

- Backpropagation: A powerful learning algorithm that fine-tunes the model iteratively.
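The role of activation functions can be illustrated with a short sketch. It defines three common activations and then shows, using single-neuron layers with made-up weights, that two stacked layers without an activation collapse into one linear layer, while inserting a ReLU between them does not. All function names and values here are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def tanh(z):
    return math.tanh(z)


def two_linear_layers(x, w1, b1, w2, b2):
    """Two stacked single-neuron layers with no activation in between."""
    return w2 * (w1 * x + b1) + b2


def collapsed_layer(x, w1, b1, w2, b2):
    """The single linear layer that the two layers above reduce to."""
    return (w2 * w1) * x + (w2 * b1 + b2)


def two_layers_with_relu(x, w1, b1, w2, b2):
    """Same two layers, with a ReLU applied to the hidden value."""
    return w2 * relu(w1 * x + b1) + b2


if __name__ == "__main__":
    params = (2.0, 1.0, 3.0, -4.0)  # w1, b1, w2, b2 (arbitrary values)
    for x in (-2.0, 0.0, 3.0):
        print(x,
              two_linear_layers(x, *params),
              collapsed_layer(x, *params),
              two_layers_with_relu(x, *params))
```

For negative hidden values the ReLU version departs from the linear one, which is the non-linearity that lets hidden layers model more than a straight line.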

Top Tools for Implementing MLPs

Popular tools for implementing MLPs:

- TensorFlow: A robust, scalable framework for MLPs.

- PyTorch: Best for dynamic, experimental designs.

- Keras: User-friendly and great for beginners.

- scikit-learn: Well suited to foundational MLP implementations on small to medium datasets, through its MLPClassifier and MLPRegressor estimators.
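As a starting point with one of these tools, the sketch below trains a small MLP using scikit-learn's `MLPClassifier`. The synthetic two-moons dataset, the hidden layer size, the solver, and the helper name `fit_moons_mlp` are assumptions chosen for a quick demonstration, not recommended settings.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier


def fit_moons_mlp(seed=0):
    """Fit a one-hidden-layer MLP on a synthetic, non-linearly separable dataset."""
    # Two interleaving half-circles: a simple non-linear classification task.
    X, y = make_moons(n_samples=400, noise=0.2, random_state=seed)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=seed
    )

    model = MLPClassifier(
        hidden_layer_sizes=(16,),  # one hidden layer with 16 neurons
        activation="relu",
        solver="lbfgs",            # tends to work well on small datasets
        max_iter=2000,
        random_state=seed,
    )
    model.fit(X_train, y_train)

    # Mean accuracy on the held-out test split.
    return model, model.score(X_test, y_test)


if __name__ == "__main__":
    model, accuracy = fit_moons_mlp()
    print("test accuracy:", accuracy)
```

Changing `hidden_layer_sizes` to a longer tuple, such as `(16, 16, 16)`, turns the same code into a deeper network.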

Real-World Applications of MLPs in Australia

- Healthcare Analytics: Predicting patient outcomes from multidimensional datasets.

- Education Systems: Forecasting student success and improving educational programs.

- Environmental Planning: Modelling climate scenarios to support sustainable policies.
