A Brief History of This Tool

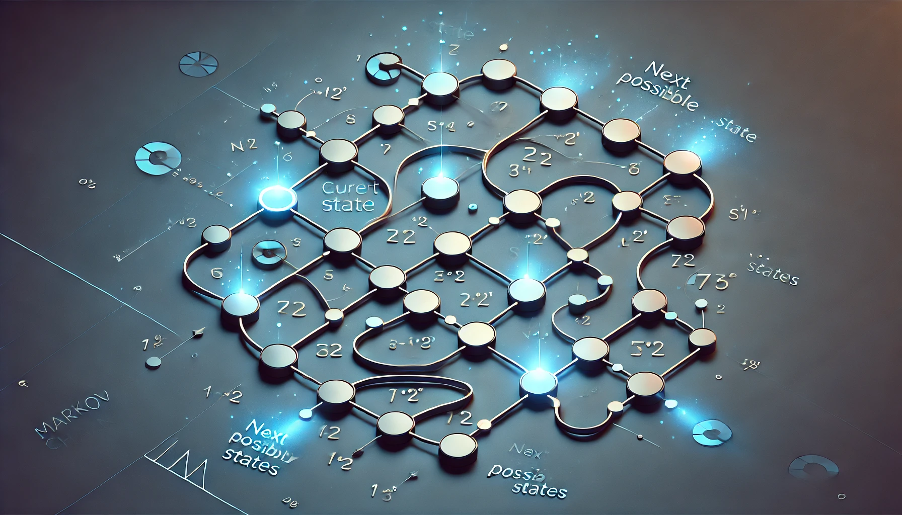

Markov Chains were introduced by the Russian mathematician Andrey Markov in 1906. His work on sequences of random events, in which each outcome depends on the one before it, gave stochastic modeling a framework for analyzing systems that change over time. Today, Markov Chains underpin methods in machine learning, financial analysis, and biological research, where they are used to model and predict sequential behavior.

What Is It?

A Markov Chain is a stochastic process that models transitions between states and satisfies the memoryless (Markov) property: the probability of moving to the next state depends only on the current state, not on the sequence of states that preceded it. Think of a Markov Chain as a walk through a maze: your next step depends only on where you are standing, not on the route you took to get there.
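The memoryless property is easiest to see in a short simulation. The sketch below assumes a hypothetical three-state weather chain with made-up probabilities, and `simulate` is an illustrative helper rather than a library function. At every step the next state is sampled using only the row of the transition matrix that belongs to the current state; earlier states in the path are never consulted.

```python
import numpy as np

# Hypothetical three-state weather chain; the probabilities are illustrative only.
STATES = ["sunny", "cloudy", "rainy"]
P = np.array([
    [0.7, 0.2, 0.1],  # from sunny
    [0.3, 0.4, 0.3],  # from cloudy
    [0.2, 0.4, 0.4],  # from rainy
])

def simulate(P, start, n_steps, seed=0):
    """Return a list of n_steps + 1 state indices, beginning with `start`."""
    rng = np.random.default_rng(seed)
    path = [start]
    for _ in range(n_steps):
        current = path[-1]
        # Memoryless: the next state is drawn from the current state's row only.
        path.append(int(rng.choice(len(P), p=P[current])))
    return path

path = simulate(P, start=0, n_steps=10)
print([STATES[i] for i in path])
```

Fixing the seed makes a run reproducible; changing it produces a different sample path from the same chain.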

Why Is It Used? What Challenges Does It Address?

Markov Chains simplify the analysis of complex systems and address key challenges like:

- Modeling Dynamic Processes: Analyze behaviors that evolve over time, such as consumer choices.

- Sequential Predictions: Forecast the next state from current conditions alone, without storing or modeling the full history.

- Reducing Complexity: Streamline probabilistic models for systems with many potential outcomes.

How Is It Used?

- Define States: Identify all possible states in the system.

- Set Transition Probabilities: Assign probabilities to each state transition.

- Construct the Transition Matrix: Arrange the probabilities in a square matrix P, where entry P[i][j] is the probability of moving from state i to state j; each row sums to 1.

- Analyze Behavior: Raise the matrix to powers to obtain multi-step transition probabilities, compute the long-term (stationary) distribution, or simulate sample paths.
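The four steps above can be sketched with NumPy. The example assumes a hypothetical customer brand-switching model (in the spirit of the consumer-choice use case) with invented probabilities. It builds the matrix from a dictionary of transitions, checks that each row sums to 1, and uses `numpy.linalg.matrix_power` to get the distribution after three steps.

```python
import numpy as np

# Step 1: define the states (hypothetical brands in a customer-switching model).
states = ["Brand A", "Brand B", "Brand C"]
index = {s: i for i, s in enumerate(states)}

# Step 2: assign transition probabilities (illustrative numbers, not real data).
transitions = {
    ("Brand A", "Brand A"): 0.80, ("Brand A", "Brand B"): 0.15, ("Brand A", "Brand C"): 0.05,
    ("Brand B", "Brand A"): 0.20, ("Brand B", "Brand B"): 0.70, ("Brand B", "Brand C"): 0.10,
    ("Brand C", "Brand A"): 0.10, ("Brand C", "Brand B"): 0.20, ("Brand C", "Brand C"): 0.70,
}

# Step 3: build the transition matrix; row i holds the probabilities of leaving state i.
P = np.zeros((len(states), len(states)))
for (src, dst), p in transitions.items():
    P[index[src], index[dst]] = p
assert np.allclose(P.sum(axis=1), 1.0), "each row must sum to 1"

# Step 4: analyze behavior. Multiplying a starting distribution by P^n gives
# the distribution over states after n steps.
start = np.array([1.0, 0.0, 0.0])  # every customer currently buys Brand A
after_3 = start @ np.linalg.matrix_power(P, 3)
print(dict(zip(states, after_3.round(3))))
```

Because the starting vector puts all its mass on Brand A, the result is simply the first row of P³.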

Different Types of Markov Chains

- Discrete-Time Markov Chains: Progress at fixed intervals.

- Continuous-Time Markov Chains: Allow state changes at any moment.

- Absorbing Markov Chains: Contain states that, once entered, cannot be exited.
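For absorbing chains, a standard analysis uses the fundamental matrix N = (I − Q)⁻¹, where Q holds the transitions among non-absorbing (transient) states and R holds the transitions from transient states into absorbing ones. The sketch below assumes a hypothetical "gambler's ruin" walk on states 0 to 4, with 0 and 4 absorbing and a fair coin deciding each step. N · 1 gives the expected number of steps before absorption, and N · R gives the probability of ending in each absorbing state.

```python
import numpy as np

# Hypothetical gambler's-ruin chain on states 0..4: states 0 and 4 are absorbing,
# and from states 1, 2, 3 the chain moves one step up or down with probability 0.5.
# Q: transitions among the transient states 1, 2, 3.
Q = np.array([
    [0.0, 0.5, 0.0],
    [0.5, 0.0, 0.5],
    [0.0, 0.5, 0.0],
])
# R: transitions from transient states into the absorbing states (columns: 0, 4).
R = np.array([
    [0.5, 0.0],
    [0.0, 0.0],
    [0.0, 0.5],
])

# Fundamental matrix: entry (i, j) is the expected number of visits to
# transient state j before absorption, starting from transient state i.
N = np.linalg.inv(np.eye(len(Q)) - Q)

expected_steps = N @ np.ones(len(Q))  # expected steps until absorption
absorption_probs = N @ R              # probability of ending in each absorbing state

print("expected steps from states 1, 2, 3:", expected_steps)
print("P(absorbed at 4) from states 1, 2, 3:", absorption_probs[:, 1])
```

For this symmetric walk the results match the known closed forms: starting from state i, the expected time to absorption is i(4 − i) steps and the probability of reaching state 4 is i/4.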

Different Features of Markov Chains

- Memoryless Property: Future states depend only on the current state.

- Stationary Distribution: A probability vector π that satisfies πP = π; for an irreducible, aperiodic chain, the state probabilities converge to π regardless of the starting state.

- Flexibility: Adapts to applications in logistics, genomics, and decision science.
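One way to compute a stationary distribution is to solve the balance equations πP = π together with the constraint that the entries of π sum to 1. The sketch below does this with a least-squares solve; `stationary_distribution` is a hypothetical helper, the two-state "up/down" chain is invented for illustration, and finding the left eigenvector of P for eigenvalue 1 is an equally valid alternative. It assumes the chain has a unique stationary distribution (for example, an irreducible chain).

```python
import numpy as np

def stationary_distribution(P):
    """Solve pi @ P = pi with sum(pi) = 1, assuming the solution is unique."""
    n = P.shape[0]
    # Stack the balance equations (P^T - I) pi = 0 with the normalization row sum(pi) = 1.
    A = np.vstack([P.T - np.eye(n), np.ones(n)])
    b = np.concatenate([np.zeros(n), [1.0]])
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

# Hypothetical two-state chain ("system up" / "system down"), illustrative numbers.
P = np.array([
    [0.9, 0.1],
    [0.5, 0.5],
])
pi = stationary_distribution(P)
print(pi)

# For an irreducible, aperiodic chain, every row of P^n approaches pi as n grows.
print(np.linalg.matrix_power(P, 50))
```

Here the balance condition 0.1 · π_up = 0.5 · π_down gives π = (5/6, 1/6), so in the long run the system spends about 83% of its time up, whichever state it starts in.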

Different Software and Tools for It

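- Python (NumPy, PyMC): NumPy handles transition-matrix arithmetic and simulation; PyMC (formerly PyMC3) builds Bayesian models that are fitted with Markov chain Monte Carlo sampling.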

- R (markovchain package): Tools for modeling discrete-time Markov Chains.

- MATLAB: The Econometrics Toolbox provides a `dtmc` object for creating, simulating, and analyzing discrete-time Markov Chains.

- TensorFlow Probability: Offers hidden Markov model distributions and Markov chain Monte Carlo samplers that integrate with TensorFlow models.

Three Application Examples from Australian Government Agencies

- Department of Transport: Optimizes transit schedules and passenger flow with Markov Chain modeling.

- Australian Bureau of Statistics: Forecasts demographic trends using state-based migration models.

- Australian Energy Market Operator (AEMO): Uses Markov Chains for energy demand predictions and grid optimization.

How interested are you in uncovering even more about this topic? Our next article dives deeper into [insert next topic], unravelling insights you won’t want to miss. Stay curious and take the next step with us!