The History of Hinge Loss: A Foundation of Machine Learning

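The hinge cost function, also known as hinge loss, grew out of the development of Support Vector Machines (SVMs). Vladimir Vapnik and Alexey Chervonenkis laid the statistical learning theory behind SVMs in the 1960s and 1970s. The soft-margin SVM published by Corinna Cortes and Vladimir Vapnik in 1995 uses an objective equivalent to minimising the hinge loss plus a regularisation term. Designed for binary classification problems, the hinge loss has become a cornerstone of supervised learning and remains widely used in modern machine learning workflows.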

What Is Hinge Loss? Understanding Its Role in Classification

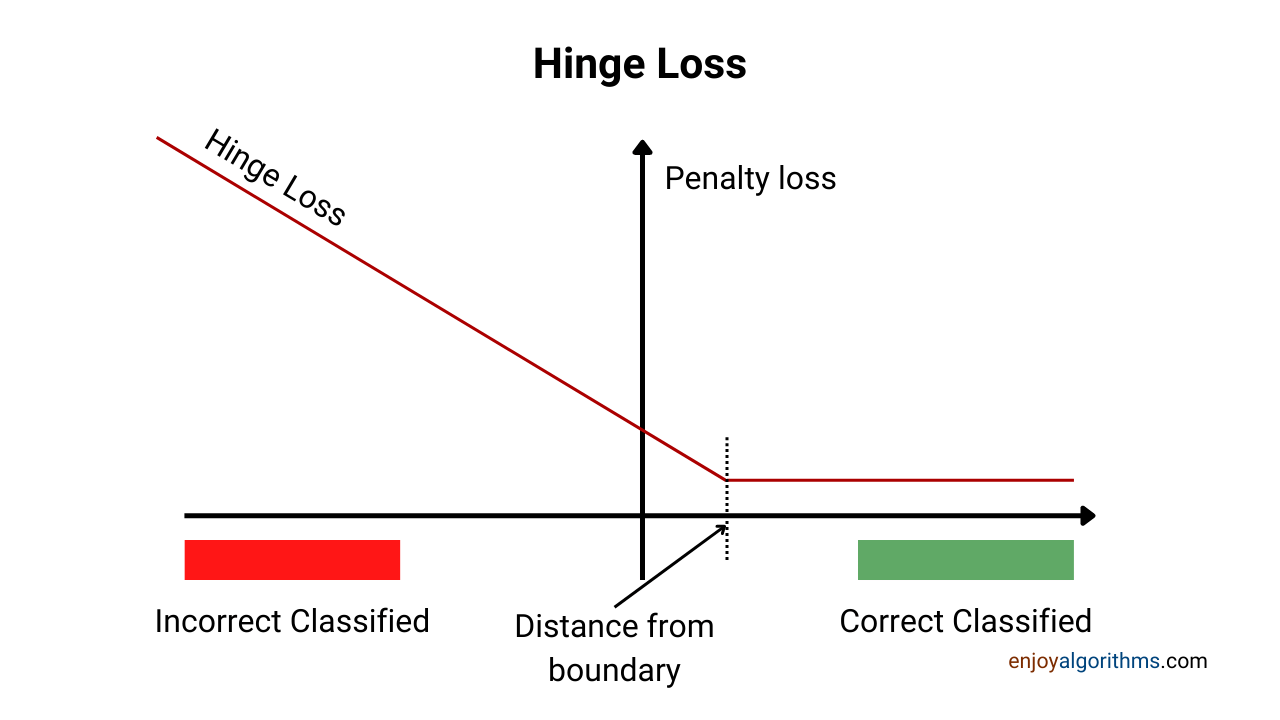

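The hinge cost function evaluates how effectively a model separates data into two classes. Take labels y ∈ {−1, +1} and a raw model score f(x). The loss for one example is then max(0, 1 − y·f(x)). The loss therefore measures prediction confidence, not just correctness:

- Predictions that are correct by a margin of at least 1 incur no loss.
- Predictions that are too close to the decision boundary, or on the wrong side of it, are penalised linearly.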
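The per-example hinge loss is a single max. The sketch below assumes labels are encoded as −1/+1 and that the score is the model's raw output (for a linear model, w·x + b). The function name and the example pairs are illustrative, not taken from any library.

```python
def hinge_loss(y: int, score: float) -> float:
    """Hinge loss for one example.

    y     -- true label, assumed to be encoded as -1 or +1
    score -- the classifier's raw score f(x), e.g. w . x + b for a linear model
    """
    return max(0.0, 1.0 - y * score)


# (label, raw score) pairs covering the regimes described in the text.
examples = [
    (+1, 2.5),   # correct and beyond the margin      -> zero loss
    (-1, -1.0),  # correct and exactly on the margin  -> zero loss
    (+1, 0.4),   # correct but inside the margin      -> small loss
    (+1, -1.0),  # wrong side of the boundary         -> larger loss
]
for y, score in examples:
    print(f"y={y:+d}  f(x)={score:+.1f}  loss={hinge_loss(y, score):.1f}")
```

The four losses come out as 0.0, 0.0, 0.6 and 2.0. Anything at or beyond the margin is free, and the penalty grows linearly as the score moves towards, and then across, the boundary.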

Imagine walking a tightrope: the goal is to stay balanced in the centre (correct classification) and avoid falling off the edge (misclassification). Predictions that sit farther from the boundary on the correct side are safer and more reliable. Once a correct prediction clears the margin, the hinge loss stops penalising it altogether.

Why Use the Hinge Cost Function? Challenges and Solutions

Hinge loss addresses several critical challenges in classification tasks:

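- Maximising Margin: Pushes correctly classified points to lie at least a fixed margin away from the decision boundary. Combined with a penalty on the weights, as in an SVM, this yields the maximum-margin separator.

- Penalising Errors: The penalty grows linearly with how far a prediction falls short of the margin. Misclassified points therefore cost more than correct but low-confidence ones, which drives better class separation.

- Improving Generalisation: Large-margin classifiers tend to perform better on unseen data. Together with regularisation, the margin helps reduce the risk of overfitting.

Simply counting misclassifications (the zero-one loss) ignores margins and is hard to optimise directly. The hinge loss is a convex upper bound on that count, so it gives training a usable signal. This helps classification models separate classes and keep sufficient margins for reliable predictions, including on noisy datasets.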
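To make the contrast with accuracy concrete, the sketch below scores three hypothetical models on the same four labelled examples. The score values are invented purely for illustration. Accuracy only checks the sign of each score, while the mean hinge loss also reflects how far each score clears the margin.

```python
def mean_hinge(labels, scores):
    """Average hinge loss max(0, 1 - y * f(x)) over examples with labels in {-1, +1}."""
    return sum(max(0.0, 1.0 - y * s) for y, s in zip(labels, scores)) / len(labels)


def accuracy(labels, scores):
    """Fraction of examples whose score sign matches the label."""
    return sum((s >= 0) == (y > 0) for y, s in zip(labels, scores)) / len(labels)


labels = [+1, +1, -1, -1]

# Hypothetical model scores (illustrative values only).
tight_margin = [0.4, 0.6, -0.5, -0.5]   # all correct, but close to the boundary
wide_margin = [1.5, 2.0, -1.2, -3.0]    # all correct, every score clears the margin
one_error = [1.5, -2.0, -1.2, -3.0]     # second example is badly misclassified

for name, scores in [("tight", tight_margin), ("wide", wide_margin), ("one error", one_error)]:
    print(f"{name:>9}: accuracy={accuracy(labels, scores):.2f}, "
          f"mean hinge={mean_hinge(labels, scores):.3f}")
```

The tight and wide models are both 100% accurate, yet their mean hinge losses are 0.5 and 0.0 respectively. The model with one confident error is penalised most, at 0.75. This is the margin-seeking, error-penalising behaviour listed above.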

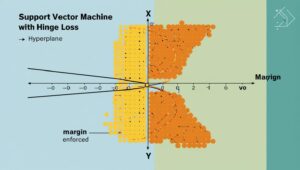

How Hinge Loss Works: From SVMs to Optimisation

Hinge loss plays a critical role in model training, particularly in SVMs:

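- Support Vector Machines (SVMs): Hinge loss is central to SVM optimisation. A soft-margin SVM chooses the hyperplane that minimises the average hinge loss plus an L2 penalty on the weights, trading margin width against margin violations.

- Calculation: For each training point, computes the score y·f(x) and assigns a penalty whenever it falls below 1. For a linear model, this score is proportional to the point's signed distance from the decision boundary.

- Optimisation: Training minimises this convex upper bound on the misclassification rate, which typically improves the model’s overall accuracy. Because the loss has a kink at the margin, solvers use quadratic programming, coordinate descent, or subgradient methods rather than plain gradient descent.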
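The sketch below trains a linear SVM with full-batch subgradient descent on the regularised hinge objective:

(λ/2)‖w‖² + (1/n)Σᵢ max(0, 1 − yᵢ(w·xᵢ + b))

The function names, the step size, λ, the epoch count and the synthetic two-blob data are all illustrative assumptions. Library SVM solvers use more refined algorithms.

```python
import random


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the soft-margin objective
    (lam / 2) * ||w||^2 + mean_i max(0, 1 - y_i * (w . x_i + b)).

    X: list of feature vectors (lists of floats); y: labels in {-1, +1}.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        # Gradient of the L2 penalty; the bias b is left unregularised.
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1.0:
                # Margin violators contribute the subgradient -y_i * x_i (and -y_i for b).
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Sign of the linear score w . x + b, mapped to a label in {-1, +1}."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical toy data: class +1 scattered around (2, 2), class -1 around (-2, -2).
random.seed(0)
X = ([[random.gauss(2, 1), random.gauss(2, 1)] for _ in range(50)]
     + [[random.gauss(-2, 1), random.gauss(-2, 1)] for _ in range(50)])
y = [1] * 50 + [-1] * 50

w, b = train_linear_svm(X, y)
accuracy = sum(predict(w, b, xi) == yi for xi, yi in zip(X, y)) / len(y)
print(f"w = {[round(wj, 3) for wj in w]}, b = {b:.3f}, training accuracy = {accuracy:.2f}")
```

Only points with y·f(x) < 1 feed into each update. Points that already clear the margin have zero hinge loss and no influence beyond the weight penalty.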

Variations of Hinge Loss: Exploring Squared Hinge Loss

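One key variation is the squared hinge loss, max(0, 1 − y·f(x))², which squares the penalty for every margin violation, not only for misclassified predictions. Squaring makes the loss differentiable at the margin, which can make gradient-based optimisation smoother. The trade-off is that large violations are penalised quadratically, so the squared hinge loss is more sensitive to outliers.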
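The sketch below tabulates both losses and their derivatives against the margin m = y·f(x). It shows the smoothness and outlier trade-off described above. The function names are illustrative, and the hinge "gradient" is one valid choice of subgradient.

```python
def hinge(m: float) -> float:
    """Hinge loss as a function of the margin m = y * f(x)."""
    return max(0.0, 1.0 - m)


def squared_hinge(m: float) -> float:
    """Squared hinge loss: the hinge term squared."""
    return max(0.0, 1.0 - m) ** 2


def hinge_grad(m: float) -> float:
    """A subgradient of the hinge loss w.r.t. m; it jumps from -1 to 0 at m = 1."""
    return -1.0 if m < 1.0 else 0.0


def squared_hinge_grad(m: float) -> float:
    """Derivative of the squared hinge loss w.r.t. m; continuous and 0 at m = 1."""
    return -2.0 * max(0.0, 1.0 - m)


print(f"{'margin':>7} {'hinge':>6} {'sq_hinge':>9} {'d_hinge':>8} {'d_sq_hinge':>11}")
for m in [2.0, 1.0, 0.99, 0.5, 0.0, -1.0, -3.0]:
    print(f"{m:>7.2f} {hinge(m):>6.2f} {squared_hinge(m):>9.2f} "
          f"{hinge_grad(m):>8.2f} {squared_hinge_grad(m):>11.2f}")
```

Just inside the margin (m = 0.99), the squared hinge derivative is only −0.02, while the hinge subgradient is still −1. For a badly misclassified point at m = −3, the squared hinge loss is 16 against 4 for the plain hinge, so a few outliers can dominate it.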

Key Features of Hinge Loss in Machine Learning

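Hinge loss is characterised by several notable features:

- Margin Enforcement: Creates a buffer zone between classes to improve separation.

- Linear Compatibility: Integrates naturally with linear classifiers such as linear SVMs, and also carries over to kernel SVMs and neural networks.

- Robustness: The penalty grows only linearly with the size of a violation, so a single outlier has less influence than under a squared loss. The soft margin also tolerates noisy datasets or those with overlapping classes.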

Top Tools Supporting Hinge Loss: Scikit-learn, TensorFlow, and More

Several libraries and tools support the implementation of Hinge loss:

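- Scikit-learn: Implements hinge loss in its SVM classifiers:
  - LinearSVC accepts loss="hinge"; its default is "squared_hinge".
  - SGDClassifier uses "hinge" as its default loss.
  - The sklearn.metrics.hinge_loss metric computes the loss directly.

- TensorFlow and PyTorch: Offer flexibility for custom hinge loss implementations in deep learning models.
  - Keras in TensorFlow provides built-in Hinge and SquaredHinge losses in tf.keras.losses.
  - In PyTorch, binary hinge loss is usually written in one line with torch.clamp, and torch.nn.MultiMarginLoss covers the multi-class case.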

- Platforms: Jupyter Notebooks and Google Colab provide accessible environments for experimentation and visualisation.
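The scikit-learn sketch below assumes a recent scikit-learn installed alongside NumPy. It fits SGDClassifier and LinearSVC with the hinge loss and reports the mean hinge loss of each model's decision_function scores via sklearn.metrics.hinge_loss. The synthetic two-blob dataset and the hyperparameter values are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier
from sklearn.metrics import hinge_loss
from sklearn.svm import LinearSVC

# Synthetic, well-separated binary data with 0/1 labels (illustrative only).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 1.0, size=(100, 2)),
               rng.normal(2.0, 1.0, size=(100, 2))])
y = np.array([0] * 100 + [1] * 100)

# SGDClassifier with loss="hinge" fits a linear SVM by stochastic gradient descent.
sgd = SGDClassifier(loss="hinge", alpha=1e-3, random_state=0).fit(X, y)

# LinearSVC defaults to loss="squared_hinge"; loss="hinge" needs the dual solver.
svc = LinearSVC(loss="hinge", dual=True, C=1.0, max_iter=10_000, random_state=0).fit(X, y)

for name, model in [("SGDClassifier", sgd), ("LinearSVC", svc)]:
    scores = model.decision_function(X)
    # hinge_loss maps the labels {0, 1} to {-1, +1} and averages max(0, 1 - y * score).
    print(f"{name}: mean hinge loss={hinge_loss(y, scores):.3f}, "
          f"training accuracy={model.score(X, y):.3f}")
```

Passing raw decision_function scores, rather than predicted labels, matters here. The hinge loss needs the margin information that the labels throw away.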

Hinge Loss in Action: Applications Across Australian Industries

Hinge-loss classifiers suit binary risk-classification problems such as the following illustrative examples from Australian government sectors:

- Healthcare (Department of Health):

  - Application: Classifying patient risk levels for chronic diseases.

  - Use of Hinge Loss: Encourages a confident separation between high-risk and low-risk groups, which can support timely interventions.

- Environmental Management (Department of Agriculture, Water and the Environment):

  - Application: Identifying bushfire-prone zones based on environmental indicators.

  - Use of Hinge Loss: Separates high-risk regions from low-risk ones with a clear margin, helping to guide resource allocation for safety measures.

- Transportation (Department of Infrastructure, Transport, and Regional Development):

  - Application: Predicting traffic congestion patterns in urban areas.

  - Use of Hinge Loss: Classifies zones as high-traffic or not, supporting proactive road safety planning and resource optimisation.

Conclusion

The hinge cost function is a core component of margin-based machine learning. It rewards confident, correct predictions and penalises margin violations linearly. Combined with regularisation, it underpins maximum-margin classifiers that tend to generalise well. Its simplicity and broad library support make it a practical choice for classification problems in fields like healthcare, environmental management, and transportation.
