Imagine building a toy car: each part (the wheels, the engine, the body) plays a specific role in how well it performs. Similarly, features are the building blocks of a machine learning model: measurable inputs extracted from data that the model uses to make predictions. Understanding features is key to creating powerful and reliable machine learning models.

A Brief History of Features in Machine Learning

The concept of “features” in machine learning has its roots in mid-20th-century statistical modeling, where variables (predictors) were used to describe each observation in a dataset. As machine learning algorithms advanced in the 1980s and 1990s, feature engineering emerged as a critical step for improving model performance. Today, features remain foundational to AI workflows, underpinning predictive models across industries.

What Are Features in Machine Learning?

Features are the individual measurable properties or characteristics of data that serve as inputs for machine learning models. They act as the model’s “ingredients,” influencing how well it learns and generalizes.

For example:

- In predicting house prices, features might include square footage, location, and number of bedrooms.

- In fraud detection, features could include transaction amount, time of transaction, and customer location.

Each feature contributes uniquely to a model’s ability to make predictions, just like ingredients in a recipe determine the final dish’s flavor.
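To make the idea concrete, here is a minimal sketch of how the house-price example might look as tabular data in pandas. The listings, column names, and prices are hypothetical; the point is only that every column except the target is a feature, and the model receives those features as its input matrix.

```python
import pandas as pd

# Hypothetical house listings: every column except "price" is a feature
houses = pd.DataFrame({
    "square_footage": [1400, 2100, 950, 1750],
    "location": ["suburb", "city", "rural", "city"],
    "bedrooms": [3, 4, 2, 3],
    "price": [420_000, 780_000, 260_000, 640_000],
})

# Features (X) are the model's inputs; the target (y) is what it learns to predict
X = houses.drop(columns="price")
y = houses["price"]

print(X.columns.tolist())  # ['square_footage', 'location', 'bedrooms']
print(X.shape)             # (4, 3)
```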

Why Are Features Important?

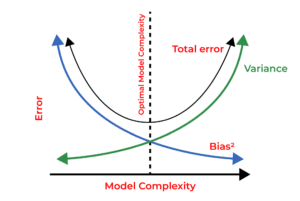

Features are crucial to the performance of machine learning models because they determine what information a model can learn from, and therefore directly influence its accuracy and reliability. High-quality features address several key challenges, including:

- Improving Model Accuracy: Selecting relevant features helps the model make better predictions.

- Reducing Data Noise: Removing irrelevant or redundant features keeps the model from fitting spurious patterns.

- Simplifying Data Complexity: Focusing on essential features reduces computation time and the risk of overfitting.
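The noise-reduction and simplification points can be illustrated with a minimal sketch using scikit-learn's `SelectKBest`. It assumes synthetic data in which only the first two of five columns actually drive the target; the data, the choice of `f_regression` as the scoring function, and `k=2` are illustrative assumptions rather than a general recipe.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression

rng = np.random.default_rng(0)
n = 500

# Synthetic data: two informative columns followed by three pure-noise columns
signal = rng.normal(size=(n, 2))
noise = rng.normal(size=(n, 3))
X = np.hstack([signal, noise])
y = 3 * signal[:, 0] - 2 * signal[:, 1] + rng.normal(scale=0.5, size=n)

# Score each feature with a univariate F-test and keep the two best
selector = SelectKBest(score_func=f_regression, k=2).fit(X, y)
kept = selector.get_support(indices=True)
X_reduced = selector.transform(X)

print(kept)             # [0 1]: the two informative columns
print(X_reduced.shape)  # (500, 2)
```

Univariate scoring is only one selection strategy; it can miss features that are useful only in combination, which model-based methods handle better.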

For example, in customer churn prediction, a well-designed feature like “complaints per month” can significantly improve the model’s predictive power.
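A feature like “complaints per month” is typically derived from raw event logs. The sketch below assumes a hypothetical complaint log (one row per complaint) and a hypothetical table of how many months each customer has been observed; both are invented for illustration.

```python
import pandas as pd

# Hypothetical complaint log: one row per complaint
complaints = pd.DataFrame({"customer_id": ["c1", "c1", "c1", "c2"]})

# Hypothetical observation window: months each customer has been active
months_observed = pd.Series({"c1": 2, "c2": 4, "c3": 6})

# Count complaints per customer; customers with none get 0 instead of disappearing
counts = complaints.groupby("customer_id").size().reindex(months_observed.index, fill_value=0)

# Normalize by tenure so long-standing customers are not penalized for sheer time
complaints_per_month = (counts / months_observed).rename("complaints_per_month")
print(complaints_per_month)
```

Reindexing with `fill_value=0` matters: customers who never complained (here `c3`) would otherwise be missing from the feature entirely rather than receiving a legitimate value of zero.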

How Are Features Used?

Using features effectively involves several steps:

- Feature Selection: Choosing the most relevant variables for the problem.

- Feature Engineering: Transforming raw data into meaningful inputs (e.g., creating “time of day” from date and time).

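- Feature Scaling: Rescaling numerical features to comparable ranges (e.g., standardization or min-max normalization) so that features with large raw values do not dominate scale-sensitive models, such as distance-based or gradient-trained ones.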
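The engineering and scaling steps can be sketched with pandas and scikit-learn. Everything here is hypothetical: the transactions, the column names, and the hour boundaries used for the “time of day” buckets. `StandardScaler` is one common scaling choice (zero mean, unit variance); min-max scaling is another.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical transactions: a raw timestamp plus two numeric columns on very different scales
df = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2024-03-01 08:15", "2024-03-01 13:40", "2024-03-01 19:05", "2024-03-02 23:30",
    ]),
    "amount": [12.5, 230.0, 48.0, 999.0],
    "account_age_days": [30, 1200, 450, 5],
})

# Feature engineering: derive the hour, then bucket it into a coarse "time of day"
df["hour"] = df["timestamp"].dt.hour
df["time_of_day"] = pd.cut(
    df["hour"],
    bins=[0, 6, 12, 18, 24],
    right=False,  # intervals are [0, 6), [6, 12), [12, 18), [18, 24)
    labels=["night", "morning", "afternoon", "evening"],
)

# Feature scaling: standardize numeric columns to zero mean and unit variance
scaler = StandardScaler()
scaled = scaler.fit_transform(df[["amount", "account_age_days"]])

print(df[["hour", "time_of_day"]])
print(scaled.round(2))
```

In a real project the scaler should be fitted on training data only and then applied to validation and test data, so that their statistics do not leak into training.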

In fraud detection, for instance, raw transaction records can be aggregated into “transaction frequency” and “average transaction value,” which can then be combined into a new feature such as “spending consistency.”
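The text does not define “spending consistency,” so the sketch below uses one hypothetical definition: for each customer and day, multiply transaction frequency by average transaction value to get that day's spend, then score how stable daily spend is. The transactions and the formula `1 / (1 + coefficient of variation)` are assumptions for illustration only.

```python
import pandas as pd

# Hypothetical raw transaction records
tx = pd.DataFrame({
    "customer_id": ["A", "A", "A", "B", "B", "B"],
    "date": pd.to_datetime([
        "2024-01-01", "2024-01-01", "2024-01-02",
        "2024-01-01", "2024-01-02", "2024-01-02",
    ]),
    "amount": [10.0, 10.0, 20.0, 5.0, 50.0, 45.0],
})

# Per customer and day: transaction frequency and average transaction value
daily = tx.groupby(["customer_id", "date"])["amount"].agg(frequency="count", avg_value="mean")

# Combine the two aggregates: frequency x average value = that day's total spend
daily["daily_spend"] = daily["frequency"] * daily["avg_value"]

# Hypothetical "spending consistency": 1 / (1 + coefficient of variation of daily spend)
stats = daily.groupby(level="customer_id")["daily_spend"].agg(["mean", "std"])
spending_consistency = 1 / (1 + stats["std"].fillna(0) / stats["mean"])
print(spending_consistency.round(2))
```

Under this definition, a score of 1.0 means identical spend every day and lower values mean more erratic spending, which a fraud model might treat as one risk signal among many.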

Different Types of Features

Features can be categorized as follows:

- Numerical Features: Quantitative values, either continuous (income, temperature, age) or discrete counts (number of bedrooms).

- Categorical Features: Discrete labels like gender, region, or product type.

- Text Features: Extracted from text data, such as word counts or sentiment analysis scores.

- Derived Features: Created from existing variables, like ratios, averages, or time-based aggregations.
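Each feature type usually needs its own conversion into numbers before a model can use it. Here is a minimal pandas sketch on hypothetical customer data: one-hot encoding for a categorical column, a word count as a very simple text feature, and a ratio as a derived feature. Real text pipelines would typically use richer representations such as TF-IDF or embeddings.

```python
import pandas as pd

# Hypothetical customer records mixing several feature types
df = pd.DataFrame({
    "income": [52000, 87000, 61000],                          # numerical
    "region": ["north", "south", "north"],                    # categorical
    "review": ["great service", "slow and rude staff", "ok"], # text
    "debt": [13000, 8700, 30500],                             # numerical, used for a derived feature
})

# Categorical -> one indicator column per category
encoded = pd.get_dummies(df, columns=["region"], prefix="region")

# Text -> a simple numeric feature: number of words in the review
encoded["review_word_count"] = df["review"].str.split().str.len()

# Derived -> ratio of two existing variables
encoded["debt_to_income"] = df["debt"] / df["income"]

# Drop the raw text column now that it has been converted
encoded = encoded.drop(columns="review")
print(encoded)
```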

Categories of Feature Engineering

Feature engineering falls into two main categories:

- Manual Feature Engineering: Data scientists create features using domain knowledge and statistical methods.

- Automated Feature Engineering: Libraries such as Featuretools, and many AutoML platforms, generate candidate features automatically, saving time on repetitive transformations.

Software and Tools for Feature Engineering

Popular tools for feature engineering in machine learning workflows include:

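- Python Libraries:

  - Pandas: For data manipulation and transformation.

  - Scikit-learn: Provides tools for feature selection, scaling, and preprocessing.

  - Featuretools: Automates the generation of complex features from relational and time-based data.

- Deep Learning Frameworks:

  - TensorFlow/Keras and PyTorch: Let neural networks learn feature representations directly from raw inputs during training, and provide preprocessing utilities (such as Keras preprocessing layers) that compute features on the fly.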
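These tools are often combined. As a sketch, assuming a small hypothetical churn dataset, pandas holds the data while a scikit-learn `Pipeline` with a `ColumnTransformer` applies scaling and one-hot encoding before a classifier. Bundling the feature steps with the model means the same transformations run at training and prediction time.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical churn data
df = pd.DataFrame({
    "complaints_per_month": [0.0, 2.5, 0.2, 3.0, 0.1, 1.8],
    "monthly_spend": [80, 35, 120, 20, 95, 40],
    "plan": ["basic", "basic", "premium", "basic", "premium", "basic"],
    "churned": [0, 1, 0, 1, 0, 1],
})
X = df.drop(columns="churned")
y = df["churned"]

# Scale numeric columns and one-hot encode the categorical one
preprocess = ColumnTransformer([
    ("scale", StandardScaler(), ["complaints_per_month", "monthly_spend"]),
    ("onehot", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
])

# Feature preprocessing and model are fitted together as one object
model = Pipeline([("features", preprocess), ("clf", LogisticRegression())])
model.fit(X, y)

# New rows pass through the identical feature transformations before prediction
new_customer = pd.DataFrame({"complaints_per_month": [2.0], "monthly_spend": [30], "plan": ["basic"]})
print(model.predict(new_customer))
```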

Applications in Australian Government Agencies

- Healthcare Modeling: The Australian Institute of Health and Welfare uses engineered features like “age-adjusted mortality rates” to predict disease trends effectively.

- Environmental Monitoring: Geoscience Australia analyzes satellite imagery with features such as “vegetation density” and “distance to water sources” to manage natural resources.

- Traffic Optimization: Transport for NSW uses features like “peak-hour traffic volume” and “road condition index” to improve public transport efficiency and reduce congestion.
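An “age-adjusted mortality rate” is a derived feature typically built with direct standardization: age-specific rates are weighted by a standard population so that regions with different age structures can be compared fairly. The sketch below uses hypothetical deaths, populations, and standard-population weights; it illustrates the general technique, not the Australian Institute of Health and Welfare's actual methodology or data.

```python
import numpy as np

# Hypothetical region, three age groups (e.g., 0-39, 40-64, 65+)
deaths = np.array([10, 50, 200])
population = np.array([10_000, 5_000, 2_000])

# Hypothetical standard population for the same age groups;
# real analyses use an officially published standard
standard_pop = np.array([50_000, 30_000, 20_000])

# Age-specific death rates per 100,000 people
age_specific_rates = deaths * 100_000 / population

# Crude rate ignores age structure entirely
crude_rate = deaths.sum() * 100_000 / population.sum()

# Direct standardization: weight each age-specific rate by the standard population
age_adjusted_rate = (age_specific_rates * standard_pop).sum() / standard_pop.sum()

print(round(crude_rate, 1), round(age_adjusted_rate, 1))
```

Here the crude rate (about 1,529 per 100,000) is lower than the age-adjusted rate (2,350 per 100,000) because the hypothetical region has a smaller share of older people than the standard population.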
