A Brief History of the EM Algorithm

Imagine trying to solve a jigsaw puzzle where some pieces are missing, but you still need to construct the full image. The Expectation-Maximization (EM) Algorithm, named and formalized in 1977 by Arthur Dempster, Nan Laird, and Donald Rubin, is a standard tool for exactly this situation in data analysis. Rooted in earlier probabilistic works that handled special cases, their paper provided a general, systematic framework that has become a mainstay of statistical modeling and machine learning.

What is the EM Algorithm?

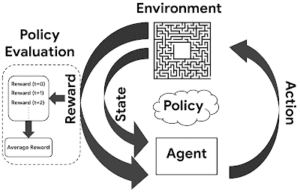

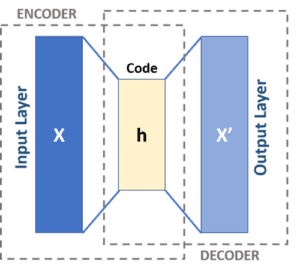

The EM Algorithm is like a meticulous puzzle solver. It alternates between making educated guesses (Expectation or E-Step) about the missing pieces and adjusting the puzzle (Maximization or M-Step) to fit these guesses better. By repeating these steps, it sharpens the overall picture, maximizing the likelihood function in models with unobserved data.
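
In symbols, the two steps are usually written as below. The notation is an assumption introduced here for illustration: $X$ is the observed data, $Z$ the unobserved data (the missing pieces), $\theta$ the model parameters, and $\theta^{(t)}$ the parameter estimate at iteration $t$.

```latex
\begin{aligned}
\text{E-step:}\quad & Q(\theta \mid \theta^{(t)}) = \mathbb{E}_{Z \sim p(\cdot \mid X,\, \theta^{(t)})}\big[\log p(X, Z \mid \theta)\big] \\
\text{M-step:}\quad & \theta^{(t+1)} = \arg\max_{\theta}\; Q(\theta \mid \theta^{(t)})
\end{aligned}
```

Each pass satisfies $\log p(X \mid \theta^{(t+1)}) \ge \log p(X \mid \theta^{(t)})$, so repeating the steps never makes the fit to the observed data worse.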

Why is it Used?

The EM Algorithm thrives in situations where parts of the “puzzle” (data) are missing. It’s the go-to method for:

- Parameter Estimation: Refining models like Gaussian Mixture Models (GMMs) and Hidden Markov Models (HMMs).

- Data Imputation: Filling in gaps in datasets.

- Enhanced Predictions: Improving classification and decision-making through likelihood maximization.

How is it Used?

Think of the EM Algorithm as iteratively zooming in on a blurry image:

- E-Step: Forms a clearer expectation of the hidden data based on the current “resolution” (parameters).

- M-Step: Adjusts the parameters to refine the image further, improving clarity.

This cycle repeats until the parameters stop changing appreciably. At that point the image (model) has converged to a locally best fit, which is not guaranteed to be the sharpest one possible.
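
The cycle above can be sketched for one simple case: a mixture of two one-dimensional Gaussians, where the hidden data is which component produced each point. This is a minimal illustration using only the Python standard library, not a production implementation. The function names, the initialization, the stopping tolerance, and the synthetic data are all assumptions made for the example.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_two_gaussians(data, n_iter=100, tol=1e-8):
    """Fit a two-component 1-D Gaussian mixture with EM.

    Returns (weights, means, variances, log_likelihood), where the
    log-likelihood is the value computed in the last E-step.
    """
    # Crude initial guess (an assumption): means at the data extremes,
    # both variances equal to the overall variance.
    weights = [0.5, 0.5]
    means = [min(data), max(data)]
    overall_mean = sum(data) / len(data)
    overall_var = sum((x - overall_mean) ** 2 for x in data) / len(data)
    variances = [overall_var, overall_var]

    prev_ll = -math.inf
    ll = prev_ll
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point,
        # computed under the current parameters.
        resp = []
        ll = 0.0
        for x in data:
            joint = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
            total = sum(joint)
            ll += math.log(total)
            resp.append([j / total for j in joint])

        # M-step: re-estimate parameters as responsibility-weighted averages.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, 1e-6)  # floor to avoid a collapsing component

        # Stop once the log-likelihood has stopped improving.
        if ll - prev_ll < tol:
            break
        prev_ll = ll

    return weights, means, variances, ll


# Synthetic data: the component labels are the "hidden" part and are discarded.
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(300)] + [rng.gauss(6.0, 1.0) for _ in range(300)]
weights, means, variances, ll = em_two_gaussians(data)
print("weights:", weights)
print("means:", means)
print("variances:", variances)
```

Because the two clusters in this synthetic data are well separated, the estimated means should land near 0 and 6. With overlapping clusters or a poor starting guess, the same code can settle on a worse local optimum, which is why practical implementations usually try several initializations.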

Different Types

The EM Algorithm shapes its puzzle-solving expertise into various forms:

-

- Gaussian Mixture Models (GMMs): Clustering and density estimation tools for intricate datasets.

-

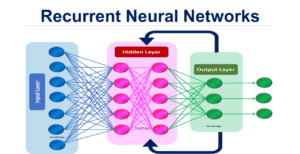

- Hidden Markov Models (HMMs): Analyzing sequential data, like speech or time series.

-

- Topic Modeling: Latent Dirichlet Allocation (LDA) helps identify themes in large text datasets.

Features

Why is the EM Algorithm the master puzzle solver?

- Handles incomplete data adeptly.

- Optimizes parameters in probabilistic models.

- Each iteration never decreases the likelihood, so the process typically converges to a locally (not necessarily globally) optimal solution.
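
The convergence behavior can be checked on a small example: two coins with unknown biases, where the coin used in each trial is not recorded. The sketch below is illustrative only. The head counts are made-up data, the function names are hypothetical, and each coin is assumed to be picked with a fixed probability of 0.5. It records the log-likelihood before every update so the values can be inspected.

```python
import math


def binom_pmf(heads, flips, p):
    """Probability of `heads` successes in `flips` Bernoulli(p) trials."""
    return math.comb(flips, heads) * p ** heads * (1.0 - p) ** (flips - heads)


def em_two_coins(head_counts, flips, theta_a, theta_b, n_iter=20):
    """EM for two coins with unknown biases; the coin used per trial is hidden.

    Assumes each coin is chosen with probability 0.5 (kept fixed).
    Returns the final biases and the log-likelihood before each update.
    """
    history = []
    for _ in range(n_iter):
        # Log-likelihood of the observed data under the current parameters.
        ll = sum(
            math.log(0.5 * binom_pmf(h, flips, theta_a) + 0.5 * binom_pmf(h, flips, theta_b))
            for h in head_counts
        )
        history.append(ll)

        # E-step: posterior probability that each trial used coin A.
        resp_a = []
        for h in head_counts:
            like_a = binom_pmf(h, flips, theta_a)
            like_b = binom_pmf(h, flips, theta_b)
            resp_a.append(like_a / (like_a + like_b))

        # M-step: expected heads divided by expected flips for each coin.
        heads_a = sum(r * h for r, h in zip(resp_a, head_counts))
        heads_b = sum((1.0 - r) * h for r, h in zip(resp_a, head_counts))
        flips_a = sum(r * flips for r in resp_a)
        flips_b = sum((1.0 - r) * flips for r in resp_a)
        theta_a = heads_a / flips_a
        theta_b = heads_b / flips_b
    return theta_a, theta_b, history


head_counts = [5, 9, 8, 4, 7]  # made-up data: heads out of 10 flips per trial
theta_a, theta_b, history = em_two_coins(head_counts, flips=10, theta_a=0.6, theta_b=0.5)
print("estimated biases:", theta_a, theta_b)
print("log-likelihood per iteration:", history)
```

The recorded log-likelihood values should never go down from one iteration to the next. The result still depends on the starting point: swapping the initial guesses swaps the roles of the two coins, and starting both coins at the same value leaves the two estimates equal to each other forever, a stationary point that is not the best fit.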

Software and Tools

- Python: Libraries like scikit-learn and hmmlearn.

- R: Packages like mclust for clustering tasks.

- MATLAB: Tools for highly customized models.

Industry Applications

The EM Algorithm solves real-world “puzzles” across industries:

- Healthcare Analytics: Filling in data gaps to refine patient diagnostics and resource allocation models in Australian healthcare systems.

- Traffic Predictions: Using HMMs to analyze urban traffic patterns in Sydney, enabling efficient real-time flow management.

- Climate Modeling: Helping Australian agencies predict weather anomalies and climate trends by modeling complex datasets.