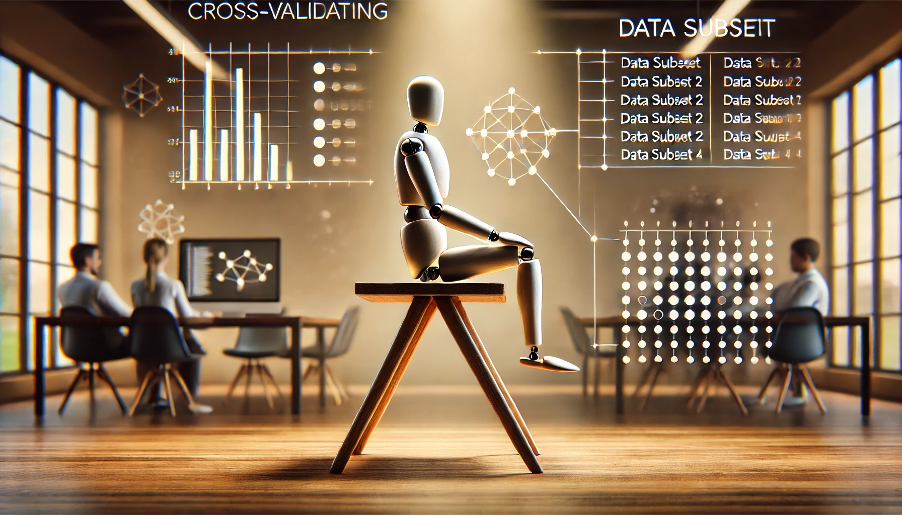

Imagine you’re testing the strength of a chair: instead of sitting on it just once, you test each leg to check stability. Cross-validation works similarly in machine learning workflows: it evaluates a model’s reliability by testing it on multiple subsets of the data. This gives a more trustworthy indication of how the model will perform on unseen, real-world data, not just on the data it was trained on.

A Brief History of Cross-Validation

Cross-validation, rooted in statistical methods from the 1930s, gained prominence in machine learning during the 1980s and 1990s. As algorithms grew more complex, reliable validation methods became critical, and cross-validation emerged as a key technique for estimating model accuracy, robustness, and generalization.

What Is Cross-Validation?

Cross-validation is a statistical method used to evaluate the performance of a model. It divides the dataset into multiple folds, training the model on some folds and validating it on the remaining ones.

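Think of it as testing a chair by checking each leg individually: if all legs are stable, the chair is reliable. Similarly, in cross-validation each fold takes a turn as the validation set, so every data point is used for validation exactly once and the results together indicate how stable the model is.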

Why Is Cross-Validation Used?

Cross-validation solves critical challenges in machine learning and data science:

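- Generalization: Estimates how well the model performs on new data, not just the training set.

- Overfitting Detection: Reveals when the model has memorized patterns in the training data rather than learning them, which shows up as a large gap between training and validation scores. Cross-validation does not prevent overfitting by itself; it makes it visible.

- Reliable Evaluation: Provides a more stable estimate of the model’s performance than a single train/test split, because results are averaged across several data subsets.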

For instance, in fraud detection, cross-validation helps check whether the model can detect fraud cases it was not trained on, rather than only recognizing the cases it has already seen in the historical data.
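One way to make the overfitting point concrete is to compare training and validation scores across folds. The sketch below is illustrative only: it assumes scikit-learn is installed, uses a synthetic dataset from make_classification, and picks an unpruned decision tree as a model that is likely to memorize its training data. None of these choices come from the article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_validate
from sklearn.tree import DecisionTreeClassifier

# Synthetic data (an assumption for illustration, not real fraud data).
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=5, random_state=0
)

# An unpruned tree can fit its training folds almost perfectly.
model = DecisionTreeClassifier(random_state=0)

# return_train_score=True also records the score on each training split.
results = cross_validate(model, X, y, cv=5, return_train_score=True)

train_acc = results["train_score"].mean()
val_acc = results["test_score"].mean()

print(f"mean training accuracy:   {train_acc:.3f}")
print(f"mean validation accuracy: {val_acc:.3f}")
print(f"gap (train - validation): {train_acc - val_acc:.3f}")
```

A large gap between the two averages suggests the model is fitting noise in the training folds; a small gap suggests it generalizes more consistently.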

How Is Cross-Validation Used?

The process involves:

- Splitting Data into Folds: Divide the dataset into subsets of roughly equal size (e.g., 5 folds for K-fold cross-validation).

- Training and Validation: Train the model on all but one fold, and validate it on the remaining fold. Repeat for all folds.

- Calculating Metrics: Average the results across all folds to assess the model’s performance.
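The three steps above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the helper names k_fold_indices and cross_validate_mean_model are hypothetical, the "model" is just a baseline that predicts the mean of the training targets, and the data is a toy list.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle indices 0..n_samples-1 and split them into k near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th index starting at i, so sizes differ by at most 1.
    return [indices[i::k] for i in range(k)]


def cross_validate_mean_model(y, k=5, seed=0):
    """Return the per-fold mean absolute error of a predict-the-mean baseline."""
    folds = k_fold_indices(len(y), k, seed)
    scores = []
    for i, val_idx in enumerate(folds):
        # Step 2a: train on every fold except fold i.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        prediction = mean(y[j] for j in train_idx)
        # Step 2b: validate on the held-out fold.
        scores.append(mean(abs(y[j] - prediction) for j in val_idx))
    return scores


y = [float(v) for v in range(20)]  # toy target values
fold_scores = cross_validate_mean_model(y, k=5)

# Step 3: average the per-fold results.
print("per-fold MAE:", [round(s, 3) for s in fold_scores])
print("mean MAE:", round(mean(fold_scores), 3))
```

In practice a library splitter would replace the hand-written fold logic, but the loop structure stays the same: hold out one fold, fit on the rest, score, repeat, average.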

Techniques like stratified cross-validation keep the class distribution in each fold close to that of the full dataset. This makes evaluation more reliable in classification tasks, especially when classes are imbalanced.

Types of Cross-Validation

- K-Fold Cross-Validation: Splits the dataset into K folds; each fold serves as the validation set exactly once while the remaining folds are used for training.

- Leave-One-Out Cross-Validation (LOOCV): Uses one data point for validation and the rest for training, repeated for all points.

- Stratified K-Fold Cross-Validation: Ensures consistent class proportions across folds.

- Time-Series Cross-Validation: Preserves the temporal order of data, critical for time-dependent models.
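The four variants above map onto splitter classes in scikit-learn's model_selection module. The sketch below, which assumes scikit-learn and NumPy are installed and uses a tiny made-up dataset of 12 ordered samples, prints the first split of each splitter so the differences are visible.

```python
import numpy as np
from sklearn.model_selection import (
    KFold,
    LeaveOneOut,
    StratifiedKFold,
    TimeSeriesSplit,
)

# Toy data: 12 samples in order, 8 of class 0 and 4 of class 1.
X = np.arange(12).reshape(-1, 1)
y = np.array([0] * 8 + [1] * 4)

splitters = {
    "KFold": KFold(n_splits=4, shuffle=True, random_state=0),
    "LeaveOneOut": LeaveOneOut(),
    "StratifiedKFold": StratifiedKFold(n_splits=4, shuffle=True, random_state=0),
    "TimeSeriesSplit": TimeSeriesSplit(n_splits=3),
}

split_counts = {}
for name, splitter in splitters.items():
    splits = list(splitter.split(X, y))
    split_counts[name] = len(splits)
    train_idx, val_idx = splits[0]
    print(f"{name}: {len(splits)} splits, first validation indices = {val_idx}")
```

Note that TimeSeriesSplit never shuffles: every training index precedes every validation index, which is what keeps future observations out of the training set.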

Categories of Cross-Validation

Cross-validation is categorized based on the dataset:

- Standard Cross-Validation: For static datasets without temporal dependencies.

- Temporal Cross-Validation: For datasets with time-sequential data, ensuring the order is preserved.

Software and Tools for Cross-Validation

Here are commonly used tools for cross-validation in machine learning workflows:

- Python Libraries:

    - Scikit-learn: Includes cross_val_score() and KFold, along with other splitters in its model_selection module.

    - Pandas: Useful for data preparation and manipulation before the data is split into folds.

- Deep Learning Frameworks:

    - TensorFlow/Keras: Supports custom pipelines for cross-validation, typically written as a loop over folds.

    - PyTorch: Offers dataset and data loader utilities that can be combined with fold indices to build training and validation loaders for each fold.

- R Programming: Packages like caret provide robust cross-validation options.
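As a brief illustration of the scikit-learn tools listed above, the following sketch combines KFold with cross_val_score(). It assumes scikit-learn is installed; the iris dataset and the logistic regression model are arbitrary choices made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = load_iris(return_X_y=True)

# Shuffle before splitting because the iris rows are ordered by class.
cv = KFold(n_splits=5, shuffle=True, random_state=42)
model = LogisticRegression(max_iter=1000)

# Fits the model once per fold and returns one accuracy score per fold.
scores = cross_val_score(model, X, y, cv=cv)

print("per-fold accuracy:", scores.round(3))
print(f"mean accuracy: {scores.mean():.3f} (std {scores.std():.3f})")
```

Reporting the standard deviation alongside the mean gives a sense of how much the score varies from fold to fold.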

Industry Applications in Australian Governmental Agencies

- Healthcare Modelling: The Australian Institute of Health and Welfare uses cross-validation techniques to ensure models predicting disease outbreaks work across diverse demographics.

- Satellite Data Analysis: Geoscience Australia applies cross-validation to improve resource-mapping models, ensuring accurate analysis of satellite data.

- Traffic Optimization: Transport for NSW validates predictive traffic models using cross-validation, improving road and rail efficiency while reducing congestion.
