A Brief History of Recurrent Networks

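Recurrent neural networks (RNNs) took shape in the 1980s. In 1982, John Hopfield introduced the Hopfield network, an influential early recurrent architecture. In 1986, David Rumelhart, Geoffrey Hinton and Ronald Williams popularised backpropagation, including its use to train recurrent networks by unrolling them over time. Paul Werbos set out this technique, backpropagation through time (BPTT), in detail in 1990, and it became the standard way to train RNNs on sequential data. Later gated designs made it far easier for RNNs to learn long-term dependencies: Long Short-Term Memory (LSTM), introduced by Hochreiter and Schmidhuber in 1997, and the Gated Recurrent Unit (GRU), introduced by Cho and colleagues in 2014.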

Understanding Recurrent Networks

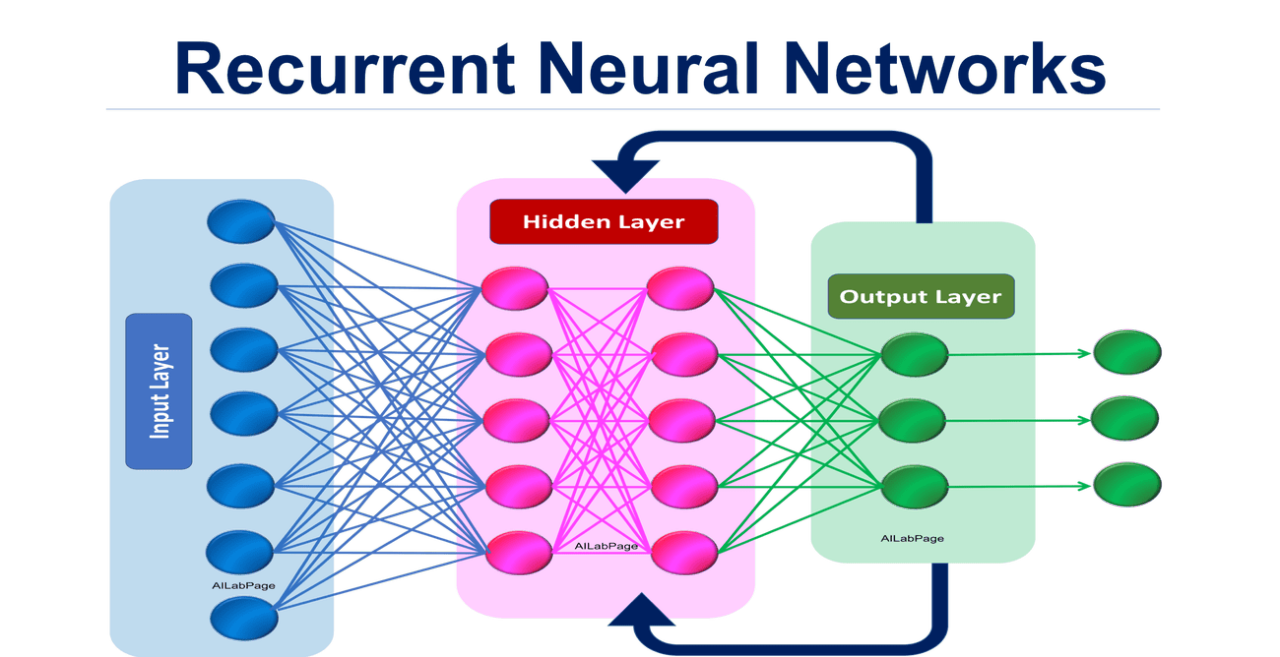

Recurrent networks maintain a hidden state, often described as the network’s “memory”, that carries information from earlier inputs forward so it can influence later outputs. Unlike feedforward networks, RNNs contain recurrent connections (loops) that feed this state back into the network at every step. This makes them well suited to sequential or temporal data in natural language processing, audio analysis, and time series prediction.
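In symbols, one common way to write the basic (Elman-style) RNN is shown below. The notation is assumed for illustration rather than taken from a particular reference. At timestep t, the hidden state h_t (the “memory”) is computed from the current input x_t and the previous state h_{t-1}. The term W_{hh} h_{t-1} is the loop that lets past inputs affect the present, and the output y_t is read from h_t. The tanh nonlinearity is a typical choice, not the only one.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad h_0 = 0, \\
y_t &= W_{hy}\, h_t + b_y
\end{aligned}
```

The same weights W_{xh}, W_{hh} and W_{hy} are reused at every step. This is what allows one network to process sequences of any length.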

Why Use Recurrent Networks?

Recurrent networks address critical challenges in machine learning:

Advantages

- Sequential Understanding: Interprets each element of a sequence in the context of what came before it.

- Dynamic Memory: Carries a summary of previous inputs in its hidden state, which can improve predictions that depend on earlier context.

- Versatility: Adapts to diverse tasks such as text analysis, time series modelling, and predictive maintenance.

Challenges Solved

- Sequence Learning: Identifies patterns in data streams like speech or market trends.

- Dependency Modelling: Captures temporal relationships across inputs.

- Context Retention: Carries context across a sequence. Gated variants such as LSTMs and GRUs retain it over much longer spans than basic RNNs.

How Recurrent Networks Work

RNNs process a sequence one step at a time:

- Input Propagation: Data is fed one timestep at a time.

- Memory Update: Combines past states with new inputs to update the network’s memory.

- Output Generation: Produces an output from the updated state, such as a label for the current step or a prediction of the next element in the sequence.
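The three steps above can be sketched as the forward pass of a basic RNN. This is a minimal, dependency-free illustration that assumes a tanh hidden update and a linear output layer. The function names (`matvec`, `rnn_forward`) and the list-of-lists weight layout are hypothetical; real frameworks use optimised tensor operations instead.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_forward(xs: List[Vector], w_xh: Matrix, w_hh: Matrix, b_h: Vector,
                w_hy: Matrix, b_y: Vector) -> List[Vector]:
    """Run a basic tanh RNN over a sequence and return one output per timestep."""
    h = [0.0] * len(b_h)  # initial memory: all zeros
    outputs = []
    for x in xs:  # input propagation: one timestep per iteration
        # Memory update: combine the new input with the previous hidden state.
        pre = [a + b + c for a, b, c in zip(matvec(w_xh, x), matvec(w_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        # Output generation: a linear read-out of the updated hidden state.
        outputs.append([a + b for a, b in zip(matvec(w_hy, h), b_y)])
    return outputs


if __name__ == "__main__":
    # One input feature, one hidden unit, one output; weights chosen by hand.
    print(rnn_forward([[1.0], [0.0]], w_xh=[[1.0]], w_hh=[[0.5]], b_h=[0.0],
                      w_hy=[[1.0]], b_y=[0.0]))
```

In this toy run the second input is zero, yet the second output is not. The hidden state still carries information from the first input.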

Advanced RNNs, such as LSTMs and GRUs, incorporate memory-regulating gates to mitigate issues like vanishing gradients, making them better suited for long-term dependencies.
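To make “memory-regulating gates” concrete, here is a minimal sketch of a GRU step with a one-dimensional input and hidden state. It follows the form in Cho et al. (2014): an update gate z blends the previous state with a candidate state, and a reset gate r controls how much of the previous state feeds that candidate. Some papers and libraries swap the roles of z and 1 − z, so treat this as one convention among several. The scalar parameters and the names `GRUParams`, `gru_step` and `run_gru` are hypothetical; real layers use weight matrices.

```python
import math
from dataclasses import dataclass
from typing import Iterable


def sigmoid(v: float) -> float:
    """Logistic function, squashing v into (0, 1) for use as a gate."""
    return 1.0 / (1.0 + math.exp(-v))


@dataclass
class GRUParams:
    """Hypothetical scalar weights for a one-unit GRU (real layers use matrices)."""
    w_z: float = 0.0  # update gate: input weight
    u_z: float = 0.0  # update gate: recurrent weight
    b_z: float = 0.0  # update gate: bias
    w_r: float = 0.0  # reset gate: input weight
    u_r: float = 0.0  # reset gate: recurrent weight
    b_r: float = 0.0  # reset gate: bias
    w_n: float = 0.0  # candidate state: input weight
    u_n: float = 0.0  # candidate state: recurrent weight
    b_n: float = 0.0  # candidate state: bias


def gru_step(x: float, h_prev: float, p: GRUParams) -> float:
    """One GRU update: returns the new hidden state."""
    z = sigmoid(p.w_z * x + p.u_z * h_prev + p.b_z)          # share of old state kept
    r = sigmoid(p.w_r * x + p.u_r * h_prev + p.b_r)          # share of old state used below
    n = math.tanh(p.w_n * x + p.u_n * (r * h_prev) + p.b_n)  # candidate new state
    return z * h_prev + (1.0 - z) * n                        # gated blend of old and new


def run_gru(xs: Iterable[float], h0: float, p: GRUParams) -> float:
    """Apply gru_step across a sequence and return the final hidden state."""
    h = h0
    for x in xs:
        h = gru_step(x, h, p)
    return h
```

For example, `run_gru([0.0] * 50, 0.8, GRUParams(b_z=10.0))` stays close to 0.8 because the update gate sits near 1 and preserves the state. With `b_z=-10.0` the gate sits near 0, and the state is overwritten almost immediately. Training learns weights that set these gates according to the data.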

Types of Recurrent Networks

RNNs come in various types, tailored to specific applications:

- Vanilla RNNs: The basic form, in which a recurrent layer updates its hidden state with a single nonlinearity at each step.

- LSTM (Long Short-Term Memory): Uses gates that control what is written to, kept in, and read from a separate cell state, mitigating the vanishing gradient problem.

- GRU (Gated Recurrent Unit): Uses two gates (update and reset) and no separate cell state, giving a simpler alternative to LSTMs with fewer parameters.

- Bidirectional RNNs: Process sequences both forward and backward, so each step can draw on both past and future context.

- Attention-Based RNNs: Add an attention mechanism that learns to weight the most relevant parts of the input sequence when producing each output.
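The bidirectional idea can be sketched with two independent one-unit tanh RNNs. One reads the sequence left to right, the other reads it right to left, and their states are paired at each position. This is a minimal illustration only. The helper names and the weights (1.0 on the input, 0.5 on the recurrence) are arbitrary assumptions. Pairing the states is also only one way to merge them; concatenating them is a common choice.

```python
import math
from typing import List, Tuple


def scalar_rnn(xs: List[float], w_x: float, w_h: float) -> List[float]:
    """Hidden states of a one-unit tanh RNN that reads xs from left to right."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def bidirectional_states(
    xs: List[float],
    fwd: Tuple[float, float] = (1.0, 0.5),  # hypothetical (w_x, w_h) for the forward RNN
    bwd: Tuple[float, float] = (1.0, 0.5),  # hypothetical (w_x, w_h) for the backward RNN
) -> List[Tuple[float, float]]:
    """Pair forward and backward hidden states at every position of xs."""
    forward = scalar_rnn(xs, *fwd)
    # Run a separate RNN over the reversed sequence, then reverse its states so
    # that position t holds a summary of xs[t:], i.e. the "future" context.
    backward = scalar_rnn(xs[::-1], *bwd)[::-1]
    return list(zip(forward, backward))
```

With `bidirectional_states([0.0, 0.0, 1.0])`, the forward state at the first position is still zero because it has seen only zeros. The backward state there is already non-zero because it has read the 1.0 at the end of the sequence.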

Key Features of Recurrent Networks

RNNs are valued for their key features:

- Dynamic Memory: Maintains essential information over time for accurate predictions.

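- Adaptability: Handles different kinds of sequential data, such as text, audio, and sensor readings. Because the same weights are applied at every step, it also handles sequences of varying length.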

- Efficiency: GRUs have fewer parameters than LSTMs and often perform comparably, making them a lighter-weight option.

- Enhanced Learning: LSTMs mitigate, though do not eliminate, the vanishing gradient problem, improving long-term modelling.
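A short numerical experiment shows why basic RNNs struggle with long-term dependencies. Consider a one-unit RNN with h_t = tanh(w·h_{t-1} + x_t). By the chain rule, ∂h_t/∂h_0 is the product of the per-step factors w·(1 − h_t²). When each factor is below 1, the gradient reaching early steps shrinks geometrically. The sketch below tracks this product for an arbitrary weight of 0.9 and a constant input of 0.5; both values are assumptions for illustration. LSTMs ease the problem in the common formulation with a forget gate, where the cell state is updated additively (c_t = f_t ⊙ c_{t-1} + i_t ⊙ g_t). The direct path from c_{t-1} to c_t is then scaled only by the forget gate f_t, which can stay close to 1.

```python
import math
from typing import List


def gradient_through_time(w: float, xs: List[float], h0: float = 0.0) -> List[float]:
    """Return d h_t / d h_0 for t = 1..len(xs) in the scalar RNN h_t = tanh(w*h_{t-1} + x_t).

    By the chain rule, d h_t / d h_{t-1} = w * (1 - h_t**2), and d h_t / d h_0
    is the running product of those per-step factors.
    """
    h, grad, grads = h0, 1.0, []
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # multiply in this step's local derivative
        grads.append(grad)
    return grads


grads = gradient_through_time(w=0.9, xs=[0.5] * 50)
for t in (1, 10, 25, 50):
    print(f"t={t:2d}  dh_t/dh_0 = {grads[t - 1]:.3e}")
```

With these values the product falls by many orders of magnitude within a few dozen steps, so an error signal at step 50 barely reaches step 0. Larger recurrent weights can make the product grow instead, which is the related exploding-gradient problem.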

Popular Software and Tools for Building RNNs

Modern frameworks make RNN implementation accessible:

- TensorFlow: Provides recurrent layers through its Keras API, including tf.keras.layers.SimpleRNN, tf.keras.layers.LSTM, and tf.keras.layers.GRU.

- PyTorch: Offers torch.nn.RNN, torch.nn.LSTM, and torch.nn.GRU, along with cell-level modules (such as torch.nn.LSTMCell) for customised architectures.

- Keras: Simplifies the creation of recurrent models with intuitive layers.

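- Scikit-learn: Does not provide recurrent layers. Its preprocessing and validation utilities, such as scalers and TimeSeriesSplit, are useful for preparing and evaluating time series data.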

- MXNet: Designed for scalable RNN training and deployment. Note that the Apache MXNet project was retired in 2023 and is no longer actively developed.

Applications of RNNs in Australian Governmental Agencies

RNNs can support work across several Australian government sectors:

- Healthcare
  - Application: Predicts patient diagnoses by analysing sequential medical records.

- Transport and Infrastructure
  - Application: Forecasts traffic patterns to optimise routes and improve urban planning.

- Environmental Monitoring
  - Application: Tracks environmental change across sequences of satellite images to inform resource allocation and policy decisions.

References

- Hochreiter, S., & Schmidhuber, J. (1997). Long Short-Term Memory. Neural Computation, 9(8), 1735–1780.

- TensorFlow Documentation.

- Australian Government AI Applications Report (2023).

Conclusion

Recurrent neural networks bring memory to machine learning. They enable the analysis of sequential data and, in their gated forms, the capture of longer-term dependencies. Frameworks such as TensorFlow and PyTorch make RNNs straightforward to build and apply to tasks ranging from healthcare analytics to climate monitoring. Their evolution, particularly through LSTMs and GRUs, keeps them a practical choice for many sequence-modelling problems.
