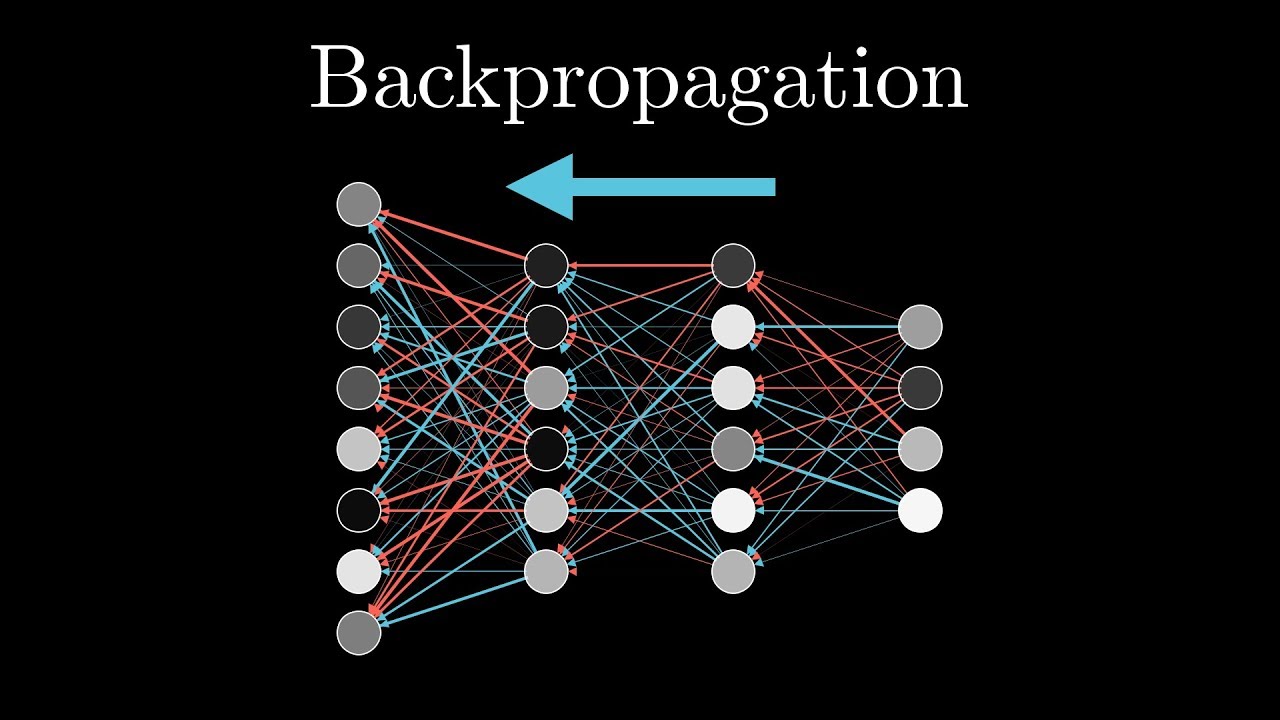

The back-propagation algorithm revolutionised machine learning by making it efficient to compute the gradients needed to train deep neural networks. This blog explores its history, how it works, and its applications in Australian industries such as healthcare, energy optimisation, and traffic systems.
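As a rough illustration of what back-propagation computes, here is a minimal sketch that applies the chain rule, output first, to a hypothetical two-weight network (one sigmoid hidden unit, one linear output, squared-error loss) and checks the result against finite differences. The network, weights, and function names are illustrative assumptions, not taken from the post.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1, w2, x):
    """Hypothetical tiny network: hidden h = sigmoid(w1 * x), output y = w2 * h."""
    h = sigmoid(w1 * x)
    return h, w2 * h


def loss(w1, w2, x, t):
    """Squared-error loss against target t."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - t) ** 2


def backprop(w1, w2, x, t):
    """Gradients (dL/dw1, dL/dw2) via the chain rule, propagating from the output back."""
    h, y = forward(w1, w2, x)
    dL_dy = y - t                        # derivative of 0.5 * (y - t)^2
    dL_dw2 = dL_dy * h                   # because y = w2 * h
    dL_dh = dL_dy * w2                   # error signal passed back to the hidden unit
    dL_dw1 = dL_dh * h * (1.0 - h) * x   # sigmoid'(z) = h * (1 - h), with z = w1 * x
    return dL_dw1, dL_dw2


def numeric_grad(w1, w2, x, t, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    g1 = (loss(w1 + eps, w2, x, t) - loss(w1 - eps, w2, x, t)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, t) - loss(w1, w2 - eps, x, t)) / (2 * eps)
    return g1, g2


analytic = backprop(0.5, -1.2, 2.0, 0.3)
numeric = numeric_grad(0.5, -1.2, 2.0, 0.3)
print(analytic, numeric)
```

The finite-difference check needs two extra forward passes per weight, whereas back-propagation gets every gradient from one backward sweep, which is why it scales to deep networks.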

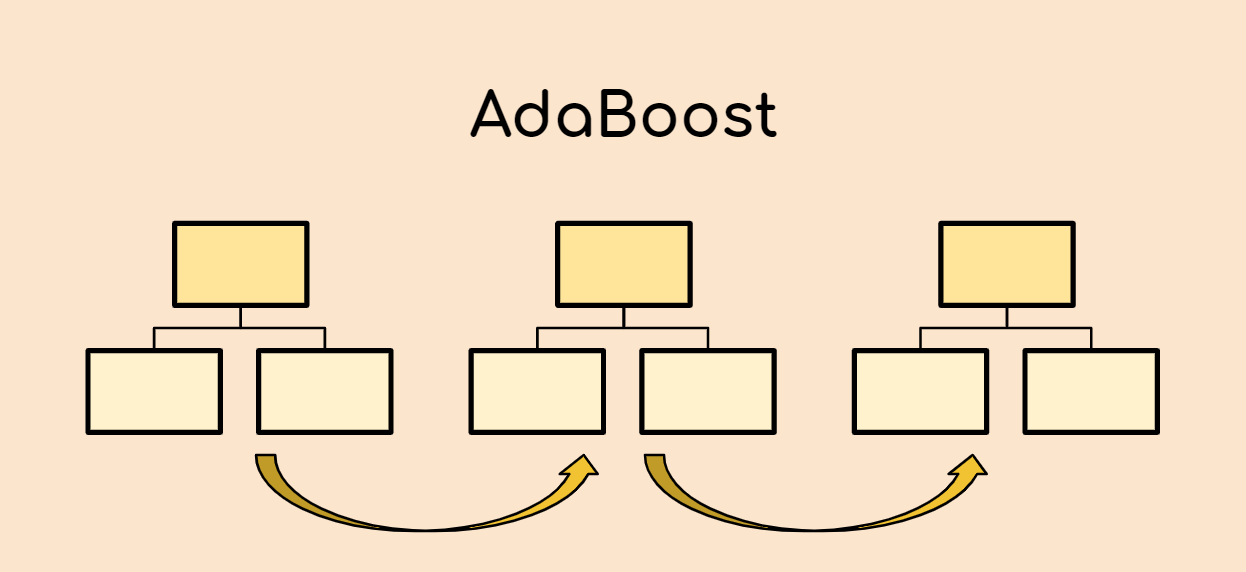

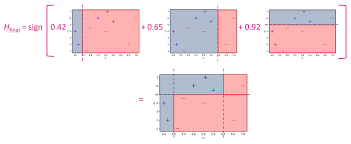

AdaBoost.SAMME extends the original AdaBoost algorithm to multi-class classification problems by iteratively up-weighting misclassified examples and combining the resulting weak learners. This blog explores its history, functionality, variations, and applications in healthcare, traffic forecasting, and education segmentation in Australia.
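A minimal sketch of one SAMME round, assuming the weak learner's predictions are already available as integer class labels. The learner weight adds a log(K - 1) term to the binary AdaBoost formula, misclassified samples are up-weighted, and the final prediction is an alpha-weighted vote. Function names and the toy data are hypothetical.

```python
import math


def samme_round(weights, y_true, y_pred, n_classes):
    """One SAMME reweighting step; returns (alpha, normalised new weights)."""
    total = sum(weights)
    err = sum(w for w, y, p in zip(weights, y_true, y_pred) if y != p) / total
    # SAMME requires the weak learner to beat random guessing: err < 1 - 1/K.
    if not 0.0 < err < 1.0 - 1.0 / n_classes:
        raise ValueError(f"weighted error {err:.3f} is outside the range SAMME can use")
    # The extra log(K - 1) term is what distinguishes SAMME from binary AdaBoost.
    alpha = math.log((1.0 - err) / err) + math.log(n_classes - 1)
    # Misclassified samples are multiplied by exp(alpha); correct ones are unchanged.
    new = [w * math.exp(alpha) if y != p else w
           for w, y, p in zip(weights, y_true, y_pred)]
    s = sum(new)
    return alpha, [w / s for w in new]


def samme_vote(alphas, learner_preds, n_classes):
    """Combine weak learners for one sample: each votes for its class with weight alpha."""
    scores = [0.0] * n_classes
    for a, p in zip(alphas, learner_preds):
        scores[p] += a
    return max(range(n_classes), key=scores.__getitem__)


# Toy round: 6 samples, 3 classes, the weak learner misses only the last sample.
alpha, w = samme_round([1 / 6] * 6, [0, 1, 2, 0, 1, 2], [0, 1, 2, 0, 1, 0], 3)
print(alpha, w)
```

After this round the single misclassified sample carries two thirds of the total weight, so the next weak learner is pushed to get it right.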

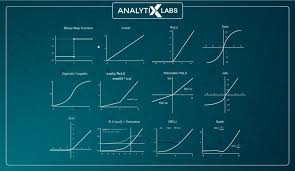

Activation functions are essential in neural networks, introducing non-linearity to enable the modelling of complex patterns. This blog explores their history, types, and applications in Australian sectors such as healthcare, traffic management, and environmental research.
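To make the non-linearity point concrete, here is a small sketch assuming a hypothetical two-layer scalar network with made-up weights. With an identity (linear) activation the two layers collapse into a single straight line, whereas ReLU introduces a kink that no single linear layer can reproduce.

```python
import math


def identity(z):
    return z


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def two_layer(x, act):
    """Hypothetical network: hidden unit act(2x - 1), output -3 * hidden + 0.5."""
    hidden = act(2.0 * x - 1.0)
    return -3.0 * hidden + 0.5


# With the identity activation the network equals the single line y = -6x + 3.5.
for x in (-1.0, 0.0, 0.5, 2.0):
    print(x, two_layer(x, identity), -6.0 * x + 3.5)

# With ReLU, equal steps in x no longer give equal steps in the output.
print([two_layer(x, relu) for x in (-1.0, 0.0, 1.0)])
```

Swapping in `sigmoid` or `math.tanh` for `act` gives a smooth curve instead of a kink; either way, the stacked layers can now represent functions a single linear layer cannot.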

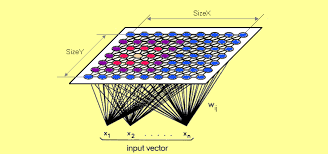

The Self-Organizing Map (SOM), developed by Teuvo Kohonen in the 1980s, is a powerful tool for simplifying high-dimensional data. By clustering and visualising data relationships, SOMs are widely used in Australian sectors like policy-making, geoscience, and public transport.
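A minimal sketch of a single SOM training step, assuming a one-dimensional map and a Gaussian neighbourhood: the node closest to the input (the best-matching unit) and, more weakly, its grid neighbours are pulled towards the input. The learning rate, neighbourhood width, and toy weights are illustrative; in practice both parameters are usually shrunk over the course of training.

```python
import math


def som_step(weights, x, lr=0.5, sigma=1.0):
    """One Kohonen update on a 1-D map. weights[j] is the weight vector of node j."""
    # Best-matching unit: the node whose weight vector is closest to x (squared Euclidean).
    dists = [sum((wi - xi) ** 2 for wi, xi in zip(w, x)) for w in weights]
    bmu = min(range(len(weights)), key=dists.__getitem__)
    updated = []
    for j, w in enumerate(weights):
        # Gaussian neighbourhood based on distance along the map grid, not in data space.
        h = math.exp(-((j - bmu) ** 2) / (2.0 * sigma ** 2))
        updated.append([wi + lr * h * (xi - wi) for wi, xi in zip(w, x)])
    return bmu, updated


nodes = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
bmu, nodes_after = som_step(nodes, [1.2, 0.8])
print(bmu, nodes_after)
```

Because neighbours on the grid move together, nearby map nodes end up representing similar inputs, which is what makes the trained map useful for visualising clusters.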

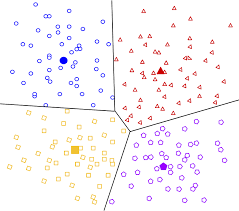

A Brief History: Who Developed It? Clustering algorithms originated at the intersection of statistics and computational advancements. Hugo Steinhaus introduced the k-means clustering concept in 1956, while James MacQueen refined it in 1967, transforming it into a practical tool for …

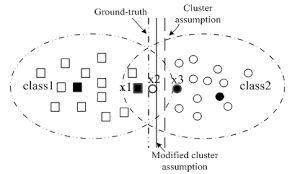

A Brief History: Who Developed the Cluster Assumption? The cluster assumption, a foundational concept in semi-supervised learning (SSL), was introduced in the late 1990s. Researchers like Xiaojin Zhu and Avrim Blum formalised this principle, leveraging clustering and manifold learning theories …

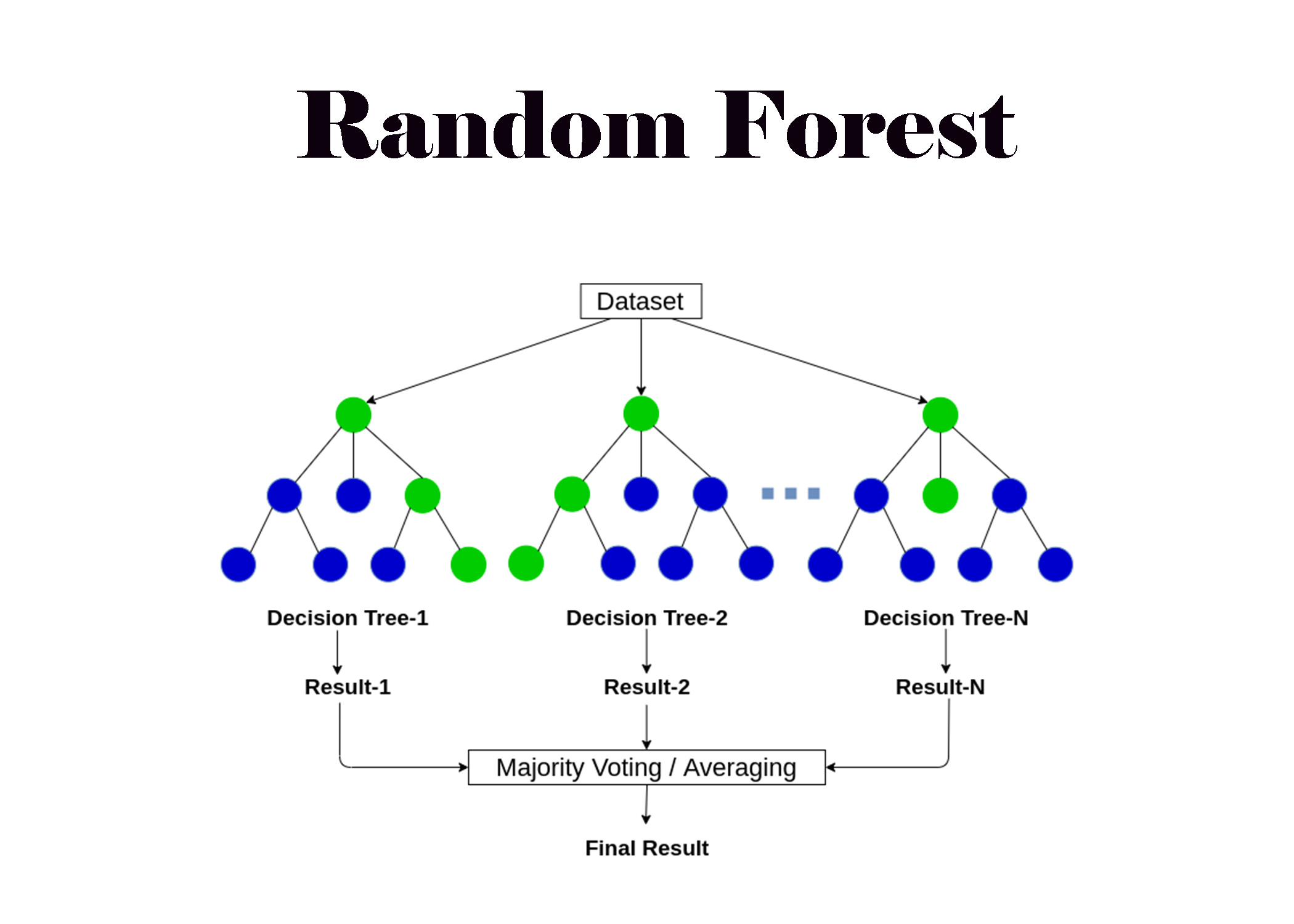

Random Forest, introduced in 2001 by Leo Breiman and Adele Cutler, combines multiple decision trees to enhance prediction accuracy and reduce overfitting. It is a versatile machine learning tool widely applied in healthcare, transport planning, and environmental risk assessment in Australia.

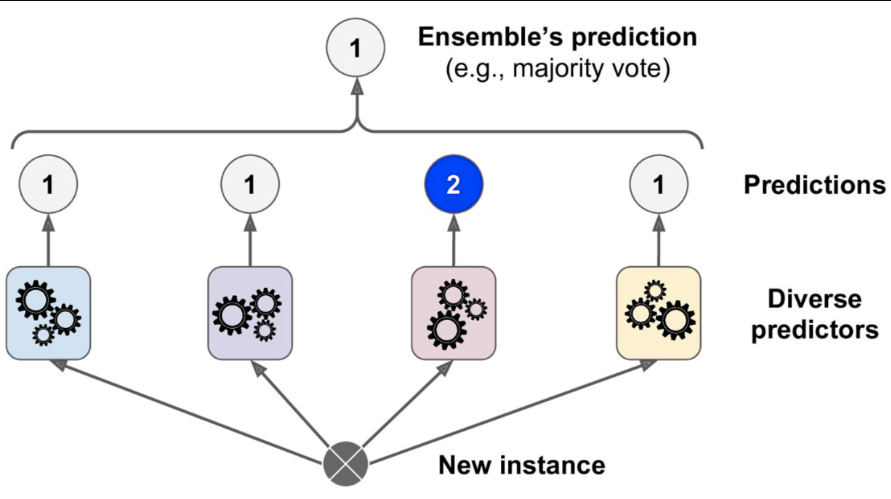

Voting classifiers combine the predictions of multiple machine learning models, typically by majority vote or by averaging predicted probabilities, to improve accuracy and robustness. Widely applied in healthcare, traffic management, and economic analysis in Australia, they support more reliable decision-making in complex scenarios.
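A minimal sketch of the two common voting schemes, assuming each model's output for a single sample is already available (the probabilities below are made up). Hard voting takes the majority label, while soft voting averages predicted class probabilities; the toy example shows that the two can disagree.

```python
from collections import Counter


def hard_vote(labels):
    """Majority vote over predicted labels; ties go to the label encountered first."""
    return Counter(labels).most_common(1)[0][0]


def soft_vote(prob_rows, weights=None):
    """Weighted average of class-probability vectors; returns (winning class, averages)."""
    if weights is None:
        weights = [1.0] * len(prob_rows)
    total = sum(weights)
    n_classes = len(prob_rows[0])
    avg = [sum(w * row[c] for w, row in zip(weights, prob_rows)) / total
           for c in range(n_classes)]
    return max(range(n_classes), key=avg.__getitem__), avg


# Three hypothetical models scoring one sample for classes 0 and 1.
probs = [[0.6, 0.4], [0.3, 0.7], [0.55, 0.45]]
labels = [max(range(2), key=row.__getitem__) for row in probs]
print(hard_vote(labels), soft_vote(probs)[0])
```

Two of the three models lean weakly towards class 0, so hard voting picks 0; the second model's strong preference for class 1 tips the averaged probabilities, so soft voting picks 1.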

AdaBoost.R2, introduced by Drucker in 1997, extends AdaBoost to predict continuous variables, addressing challenges in regression analysis. Its iterative boosting mechanism concentrates on the samples with the largest prediction errors, which can improve forecast accuracy across diverse datasets and makes it a valuable tool in fields like environmental analytics and healthcare.
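A minimal sketch of one AdaBoost.R2 round following Drucker's scheme with the linear loss (his paper also defines square and exponential variants), assuming the weak regressor's predictions are already computed. Samples with small errors are down-weighted by beta^(1 - L_i), and the final model takes a weighted median of the learners' predictions using log(1/beta) as weights. Function names and data are hypothetical.

```python
import math


def adaboost_r2_round(weights, y_true, y_pred):
    """One AdaBoost.R2 step with the linear loss; returns (learner weight, new sample weights)."""
    errors = [abs(y - p) for y, p in zip(y_true, y_pred)]
    max_err = max(errors)
    if max_err == 0.0:
        raise ValueError("perfect fit: boosting has nothing left to reweight")
    losses = [e / max_err for e in errors]            # linear loss, each in [0, 1]
    total = sum(weights)
    avg_loss = sum(w * l for w, l in zip(weights, losses)) / total
    if avg_loss >= 0.5:
        raise ValueError("average loss >= 0.5: the algorithm stops here")
    beta = avg_loss / (1.0 - avg_loss)                # smaller beta = more confident learner
    # Well-predicted samples shrink most; the worst sample keeps its raw weight before normalising.
    new = [w * beta ** (1.0 - l) for w, l in zip(weights, losses)]
    s = sum(new)
    return math.log(1.0 / beta), [w / s for w in new]


def weighted_median(preds, confidences):
    """Final prediction: weighted median of learner outputs, weighted by log(1/beta)."""
    pairs = sorted(zip(preds, confidences))
    half = sum(confidences) / 2.0
    acc = 0.0
    for p, c in pairs:
        acc += c
        if acc >= half:
            return p
    return pairs[-1][0]


conf, w = adaboost_r2_round([0.25] * 4, [1.0, 2.0, 3.0, 4.0], [1.0, 2.5, 3.0, 3.0])
print(conf, w)
print(weighted_median([3.0, 1.0, 2.0], [0.2, 0.5, 0.4]))
```

Using a weighted median rather than a weighted mean keeps a single badly-behaved learner from dragging the ensemble's forecast far off.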

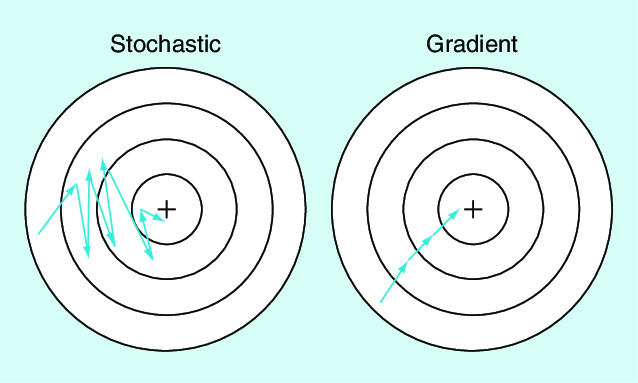

Stochastic Gradient Descent (SGD) is a foundational optimisation algorithm that speeds up machine learning by updating model parameters with gradients computed on small random subsets of the data (mini-batches) rather than the full dataset. Widely used in industries like healthcare, urban planning, and climate forecasting, SGD makes model training scalable to large datasets.
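A minimal sketch of mini-batch SGD, assuming a one-feature linear regression on synthetic, noise-free data generated from y = 2x + 1. Each update uses the mean-squared-error gradient of a small shuffled batch rather than the whole dataset. The learning rate, batch size, and epoch count are illustrative choices, not tuned values.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.1, batch_size=4, epochs=500, seed=0):
    """Fit y = w * x + b with mini-batch stochastic gradient descent on the MSE loss."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)                       # new random batches each epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Gradient of the mean squared error over this mini-batch only.
            residuals = [w * xs[i] + b - ys[i] for i in batch]
            grad_w = 2.0 * sum(r * xs[i] for r, i in zip(residuals, batch)) / len(batch)
            grad_b = 2.0 * sum(residuals) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [i / 10 for i in range(20)]      # 0.0, 0.1, ..., 1.9
ys = [2.0 * x + 1.0 for x in xs]      # synthetic targets on the line y = 2x + 1
w, b = sgd_linear_regression(xs, ys)
print(w, b)
```

Each step touches only four samples, so the cost per update stays constant however large the dataset grows; with real, noisy data the estimates would fluctuate around the optimum rather than settle on it exactly.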