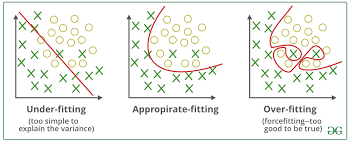

Imagine tuning a guitar: if the strings are too loose, the sound is flat and off-key—this is like underfitting, where a model is too simple to capture data patterns. If the strings are too tight, the sound becomes sharp and …

A Brief History of Weighted Log Likelihood

Weighted log likelihood emerged from advances in statistics and machine learning, as statisticians recognized the need to account for the varying importance of data points in unbalanced datasets. Refined through years of research, it has …
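In its simplest form, the weighted log likelihood multiplies each observation's log-probability by an importance weight: Σᵢ wᵢ log p(yᵢ). The sketch below is a minimal illustration under stated assumptions. It uses a binary (Bernoulli) model whose predicted probabilities are already available. The function name, labels, probabilities, and weights are all hypothetical and were chosen only to show how up-weighting a rare class changes the objective.

```python
import math

def weighted_log_likelihood(labels, probs, weights):
    """Sum of w_i * log p(y_i) for a Bernoulli model (hypothetical helper).

    labels:  observed 0/1 outcomes y_i
    probs:   model-predicted P(y_i = 1) for each example
    weights: non-negative importance weight w_i for each example
    """
    total = 0.0
    for y, p, w in zip(labels, probs, weights):
        # Log-probability the model assigns to the label actually observed.
        log_p = math.log(p) if y == 1 else math.log(1.0 - p)
        total += w * log_p
    return total

# One rare positive example among three negatives (illustrative data).
labels = [1, 0, 0, 0]
probs = [0.6, 0.2, 0.1, 0.3]
unweighted = weighted_log_likelihood(labels, probs, [1.0, 1.0, 1.0, 1.0])
weighted = weighted_log_likelihood(labels, probs, [3.0, 1.0, 1.0, 1.0])
```

With every weight equal to 1, the result reduces to the ordinary log likelihood. Giving the positive example a weight of 3 makes its log-probability count three times as much, so a model fitted to this objective pays more attention to the rare class.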

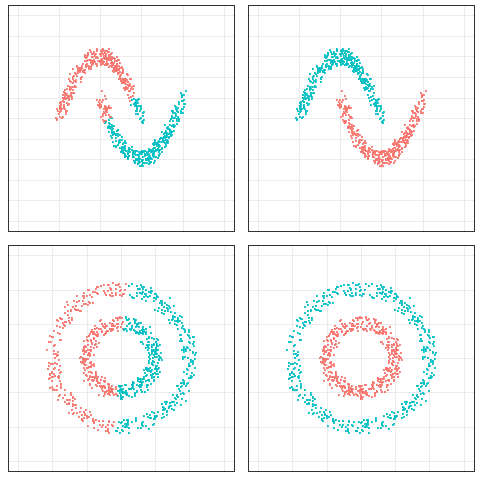

A Brief History: Who Developed It?

Spectral clustering was developed in the late 1990s and quickly became a cornerstone for analyzing non-linear data. Combining graph theory and linear algebra, it offered a robust solution for handling datasets with intricate relationships. …

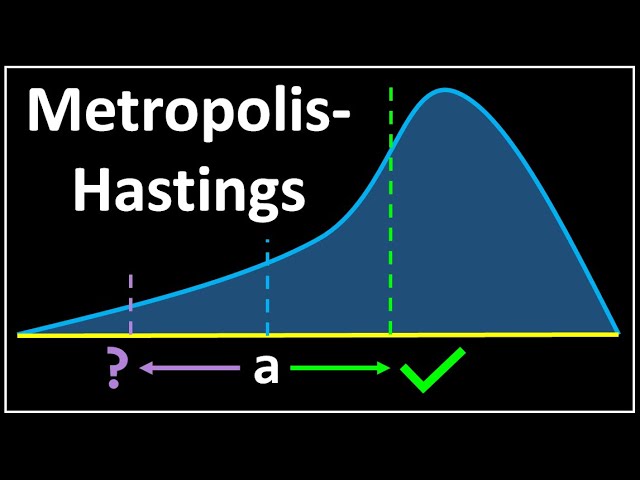

A Brief History of This Tool

The Metropolis-Hastings algorithm, a cornerstone of Bayesian computation, began in 1953 with the work of Nicholas Metropolis and colleagues and was generalized in 1970 by W. K. Hastings. Initially devised for thermodynamic simulations, this algorithm has since …
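The algorithm can be made concrete with a minimal random-walk Metropolis-Hastings sampler. This sketch uses a one-dimensional target that is known only up to a constant, together with a symmetric Gaussian proposal. With a symmetric proposal, Hastings's correction term cancels and the acceptance rule reduces to Metropolis's original one. The function name, step size, seed, and standard-normal target are illustrative choices and do not come from the article.

```python
import math
import random

def metropolis_hastings(log_target, x0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis-Hastings sampler for a 1-D target (sketch).

    log_target: log of the (possibly unnormalized) target density
    step:       standard deviation of the Gaussian random-walk proposal
    """
    rng = random.Random(seed)
    x = x0
    log_px = log_target(x)
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        log_pp = log_target(proposal)
        # The Gaussian proposal is symmetric, so the Hastings ratio
        # q(x | x') / q(x' | x) equals 1 and only the target ratio remains.
        log_alpha = log_pp - log_px
        if log_alpha >= 0 or rng.random() < math.exp(log_alpha):
            x, log_px = proposal, log_pp  # accept the move
        samples.append(x)  # on rejection the current state is repeated
    return samples

# Target: standard normal, specified only up to its normalizing constant.
samples = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, n_samples=20000)
mean = sum(samples) / len(samples)
```

An asymmetric proposal would require the extra factor q(x | x') / q(x' | x) in the acceptance ratio. That factor is what Hastings's 1970 generalization adds.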

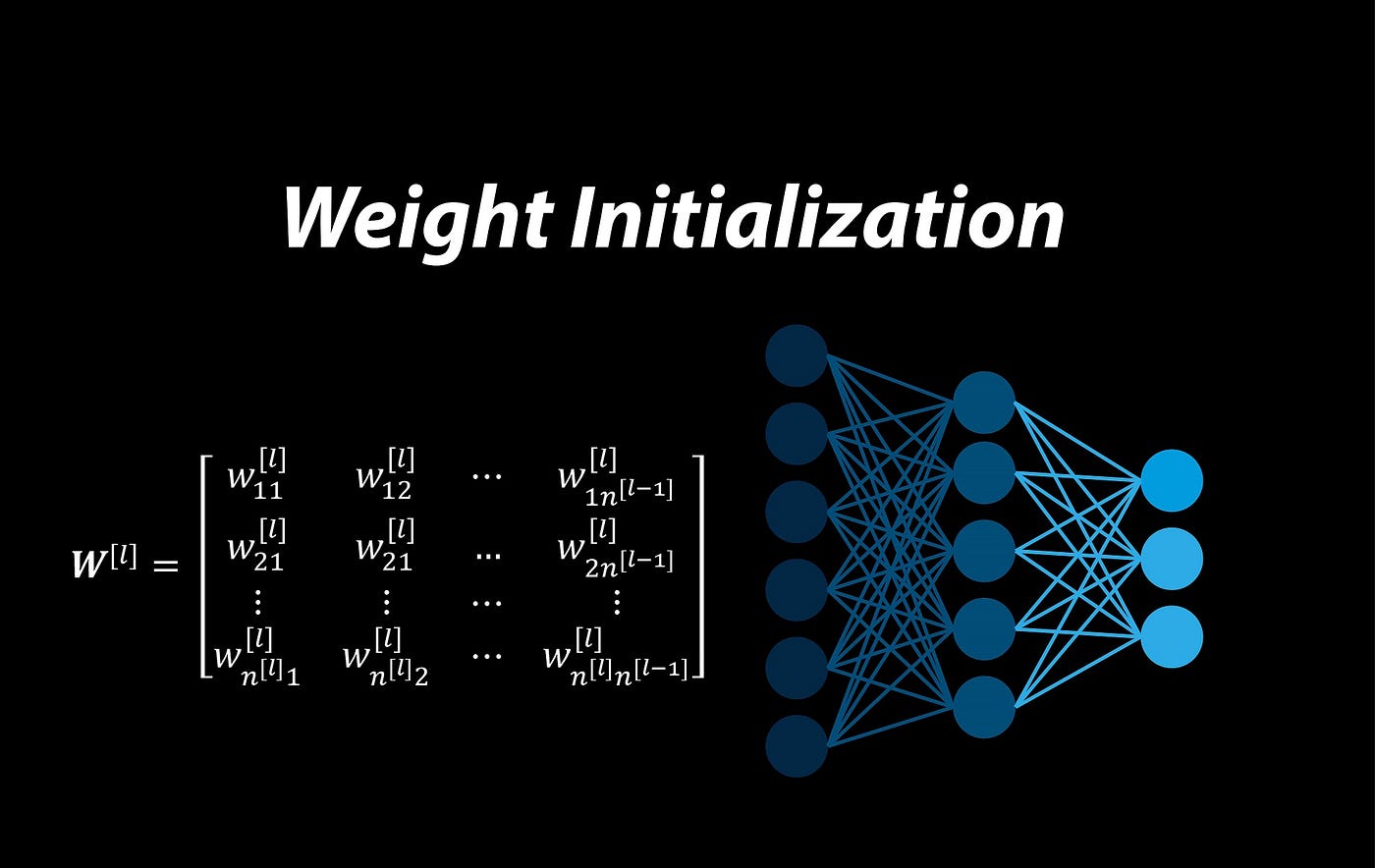

A Brief History: Who Developed It?

The foundation for weight initialization traces back to the pioneers of artificial neural networks. In the early days, Frank Rosenblatt’s development of the Perceptron introduced the concept of weights, serving as the initial stepping …

A Brief History: Who Developed It?

The Viterbi algorithm was introduced by Andrew Viterbi in 1967 to decode convolutional codes in communication systems. Its efficiency and reliability have since made it a cornerstone in fields like speech recognition, bioinformatics, and …
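The article places Viterbi's algorithm in its original setting of decoding convolutional codes. The sketch below applies the same dynamic-programming idea to a small hidden Markov model instead, which is the form used in speech recognition and bioinformatics. The two-state "Healthy"/"Fever" model and its probabilities are a toy example chosen for illustration. The code works in log space, so it assumes every probability is non-zero.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely hidden-state path for an HMM, computed in log space."""
    # best[t][s]: log-probability of the best path that ends in state s at time t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
             for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Pick the predecessor that maximizes the path score into s.
            prev = max(states, key=lambda r: best[t - 1][r] + math.log(trans_p[r][s]))
            best[t][s] = (best[t - 1][prev] + math.log(trans_p[prev][s])
                          + math.log(emit_p[s][observations[t]]))
            back[t][s] = prev
    # Trace the back-pointers from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, math.exp(best[-1][last])

states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3},
           "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
          "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}}
path, prob = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
# path → ['Healthy', 'Healthy', 'Fever'], prob ≈ 0.01512
```

The algorithm stores one back-pointer per state and time step. This is what lets it recover the best path in time linear in the sequence length, rather than scoring every possible path.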

The Overwhelming Power of Coles and Woolworths in Australia’s Grocery Market

In *Supermarket Monsters*, Malcolm Knox unveils the unchecked dominance of Australia’s grocery giants, Coles and Woolworths, collectively dubbed “Colesworths.” These two conglomerates control over 70% of the grocery market, …

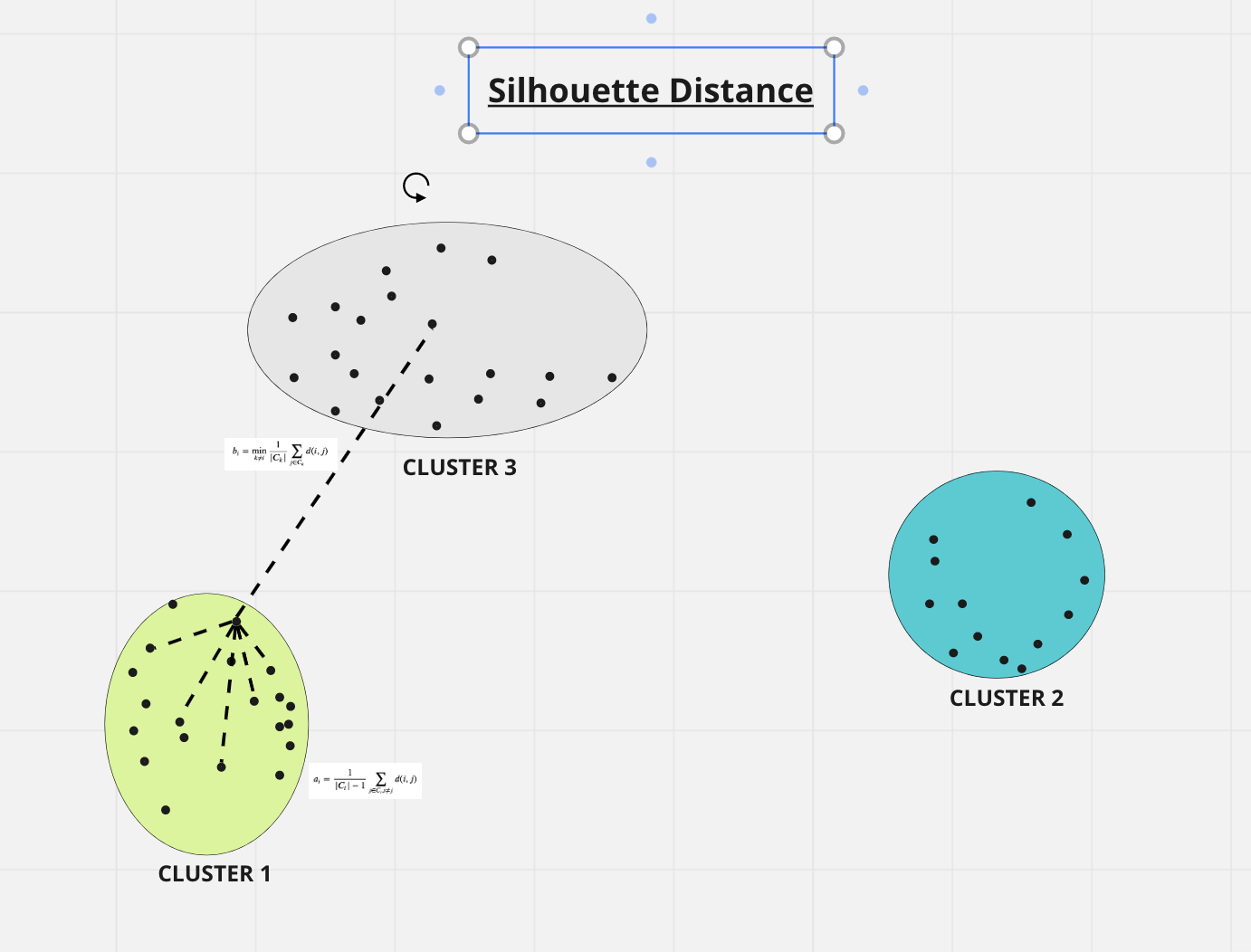

A Brief History: Who Developed It?

The Silhouette Score was introduced in 1987 by Belgian statistician Peter J. Rousseeuw as a measure of how well each data point fits its assigned cluster compared with the nearest alternative cluster. Over time, it has become a cornerstone metric for assessing clustering quality in …
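The silhouette of a point i compares two quantities. The first, a(i), is the point's mean distance to the other members of its own cluster. The second, b(i), is its mean distance to the points of the nearest other cluster. The score is s(i) = (b(i) − a(i)) / max(a(i), b(i)). The plain-Python sketch below averages s(i) over all points. It assumes Euclidean distance and at least two clusters, and it follows the common convention that a point alone in its cluster scores 0. The function name is hypothetical; in practice a library implementation would normally be used.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient s(i) = (b - a) / max(a, b) over all points."""
    clusters = {}
    for p, c in zip(points, labels):
        clusters.setdefault(c, []).append(p)
    scores = []
    for p, c in zip(points, labels):
        own = clusters[c]
        if len(own) == 1:
            scores.append(0.0)  # convention: s(i) = 0 for singleton clusters
            continue
        # a: mean distance to the other members of p's own cluster
        # (the sum includes p itself at distance 0, hence len(own) - 1).
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the closest other cluster.
        b = min(sum(math.dist(p, q) for q in members) / len(members)
                for other, members in clusters.items() if other != c)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(points, [0, 0, 1, 1])  # tight, well-separated clusters: ≈ 0.90
bad = silhouette_score(points, [0, 1, 0, 1])   # each cluster straddles the gap: ≈ -0.45
```

Scores near 1 indicate compact, well-separated clusters. Negative scores mean that, on average, points sit closer to another cluster than to their own.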

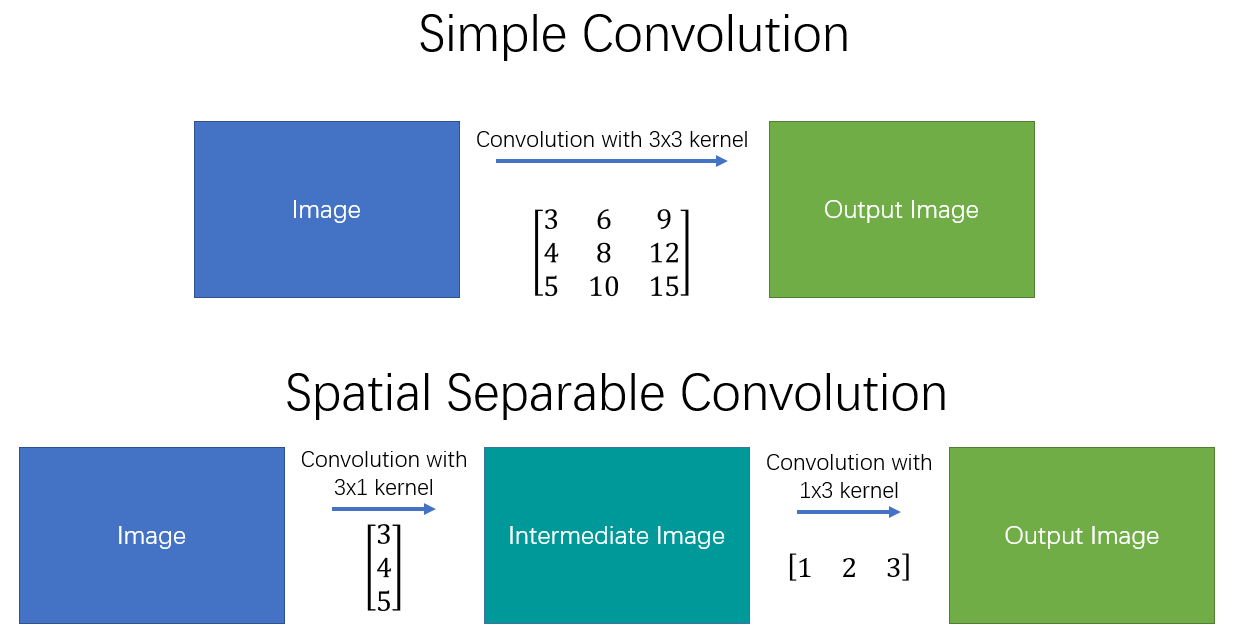

A Brief History of Separable and Transpose Convolutions

Imagine a versatile crafting toolkit, refined over decades to balance precision and scale. Separable convolutions, rooted in the field of signal processing, became popular in the 1990s for efficiently tackling detailed patterns. …
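In the signal-processing sense, a kernel is separable when it factors as the outer product of a column filter and a row filter. Applying the two 1-D filters one after the other then reproduces the 2-D result exactly. For a K×K kernel, this needs about 2K multiplications per output instead of K². The sketch below checks this on a Sobel-style kernel with integer inputs, using cross-correlation as most deep-learning libraries do.

This example covers spatial separability only. The depthwise separable convolutions used in CNNs factor the operation differently: a per-channel spatial filter is followed by a 1×1 convolution that mixes channels. Transposed convolutions are not covered here. The function names and test image are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Direct 2-D 'valid' cross-correlation (no padding): K*K multiplies per output."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
             for j in range(out_w)] for i in range(out_h)]

def separable_conv2d_valid(image, col, row):
    """Same result for a rank-1 kernel K[u][v] = col[u] * row[v], as two 1-D passes."""
    # Horizontal pass: filter every image row with the row filter.
    tmp = [[sum(r[j + v] * row[v] for v in range(len(row)))
            for j in range(len(r) - len(row) + 1)] for r in image]
    # Vertical pass: filter the intermediate columns with the column filter.
    return [[sum(tmp[i + u][j] * col[u] for u in range(len(col)))
             for j in range(len(tmp[0]))] for i in range(len(tmp) - len(col) + 1)]

image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(5)]  # 5x6 test image
col, row = [1, 2, 1], [-1, 0, 1]             # Sobel-style kernel factors
kernel = [[c * r for r in row] for c in col]  # full 3x3 kernel as an outer product
full = conv2d_valid(image, kernel)
fast = separable_conv2d_valid(image, col, row)
```

Both functions return the same 3×4 output. The separable version performs 3 + 3 multiplications per output, while the direct version performs 9.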

Sanger’s network, introduced by Terence D. Sanger in 1989, is a neural network model designed for online principal component extraction, enhancing feature extraction in unsupervised learning systems.


It simplifies complex datasets by learning their leading principal components, which makes it valuable for dimensionality reduction and data compression. Australian government agencies, such as the Australian Bureau of Statistics and Transport for NSW, use Sanger’s network for tasks like census data processing and real-time traffic analysis, respectively. General-purpose libraries such as scikit-learn and TensorFlow can be used to implement it in various applications.
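Sanger's rule is also known as the Generalized Hebbian Algorithm. It updates each weight row wᵢ online as Δwᵢ = η yᵢ (x − Σ_{k≤i} yₖ wₖ), where y = W x. Subtracting the earlier components is what pushes row i toward the i-th principal component; without it, every row would collapse onto the first. The pure-Python sketch below uses zero-mean 2-D synthetic data with a known dominant direction u1, so the learned first row can be checked against it. The data generator, learning rate, step count, and function name are illustrative assumptions.

```python
import math
import random

def sanger_step(W, x, lr):
    """One online update of Sanger's rule (Generalized Hebbian Algorithm).

    W:  list of weight rows, one per component being extracted
    x:  a single zero-mean input vector
    lr: learning rate (eta)
    """
    y = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in W]  # outputs y = W x
    new_W = []
    for i, w in enumerate(W):
        # Residual after subtracting the reconstruction from rows 0..i;
        # this deflation keeps row i from converging to an earlier component.
        residual = [x_j - sum(y[k] * W[k][j] for k in range(i + 1))
                    for j, x_j in enumerate(x)]
        new_W.append([w_j + lr * y[i] * r_j for w_j, r_j in zip(w, residual)])
    return new_W

# Synthetic zero-mean 2-D data: std 3.0 along u1, std 0.5 along u2.
rng = random.Random(0)
u1 = (1 / math.sqrt(2), 1 / math.sqrt(2))
u2 = (1 / math.sqrt(2), -1 / math.sqrt(2))
W = [[rng.uniform(-0.1, 0.1) for _ in range(2)] for _ in range(2)]
for _ in range(20000):
    a, b = rng.gauss(0.0, 3.0), rng.gauss(0.0, 0.5)
    x = [a * u1[j] + b * u2[j] for j in range(2)]
    W = sanger_step(W, x, lr=0.002)

first = W[0]
alignment = abs(first[0] * u1[0] + first[1] * u1[1])  # |w0 . u1|
norm = math.hypot(first[0], first[1])                 # length of w0
```

After training, the first row should lie close to ±u1 with roughly unit length. The second row is pulled toward the lower-variance direction u2, but it typically needs many more updates, because its learning signal scales with the much smaller variance along u2.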