A Brief History: Who Developed Semi-Supervised Scenarios?

The concept of semi-supervised learning (SSL) was introduced in the late 1990s to address the growing need to utilize unlabeled data in machine learning. Researchers like Xiaojin Zhu pioneered frameworks for combining labeled and unlabeled data, significantly reducing reliance on fully labeled datasets. Today, SSL and semi-supervised scenarios are critical tools in industries like healthcare, environmental science, and public policy, where data labeling is time-consuming and costly.

What Is a Semi-Supervised Scenario?

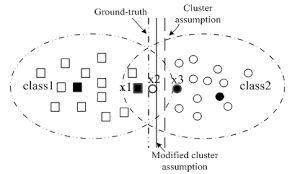

A semi-supervised scenario is a machine learning approach where a model is trained using a combination of labeled and unlabeled data. It bridges the gap between supervised learning, which relies entirely on labeled data, and unsupervised learning, which uses none.

This approach mirrors human learning: limited explicit instruction (labeled data) combined with observation and exploration (unlabelled data) creates a deeper understanding and better outcomes.

Why Is It Used? What Challenges Does It Address?

Semi-supervised scenarios solve several challenges in data processing and machine learning:

- Reducing Labeling Costs: Minimizes dependency on expensive manual labeling.

- Leveraging Unlabeled Data: Utilizes large, readily available, and inexpensive unlabeled datasets.

- Improving Model Accuracy: Enhances generalization by exposing models to diverse, representative examples.

Organizations employing semi-supervised scenarios save time and resources while achieving scalable, high-performing machine learning models.

How Is It Used?

- Start with Labeled Data: Train the model on a small, high-quality labeled dataset.

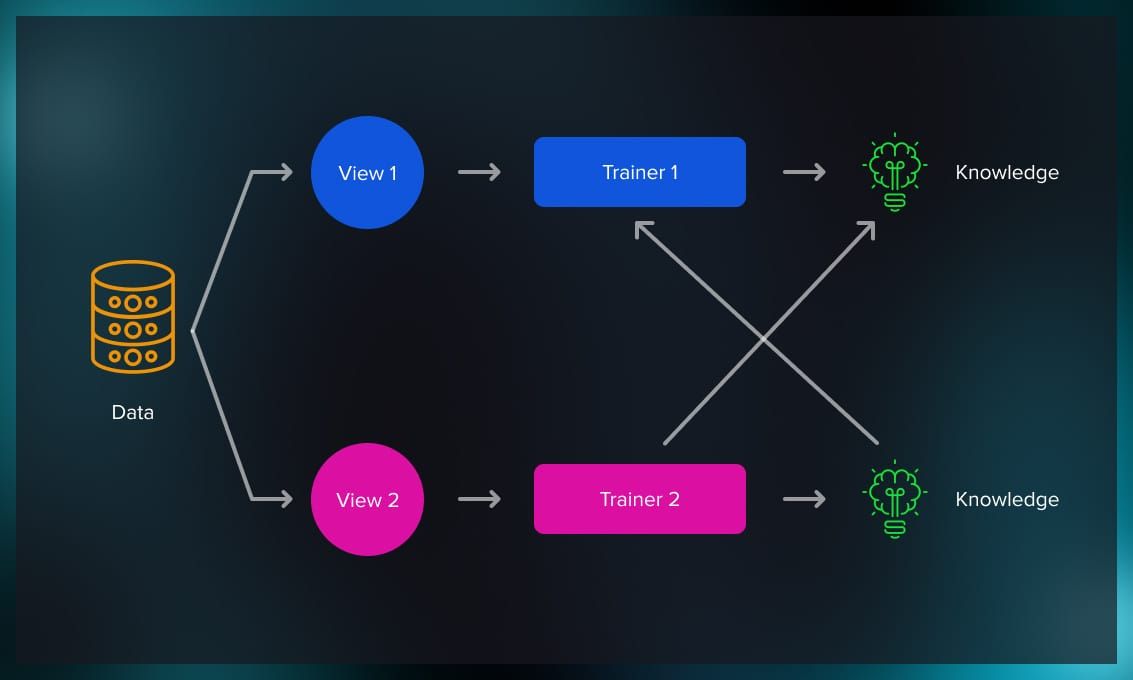

- Incorporate Unlabeled Data: Apply techniques like pseudo-labeling or clustering to integrate unlabeled examples.

- Refine Iteratively: Retrain the model iteratively to improve predictions and consistency.

Different Types of Semi-Supervised Scenarios

- Self-Training: Uses the model’s predictions to generate pseudo-labels for unlabeled data, which are then used for further training.

- Consistency Regularization: Ensures the model generates consistent predictions, even when input data undergo transformations.

- Graph-Based Learning: Propagates labels through a graph structure to classify unlabeled data points effectively.

Key Features

- Cost-Efficient: Reduces reliance on large labeled datasets, lowering annotation costs.

- Scalable: Handles large datasets effectively, especially when labeled data is limited.

- Versatile: Applies to tasks like classification, clustering, and regression, making it adaptable to various industries.

Software and Tools Supporting Semi-Supervised Scenarios

- Python Libraries:

- Scikit-learn: Includes label propagation and label spreading methods.

- TensorFlowand PyTorch: Provide frameworks for implementing advanced SSL algorithms.

- FastAI: Simplifies semi-supervised model development with user-friendly abstractions.

- Platforms: Tools like Google Colab and Jupyter Notebooks offer interactive environments for testing semi-supervised scenarios.

3 Industry Application Examples in Australian Governmental Agencies

- Healthcare (Department of Health):

- Application: Predicting disease trends from patient records.

- Use of Semi-Supervised Scenarios: Combines limited labeled diagnostic data with large unlabeled datasets to improve accuracy.

- Environmental Monitoring (Department of Agriculture, Water, and the Environment):

- Application: Classifying satellite images for deforestation tracking.

- Use of Semi-Supervised Scenarios: Leverages labeled environmental data and large unlabeled imagery to enhance monitoring efficiency.

- Public Policy (Australian Bureau of Statistics):

- Application: Analyzing census data to forecast population trends.

- Use of Semi-Supervised Scenarios: Bridges gaps in partially labeled datasets to generate accurate demographic predictions.

How interested are you in uncovering even more about this topic? Our next article dives deeper into [insert next topic], unravelling insights you won’t want to miss. Stay curious and take the next step with us!