A Brief History: Who Developed Inductive Learning?

Inductive learning, a fundamental concept in machine learning, has its roots in inductive reasoning, which philosophers have studied since Aristotle. In the 20th century, researchers such as Alan Turing and Tom Mitchell helped carry these principles into machine learning, where models are built to generalize from data rather than follow hand-written rules. Today, inductive learning is widely used in artificial intelligence for tasks such as classification, regression, and pattern recognition.

What Is Inductive Learning?

Inductive learning, most commonly encountered as supervised learning, is an approach in which models generalize patterns from labeled data to make predictions on new, unseen examples. It enables AI systems to learn rules from training datasets and apply them in real-world scenarios.

This process is similar to planting seeds: with proper data (seeds) and training (care), models (plants) grow to provide fruitful predictions.
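To make the idea concrete, here is a minimal sketch in plain Python. It induces a single threshold rule from a handful of labeled examples and then applies that rule to inputs it has never seen. The data (number of links in an email, with a spam label) and the function names `learn_threshold` and `predict` are hypothetical and chosen only for illustration.

```python
# Hypothetical toy data: (number of links in an email, label), 1 = spam, 0 = not spam.
training_data = [(0, 0), (1, 0), (2, 0), (5, 1), (7, 1), (9, 1)]


def learn_threshold(examples):
    """Induce a rule 'predict 1 if x > t' by choosing the t with the fewest training errors."""
    values = sorted(x for x, _ in examples)
    # Candidate thresholds: midpoints between consecutive sorted feature values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(t):
        # Count training examples the rule 'x > t' would label incorrectly.
        return sum((x > t) != bool(y) for x, y in examples)

    return min(candidates, key=errors)


def predict(threshold, x):
    """Apply the learned rule to a new example."""
    return int(x > threshold)


threshold = learn_threshold(training_data)
unseen = [1, 6]  # inputs that were not part of the training data
predictions = [predict(threshold, x) for x in unseen]
print(threshold, predictions)  # prints: 3.5 [0, 1]
```

The rule is not written by hand; it is derived from the labeled examples, which is the essence of induction. Real models learn far richer rules, but the train-then-apply pattern is the same.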

Why Is It Used? What Challenges Does It Address?

Inductive learning tackles major challenges in data-driven decision-making:

- Pattern Detection: Identifies meaningful trends in labeled datasets.

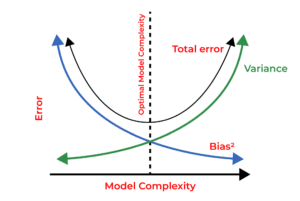

- Generalization: Applies learned rules to predict outcomes in new scenarios.

- Real-World Applications: Powers AI systems like fraud detection, spam filters, and recommendation engines.

Without inductive learning, many machine learning models would lack the adaptability needed for real-world tasks, limiting their functionality and accuracy.

How Is It Used?

- Data Preparation: Provide labeled training datasets.

- Training Phase: Use algorithms like decision trees, neural networks, or probabilistic models to learn patterns.

- Prediction Phase: Apply learned rules to classify or predict outcomes for new data.
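The three steps above can be sketched with scikit-learn. This is an illustrative example, not a prescribed pipeline: the Iris dataset, the 25% hold-out split, and the `max_depth=3` setting are all assumptions made for the sake of a short, runnable demo.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# 1. Data preparation: labeled examples (features X, labels y),
#    with a portion held back to stand in for "new" data.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# 2. Training phase: the algorithm learns patterns from the labeled training set.
model = DecisionTreeClassifier(max_depth=3, random_state=0)
model.fit(X_train, y_train)

# 3. Prediction phase: the learned rules are applied to examples the model has not seen.
y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Held-out accuracy: {accuracy:.2f}")
```

Scoring on held-out data rather than on the training set is what indicates whether the model has generalized or merely memorized its training examples.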

Different Types of Inductive Learning

- Instance-Based Learning: Compares new examples to stored training data (e.g., k-Nearest Neighbors).

- Rule-Based Learning: Extracts explicit rules from training data (e.g., decision trees).

- Probabilistic Learning: Uses statistical models to predict outcomes (e.g., Naive Bayes).
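As a rough illustration, the sketch below fits one scikit-learn model from each of the three families on the same data. The dataset (Iris), the split, and the hyperparameters (`n_neighbors=5`, `max_depth=2`) are assumptions picked for brevity, and the scores should not be read as a general ranking of the methods.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.25, random_state=0, stratify=iris.target
)

# One representative model per family described above.
models = {
    "instance-based (k-NN)": KNeighborsClassifier(n_neighbors=5),
    "rule-based (decision tree)": DecisionTreeClassifier(max_depth=2, random_state=0),
    "probabilistic (Gaussian Naive Bayes)": GaussianNB(),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # accuracy on held-out data
    print(f"{name}: {scores[name]:.2f}")

# A decision tree's learned rules can be printed as explicit if/else conditions.
tree = models["rule-based (decision tree)"]
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```

The printed tree shows why decision trees are often described as rule-based: each path from the root to a leaf reads as a human-readable condition on the input features.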

Key Features

- Accurate Predictions: Learns patterns from labeled data and can predict reliably when the training data is representative of the cases the model will see later.

- Flexibility: Supports tasks like classification, regression, and anomaly detection.

- Scalability: Handles datasets of varying sizes and complexities.

Software and Tools Supporting Inductive Learning

- Python Libraries:

  - Scikit-learn: Provides tools for decision trees, Naive Bayes, and k-NN.

  - TensorFlow and PyTorch: Support scalable neural network implementations.

- WEKA: A Java-based platform offering rule-based and probabilistic learning methods.

- Platforms: Interactive tools like Google Colab and Jupyter Notebooks enable testing and visualizing inductive learning models.

Three Application Examples in Australian Government Agencies

- Healthcare (Department of Health):

  - Application: Predicting disease outcomes from patient records.

  - Use of Inductive Learning: Models analyze historical data to identify patterns and improve diagnostic accuracy.

- Environmental Management (Department of Agriculture, Water, and the Environment):

  - Application: Classifying species in biodiversity surveys.

  - Use of Inductive Learning: Utilizes labeled ecological datasets to classify species in new regions efficiently.

- Transportation (Department of Infrastructure, Transport, and Regional Development):

  - Application: Predicting traffic congestion for urban planning.

  - Use of Inductive Learning: Uses historical traffic data to forecast patterns and optimize road infrastructure.
