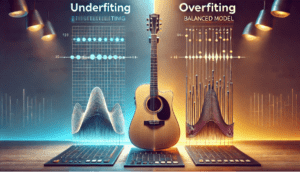

Imagine tuning a guitar. If the strings are too loose, the notes sound flat and off-key; this is like underfitting, where a model is too simple to capture the patterns in the data. If the strings are too tight, the notes sound sharp; this is like overfitting, where a model captures every detail, including noise, and fails to generalize. Just as a well-tuned guitar produces harmonious music, a well-balanced machine learning model avoids both underfitting and overfitting and delivers reliable predictions.

A Brief History of Underfitting and Overfitting

The concepts of underfitting and overfitting have their roots in early statistical analysis and regression modeling. As machine learning gained popularity in the late 20th century, researchers such as Vladimir Vapnik formalized these ideas in statistical learning theory, which relates a model's capacity to how well it generalizes. Today, managing underfitting and overfitting is central to building robust artificial intelligence (AI) and predictive analytics models.

What Are Underfitting and Overfitting?

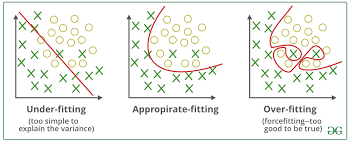

- Underfitting occurs when a machine learning model is too simplistic, failing to capture the patterns in the data. This results in poor performance on both training and test datasets.

- Overfitting happens when a model is too complex for the amount of training data available, memorizing noise and outliers instead of the underlying pattern, which reduces its ability to generalize to unseen data.

Key Differences:

| Aspect | Underfitting | Overfitting |
|---|---|---|
| Model Complexity | Too simple | Too complex |
| Training Error | High | Low |
| Test Error | High | High |
| Generalization | Poor | Poor |
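The error pattern in the table can be reproduced with a small experiment. The sketch below is a minimal illustration under assumed conditions: synthetic data drawn from a noisy sine wave, and NumPy's `Polynomial.fit` as the model, with the polynomial degree standing in for model complexity. A degree-1 fit should show high training and test error (underfitting), while a degree-12 fit should drive training error down while its test error stays well above it (overfitting).

```python
import numpy as np

# Assumed synthetic data: a noisy sine wave on [0, 1].
rng = np.random.default_rng(seed=0)


def make_data(n):
    """Draw n points with y = sin(2*pi*x) plus Gaussian noise (std 0.2)."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


x_train, y_train = make_data(20)
x_test, y_test = make_data(200)

results = {}  # degree -> (training MSE, test MSE)
for degree in (1, 3, 12):
    # Polynomial.fit rescales x to [-1, 1] internally, which keeps high degrees well conditioned.
    model = np.polynomial.Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(y_train, model(x_train)), mse(y_test, model(x_test)))

for degree, (train_err, test_err) in results.items():
    print(f"degree={degree:2d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

Degree 3 works as a middle ground here only because one period of a sine wave is well approximated by a cubic; with different data, the balanced complexity would differ.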

Why Are These Concepts Important?

Understanding and addressing underfitting and overfitting helps ensure that machine learning models are both accurate and generalizable:

- Improved Accuracy: Matching model complexity to the data minimizes error on unseen data, not just on the training set.

- Generalization: A well-tuned model performs effectively on unseen data.

- Efficiency: Identifying these issues early saves time and computational resources.

For example, in healthcare analytics, an underfit model might overlook critical disease trends, while an overfit model could latch onto spurious patterns in historical records, leading to unreliable diagnoses.

How Are Underfitting and Overfitting Addressed?

- Underfitting Solutions:

  - Increase model complexity by adding layers, features, or polynomial degree.

  - Train the model longer so it can learn the patterns in the data.

  - Use feature engineering to provide the model with more informative inputs.

- Overfitting Solutions:

  - Apply regularization techniques such as L1 or L2 penalties, which discourage large weights and keep the model simpler.

  - Use early stopping to halt training once performance on a validation set stops improving, before the model starts memorizing noise.

  - Increase the size of the training dataset to improve generalization.
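L2 regularization from the list above can be sketched in closed form as ridge regression, which minimizes squared error plus `lam` times the squared norm of the weights. This is an illustrative sketch, not any particular library's implementation: it assumes synthetic sine-wave data and degree-9 polynomial features, and shows that raising the penalty strength `lam` shrinks the coefficients while training error rises slightly, trading a perfect fit to the training set for a simpler model.

```python
import numpy as np

# Assumed synthetic data: 20 noisy samples of a sine wave.
rng = np.random.default_rng(seed=1)
x = rng.uniform(0.0, 1.0, 20)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 20)


def poly_features(x, degree):
    """Columns x, x^2, ..., x^degree, standardized so one penalty suits every column."""
    X = np.column_stack([x ** d for d in range(1, degree + 1)])
    return (X - X.mean(axis=0)) / X.std(axis=0)


def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: w = (X^T X + lam * I)^(-1) X^T y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


X = poly_features(x, degree=9)
y_centered = y - y.mean()  # centering y plays the role of an unpenalized intercept

lams = (1e-3, 1e-1, 10.0)
norms, train_mse = [], []
for lam in lams:
    w = ridge_fit(X, y_centered, lam)
    norms.append(float(np.linalg.norm(w)))
    train_mse.append(float(np.mean((y_centered - X @ w) ** 2)))

for lam, norm, err in zip(lams, norms, train_mse):
    print(f"lam={lam:<6}  ||w||={norm:8.3f}  train MSE={err:.4f}")
```

In practice the penalty strength is chosen on validation data rather than by hand; scikit-learn's `Ridge` (L2) and `Lasso` (L1) estimators expose it as the `alpha` parameter.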

For instance, in climate modeling, guarding against overfitting helps keep temperature predictions reliable beyond the historical data a model was trained on, while avoiding underfitting ensures the model captures critical environmental patterns.
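Early stopping, one of the overfitting remedies listed above, watches a validation set during training and keeps the weights from the epoch with the lowest validation error. Keras exposes this as the `EarlyStopping` callback (with `patience` and `restore_best_weights` options); the sketch below hand-rolls the same idea with NumPy and full-batch gradient descent. The data, the degree-9 polynomial features, the learning rate, and the `patience` value are all assumptions chosen for illustration.

```python
import numpy as np

# Assumed synthetic data: 60 noisy sine-wave samples split 40/20 into training and validation.
rng = np.random.default_rng(seed=2)
x = rng.uniform(0.0, 1.0, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 60)
x_tr, y_tr, x_val, y_val = x[:40], y[:40], x[40:], y[40:]

# Degree-9 polynomial features, standardized with training statistics, plus a bias column.
degree = 9
raw_tr = np.column_stack([x_tr ** d for d in range(1, degree + 1)])
raw_val = np.column_stack([x_val ** d for d in range(1, degree + 1)])
mu, sigma = raw_tr.mean(axis=0), raw_tr.std(axis=0)
X_tr = np.column_stack([np.ones(len(x_tr)), (raw_tr - mu) / sigma])
X_val = np.column_stack([np.ones(len(x_val)), (raw_val - mu) / sigma])


def train_with_early_stopping(X_tr, y_tr, X_val, y_val, lr=0.05, max_epochs=5000, patience=50):
    """Full-batch gradient descent on MSE that stops once the validation loss has not
    improved for `patience` consecutive epochs, and returns the best weights seen."""
    w = np.zeros(X_tr.shape[1])
    best_w, best_loss, best_epoch = w.copy(), np.inf, 0
    val_history = []
    for epoch in range(1, max_epochs + 1):
        grad = 2.0 / len(y_tr) * X_tr.T @ (X_tr @ w - y_tr)
        w = w - lr * grad
        val_loss = float(np.mean((X_val @ w - y_val) ** 2))
        val_history.append(val_loss)
        if val_loss < best_loss:
            best_w, best_loss, best_epoch = w.copy(), val_loss, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_w, best_epoch, val_history


w_best, best_epoch, val_history = train_with_early_stopping(X_tr, y_tr, X_val, y_val)
print(f"ran {len(val_history)} epochs; best validation MSE {min(val_history):.4f} at epoch {best_epoch}")
```

If the validation loss is still improving when `max_epochs` is reached, the loop simply runs to the end; the returned weights are always those from the best validation epoch.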

Different Types of Underfitting and Overfitting

- Underfitting:

  - Structural Underfitting: The model type (e.g., linear regression) is too simple for the data.

  - Training Underfitting: The model is trained for too few iterations or with a poorly suited optimization algorithm or settings.

- Overfitting:

  - Data Overfitting: The model depends heavily on noise or irrelevant features in the data.

  - Model Overfitting: Excessive parameters or features lead the model to memorize the training data.
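Training underfitting can appear even when the model is flexible enough in principle. In this minimal sketch, which assumes synthetic data from the line y = 3x + 1 plus noise, the same linear model is trained by gradient descent for 5 and for 500 iterations. The under-trained version should leave a much higher training error, the signature of underfitting.

```python
import numpy as np

# Assumed synthetic data: y = 3x + 1 plus small Gaussian noise.
rng = np.random.default_rng(seed=3)
x = rng.uniform(0.0, 1.0, 100)
y = 3.0 * x + 1.0 + rng.normal(0.0, 0.1, 100)
X = np.column_stack([np.ones_like(x), x])  # bias column + feature


def train_linear_gd(X, y, n_iters, lr=0.1):
    """Full-batch gradient descent on mean squared error, starting from zero weights."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iters):
        grad = 2.0 / len(y) * X.T @ (X @ w - y)
        w -= lr * grad
    return w


def train_mse(w):
    return float(np.mean((X @ w - y) ** 2))


mse_short = train_mse(train_linear_gd(X, y, n_iters=5))
mse_long = train_mse(train_linear_gd(X, y, n_iters=500))
print(f"5 iterations: train MSE={mse_short:.3f}; 500 iterations: train MSE={mse_long:.3f}")
```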

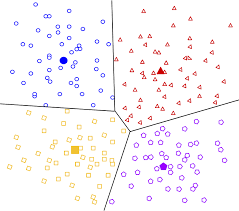

Categories of Models Prone to Underfitting or Overfitting

- Prone to Underfitting:

  - Linear regression models for non-linear datasets.

  - Shallow neural networks with insufficient layers or neurons.

- Prone to Overfitting:

  - Deep neural networks trained without regularization.

  - Decision trees with excessive branching and depth.
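To see why deep decision trees are prone to overfitting, the sketch below grows a simple one-dimensional regression tree with a configurable `max_depth`. It is a hypothetical, minimal implementation written for illustration (not scikit-learn's `DecisionTreeRegressor`), run on assumed noisy sine-wave data. A tree grown without a depth limit keeps splitting until each leaf holds a single training point, so its training error drops to zero even though its test error does not.

```python
import numpy as np


def build_tree(x, y, depth=0, max_depth=None):
    """Grow a 1-D regression tree by greedily choosing the split that minimizes the
    children's total squared error. A leaf is a float (the mean target); an internal
    node is a tuple (threshold, left_subtree, right_subtree)."""
    if (max_depth is not None and depth >= max_depth) or len(y) < 2 or np.all(y == y[0]):
        return float(np.mean(y))
    order = np.argsort(x)
    xs, ys = x[order], y[order]
    best_sse, best_threshold = np.inf, None
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue  # no threshold separates identical x values
        sse = ((ys[:i] - ys[:i].mean()) ** 2).sum() + ((ys[i:] - ys[i:].mean()) ** 2).sum()
        if sse < best_sse:
            best_sse, best_threshold = sse, (xs[i - 1] + xs[i]) / 2.0
    if best_threshold is None:
        return float(np.mean(y))
    mask = x <= best_threshold
    return (best_threshold,
            build_tree(x[mask], y[mask], depth + 1, max_depth),
            build_tree(x[~mask], y[~mask], depth + 1, max_depth))


def predict(tree, x):
    """Route each input down the tree to a leaf value."""
    preds = []
    for xi in x:
        node = tree
        while isinstance(node, tuple):
            threshold, left, right = node
            node = left if xi <= threshold else right
        preds.append(node)
    return np.array(preds)


# Assumed synthetic data: noisy sine-wave samples.
rng = np.random.default_rng(seed=4)


def make_data(n):
    x = rng.uniform(0.0, 1.0, n)
    return x, np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, n)


x_train, y_train = make_data(40)
x_test, y_test = make_data(400)

scores = {}  # max_depth -> (training MSE, test MSE); None means "no depth limit"
for max_depth in (1, 3, None):
    tree = build_tree(x_train, y_train, max_depth=max_depth)
    scores[max_depth] = (float(np.mean((predict(tree, x_train) - y_train) ** 2)),
                         float(np.mean((predict(tree, x_test) - y_test) ** 2)))

for max_depth, (train_err, test_err) in scores.items():
    print(f"max_depth={max_depth!s:>4}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

Capping `max_depth` (or pruning) is the tree-specific form of limiting model complexity.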

Software and Tools to Address Underfitting and Overfitting

Popular tools include:

- Python Libraries:

  - Scikit-learn: Provides cross-validation, regularized models, and evaluation tools.

  - TensorFlow/Keras: Offers dropout layers, early stopping callbacks, and tools for tuning model complexity.

- R: Packages such as caret provide resampling and tuning tools for detecting and mitigating these issues.

- MATLAB: Provides visualization and model-refinement tools for assessing and resolving underfitting or overfitting.
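Cross-validation, which scikit-learn provides through `sklearn.model_selection.cross_val_score` and `KFold`, is a common way to choose model complexity without touching the test set. The sketch below hand-rolls 5-fold cross-validation in NumPy to pick a polynomial degree; the data and the candidate degrees are assumptions for illustration. Low degrees should score poorly because they underfit, and the degree with the lowest average validation error is selected.

```python
import numpy as np


def k_fold_cv_mse(x, y, degree, k=5, seed=0):
    """Average held-out MSE of a polynomial fit of the given degree over k folds."""
    indices = np.random.default_rng(seed).permutation(len(x))
    folds = np.array_split(indices, k)
    errors = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        model = np.polynomial.Polynomial.fit(x[train_idx], y[train_idx], deg=degree)
        errors.append(np.mean((model(x[val_idx]) - y[val_idx]) ** 2))
    return float(np.mean(errors))


# Assumed synthetic data: 60 noisy sine-wave samples.
rng = np.random.default_rng(seed=5)
x = rng.uniform(0.0, 1.0, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 60)

cv_scores = {d: k_fold_cv_mse(x, y, d) for d in range(1, 11)}
best_degree = min(cv_scores, key=cv_scores.get)
print(f"best degree by 5-fold CV: {best_degree} (MSE {cv_scores[best_degree]:.3f})")
```

Using the same fold split (fixed `seed`) for every candidate degree keeps the comparison fair across degrees.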

Industry Applications in Australian Governmental Agencies

- Healthcare Analytics: The Australian Institute of Health and Welfare fine-tunes models to avoid underfitting or overfitting, ensuring reliable disease predictions and resource allocation.

- Environmental Forecasting: Geoscience Australia improves satellite data analysis by balancing model complexity to deliver accurate land-use and resource management predictions.

- Traffic Management: Transport for NSW optimizes models to avoid underfitting or overfitting, ensuring accurate traffic flow predictions and efficient public transport schedules.

