A Brief History: Who Developed It?

The back-propagation algorithm, built on ideas developed in the 1970s and popularised for neural networks by David Rumelhart, Geoffrey Hinton, and Ronald J. Williams in a 1986 paper, became a cornerstone of machine learning. By making it practical to train networks with hidden layers, it enabled neural networks to tackle complex challenges and helped revolutionise artificial intelligence.

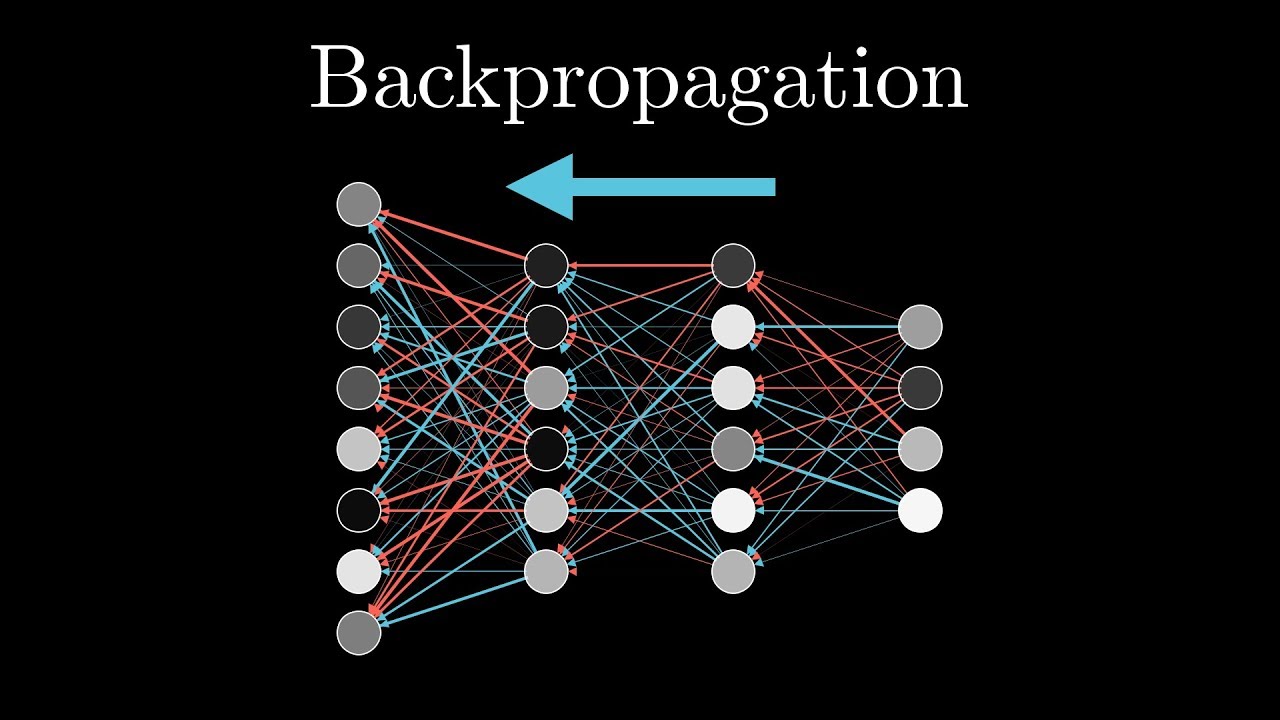

What Is Back-Propagation?

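Back-propagation (short for "backward propagation of errors") is the algorithm used to compute the gradient of a neural network's error with respect to each of its weights. It does this by applying the chain rule layer by layer, from the output back to the input. Paired with a gradient-based optimiser such as gradient descent, it forms a feedback loop: errors in predictions are propagated backward, and the weights are adjusted to improve the model’s performance.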
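To make the feedback loop concrete, consider a single sigmoid neuron. This is an illustrative simplification; real networks stack many such units. The symbols are assumptions chosen for this example: input $x$, weight $w$, bias $b$, target $y$, squared-error loss $L$ and learning rate $\eta$. The chain rule gives the weight's gradient, and gradient descent uses it to update the weight:

$$
z = wx + b,\qquad \hat{y} = \sigma(z),\qquad L = \tfrac{1}{2}(\hat{y} - y)^2
$$

$$
\frac{\partial L}{\partial w} = \frac{\partial L}{\partial \hat{y}}\,\frac{\partial \hat{y}}{\partial z}\,\frac{\partial z}{\partial w} = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x,
\qquad w \leftarrow w - \eta\,\frac{\partial L}{\partial w}
$$

In a multi-layer network the same factorisation is applied repeatedly. The term $(\hat{y} - y)\,\sigma'(z)$ computed at the output is reused when computing the gradients of earlier layers, which is what keeps the backward pass efficient.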

Why Is It Being Used? What Challenges Are Being Addressed?

Back-propagation is widely used due to its significant benefits and problem-solving capabilities:

Key Benefits:

- Makes learning in neural networks efficient: one backward pass yields the gradient of the error with respect to every weight, which is then used to reduce the error.

- Powers breakthroughs in artificial intelligence across various industries.

Challenges Solved:

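- Makes training multi-layer networks practical by assigning responsibility for the output error to the weights in hidden layers.

- Removes the need to adjust weights manually; every update is computed from the gradients.

- Scales to large datasets, especially when combined with stochastic or mini-batch updates.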
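The efficiency claim can be checked with a small experiment. The sketch below is written in plain Python under illustrative assumptions: a hypothetical 2-2-1 sigmoid network, randomly chosen weights, one training example and a squared-error loss. It computes all nine gradients with a single backward pass. It then compares them against central finite differences, which need two extra forward passes per weight.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Parameter layout (an assumption for this sketch):
# p[0:4] hidden weights, p[4:6] hidden biases, p[6:8] output weights, p[8] output bias
def forward(p, x):
    h = [sigmoid(p[2 * j] * x[0] + p[2 * j + 1] * x[1] + p[4 + j]) for j in range(2)]
    y_hat = sigmoid(p[6] * h[0] + p[7] * h[1] + p[8])
    return h, y_hat

def loss(p, x, y):
    _, y_hat = forward(p, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(p, x, y):
    """Gradients for all parameters from one forward and one backward pass."""
    h, y_hat = forward(p, x)
    # Output layer: dL/dz_out = (y_hat - y) * sigmoid'(z_out)
    d_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    # Hidden layer: send the output error back through p[6:8] and each sigmoid
    d_hid = [d_out * p[6 + j] * h[j] * (1.0 - h[j]) for j in range(2)]
    grad = [0.0] * 9
    for j in range(2):
        grad[2 * j] = d_hid[j] * x[0]
        grad[2 * j + 1] = d_hid[j] * x[1]
        grad[4 + j] = d_hid[j]
        grad[6 + j] = d_out * h[j]
    grad[8] = d_out
    return grad

def numerical_grad(p, x, y, eps=1e-6):
    """Central finite differences: two forward passes per parameter."""
    grad = []
    for k in range(len(p)):
        plus, minus = p[:], p[:]
        plus[k] += eps
        minus[k] -= eps
        grad.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grad

random.seed(0)
params = [random.uniform(-1.0, 1.0) for _ in range(9)]
x, y = [0.5, -1.2], 1.0
analytic = backprop(params, x, y)
numeric = numerical_grad(params, x, y)
max_diff = max(abs(a - n) for a, n in zip(analytic, numeric))
print(f"max |backprop - finite difference| = {max_diff:.2e}")
```

The two sets of gradients should agree to many decimal places. The difference in cost is the point. Finite differences need 18 forward passes for this toy network and millions for a realistic one, whereas back-propagation obtains every gradient from one forward and one backward pass.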

How Is Back-Propagation Used?

The process of back-propagation involves the following steps:

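- Forward Pass: Feeds the inputs through the network to produce a prediction.

- Error Calculation: Measures the difference between the predicted and actual outputs using a loss function.

- Backward Propagation: Propagates the error backward through the network, using the chain rule to compute how much each weight contributed to it.

- Weight Update: Adjusts each weight in the direction that reduces the error, scaled by a learning rate.

- Iteration: Repeats the process until the error stops improving or a set number of training passes is reached.

This iterative cycle lets the model improve gradually with each pass.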
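The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production implementation. A hypothetical 2-3-1 sigmoid network learns the XOR function using full-batch gradient descent, a squared-error loss and a fixed learning rate. Each training pass runs a forward pass, calculates the error, propagates it backward and then updates the weights.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR: a classic task that a network without a hidden layer cannot solve
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
HIDDEN = 3  # hypothetical hidden-layer size

def train(epochs=5000, lr=0.5, seed=1):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
    b1 = [0.0] * HIDDEN
    W2 = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(HIDDEN)]
        gb1 = [0.0] * HIDDEN
        gW2 = [0.0] * HIDDEN
        gb2 = 0.0
        total = 0.0
        for x, y in DATA:
            # Forward pass
            h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(HIDDEN)]
            y_hat = sigmoid(sum(W2[j] * h[j] for j in range(HIDDEN)) + b2)
            # Error calculation (squared error)
            total += 0.5 * (y_hat - y) ** 2
            # Backward propagation: chain rule from the output to the hidden layer
            d_out = (y_hat - y) * y_hat * (1.0 - y_hat)
            for j in range(HIDDEN):
                d_hid = d_out * W2[j] * h[j] * (1.0 - h[j])
                gW2[j] += d_out * h[j]
                gW1[j][0] += d_hid * x[0]
                gW1[j][1] += d_hid * x[1]
                gb1[j] += d_hid
            gb2 += d_out
        # Weight update: gradient descent on the gradients averaged over the batch
        n = len(DATA)
        for j in range(HIDDEN):
            W2[j] -= lr * gW2[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
            b1[j] -= lr * gb1[j] / n
        b2 -= lr * gb2 / n
        # Iteration: record the mean loss of this pass
        losses.append(total / n)
    return losses

losses = train()
print(f"loss: first pass {losses[0]:.4f}, last pass {losses[-1]:.4f}")
```

The recorded loss should fall over the epochs. With other seeds, learning rates or hidden-layer sizes, a small network like this can converge more slowly or stall on a plateau, which is one reason the adaptive methods described below exist.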

Different Types of Back-Propagation

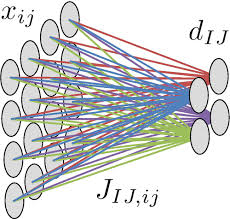

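Back-propagation is typically paired with one of several weight-update strategies:

- Standard (Batch) Back-Propagation: Computes the gradient over the whole training set, then updates the weights using a fixed learning rate.

- Stochastic Back-Propagation: Updates the weights after each individual data point (or small mini-batch), which is noisier but often much faster on large datasets.

- Adaptive Methods: Adjust the learning rate for each weight during training (e.g., AdaGrad, RMSProp, Adam) to accelerate convergence.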
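The three strategies differ only in how the back-propagated gradients become weight updates. The sketch below contrasts them on a deliberately tiny, hypothetical problem: fitting y = w * x to points generated with w = 2. Here "standard" is read as full-batch gradient descent, and Adam stands in as one representative adaptive method. The learning rates and step counts are illustrative choices.

```python
import math
import random

# Hypothetical toy data generated with w = 2
XS = [1.0, 2.0, 3.0, 4.0]
YS = [2.0, 4.0, 6.0, 8.0]

def grad(w, xs, ys):
    """Average gradient of 0.5 * (w * x - y) ** 2 over the given examples."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def batch_gd(lr=0.05, epochs=100):
    w = 0.0
    for _ in range(epochs):
        w -= lr * grad(w, XS, YS)  # one update per pass over all the data
    return w

def stochastic_gd(lr=0.05, epochs=100, seed=0):
    rng = random.Random(seed)
    w = 0.0
    order = list(range(len(XS)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            w -= lr * grad(w, [XS[i]], [YS[i]])  # one update per example
    return w

def adam(lr=0.1, steps=1000, beta1=0.9, beta2=0.999, eps=1e-8):
    w, m, v = 0.0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w, XS, YS)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # step size adapted per parameter
    return w

print(batch_gd(), stochastic_gd(), adam())
```

All three should end close to w = 2. The stochastic version updates the weight four times per epoch, while Adam rescales each step by a running estimate of the gradient's magnitude.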

Key Features of Back-Propagation

Back-propagation is valued for its versatile features:

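- Supports Both Supervised and Unsupervised Learning: Works with any differentiable loss function, so it trains both supervised models and unsupervised ones such as autoencoders.

- Handles Multi-Layer Neural Networks: Applies the chain rule through any number of layers, making it possible to train deep architectures (although very deep networks can suffer from vanishing or exploding gradients).

- Dynamic Parameter Tuning: Updates every weight according to its own gradient at each step, enabling efficient and precise learning.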
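The unsupervised case can be illustrated with a tiny linear autoencoder, where the input serves as its own target. In this minimal sketch, the data, the 2-1-2 layer sizes, the learning rate and the number of epochs are all hypothetical choices. The same kind of backward pass used in supervised training adjusts the encoder and decoder weights to reduce reconstruction error.

```python
import random

# Hypothetical unlabelled data: 2-D points lying on the line x2 = x1
POINTS = [(1.0, 1.0), (2.0, 2.0), (-1.0, -1.0), (0.5, 0.5)]

def train_autoencoder(epochs=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    a = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # encoder weights: 2 -> 1
    b = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # decoder weights: 1 -> 2
    losses = []
    for _ in range(epochs):
        ga, gb, total = [0.0, 0.0], [0.0, 0.0], 0.0
        for x in POINTS:
            h = a[0] * x[0] + a[1] * x[1]          # encode to a single number
            r = [b[0] * h, b[1] * h]               # decode back to 2-D
            err = [r[i] - x[i] for i in range(2)]  # the input is its own target
            total += 0.5 * (err[0] ** 2 + err[1] ** 2)
            # Backward pass: decoder gradients first, then the encoder via dL/dh
            dh = err[0] * b[0] + err[1] * b[1]
            for i in range(2):
                gb[i] += err[i] * h
                ga[i] += dh * x[i]
        for i in range(2):
            a[i] -= lr * ga[i] / len(POINTS)
            b[i] -= lr * gb[i] / len(POINTS)
        losses.append(total / len(POINTS))
    return losses

losses = train_autoencoder()
print(f"reconstruction loss: {losses[0]:.4f} -> {losses[-1]:.6f}")
```

Because the sample points lie on a line, a one-number code is enough to reconstruct them. The reconstruction loss should therefore fall close to zero without any labels being provided.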

Popular Software and Tools for Back-Propagation

Several tools simplify back-propagation implementation:

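- TensorFlow/Keras: Computes gradients automatically, so back-propagation is built into standard deep learning training pipelines.

- PyTorch: Its autograd engine computes gradients automatically while allowing flexible, customised models and training loops.

- Scikit-learn: Its MLPClassifier and MLPRegressor suit basic neural network models.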

- MATLAB: Suitable for educational and research projects.

Industry Applications in Australian Governmental Agencies

Models trained with back-propagation support work across Australian government agencies and industries:

- Healthcare Predictions: Models hospital resource requirements to improve management and patient care.

- Energy Optimisation: Forecasts energy consumption to support efficient grid infrastructure planning.

- Traffic Systems: Enhances public transport scheduling and improves route optimisation.

Adoption Statistics (Global and ANZ)

- Global Influence: Over 85% of AI-driven solutions use back-propagation (Source: AI Systems Global Overview, 2023).

- ANZ Adoption: More than 60% of Australian machine learning projects incorporate back-propagation (Source: ANZ AI Research Initiative, 2024).

Conclusion

The back-propagation algorithm has revolutionised neural network training, enabling breakthroughs across industries. Its efficiency, scalability, and flexibility make it indispensable for tackling complex challenges in modern machine learning. With widespread adoption in Australia and globally, back-propagation continues to drive innovation in artificial intelligence.
