A Brief History: Who Developed It?

AdaBoost.SAMME, an extension of the original AdaBoost algorithm, was proposed by Zhu et al. in 2006. The name stands for Stagewise Additive Modeling using a Multi-class Exponential loss function. The method replaces AdaBoost's two-class exponential loss with a multi-class version, so boosting can be applied directly to problems with more than two categories instead of through a series of binary reductions.

What Is AdaBoost.SAMME?

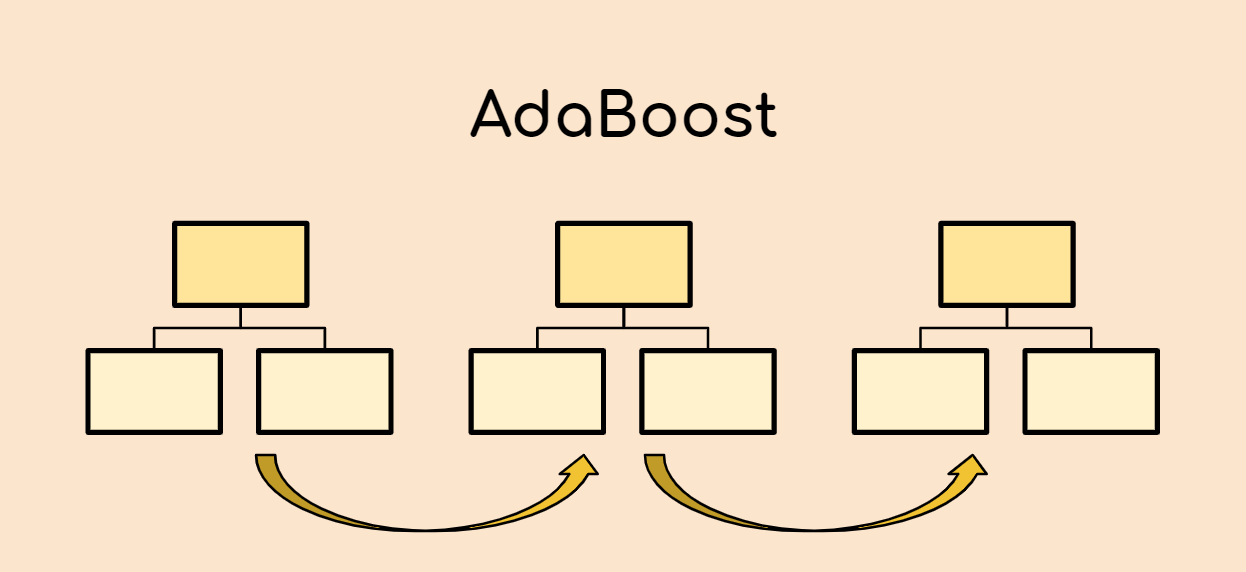

Picture a sports coach refining strategies for players based on their individual performance during a game. AdaBoost.SAMME operates similarly: it iteratively improves predictions across multiple classes by prioritising errors and combining weak models into a strong ensemble for enhanced classification accuracy.

Why Is AdaBoost.SAMME Used?

AdaBoost.SAMME addresses critical challenges in machine learning:

- Multi-Class Classification Capability: Efficiently handles datasets with more than two categories.

- Error Focus: Prioritises misclassified data to iteratively refine predictions.

- Versatility Across Domains: Works with any base learner that supports sample weights, so it applies to tabular data directly and to text or images once they have been converted to features.

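Without AdaBoost.SAMME, multi-class problems are usually handled by reducing them to several binary problems (for example, one-vs-rest) or by AdaBoost.M1, which requires every weak learner to achieve better than 50% weighted accuracy. SAMME only requires each learner to beat random guessing, that is, an accuracy above 1/K for K classes.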
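The relaxed requirement on weak learners can be read off the vote weight that SAMME assigns in round m. The notation below (err for the weighted error, alpha for the vote weight, K for the number of classes) is chosen here for illustration and follows the usual presentation of the algorithm:

```latex
\alpha^{(m)} = \log\frac{1 - \mathrm{err}^{(m)}}{\mathrm{err}^{(m)}} + \log(K - 1),
\qquad
\alpha^{(m)} > 0 \iff \mathrm{err}^{(m)} < 1 - \frac{1}{K}
```

For K = 2 the extra term vanishes and the condition becomes the familiar err < 1/2. For K = 3 and err = 0.6, the first term alone is log(0.4/0.6) ≈ -0.41, but adding log 2 ≈ 0.69 gives a positive weight of about 0.29, so a learner that is wrong 60% of the time still contributes because it beats the 67% error of random guessing.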

How Is AdaBoost.SAMME Used?

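Implementing AdaBoost.SAMME involves these steps:

- Initialise Weights: Give every training sample the same weight.

- Train a Weak Learner: Fit a base model (e.g., a decision stump) on the weighted dataset.

- Score the Learner: Compute its weighted error and derive its vote weight, which includes an extra log(K - 1) term for K classes.

- Update Weights: Increase the weights of misclassified samples, renormalise, and repeat from the training step for a fixed number of rounds.

- Combine Predictions: Use a weighted majority vote across all learners to make final predictions.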

This process produces a robust model capable of making accurate multi-class predictions.
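The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names (`fit_stump`, `stump_predict`, `samme_fit`, `samme_predict`) and the toy one-feature dataset are invented for this example, and the weak learner is a simple exhaustive-search decision stump.

```python
import math


def fit_stump(X, y, w, classes):
    """Weighted decision stump: one feature, one threshold, one label per side."""
    total = sum(w)
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for t in [(a + b) / 2 for a, b in zip(values, values[1:])]:
            left = {c: 0.0 for c in classes}
            right = {c: 0.0 for c in classes}
            for row, label, wi in zip(X, y, w):
                (left if row[j] <= t else right)[label] += wi
            left_label = max(classes, key=lambda c: left[c])
            right_label = max(classes, key=lambda c: right[c])
            err = total - left[left_label] - right[right_label]
            if best is None or err < best[0]:
                best = (err, j, t, left_label, right_label)
    if best is None:
        raise ValueError("no feature has two distinct values to split on")
    return best[1:]


def stump_predict(stump, row):
    j, t, left_label, right_label = stump
    return left_label if row[j] <= t else right_label


def samme_fit(X, y, n_rounds=10):
    """Discrete SAMME; assumes at least two classes are present in y."""
    classes = sorted(set(y))
    K = len(classes)
    w = [1.0 / len(X)] * len(X)              # start with uniform weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w, classes)
        miss = [stump_predict(stump, r) != c for r, c in zip(X, y)]
        err = sum(wi for wi, m in zip(w, miss) if m)   # weights sum to 1
        if err >= 1 - 1 / K:                 # no better than random guessing
            break
        alpha = math.log((1 - max(err, 1e-10)) / max(err, 1e-10)) + math.log(K - 1)
        ensemble.append((alpha, stump))
        if not any(miss):                    # perfect learner, nothing to reweight
            break
        # Boost the weight of misclassified samples, then renormalise.
        w = [wi * math.exp(alpha) if m else wi for wi, m in zip(w, miss)]
        total = sum(w)
        w = [wi / total for wi in w]
    return classes, ensemble


def samme_predict(model, row):
    """Weighted majority vote of all stumps."""
    classes, ensemble = model
    votes = {c: 0.0 for c in classes}
    for alpha, stump in ensemble:
        votes[stump_predict(stump, row)] += alpha
    return max(classes, key=lambda c: votes[c])


# Toy data (invented): one feature, three classes of unequal size.
X = [[float(v)] for v in range(9)]
y = ["low"] * 2 + ["mid"] * 3 + ["high"] * 4
model = samme_fit(X, y, n_rounds=3)
print([samme_predict(model, row) for row in X])
```

A single stump can only ever output two of the three labels, so no individual weak learner can classify this dataset correctly. The weighted vote of several stumps can, which is the point of the ensemble.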

Different Types of AdaBoost.SAMME

AdaBoost.SAMME has two main variations:

- SAMME: Utilises weighted majority voting for class predictions.

- SAMME.R: The "real" variant, which uses the class probability estimates from each weak learner instead of hard labels and often converges in fewer boosting rounds.

The choice depends mainly on the weak learner: SAMME needs only predicted class labels, whereas SAMME.R needs a learner that outputs class probability estimates.
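The difference between the two variants shows up in what a single weak learner contributes to the final vote. The sketch below compares the two for one sample. The function names are hypothetical, the probability vectors are made up, and the SAMME.R expression follows the usual statement of the algorithm, (K - 1) times the log-probability minus the mean log-probability.

```python
import math


def samme_contribution(probs, alpha=1.0):
    """Discrete SAMME: the whole vote weight goes to the predicted class."""
    winner = max(range(len(probs)), key=lambda k: probs[k])
    return [alpha if k == winner else 0.0 for k in range(len(probs))]


def samme_r_contribution(probs, eps=1e-12):
    """SAMME.R: every class receives a score based on its log-probability."""
    K = len(probs)
    logs = [math.log(max(p, eps)) for p in probs]   # clip to avoid log(0)
    mean_log = sum(logs) / K
    return [(K - 1) * (lp - mean_log) for lp in logs]


confident = [0.90, 0.05, 0.05]   # learner is sure about class 0
hesitant = [0.40, 0.35, 0.25]    # learner only slightly prefers class 0

for name, probs in [("confident", confident), ("hesitant", hesitant)]:
    print(name, samme_contribution(probs), samme_r_contribution(probs))
```

Under SAMME both learners cast an identical vote for class 0. Under SAMME.R the confident learner pushes class 0 much harder than the hesitant one, which is how the probability information enters the ensemble.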

Key Features of AdaBoost.SAMME

The algorithm is known for its distinct features:

- Multi-Class Adaptability: Efficiently handles datasets with three or more categories.

- Iterative Learning: Focuses on challenging samples in each round to improve model performance.

- Compatibility with Weak Models: Works seamlessly with decision trees and stumps.

Popular Tools for Implementing AdaBoost.SAMME

Several tools make it easy to implement AdaBoost.SAMME:

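- Scikit-learn (Python): Offers SAMME through AdaBoostClassifier, with parameters such as n_estimators and learning_rate controlling the number of boosting rounds and the contribution of each learner.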

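- XGBoost: A gradient boosting library rather than a SAMME implementation. It handles multi-class problems through a softmax objective and is a common alternative when comparing boosting approaches.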

- R Libraries: The adabag package provides SAMME through its boosting function (coeflearn = "Zhu"), while ada and gbm cover binary AdaBoost variants.

Applications of AdaBoost.SAMME in Australian Governmental Agencies

AdaBoost.SAMME has been applied across various Australian sectors, solving real-world problems:

- Healthcare Risk Analysis: Classifying patients into risk levels to optimise preventive care strategies.

- Traffic Forecasting: Predicting congestion patterns across regions to enhance infrastructure planning.

- Education Segmentation: Grouping student performance levels to design targeted intervention programmes.

Conclusion

AdaBoost.SAMME extends boosting to multi-class classification by combining weak models, each only slightly better than random guessing, into a robust ensemble. Its flexibility makes it a useful option in fields such as healthcare, traffic management, and education analytics. With implementations available in Scikit-learn and in R's adabag package, AdaBoost.SAMME remains accessible to machine learning practitioners worldwide.
