A Brief History: Who Developed It?

The concept of activation functions in neural networks draws inspiration from biological neurons, which fire only when their input is strong enough. The theoretical foundation was established during the mid-20th century, notably with the McCulloch-Pitts neuron model (1943) and Rosenblatt's perceptron (1958). Vinod Nair and Geoffrey Hinton popularised the ReLU (Rectified Linear Unit) function in 2010, and it has since become a cornerstone of training deep learning models.

What Is an Activation Function?

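An activation function is a mathematical function applied to a neuron's weighted input to produce that neuron's output. It acts like a switchboard that controls signal flow in a neural network: it determines how strongly each signal passes on to the next layer, which is what enables the network to learn and adapt.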

Why Are Activation Functions Used?

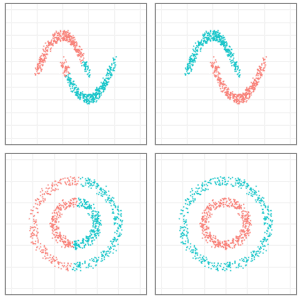

Activation functions address several critical challenges in neural networks:

- Handle Non-Linearity: Enable networks to learn and process complex patterns.

- Support Gradient Flow: A well-chosen function keeps gradients from vanishing or exploding as they propagate backwards during training.

- Increase Computational Efficiency: Simple functions such as ReLU are cheap to compute, which speeds up training.

Without activation functions, any stack of layers collapses into a single linear transformation, so a neural network could model only linear relationships, restricting its real-world applications.
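The limitation to linear problems can be checked on a toy network. The sketch below uses only the Python standard library; the weights `W1` and `W2`, the input `x`, and the helper names are hypothetical values chosen for illustration, and biases are omitted to keep it short.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def relu(vector):
    """Apply max(0, v) to every element."""
    return [max(0.0, v) for v in vector]


# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output (no biases).
W1 = [[1.0, -1.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0]]
x = [2.0, 5.0]

# Two linear layers with no activation in between...
stacked_linear = matvec(W2, matvec(W1, x))
# ...give the same result as one layer whose weight matrix is W2 times W1.
single_layer = matvec(matmul(W2, W1), x)

# With ReLU between the layers, the output no longer matches that single layer.
with_relu = matvec(W2, relu(matvec(W1, x)))

print(stacked_linear, single_layer, with_relu)
```

With these particular weights the ReLU version computes the absolute difference of the two inputs, a relationship no single linear layer can represent.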

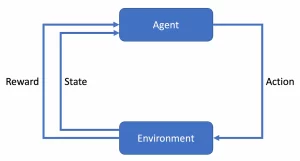

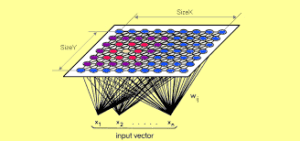

How Do Activation Functions Work?

Activation functions operate through a structured mechanism:

- Input Evaluation: Each neuron calculates a weighted sum of inputs.

- Activation Execution: The function applies a mathematical transformation.

- Signal Transmission: The output passes to subsequent layers for further computation.

This step-by-step process allows neural networks to model complex relationships in data.
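The three steps above can be sketched for a single neuron as follows. This is a minimal illustration rather than a framework implementation: the input values, weights, and the bias term are assumptions added for the example, and `neuron_output` is a hypothetical helper name.

```python
import math


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation):
    """Compute one neuron's output from its inputs."""
    # Input evaluation: weighted sum of the inputs plus a bias term.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Activation execution: apply the chosen mathematical transformation.
    return activation(z)


# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.5

hidden = neuron_output(inputs, weights, bias, sigmoid)

# Signal transmission: the output becomes the input of a neuron in the next layer.
final = neuron_output([hidden], [1.5], -0.5, sigmoid)

print(hidden, final)
```

Passing the activation in as an argument keeps the weighted-sum logic unchanged when a different function is swapped in.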

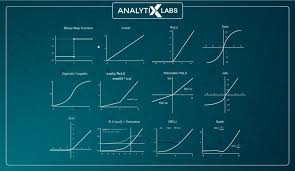

Different Types of Activation Functions

Activation functions are classified into several types, each suited for specific use cases:

- Linear Activation: Passes the input through unchanged (up to a constant factor); typically used in the output layer for regression.

- Sigmoid Function: Outputs values between 0 and 1, often used for probabilities.

- Tanh (Hyperbolic Tangent): Ranges from -1 to 1, keeping outputs centred on zero.

- ReLU (Rectified Linear Unit): Outputs zero for negative inputs and the input itself for positive ones; it is cheap to compute and tends to speed up training.

- Softmax Function: Converts a vector of scores into probabilities that sum to 1, used for multi-class classification.
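For reference, the five functions listed above can be written in a few lines each. This is a plain-Python sketch using only the `math` module; frameworks provide vectorised and more numerically robust versions, and the max-subtraction in `softmax` is a common stability trick rather than part of the definition.

```python
import math


def linear(x):
    """Identity: the output equals the input."""
    return x


def sigmoid(x):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Map any real number into (-1, 1), centred on zero."""
    return math.tanh(x)


def relu(x):
    """Return 0 for negative inputs and x otherwise."""
    return max(0.0, x)


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() without changing the result.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


for name, fn in [("linear", linear), ("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, [round(fn(v), 3) for v in (-2.0, 0.0, 2.0)])
print("softmax", [round(p, 3) for p in softmax([1.0, 2.0, 3.0])])
```

Softmax differs from the others in that it operates on a whole vector of scores at once rather than on a single value.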

Key Features of Activation Functions

Activation functions possess unique features that make them indispensable:

- Range Control: Limits output values (e.g., sigmoid’s range is 0 to 1).

- Non-Linearity Introduction: Crucial for solving advanced, non-linear problems.

- Ease of Differentiability: Necessary for backpropagation in neural networks.
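The range and differentiability properties can be checked numerically. The sketch below pairs each function with its standard derivative and compares one of them against a finite-difference estimate; `numerical_grad` is a hypothetical helper, and returning 0 for the ReLU gradient at exactly 0 is a common convention rather than a mathematical fact, since ReLU is not differentiable at that point.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


def relu_grad(x):
    """Gradient of ReLU; the value at x == 0 is set to 0 by convention."""
    return 1.0 if x > 0 else 0.0


def numerical_grad(f, x, eps=1e-6):
    """Central finite-difference estimate of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)


# Range control: sigmoid stays strictly between 0 and 1 for these inputs.
print([round(sigmoid(v), 5) for v in (-10.0, 0.0, 10.0)])

# Differentiability: the analytic gradient agrees with the numerical estimate.
print(sigmoid_grad(0.3), numerical_grad(sigmoid, 0.3))
```

Backpropagation multiplies these gradients layer by layer, which is why a cheap, well-defined derivative matters in practice.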

Popular Software and Tools for Activation Functions

Several frameworks support the implementation of activation functions:

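- TensorFlow: Provides built-in activations such as ReLU and Tanh (for example tf.nn.relu) for scalable projects.

- PyTorch: Ideal for experimenting with dynamic neural networks.

- Keras: Simplifies activation function integration for beginners by letting you name the activation when defining a layer.

- Scikit-learn: Exposes a small set of activations through its multi-layer perceptron models (MLPClassifier and MLPRegressor), suitable for introducing basic activation concepts.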

Applications of Activation Functions in Australian Governmental Agencies

Activation functions drive advancements in various Australian sectors:

- Healthcare: Utilising ReLU-based models for accurate disease diagnosis.

- Traffic Management: Employing Softmax to predict and manage congestion patterns.

- Environmental Research: Using Tanh to identify and analyse animal migration patterns effectively.

Conclusion

Activation functions are the backbone of neural networks, enabling them to learn complex patterns and make accurate predictions. Their versatility and efficiency make them essential for applications in fields such as healthcare, traffic management, and environmental research. With tools like TensorFlow, PyTorch, and Keras, implementing activation functions has never been more accessible for machine learning practitioners.
