A Brief History: Who Developed It?

AdaBoost R2 (often written AdaBoost.R2) was introduced by Harris Drucker in 1997. It extends AdaBoost, which was originally designed for classification, to predict continuous values instead of categorical outputs. This gave practitioners a way to strengthen weak regression models through boosting and to address limitations of single-model approaches.

What Is AdaBoost R2?

Imagine a team of artists working collaboratively on a mural. Each artist refines their section, addressing imperfections left by others. AdaBoost R2 operates similarly: it iteratively combines weak regression models to minimise errors and deliver highly accurate predictions for continuous data.

Why Is AdaBoost R2 Used? What Challenges Are Being Addressed?

AdaBoost R2 addresses essential challenges in regression tasks, including:

- Improved Prediction Accuracy: Aggregates weak learners to produce reliable forecasts for continuous variables.

- Error Reduction: Focuses on correcting significant discrepancies to optimise model performance.

- Versatility Across Data Types: Can be applied to noisy and complex datasets, though extreme outliers can attract disproportionate weight and should be checked.

Without boosting, a single weak regression model often struggles to capture intricate patterns in data.

How Is AdaBoost R2 Used?

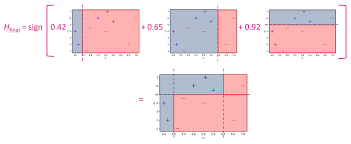

The workflow of AdaBoost R2 involves the following steps:

- Train a Weak Learner: Train a base regression model, such as a decision stump, on the dataset.

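- Assign Weights: Increase the weights of samples with larger errors so that the next weak learner concentrates on them, then repeat the training step.

- Combine Predictions: Aggregate the learners' outputs, using a weighted median in Drucker's formulation, so that more accurate learners have more influence on the final prediction.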

This iterative process builds a robust model capable of delivering reliable predictions for continuous variables.
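The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: it uses the linear loss, scikit-learn decision stumps as weak learners, and weighted resampling to apply the sample weights. The function names `fit_adaboost_r2` and `predict_adaboost_r2` and the synthetic data are hypothetical and chosen only for this example.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_adaboost_r2(X, y, n_rounds=20, seed=0):
    """Fit a small AdaBoost R2 style ensemble with linear loss (illustrative)."""
    rng = np.random.default_rng(seed)
    n = len(y)
    weights = np.full(n, 1.0 / n)            # start with uniform sample weights
    learners, learner_weights = [], []

    for _ in range(n_rounds):
        # Step 1: train a weak learner on a weighted bootstrap sample
        idx = rng.choice(n, size=n, replace=True, p=weights)
        stump = DecisionTreeRegressor(max_depth=1).fit(X[idx], y[idx])

        # Linear loss: absolute errors scaled into [0, 1]
        abs_err = np.abs(stump.predict(X) - y)
        max_err = abs_err.max()
        if max_err == 0:                      # perfect fit: keep it and stop
            learners.append(stump)
            learner_weights.append(1.0)       # arbitrary positive weight (assumption)
            break
        loss = abs_err / max_err
        avg_loss = np.sum(weights * loss)
        if avg_loss >= 0.5:                   # learner too weak: stop boosting
            break

        beta = avg_loss / (1.0 - avg_loss)    # small beta means an accurate learner
        learners.append(stump)
        learner_weights.append(np.log(1.0 / beta))

        # Step 2: shrink the weights of well-predicted samples, so samples
        # with larger errors gain relative weight after normalisation
        weights = weights * beta ** (1.0 - loss)
        weights /= weights.sum()

    return learners, np.array(learner_weights)


def predict_adaboost_r2(learners, learner_weights, X):
    """Step 3: combine learners with the weighted median of their predictions."""
    preds = np.column_stack([m.predict(X) for m in learners])
    order = np.argsort(preds, axis=1)
    sorted_preds = np.take_along_axis(preds, order, axis=1)
    cum_w = np.cumsum(learner_weights[order], axis=1)
    # Pick the first prediction whose cumulative weight reaches half the total
    pick = np.argmax(cum_w >= 0.5 * cum_w[:, -1:], axis=1)
    return sorted_preds[np.arange(len(X)), pick]


# Synthetic step-shaped data, used only to exercise the sketch
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.where(X[:, 0] > 0, 2.0, -2.0) + rng.normal(0, 0.3, size=200)

learners, learner_weights = fit_adaboost_r2(X, y)
pred = predict_adaboost_r2(learners, learner_weights, X)
print(len(learners), float(np.mean(np.abs(pred - y))))
```

The early stop when the average loss reaches 0.5 follows the usual boosting requirement that each learner be better than chance; production code would typically rely on a library implementation instead.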

Does AdaBoost R2 Have Different Types?

AdaBoost R2 is a dedicated regression extension of AdaBoost and does not have distinct subtypes. However, the boosting principles it shares with AdaBoost also underpin other methods, such as Gradient Boosting and XGBoost, which are widely used for regression tasks.
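Although there are no separate subtypes, Drucker's formulation does allow a choice of loss function, and Scikit-learn exposes the same three options through the `loss` parameter of `AdaBoostRegressor`. As a brief sketch, with $\hat{y}_i$ denoting a weak learner's prediction for sample $i$ and $D$ the largest absolute error in the training set (notation introduced here for illustration), the per-sample losses are:

```latex
\begin{align*}
D &= \max_{j} \lvert \hat{y}_j - y_j \rvert \\
L_i^{\text{linear}} &= \frac{\lvert \hat{y}_i - y_i \rvert}{D} \\
L_i^{\text{square}} &= \frac{\lvert \hat{y}_i - y_i \rvert^{2}}{D^{2}} \\
L_i^{\text{exponential}} &= 1 - \exp\!\left(-\frac{\lvert \hat{y}_i - y_i \rvert}{D}\right)
\end{align*}
```

Each loss lies in $[0, 1]$; the square and exponential variants change how strongly large errors are emphasised relative to small ones.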

Key Features of AdaBoost R2

AdaBoost R2 is known for its unique features:

- Regression-Specific Capability: Tailored specifically for predicting continuous variables.

- Adaptive Weighting Mechanism: Focuses on minimising high-impact errors to improve overall predictions.

- Noise Resilience: Aggregating many learners limits the influence of any single poor learner on datasets with significant variability, although samples with extreme outliers can still attract large weights.
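To make the adaptive weighting mechanism concrete, the following small sketch works through one reweighting round with the linear loss. The four absolute errors are made-up numbers chosen for illustration, and the variable names are hypothetical.

```python
import numpy as np

# Hypothetical absolute errors of one weak learner on four samples
abs_err = np.array([0.1, 0.2, 0.4, 2.0])
weights = np.full(4, 0.25)                 # uniform weights before the update

loss = abs_err / abs_err.max()             # linear loss: [0.05, 0.1, 0.2, 1.0]
avg_loss = np.sum(weights * loss)          # weighted average loss: 0.3375
beta = avg_loss / (1.0 - avg_loss)         # roughly 0.509, below 1 for a useful learner

# Samples with low loss are multiplied by a smaller factor than samples with high loss
new_weights = weights * beta ** (1.0 - loss)
new_weights /= new_weights.sum()           # renormalise so the weights sum to 1

print(new_weights)
```

After the update, the sample with the largest error carries the largest weight, which is what steers the next weak learner towards it.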

Popular Software and Tools for AdaBoost R2

Several tools support the implementation of AdaBoost R2:

- Scikit-learn (Python): Offers a straightforward implementation via `AdaBoostRegressor`.

- XGBoost: A gradient boosting library that builds on the same sequential ensemble idea for enhanced regression capabilities, rather than implementing AdaBoost R2 itself.

- R Libraries: Packages like `ada` and `gbm` provide boosting implementations in R.
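As a hedged usage sketch of the Scikit-learn route, the example below fits `AdaBoostRegressor` on synthetic data. The data, the depth-3 base tree, and the hyperparameter values are illustrative assumptions rather than recommendations.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy sine curve, used only to exercise the API
rng = np.random.default_rng(0)
X = rng.uniform(0, 6, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(0, 0.1, size=300)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# The base learner is passed positionally; its keyword name differs
# between Scikit-learn versions
model = AdaBoostRegressor(
    DecisionTreeRegressor(max_depth=3),
    n_estimators=50,
    loss="linear",          # "square" and "exponential" are also accepted
    random_state=0,
)
model.fit(X_train, y_train)

mae = mean_absolute_error(y_test, model.predict(X_test))
print(f"Test MAE: {mae:.3f}")
```

Comparing the test error across the three `loss` settings on your own data is a simple way to choose between them.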

Applications of AdaBoost R2 in Australian Governmental Agencies

AdaBoost R2 can help Australian government agencies address complex challenges in areas such as:

- Environmental Analytics:

  - Application: Forecasting air quality indices to inform proactive public health strategies.

- Healthcare Predictions:

  - Application: Estimating patient recovery durations to optimise hospital resource allocation.

- Urban Planning and Development:

  - Application: Predicting housing market trends to support infrastructure investments.

Conclusion

AdaBoost R2 extends boosting to regression, enabling accurate predictions for continuous variables by iteratively combining weak learners. Its flexibility makes it a useful tool in fields such as environmental analytics, healthcare, and urban planning. With accessible implementations such as Scikit-learn's `AdaBoostRegressor`, AdaBoost R2 is well-suited for tackling complex data challenges and delivering reliable results.
