A Brief History: Who Developed It?

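The softmax function has its roots in statistical mechanics, where it appears as the Boltzmann (Gibbs) distribution, and in probability theory. The name "softmax" was introduced to machine learning by John S. Bridle around 1989-1990, and the function became a standard choice for classification tasks in neural networks through the work of researchers such as Geoffrey Hinton and Yoshua Bengio.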

What Is the Softmax Function?

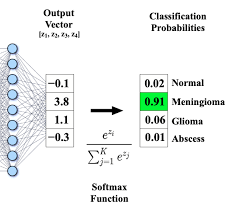

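Softmax is a mathematical function that converts a vector of raw scores (logits) into probabilities. It exponentiates each score and divides the result by the sum of all the exponentials, so every output lies between 0 and 1 and the outputs sum to 1. Imagine a multilingual voting system in which a translator turns every ballot, whatever its language, into a clear share of the vote. Softmax works similarly, turning scores on an arbitrary scale into interpretable predictions.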
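As a minimal sketch of that conversion, the function below uses only Python's standard library. The name `softmax` and the sample scores are illustrative choices, not taken from any particular framework. Subtracting the largest score before exponentiating is a common safeguard against overflow and does not change the result.

```python
import math

def softmax(logits):
    """Convert a list of raw scores (logits) into probabilities."""
    # Subtracting the largest logit leaves the result unchanged
    # but keeps math.exp from overflowing on large inputs.
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The largest score receives the largest probability, and the three values add up to 1.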

Why Is It Used? What Challenges Does It Address?

Softmax addresses essential challenges in machine learning:

- Converts Logits into Normalised Probabilities: Maps arbitrary real-valued scores to values between 0 and 1 that sum to 1.

- Enhances Interpretability: Lets each output be read as the model's estimated probability for a class.

- Facilitates Multi-Class Classification Tasks: Provides one comparable probability for every class, so the most likely class is easy to identify.

Challenges Solved:

- Managing complex multi-class classification tasks.

- Reducing ambiguity in model predictions.

- Improving human interpretability of outputs.

How Is the Softmax Function Used?

Softmax plays a critical role in various machine learning applications:

- Neural Networks: Serves as the final activation function in classification models.

- Natural Language Processing: Assigns probabilities to words in predictive text models.

- Image Recognition: Determines the likelihood of objects in visual data.
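The final-activation role described above can be sketched as follows. This is a hedged illustration rather than any framework's API: the `predict` helper, the label names, and the logits are all hypothetical, standing in for the last layer of a trained classifier.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities (shifted for stability)."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical logits from the last layer of an image classifier.
labels = ["cat", "dog", "bird"]
label, confidence = predict([1.2, 3.4, 0.3], labels)
print(label, round(confidence, 2))  # dog 0.87
```

The same pattern applies to predictive text, where the labels would be candidate words instead of object classes.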

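Different Types

While the softmax function itself does not have explicit types, its implementation may vary depending on the use case:

- Log-Softmax: Returns the logarithm of the softmax probabilities. Computing both steps together avoids the overflow and underflow that can occur when a logarithm is applied to softmax outputs separately, which is why it is commonly paired with a negative log-likelihood loss.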
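To illustrate the numerical-stability point, here is a minimal standard-library sketch of log-softmax using the log-sum-exp trick. The function name and inputs are illustrative assumptions; libraries such as PyTorch ship their own implementations.

```python
import math

def log_softmax(logits):
    """Log of softmax, computed with the log-sum-exp trick."""
    shift = max(logits)
    log_sum_exp = shift + math.log(sum(math.exp(x - shift) for x in logits))
    return [x - log_sum_exp for x in logits]

logits = [1000.0, 0.0]
# Taking math.log of a softmax output would fail here: the second
# probability underflows to 0.0, and math.log(0.0) raises ValueError.
print(log_softmax(logits))  # [0.0, -1000.0]
```

Working in log space keeps the very unlikely class representable as a finite number instead of collapsing it to zero.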

Key Features of the Softmax Function

The softmax function is widely used due to its unique features:

- Normalisation: Ensures that all probabilities sum to 1, improving interpretability.

- Scalability: Applies to any number of classes, although the cost of the normalising sum grows with the number of classes.

- Transparency: Produces outputs that are easy to interpret, even for complex datasets.

Popular Software and Tools for Softmax

Several tools and libraries simplify the implementation of softmax:

- TensorFlow and Keras: Provide built-in softmax layers for neural networks.

- PyTorch: Features `nn.Softmax` and `nn.LogSoftmax` for flexible implementations.

- scikit-learn: Includes softmax regression for multi-class models.

- MATLAB: Offers computational tools for softmax in both research and industry applications.

Applications of Softmax in Australian Governmental Agencies

Softmax supports critical decision-making across various Australian sectors:

- Healthcare Analytics: Softmax-based systems predict probabilities for various health outcomes, helping prioritise resources effectively.

- Transportation Planning: Categorises traffic flow patterns for efficient infrastructure management and congestion reduction.

- Educational Policy Development: Classifies student performance metrics, enabling tailored intervention programs to improve learning outcomes.

Conclusion

The softmax function enables models to generate interpretable probabilities for multi-class classification tasks. Its versatility and efficiency make it useful across industries such as healthcare, transportation, and education. With built-in support in tools like TensorFlow and PyTorch, it is straightforward to implement and remains a standard component of modern AI solutions.
