A Brief History: Who Developed It?

The sigmoid (logistic) function dates back to the 19th century, when Pierre François Verhulst introduced it to model population growth; it later became the basis of logistic regression and a foundation for probabilistic modelling. The hyperbolic tangent (Tanh) is an older mathematical function that was adopted as a neural network activation in the second half of the 20th century. From the 1980s onwards, researchers such as Yann LeCun helped popularise these functions in machine learning, cementing their place in neural network development.

What Are Sigmoid and Tanh Functions?

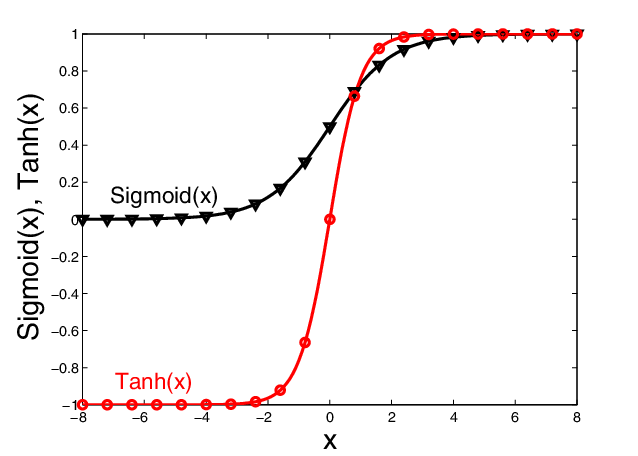

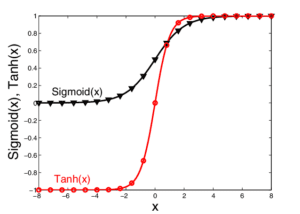

- Sigmoid Function:

Transforms input values into outputs ranging between 0 and 1, creating an S-shaped curve. It is ideal for probabilistic tasks, such as binary classification.

- Hyperbolic Tangent (Tanh):

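Similar to sigmoid but centred around zero, mapping inputs to a range of -1 to 1. Because its outputs can be negative as well as positive, the values passed to the next layer stay balanced around zero, which tends to make gradient-based training better behaved and makes Tanh well-suited to hidden layers in neural networks.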

These activation functions act as decision-making gates in neural networks, enabling the non-linear transformations needed for complex tasks.
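As a minimal sketch of the two definitions above, the following uses only Python's standard library. The function names are illustrative rather than taken from any framework, and the sign check inside `sigmoid` is an added precaution against overflow, not part of the mathematical definition.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^(-x)); output lies between 0 and 1."""
    # Branch on the sign so exp() never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Hyperbolic tangent; output lies between -1 and 1 and is centred on 0."""
    return math.tanh(x)


# Print both activations for a few sample inputs.
for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}")
```

At an input of zero, sigmoid returns 0.5 (the midpoint of its range) while Tanh returns 0, which is the zero-centring referred to above.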

Why Are They Used?

Sigmoid and Tanh address several challenges in neural networks:

- Data Normalisation: Sigmoid squashes outputs into the 0 to 1 range, so they can be read as probabilities in classification tasks, while Tanh keeps activations centred on zero.

- Non-linear Transformation: Essential for modelling intricate data patterns.

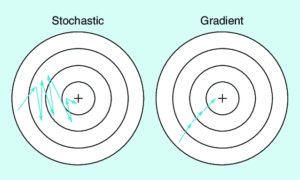

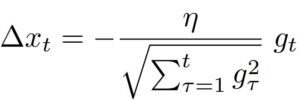

- Gradient Computation: Facilitates smooth weight adjustments during training.

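Challenge: Despite their utility, both functions suffer from the vanishing gradient problem. They saturate for large positive or negative inputs, where their derivatives approach zero, so the gradients passed back through many layers shrink and training becomes less effective in deep networks.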
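The vanishing gradient can be illustrated numerically. The sketch below is a simplification: it assumes every layer contributes the same local derivative and ignores the weights, which a real network would also multiply in. The helper names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid (moderate inputs only; no overflow guard here)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of sigmoid: s * (1 - s). Its maximum is 0.25, at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2. Its maximum is 1.0, at x = 0."""
    t = math.tanh(x)
    return 1.0 - t * t


def chained_gradient(local_grad: float, depth: int) -> float:
    """Product of `depth` identical local derivatives (weights ignored)."""
    return local_grad ** depth


# Even in sigmoid's best case (derivative 0.25), the product shrinks quickly.
for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  gradient factor={chained_gradient(0.25, depth):.3e}")

# In the saturated regions, both derivatives are already close to zero.
print(f"sigmoid_grad(6.0)={sigmoid_grad(6.0):.5f}  tanh_grad(3.0)={tanh_grad(3.0):.5f}")
```

Under these assumptions, ten sigmoid layers scale the gradient by less than one millionth, which is why deep stacks of saturating activations are hard to train.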

How Are They Used?

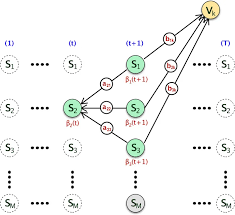

Activation functions operate through the following steps:

- Input Summation: Neurons calculate weighted sums of inputs.

- Non-linear Activation: Sigmoid or Tanh transforms these sums into non-linear outputs.

- Signal Output: The transformed result is transmitted to subsequent layers for further computation.
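The three steps above can be sketched for a single neuron and a small layer. This is an illustrative, framework-free example: the function names, weights, and inputs are made up for demonstration and do not come from a trained model.

```python
import math
from typing import Callable, Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid (moderate inputs only; no overflow guard here)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs: Sequence[float], weights: Sequence[float],
                  bias: float, activation: Callable[[float], float]) -> float:
    """Step 1: weighted sum of inputs. Step 2: apply the non-linear activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer_output(inputs, weight_rows, biases, activation):
    """Step 3: collect every neuron's output to pass on to the next layer."""
    return [neuron_output(inputs, w, b, activation)
            for w, b in zip(weight_rows, biases)]


# Hypothetical values: a tanh hidden layer feeding one sigmoid output neuron.
inputs = [0.5, -1.0, 2.0]
hidden = layer_output(inputs, [[0.2, -0.4, 0.1], [-0.3, 0.1, 0.5]],
                      [0.0, 0.1], math.tanh)
probability = neuron_output(hidden, [1.0, -1.0], 0.0, sigmoid)
print("hidden activations:", hidden)
print("output probability:", probability)
```

Using Tanh in the hidden layer and sigmoid at the output mirrors the typical pairing: zero-centred values inside the network and a 0 to 1 value at the end.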

Different Types

- Sigmoid Variants:

- Include the logistic sigmoid, the standard form used for binary classification.

- Tanh Variants:

- Include cheaper approximations such as hard Tanh, which replaces the curve with straight-line segments to make gradient computation more efficient.

Key Features

- Sigmoid:

- Output Range: 0 to 1.

- Best Use Case: Ideal for output layers in binary classification networks.

- Simplifies Predictions: Outputs can be read directly as probabilities.

- Tanh:

- Output Range: -1 to 1.

- Centres Data: Outputs are centred on zero, which keeps activations balanced between layers.

- Best Use Case: Ideal for hidden layers in deep networks.
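The difference in centring can be checked with a short experiment. The sketch below averages each function over an evenly spaced, symmetric set of inputs; the input grid is an arbitrary choice made for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid (moderate inputs only; no overflow guard here)."""
    return 1.0 / (1.0 + math.exp(-x))


# Symmetric inputs from -3.0 to 3.0 in steps of 0.1.
xs = [i / 10 for i in range(-30, 31)]

sigmoid_mean = sum(sigmoid(x) for x in xs) / len(xs)
tanh_mean = sum(math.tanh(x) for x in xs) / len(xs)

print(f"mean sigmoid output: {sigmoid_mean:.4f}")  # centred on 0.5
print(f"mean tanh output:    {tanh_mean:.4f}")     # centred on 0
```

The two functions are closely related: Tanh is a rescaled and shifted sigmoid, with tanh(x) equal to 2 * sigmoid(2x) - 1, so the main practical difference between them is the output range and where it is centred.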

Popular Tools Supporting Sigmoid and Tanh

These activation functions are widely supported across machine learning frameworks:

- TensorFlow: Provides optimised implementations for large-scale neural networks.

- PyTorch: Offers flexibility for experimenting with customised models.

- Keras: User-friendly for integrating sigmoid and Tanh layers into workflows.

- Scikit-learn: Exposes logistic (sigmoid) and Tanh as activation options in its multi-layer perceptron models, which suits straightforward use cases.

Applications in Australian Governmental Agencies

Sigmoid and Tanh functions can support a range of Australian public sector applications:

- Healthcare Diagnostics:

- Application: Sigmoid predicts probabilities in disease diagnostics, aiding resource allocation and decision-making.

- Traffic Flow Modelling:

- Application: Tanh-based hidden layers model dynamic, multi-parameter traffic data, helping traffic management systems optimise urban infrastructure.

- Climate Pattern Analysis:

- Application: Tanh models complex environmental data for disaster preparedness and resource planning.

Conclusion

Sigmoid and Tanh functions remain foundational in neural networks, enabling non-linear transformations for tasks like classification and sequential modelling. Despite challenges like the vanishing gradient, their utility in fields such as healthcare, traffic management, and climate analysis demonstrates their continued relevance in modern AI. With tools like TensorFlow and PyTorch, integrating these functions into workflows is both efficient and accessible.
