A Brief History: Who Developed It?

AdaBoost (Adaptive Boosting) was introduced in 1995 by Yoav Freund and Robert Schapire. Their work showed how many weak models, each only slightly better than random guessing, can be combined into a strong and accurate ensemble. It earned them the prestigious Gödel Prize in 2003, and AdaBoost remains one of the foundational algorithms of modern machine learning.

What Is AdaBoost?

Imagine a teacher who spends extra time with struggling students while still reinforcing what the others already know. AdaBoost works similarly: it trains a sequence of simple models, and each new model concentrates on the examples its predecessors got wrong. The final prediction is a weighted vote of all these weak learners, which together are typically far more accurate than any one of them.

Why Is AdaBoost Used? What Challenges Does It Address?

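AdaBoost addresses several common challenges in data science:

- Enhanced Predictive Accuracy: Combines many weak learners into one model that is usually far more accurate than any of them alone.

- Targeted Error Reduction: Each round focuses on the samples that earlier rounds misclassified, steadily driving down the training error.

- Versatility Across Data Types: Works with any base learner that can be trained on weighted samples. It is most often applied to structured (tabular) data, and to unstructured data such as images once suitable features have been extracted, as in the Viola–Jones face detector.

On their own, weak models such as single-split decision stumps are usually too simple to give useful predictions; AdaBoost turns them into a strong predictor. The same focus on hard examples, however, makes it sensitive to noisy labels and outliers, which can accumulate very large weights.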
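The accuracy claim has a precise form on training data. Freund and Schapire showed that if the weak learner in round t has weighted error ε_t, the ensemble's training error is at most the product of 2√(ε_t(1 − ε_t)) over all rounds, which shrinks towards zero as long as every learner beats random guessing. The short sketch below evaluates that product together with the looser bound exp(−2 Σ γ_t²), where γ_t = 1/2 − ε_t. The function name `training_error_bound` is our own, chosen for illustration, and the bound applies to the training set only, not to new data.

```python
import math


def training_error_bound(errors):
    """Upper bounds on AdaBoost's training error (illustrative helper).

    errors: weighted error eps_t of each round's weak learner, each in (0, 0.5).
    Returns (product_bound, exponential_bound), where
      product_bound     = prod_t 2 * sqrt(eps_t * (1 - eps_t))
      exponential_bound = exp(-2 * sum_t gamma_t**2), gamma_t = 0.5 - eps_t
    """
    product_bound = 1.0
    gamma_sq_sum = 0.0
    for eps in errors:
        if not 0.0 < eps < 0.5:
            raise ValueError("each weak learner must beat random guessing")
        product_bound *= 2.0 * math.sqrt(eps * (1.0 - eps))  # each factor < 1
        gamma_sq_sum += (0.5 - eps) ** 2                      # squared "edge"
    return product_bound, math.exp(-2.0 * gamma_sq_sum)


if __name__ == "__main__":
    # 100 rounds of weak learners that are each only 60% accurate.
    tight, loose = training_error_bound([0.4] * 100)
    print(f"product bound: {tight:.3f}, exponential bound: {loose:.3f}")
    # prints: product bound: 0.130, exponential bound: 0.135
```

Even learners that are right only 60% of the time drive the guaranteed training error down to about 13% after 100 rounds, and it keeps falling as rounds are added.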

How Is AdaBoost Used?

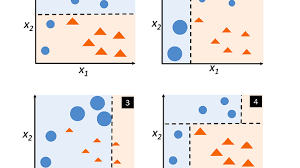

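AdaBoost repeats three main steps for a chosen number of rounds:

- Train a Weak Learner: A simple model, for example a decision stump (a decision tree with a single split), is trained on the data using the current sample weights, which start out equal.

- Update the Weights: The learner receives a vote weight based on its weighted error, so more accurate learners get larger votes. The weights of the samples it misclassified are then increased and those of correctly classified samples decreased, so the next learner concentrates on the hard cases.

- Aggregate Predictions: After the final round, the ensemble classifies by a weighted majority vote, in which each learner's vote counts in proportion to its vote weight.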
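For binary labels y_i ∈ {−1, +1}, these steps are usually written as follows. This is the standard discrete AdaBoost formulation, and the symbols are our own notation: w_i^{(t)} is the weight of sample i in round t, h_t the weak learner trained in that round, ε_t its weighted error, α_t its vote weight, Z_t a normaliser that makes the new weights sum to 1, and H the final classifier over T rounds.

```latex
\begin{aligned}
w_i^{(1)} &= \frac{1}{n}, \\
\varepsilon_t &= \sum_{i=1}^{n} w_i^{(t)} \,\mathbf{1}\!\left[h_t(x_i) \neq y_i\right], \\
\alpha_t &= \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}, \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\!\left(-\alpha_t\, y_i\, h_t(x_i)\right)}{Z_t}, \\
H(x) &= \operatorname{sign}\!\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right).
\end{aligned}
```

Because y_i h_t(x_i) = −1 exactly when sample i is misclassified, the update multiplies a misclassified sample's weight by e^{α_t}, which is greater than 1 whenever ε_t < 1/2, and shrinks a correctly classified sample's weight by e^{−α_t}.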

Because each round concentrates on the errors left by the previous rounds, the ensemble's training error typically keeps decreasing as more learners are added.
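To make the loop concrete, here is a minimal from-scratch sketch of binary discrete AdaBoost with decision stumps in plain Python. It assumes numeric features and labels in {−1, +1}; all function names are our own and hypothetical, and the code favours readability over speed (in practice a library such as Scikit-learn is the better choice).

```python
import math


def train_stump(X, y, w):
    """Return the one-feature threshold rule with the lowest weighted error.

    X: list of numeric feature vectors, y: labels in {-1, +1},
    w: sample weights summing to 1. Result: (error, feature, threshold, polarity).
    """
    best = None
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for polarity in (1, -1):
                # The stump predicts +polarity when x[j] <= thr, else -polarity.
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if (polarity if xi[j] <= thr else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best


def stump_predict(stump, x):
    _, j, thr, polarity = stump
    return polarity if x[j] <= thr else -polarity


def adaboost_fit(X, y, n_rounds=10):
    """Train up to n_rounds stumps; return a list of (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n                    # start with equal sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump = train_stump(X, y, w)     # step 1: fit a weak learner
        err = stump[0]
        if err >= 0.5:                   # no better than chance: stop early
            break
        alpha = 0.5 * math.log((1 - err) / max(err, 1e-10))  # vote weight
        ensemble.append((alpha, stump))
        if err == 0:                     # perfect stump: later rounds add nothing
            break
        # step 2: up-weight misclassified samples, down-weight the rest
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]     # renormalise so weights sum to 1
    return ensemble


def adaboost_predict(ensemble, x):
    # step 3: weighted majority vote, i.e. the sign of the alpha-weighted sum
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    # Toy 1-D data: positives at both ends, negatives in the middle.
    X = [[1], [2], [3], [4], [5], [6]]
    y = [1, 1, -1, -1, 1, 1]
    model = adaboost_fit(X, y, n_rounds=3)
    print([adaboost_predict(model, x) for x in X])  # prints [1, 1, -1, -1, 1, 1]
```

No single threshold can separate the two positive ends from the negative middle of this toy data, so one stump classifies only 4 of the 6 points correctly, while three boosted stumps classify all six correctly.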

Different Types of AdaBoost

AdaBoost has several variations tailored to specific tasks:

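- Real AdaBoost: Weak learners output class-probability estimates rather than hard labels, and each contributes half the log-odds of its estimate to the ensemble score.

- Discrete AdaBoost: The original algorithm, in which each weak learner outputs a hard class label (+1 or −1) and the ensemble takes a weighted vote. Despite the name, it is not specific to categorical input data.

- AdaBoost.M1: Extends the algorithm to multi-class classification, provided each weak learner's weighted error stays below 1/2.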

These adaptations make AdaBoost versatile and effective across a wide range of applications.
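The variants differ mainly in what each weak learner contributes to the ensemble. The sketch below is a simplified illustration with our own hypothetical function names (none of them come from a library): Discrete AdaBoost scales a hard ±1 label by the learner weight α, Real AdaBoost adds half the log-odds of a probability estimate, and AdaBoost.M1 gives each learner a vote of log((1 − ε)/ε) for the class it predicts and picks the class with the largest total.

```python
import math


def discrete_vote(alpha, label):
    """Discrete AdaBoost: a hard label in {-1, +1} scaled by the learner weight."""
    return alpha * label


def real_vote(p_positive, clip=1e-12):
    """Real AdaBoost: half the log-odds of the learner's estimate of P(y=+1 | x)."""
    p = min(max(p_positive, clip), 1 - clip)  # clip to avoid log(0)
    return 0.5 * math.log(p / (1 - p))


def m1_weight(error):
    """AdaBoost.M1 vote weight log(1/beta), where beta = error / (1 - error)."""
    if not 0.0 < error < 0.5:
        raise ValueError("this sketch requires 0 < weighted error < 0.5")
    return math.log((1 - error) / error)


def m1_predict(votes):
    """votes: (predicted_class, learner_error) pairs for one sample.
    Returns the class with the largest total vote weight."""
    totals = {}
    for cls, error in votes:
        totals[cls] = totals.get(cls, 0.0) + m1_weight(error)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    print(real_vote(0.5))  # prints 0.0: an undecided learner contributes nothing
    # One accurate learner (error 0.2) outvotes two weaker ones (0.4 and 0.45).
    print(m1_predict([("cat", 0.2), ("dog", 0.4), ("dog", 0.45)]))  # prints cat
```

In the M1 example, the accurate learner's vote is log 4 ≈ 1.39, while the two weaker learners together contribute only about 0.61.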

Key Features of AdaBoost

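AdaBoost stands out for the following features:

- Adaptive Weighting: Reweights the training samples after every round so that later learners focus on the hardest examples.

- Compatibility with Weak Learners: Works with any learner that can be trained on weighted samples (or on a resample drawn according to the weights). Shallow decision trees, especially single-split stumps, are by far the most common choice.

- Ease of Tuning: The main settings are the number of rounds, the learning rate, and the complexity of the weak learner, so it often performs well with little tuning.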
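One of AdaBoost's few tuning knobs is a learning rate, a shrinkage factor applied to each learner's vote weight (Scikit-learn exposes it as the `learning_rate` parameter of AdaBoostClassifier). The plain-Python sketch below uses a hypothetical `reweight` helper of our own to show one reweighting step and how a smaller learning rate makes the shift towards misclassified samples gentler. It assumes the learner's weighted error is already known and uses the binary formula α = ½ ln((1 − ε)/ε); library implementations may scale α differently.

```python
import math


def reweight(weights, correct, error, learning_rate=1.0):
    """One AdaBoost reweighting step with shrinkage (illustrative helper).

    weights: current sample weights summing to 1
    correct: booleans, True where the weak learner classified the sample correctly
    error: that learner's weighted error, assumed to lie in (0, 0.5)
    Returns (alpha, new_weights).
    """
    alpha = learning_rate * 0.5 * math.log((1 - error) / error)
    new = [w * math.exp(-alpha if ok else alpha)  # shrink correct, grow wrong
           for w, ok in zip(weights, correct)]
    total = sum(new)
    return alpha, [w / total for w in new]


if __name__ == "__main__":
    weights = [0.25, 0.25, 0.25, 0.25]
    correct = [True, True, True, False]  # one miss -> weighted error 0.25
    for lr in (1.0, 0.5):
        alpha, new = reweight(weights, correct, error=0.25, learning_rate=lr)
        print(f"learning_rate={lr}: alpha={alpha:.3f}, "
              f"misclassified weight={new[3]:.3f}")
```

With a learning rate of 1.0 the misclassified sample's weight jumps from 0.25 to 0.5; with 0.5 it rises only to about 0.366. Smaller rates usually need more rounds but make each round's correction less aggressive.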

Popular Software and Tools for AdaBoost

AdaBoost is supported by numerous tools and frameworks, making it accessible for data scientists and developers:

- Scikit-learn (Python): Provides AdaBoostClassifier and AdaBoostRegressor for straightforward implementation.

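- XGBoost: Does not implement AdaBoost itself, but provides a highly optimised implementation of gradient boosting, which generalises the boosting idea behind AdaBoost to other loss functions.

- R Packages: ada implements discrete, real, and gentle AdaBoost, while gbm fits gradient boosted models and can use AdaBoost's exponential loss via distribution = "adaboost".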
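The link between AdaBoost and gradient boosting can be stated precisely: AdaBoost is equivalent to forward stage-wise additive modelling under the exponential loss, a result due to Friedman, Hastie, and Tibshirani. Gradient boosting libraries such as XGBoost generalise this by fitting each new learner using gradient information (in XGBoost's case, first- and second-order) of whichever differentiable loss is chosen. In the derivation below, in our own notation, F_{t−1} is the ensemble after t − 1 rounds, h_t(x) ∈ {−1, +1} the new learner, and ε_t its error under weights w_i^{(t)} normalised to sum to 1.

```latex
\begin{aligned}
L\big(y, F(x)\big) &= e^{-y F(x)}, \qquad F_t(x) = F_{t-1}(x) + \alpha_t h_t(x), \\
\sum_{i=1}^{n} e^{-y_i F_t(x_i)}
  &\propto \sum_{i=1}^{n} w_i^{(t)} e^{-\alpha_t y_i h_t(x_i)}
  = (1-\varepsilon_t)\, e^{-\alpha_t} + \varepsilon_t\, e^{\alpha_t},
  \qquad w_i^{(t)} \propto e^{-y_i F_{t-1}(x_i)}, \\
\frac{\partial}{\partial \alpha_t}\Big[(1-\varepsilon_t)\, e^{-\alpha_t} + \varepsilon_t\, e^{\alpha_t}\Big] = 0
  &\;\Longrightarrow\; \alpha_t = \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}.
\end{aligned}
```

The minimising step size is exactly AdaBoost's vote weight, and the weights w_i^{(t)} ∝ e^{−y_i F_{t−1}(x_i)} are exactly AdaBoost's sample weights, so running gradient-style boosting with this loss recovers AdaBoost.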

Applications in Australian Governmental Agencies

AdaBoost is well suited to prediction problems faced by Australian government agencies in several sectors, for example:

- Healthcare Analytics:
  - Application: Predicting patient readmission risk to target healthcare interventions and allocate resources.

- Traffic Flow Optimisation:
  - Application: Forecasting traffic patterns to inform infrastructure planning and reduce congestion.

- Educational Insights:
  - Application: Identifying at-risk students to design targeted support programs and allocate resources effectively.

Conclusion

AdaBoost remains a powerful tool for turning weak learners into strong, accurate ensemble models. Applications in healthcare, traffic optimisation, and education illustrate its versatility in solving real-world problems. With ready-made implementations in Scikit-learn and R, and closely related gradient boosting libraries such as XGBoost, AdaBoost is accessible to beginners and advanced practitioners alike, and it remains relevant in modern machine learning.
