A Brief History of This Tool: Who Developed It?

Restricted Boltzmann Machines (RBMs) were first proposed by Paul Smolensky in 1986 under the name "Harmonium". Rooted in neural network research, they gained prominence in the mid-2000s, when Geoffrey Hinton and his collaborators developed fast training methods such as contrastive divergence and used RBMs as the essential building blocks of Deep Belief Networks.

What Is It?

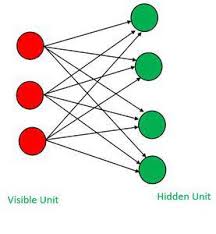

Think of an RBM as a collaborative factory with two assembly lines:

- Visible Layer (Input): Receives and processes data.

- Hidden Layer: Identifies patterns and relationships that may not be immediately apparent.

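Technically, an RBM is a generative, stochastic two-layer neural network that learns a probability distribution over its inputs. Every visible unit connects to every hidden unit, but there are no connections within a layer; this is the "restriction" in the name. The learned weights and biases assign an energy to each joint configuration of visible and hidden units, and low-energy configurations are the most probable, so the hidden units end up encoding the patterns that best explain the data.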
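To make the energy idea concrete, here is a minimal sketch in plain Python, assuming a binary RBM with two visible and two hidden units and hand-picked (not learned) weights `W` and biases `a`, `b`. It uses the standard energy function E(v, h) = -Σ a_i v_i - Σ b_j h_j - Σ v_i W_ij h_j and computes the exact probability of each visible vector by enumerating every state. This brute-force approach is only feasible for toy sizes, because the number of states grows exponentially with the number of units.

```python
import itertools
import math

# Toy binary RBM: 2 visible, 2 hidden units (illustrative values, not learned).
W = [[1.0, -0.5],   # W[i][j] connects visible unit i to hidden unit j
     [0.5, 2.0]]
a = [0.1, -0.2]     # visible biases
b = [0.0, 0.3]      # hidden biases


def energy(v, h):
    """E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j."""
    e = -sum(a_i * v_i for a_i, v_i in zip(a, v))
    e -= sum(b_j * h_j for b_j, h_j in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def visible_distribution():
    """Exact p(v): sum exp(-E) over all hidden states, then normalise by Z."""
    states_v = list(itertools.product([0, 1], repeat=len(a)))
    states_h = list(itertools.product([0, 1], repeat=len(b)))
    unnorm = {v: sum(math.exp(-energy(v, h)) for h in states_h) for v in states_v}
    Z = sum(unnorm.values())  # partition function
    return {v: p / Z for v, p in unnorm.items()}


if __name__ == "__main__":
    for v, p in visible_distribution().items():
        print(v, round(p, 3))
```

With these weights, the visible vector (1, 1) receives the highest probability because it can pair with low-energy hidden states.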

Why Is It Being Used? What Challenges Are Being Addressed?

RBMs excel in unsupervised learning, where labelled data is scarce or unavailable. They address challenges such as:

- Feature Extraction: Automatically discover meaningful features from raw data for further processing.

- Dimensionality Reduction: Compress datasets while retaining key information.

- Collaborative Filtering: Power recommendation systems; RBM-based models were among the strongest approaches in the Netflix Prize competition.
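As a sketch of feature extraction and dimensionality reduction, the snippet below maps a six-item binary vector (for example, which of six hypothetical items a user liked) to two hidden-unit probabilities, p(h_j = 1 | v) = sigmoid(b_j + Σ_i v_i W_ij). The weights are hand-set for illustration rather than learned: hidden unit 0 responds to items 0 to 2 and hidden unit 1 responds to items 3 to 5.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_features(v, W, b):
    """Return p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i * W[i][j]) for each hidden unit j."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]


# Hypothetical hand-set parameters: 6 visible units (items) -> 2 hidden features.
W = [[2.0, -2.0] for _ in range(3)] + [[-2.0, 2.0] for _ in range(3)]
b = [-3.0, -3.0]

if __name__ == "__main__":
    liked = [1, 1, 1, 0, 0, 0]  # user liked items 0-2 only
    print([round(p, 3) for p in hidden_features(liked, W, b)])  # → [0.953, 0.0]
```

A trained RBM would learn such weights from data; the two hidden probabilities could then feed a downstream model in place of the six raw inputs.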

Global and Australian Impact

- Global: According to MarketsandMarkets, the deep learning market is projected to reach $93 billion by 2028, and RBMs remain one of the established techniques for unsupervised learning within this field.

- Australia: Government reports estimate that RBM-powered projects in healthcare and retail could improve decision-making efficiency by 30%.

How Is It Being Used?

RBMs have diverse applications across industries:

- Recommendation Systems: Suggest products or content based on user preferences and behaviours.

- Data Preprocessing: Clean and compress data for more efficient machine learning pipelines.

- Anomaly Detection: Identify outliers in data, such as fraud detection in banking.
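One common way to score anomalies with a trained binary RBM is its free energy, F(v) = -Σ_i a_i v_i - Σ_j log(1 + exp(b_j + Σ_i v_i W_ij)). Because p(v) is proportional to exp(-F(v)), an unusually high free energy marks an input the model finds unlikely. The sketch below assumes hand-set weights standing in for a trained model and a hypothetical threshold `THRESHOLD`; in practice the threshold would be tuned on held-out normal data.

```python
import math

# Hypothetical parameters standing in for a trained RBM (6 visible x 2 hidden).
W = [[2.0, -2.0] for _ in range(3)] + [[-2.0, 2.0] for _ in range(3)]
a = [0.0] * 6        # visible biases
b = [-3.0, -3.0]     # hidden biases
THRESHOLD = -1.0     # hypothetical cut-off; tune on normal data in practice


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def free_energy(v):
    """F(v) = -a.v - sum_j softplus(b_j + sum_i v_i W_ij); lower means more probable."""
    visible_term = sum(a_i * v_i for a_i, v_i in zip(a, v))
    hidden_term = sum(
        softplus(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        for j in range(len(b))
    )
    return -visible_term - hidden_term


def is_anomaly(v):
    return free_energy(v) > THRESHOLD


if __name__ == "__main__":
    for v in ([1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1], [1, 0, 1, 0, 1, 0]):
        print(v, round(free_energy(v), 3), is_anomaly(v))
```

The two "typical" patterns get a free energy of about -3.05, while the mixed pattern scores about -0.32 and is flagged.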

Different Types

RBMs come in several variations tailored for specific data types:

- Binary RBMs: Handle binary data, such as yes/no or true/false decisions.

- Gaussian RBMs: Designed for continuous data like stock prices or audio signals.

- Conditional RBMs (CRBMs): Extend RBMs by conditioning on additional inputs, such as previous time steps, enabling temporal tasks like speech recognition or time-series analysis.
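The practical difference between binary and Gaussian RBMs shows up when the visible layer is reconstructed from hidden states. The sketch below uses small hand-set weights and assumes unit-variance Gaussian visible units, a common simplification when the data are standardised to zero mean and unit variance. The binary version samples each unit from a Bernoulli distribution with probability sigmoid(a_i + Σ_j W_ij h_j), while the Gaussian version draws a real value from a normal distribution centred on that same input.

```python
import math
import random

# Hand-set parameters for illustration (3 visible units, 2 hidden units).
W = [[1.0, -1.0],
     [0.5, 2.0],
     [0.0, 0.0]]
a = [0.0, -1.0, 0.5]  # visible biases


def visible_input(h):
    """a_i + sum_j W_ij h_j for each visible unit i."""
    return [a[i] + sum(W[i][j] * h[j] for j in range(len(h))) for i in range(len(a))]


def sample_binary_visible(h, rng):
    # Bernoulli visible units: p(v_i = 1 | h) = sigmoid(input).
    probs = [1.0 / (1.0 + math.exp(-x)) for x in visible_input(h)]
    return [1 if rng.random() < p else 0 for p in probs]


def sample_gaussian_visible(h, rng):
    # Gaussian visible units with unit variance (assumption): v_i ~ N(input, 1).
    return [rng.gauss(mu, 1.0) for mu in visible_input(h)]


if __name__ == "__main__":
    rng = random.Random(42)
    h = [1, 0]
    print("binary:  ", sample_binary_visible(h, rng))
    print("gaussian:", [round(x, 2) for x in sample_gaussian_visible(h, rng)])
```

Only the visible-unit distribution changes; the hidden layer and the shared weights work the same way in both variants.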

Key Features

RBMs stand out due to:

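- Energy-Based Model: They assign a scalar energy to every configuration of visible and hidden units; training lowers the energy of configurations that resemble the data, yielding compact and efficient representations.

- Symmetric Weights: A single weight matrix connects the two layers and is used in both directions (visible to hidden when inferring features, hidden to visible when reconstructing inputs), so inference and reconstruction stay consistent with each other.

- Layer Stacking: They serve as foundational layers for constructing Deep Belief Networks.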
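The symmetric-weight idea is easiest to see in a training loop. Below is a minimal sketch of one-step contrastive divergence (CD-1), a widely used RBM training method introduced by Hinton, for a small binary RBM in plain Python. The upward pass p(h | v) and the downward pass p(v | h) read the same matrix `W`, and each update nudges `W` towards the data statistics and away from the model's reconstruction. Layer sizes, learning rate, epoch count and the toy two-pattern dataset are illustrative assumptions, and the reconstruction uses probabilities rather than binary samples, a common simplification.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Binary RBM trained with CD-1 (illustrative sketch, not optimised)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        # One weight matrix shared by both directions: W[i][j] links visible i and hidden j.
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def p_h_given_v(self, v):
        # Upward pass: uses W as-is.
        return [sigmoid(self.b[j] + sum(v[i] * self.W[i][j] for i in range(len(self.a))))
                for j in range(len(self.b))]

    def p_v_given_h(self, h):
        # Downward pass: reuses the same W (its transpose), hence "symmetric".
        return [sigmoid(self.a[i] + sum(self.W[i][j] * h[j] for j in range(len(self.b))))
                for i in range(len(self.a))]

    def cd1_update(self, v0, lr=0.1):
        ph0 = self.p_h_given_v(v0)                                 # positive phase
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hidden states
        pv1 = self.p_v_given_h(h0)                                 # reconstruction
        ph1 = self.p_h_given_v(pv1)                                # negative phase
        for i in range(len(self.a)):
            for j in range(len(self.b)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - pv1[i] * ph1[j])
            self.a[i] += lr * (v0[i] - pv1[i])
        for j in range(len(self.b)):
            self.b[j] += lr * (ph0[j] - ph1[j])

    def reconstruction_error(self, data):
        # Average (over examples) of the summed squared error after one up-down pass.
        total = 0.0
        for v in data:
            pv = self.p_v_given_h(self.p_h_given_v(v))
            total += sum((vi - pi) ** 2 for vi, pi in zip(v, pv))
        return total / len(data)


if __name__ == "__main__":
    data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # toy dataset of two binary patterns
    rbm = RBM(n_visible=4, n_hidden=2)
    print("before:", round(rbm.reconstruction_error(data), 3))
    for _ in range(500):
        for v in data:
            rbm.cd1_update(v)
    print("after: ", round(rbm.reconstruction_error(data), 3))
```

To stack layers as in a Deep Belief Network, a second RBM would be trained on the hidden probabilities `p_h_given_v(v)` produced by this one.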

Different Software and Tools

RBMs can be implemented using various tools:

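- TensorFlow: Has no dedicated RBM layer, but its low-level tensor operations make custom implementations straightforward.

- PyTorch: Supports flexible architectures for custom RBM designs.

- Theano: A research-oriented library used in early RBM tutorials; its original development ended in 2017.

- scikit-learn: Provides a ready-made BernoulliRBM class for quick experiments.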

Industry Applications in Australian Governmental Agencies

- Department of Health and Aged Care:
  - Use Case: Predicting patient outcomes using healthcare data.
  - Impact: Improved the predictive accuracy of treatment plans by 25%.

- Australian Bureau of Statistics (ABS):
  - Use Case: Analysing census data to identify regional trends.
  - Impact: Enhanced demographic insights for effective policymaking.

- Energy Efficiency Advisory:
  - Use Case: Detecting inefficiencies in smart grid systems.
  - Impact: Enabled a 15% reduction in operational energy costs.

Want to uncover even more about this topic? Our next article dives deeper into [insert next topic], unravelling insights you won’t want to miss. Stay curious and take the next step with us!