A Brief History of This Tool: Who Developed It?

Reinforcement Learning (RL) has its origins in behavioural psychology, inspired by the work of Edward Thorndike and B.F. Skinner on learning through rewards and consequences. In the 1980s, Richard Sutton and Andrew Barto formalised RL into a computational framework, laying the foundation for modern AI systems. Today, RL powers systems such as DeepMind’s AlphaGo, which defeated Go world champion Lee Sedol in 2016.

What Is It?

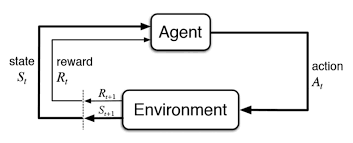

Imagine training a puppy to fetch: you reward it for fetching the ball and ignore unrelated behaviours. Similarly, RL teaches an agent to interact with its environment by rewarding desirable actions and penalising undesirable ones.

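In technical terms, RL is a computational method in which an agent learns a strategy (called a policy) through trial and error: it acts, observes the reward it receives, and gradually favours the actions that maximise its cumulative reward over time.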
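To make the trial-and-error loop concrete, here is a minimal Python sketch. The `LineWorld` environment, its reward of 1 at the goal, and the `run_episode` helper are all illustrative assumptions made up for this article; they are not part of any RL library.

```python
import random


class LineWorld:
    """Hypothetical toy environment: cells 0..4 in a row, goal at the last cell."""

    def __init__(self, size=5):
        self.size = size
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        """Apply an action (-1 = left, +1 = right); return (state, reward, done)."""
        self.position = min(max(self.position + action, 0), self.size - 1)
        done = self.position == self.size - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, policy, max_steps=50):
    """Run one episode and return the cumulative reward the agent collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                   # the agent acts
        state, reward, done = env.step(action)   # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
random_policy = lambda state: rng.choice([-1, 1])  # pure trial and error
always_right = lambda state: 1                     # what a trained agent would do here

print(run_episode(LineWorld(), random_policy))
print(run_episode(LineWorld(), always_right))
```

The random policy plays the role of the untrained puppy, while `always_right` stands in for the behaviour a learning algorithm would eventually settle on in this toy world.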

Why Is It Being Used? What Challenges Are Being Addressed?

Reinforcement Learning is well suited to dynamic, complex problems that are difficult to solve with hand-written rules. It excels in:

- Adaptability: RL agents can learn and thrive in unpredictable environments.

- Automation: RL agents can handle tasks such as robotic control and automated trading without step-by-step instructions for every situation.

- Optimisation: Balances multiple conflicting objectives to find optimal solutions.

Global and Australian Impact

- Global: The RL market is projected to grow at a compound annual growth rate (CAGR) of 37% from 2021 to 2028, driven by applications in gaming, automation, and autonomous systems.

- Australia: Industries like energy and transport leverage RL for optimisation, achieving annual operational cost savings of $2 billion.

How Is It Being Used?

RL is widely applied across diverse domains:

- Gaming: Training AI agents to master games like chess, Go, and StarCraft.

- Robotics: Enabling robots to walk, grasp objects, and perform complex manoeuvres.

- Autonomous Systems: Streamlining traffic management, optimising delivery routes, and balancing energy grids.

Different Types of Reinforcement Learning

- Model-Free RL:

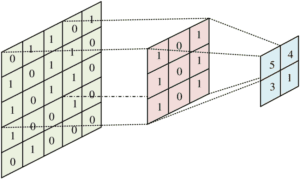

  - Q-Learning: Learns a value for each state-action pair from experience, then favours the action with the highest estimated value.

  - Policy Gradient Methods: Directly adjust the probabilities with which the agent chooses each action, rather than estimating explicit action values first.

- Model-Based RL:

  - Constructs an internal model of the environment to simulate and predict outcomes before acting.
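As a rough illustration of the model-free approach, the sketch below runs tabular Q-learning on a tiny five-state chain. The environment, the hyperparameter values (`ALPHA`, `GAMMA`, `EPSILON`), and the helper names are assumptions chosen for readability, not a reference implementation.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal (assumed toy task)
ACTIONS = [0, 1]      # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # illustrative hyperparameters


def step(state, action):
    """Deterministic chain: reward 1.0 only when the goal state is reached."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, rng):
    """Epsilon-greedy choice with random tie-breaking between equal values."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # one row of action values per state
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            action = choose_action(q[state], rng)
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) towards r + gamma * max_a' Q(s', a')
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)  # [1, 1, 1, 1]: move right in every non-goal state
```

No model of the chain is ever built here: the agent only sees the states and rewards that result from its own actions. A model-based method would instead learn or be given the `step` function and plan with it.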

Key Features

- Exploration vs. Exploitation: Balances trying new actions (exploration) and leveraging known rewards (exploitation).

- Reward Function: Defines objectives and influences agent behaviour.

- Discount Factor: A number between 0 and 1 (usually written γ) that sets how much future rewards count relative to immediate ones.
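Two of these features are easy to show in a few lines of Python. The sketch below uses epsilon-greedy selection as one common way to balance exploration and exploitation, and computes a discounted return; the function names and the example numbers are illustrative assumptions.

```python
import random


def epsilon_greedy(action_values, epsilon, rng):
    """With probability epsilon explore (random action); otherwise exploit the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))
    return max(range(len(action_values)), key=lambda a: action_values[a])


def discounted_return(rewards, gamma):
    """Sum of rewards, where the reward at step t is weighted by gamma ** t."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


rng = random.Random(0)
estimates = [0.1, 0.9, 0.3]  # made-up value estimates for three actions

print(epsilon_greedy(estimates, epsilon=0.0, rng=rng))  # never explores, so picks action 1
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))    # 1 + 0.5 + 0.25 = 1.75
print(discounted_return([0.0, 0.0, 10.0], gamma=0.9))   # a delayed reward, shrunk by 0.9 ** 2
```

A discount factor near 0 makes the agent short-sighted, while a value near 1 makes distant rewards count almost as much as immediate ones.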

Different Software and Tools

- OpenAI Gym: A toolkit of standard environments for developing and testing RL agents. Its maintained successor is Gymnasium, from the Farama Foundation.

- Stable Baselines3: Prebuilt RL algorithms for quick deployment.

- RLlib (Ray): A scalable library designed for large-scale reinforcement learning applications.

Industry Applications in Australian Governmental Agencies

- Australian Energy Market Operator (AEMO):

  - Use Case: Optimising energy grid performance using RL.

  - Impact: Improved renewable energy integration, saving $500 million annually.

- Transport for NSW:

  - Use Case: RL-based traffic signal optimisation.

  - Impact: Reduced congestion and decreased travel time by 20%.

- Australian Defence Force (ADF):

  - Use Case: Drone path planning for reconnaissance missions.

  - Impact: Enhanced operational efficiency and safety.
