A Brief History of This Tool: Who Developed It?

Long Short-Term Memory (LSTM) networks were introduced in 1997 by Sepp Hochreiter and Jürgen Schmidhuber. Their design tackled a long-standing problem in machine learning: traditional Recurrent Neural Networks (RNNs) struggle to learn long-term dependencies, that is, links between events that are many time steps apart. LSTM went on to become a standard tool for modelling sequential data, driving major advances in fields such as speech recognition and time-series forecasting.

What Is It?

Picture your mind as a filing cabinet: some drawers hold short-term information (like a phone number you only need for a minute), while others store long-term knowledge. An LSTM works in a similar way, deciding at each step what to keep in memory and what to discard. It is a specialised type of RNN, designed to capture relationships between elements of a sequence even when they are far apart in time.

Why Is It Being Used? What Challenges Are Being Addressed?

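Traditional RNNs often suffer from the vanishing gradient problem: during training, the error signal is multiplied by a factor at every time step as it flows backwards, so over a long sequence it shrinks exponentially and the network fails to learn long-term dependencies. LSTM’s gating mechanism, together with its additively updated memory cell, largely mitigates this limitation and allows information to be retained over much longer sequences. This is vital for tasks like language translation, weather prediction, and fraud detection, where recognising patterns over time is crucial.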
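To make the vanishing gradient concrete, here is a deliberately simplified sketch in plain Python. It assumes scalar weights, a constant input of 0.5 at every step and the hypothetical helper names shown, and it compares how much gradient survives 100 steps along a plain tanh RNN versus along the LSTM memory cell's direct path, where each step multiplies by the forget-gate value. It ignores the LSTM's other gradient paths (through the hidden state), so treat it as an intuition aid rather than a full analysis.

```python
import math


def rnn_state_gradient(w: float, inputs: list[float]) -> float:
    """Return d h_T / d h_0 for a scalar RNN h_t = tanh(w * h_{t-1} + x_t), with h_0 = 0."""
    h = 0.0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), whose magnitude never exceeds |w|
        grad *= w * (1.0 - h * h)
    return grad


def lstm_cell_path_gradient(forget_gates: list[float]) -> float:
    """Return d c_T / d c_0 along the direct path of c_t = f_t * c_{t-1} + i_t * g_t.

    Simplification (assumption): f_t, i_t and g_t are treated as constants,
    ignoring their own dependence on earlier states through h_{t-1}.
    """
    grad = 1.0
    for f in forget_gates:
        grad *= f  # each step scales the gradient by the forget-gate value
    return grad


steps = 100
print(f"plain RNN:      {rnn_state_gradient(0.9, [0.5] * steps):.3e}")
print(f"LSTM cell path: {lstm_cell_path_gradient([0.99] * steps):.3e}")
```

With a forget gate held near 1, the LSTM path keeps roughly 0.37 of the signal (0.99^100 ≈ 0.366), while the plain RNN's factor collapses by dozens of orders of magnitude.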

How Is It Being Used?

LSTM networks are employed in various domains:

- Speech Recognition: Transcribing spoken words into text.

- Natural Language Processing (NLP): Powering chatbots, translation tools, and text summarisation.

- Time-Series Analysis: Forecasting values such as stock prices or sales from historical trends.
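For time-series work, a common first step is to turn one long series into fixed-length input windows, each paired with the value that follows it; this is the supervised form an LSTM is typically trained on for one-step-ahead forecasting. The minimal sketch below uses plain Python; the function name `make_windows`, the window length and the sales figures are illustrative assumptions, not part of any library or real dataset.

```python
def make_windows(series: list[float], window: int) -> tuple[list[list[float]], list[float]]:
    """Split a series into (input window, next value) pairs for one-step-ahead forecasting."""
    if window < 1:
        raise ValueError("window must be at least 1")
    inputs, targets = [], []
    # Slide a window of `window` past values along the series; the value right after it is the target
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])
        targets.append(series[start + window])
    return inputs, targets


monthly_sales = [120.0, 135.0, 128.0, 150.0, 162.0, 158.0]  # made-up example data
X, y = make_windows(monthly_sales, window=3)
for past, nxt in zip(X, y):
    print(past, "->", nxt)
```

With `window=3`, the six sample values give three training pairs, the first being `[120.0, 135.0, 128.0] -> 150.0`. Each window becomes one input sequence and each target one label; in a real pipeline the values would usually be scaled before training.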

Different Types

LSTM variations include:

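- Bidirectional LSTM: Processes the sequence in both the forward and backward directions, so each output draws on both past and future context.

- Stacked LSTM: Chains multiple LSTM layers, each feeding its output sequence into the next, to capture more complex patterns.

- Peephole LSTM: Adds "peephole" connections that let the gates read the memory cell directly, which can help on tasks that depend on precise timing.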
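The bidirectional and stacked variants are mainly about how layers are wired together, which the following sketch illustrates. It is a simplified, hypothetical implementation: each layer has a single hidden unit with scalar weights, the parameter values are arbitrary rather than trained, and the names `lstm_layer`, `stacked_lstm` and `bidirectional_lstm` are made up for illustration. Real libraries use vectors and learned weight matrices.

```python
import math

# (input weight, recurrent weight, bias) for one gate; values used below are illustrative, not trained
Gate = tuple[float, float, float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_layer(xs: list[float], params: dict[str, Gate]) -> list[float]:
    """Run a one-unit LSTM over a sequence and return the hidden state at every step."""
    h, c = 0.0, 0.0
    outputs = []
    for x in xs:
        # Pre-activation for each gate: w * x_t + u * h_{t-1} + b
        z = {name: w * x + u * h + b for name, (w, u, b) in params.items()}
        f, i, o = sigmoid(z["f"]), sigmoid(z["i"]), sigmoid(z["o"])
        g = math.tanh(z["g"])
        c = f * c + i * g        # update the memory cell
        h = o * math.tanh(c)     # expose part of it as the hidden state
        outputs.append(h)
    return outputs


def stacked_lstm(xs: list[float], layers: list[dict[str, Gate]]) -> list[float]:
    """Stacked LSTM: each layer's output sequence becomes the next layer's input sequence."""
    for params in layers:
        xs = lstm_layer(xs, params)
    return xs


def bidirectional_lstm(xs: list[float], forward_params: dict[str, Gate],
                       backward_params: dict[str, Gate]) -> list[tuple[float, float]]:
    """Bidirectional LSTM: pair each step's forward state with the backward state for that step."""
    forward = lstm_layer(xs, forward_params)
    # A second LSTM reads the reversed sequence; flip its outputs back into time order
    backward = lstm_layer(xs[::-1], backward_params)[::-1]
    # With one unit per direction, each pair is the "concatenated" output for that time step
    return list(zip(forward, backward))


params = {"f": (0.5, 0.5, 1.0), "i": (0.5, 0.5, 0.0), "g": (1.0, 0.5, 0.0), "o": (0.5, 0.5, 0.0)}
seq = [1.0, 0.0, -1.0, 0.5]
print(stacked_lstm(seq, [params, params]))
print(bidirectional_lstm(seq, params, params))
```

In practice these wirings come ready-made: for example, PyTorch's torch.nn.LSTM accepts `num_layers` and `bidirectional=True` arguments, and Keras provides a `Bidirectional` wrapper layer.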

Different Features

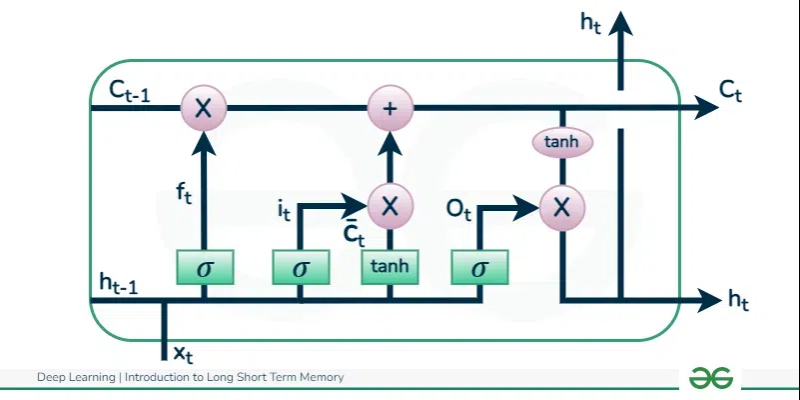

LSTM’s design includes:

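- Forget Gate: Decides which parts of the stored memory to discard (a later addition to the 1997 design, introduced by Gers, Schmidhuber and Cummins in 2000).

- Input Gate: Decides how much new candidate information to write into memory.

- Output Gate: Controls how much of the memory is exposed as the hidden state passed to the next time step or layer.

- Memory Cell: The cell state that carries information across time steps; the gates update it by adding and removing information rather than overwriting it.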
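The gates listed above can be sketched as a single LSTM time step. This is a minimal illustration, assuming one hidden unit with scalar weights; the function `lstm_step`, the parameter dictionary layout and all weight values are hypothetical choices for readability. An optional `peephole` flag shows the peephole variant, in which the forget and input gates also read the previous memory cell and the output gate reads the updated one.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float, p: dict, peephole: bool = False):
    """One time step of a single-unit LSTM. Returns (h, c, gate_values).

    p maps "f", "i", "g", "o" to (input weight, recurrent weight, bias); the peephole
    variant also needs scalar weights "pf", "pi", "po". All values are illustrative.
    """

    def gate(name: str, cell: float) -> float:
        w, u, b = p[name]
        z = w * x + u * h_prev + b
        if peephole:
            z += p["p" + name] * cell  # peephole connection: the gate also reads the memory cell
        return sigmoid(z)

    f = gate("f", c_prev)                  # forget gate: fraction of the old memory to keep
    i = gate("i", c_prev)                  # input gate: fraction of the candidate to write
    w, u, b = p["g"]
    g = math.tanh(w * x + u * h_prev + b)  # candidate values proposed for the memory
    c = f * c_prev + i * g                 # memory cell: keep part of the old, add part of the new
    o = gate("o", c)                       # output gate (the peephole variant reads the updated cell)
    h = o * math.tanh(c)                   # hidden state passed to the next step or layer
    return h, c, {"forget": f, "input": i, "candidate": g, "output": o}


params = {
    "f": (0.5, 0.5, 1.0),  # positive forget bias: lean towards remembering
    "i": (0.5, 0.5, 0.0),
    "g": (1.0, 0.5, 0.0),
    "o": (0.5, 0.5, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.0, -1.0]:
    h, c, gates = lstm_step(x, h, c, params)
    summary = "  ".join(f"{name}={value:.2f}" for name, value in gates.items())
    print(f"x={x:+.1f}  h={h:+.3f}  c={c:+.3f}  {summary}")
```

Because the memory cell is updated by multiplying by the forget gate and adding new content, rather than being recomputed from scratch, a forget gate close to 1 lets information persist across many steps.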

Different Software and Tools for LSTM

Tools supporting LSTM implementation include:

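- TensorFlow: Provides a built-in LSTM layer (tf.keras.layers.LSTM) that is ready to use in larger models.

- PyTorch: Provides torch.nn.LSTM and is flexible for building custom models.

- Keras: A beginner-friendly, high-level API (also available in TensorFlow as tf.keras) for rapid prototyping.

- Scikit-Learn: Does not provide LSTM layers itself, but is often used alongside the libraries above for preprocessing (such as feature scaling) and model evaluation.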

Three Application Examples in Australian Government Agencies

- Bureau of Meteorology:
  - Use Case: Using LSTM for more precise weather forecasting by analysing historical patterns.

- Department of Transport:
  - Use Case: Optimising public transport scheduling based on LSTM-powered traffic predictions.

- Australian Taxation Office (ATO):
  - Use Case: Employing LSTM to detect fraudulent patterns in transaction data.