A Brief History of This Tool: Who Developed It?

Sparse autoencoders emerged in the 2000s as a variant of the autoencoder designed for efficient feature extraction. Influential work by Andrew Ng and colleagues at Stanford University played a key role in their development and popularisation. The aim was to learn useful features automatically from unlabelled, high-dimensional data such as images, rather than relying on hand-engineered ones.

What Is It?

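Think of a sparse autoencoder as a minimalist artist who sketches only the essential details needed to convey an image. In machine learning terms, it is a neural network trained to reconstruct its own input after passing it through a hidden layer in which only a few units are active for any given input. Because the sparsity constraint, rather than a small hidden layer, forces the network to be selective, the hidden layer can even be larger than the input. The resulting representation emphasises the most informative features and discards redundant or less relevant detail.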
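In symbols, one common formulation trains on m examples to minimise the objective J below. It assumes a sigmoid encoder, a linear decoder and a Kullback–Leibler (KL) divergence sparsity penalty. Other activations, decoders and penalties are also used, so treat this as one illustrative choice rather than the only definition.

```latex
\begin{aligned}
\mathbf{h} &= \sigma\!\left(W_1 \mathbf{x} + \mathbf{b}_1\right), \qquad
\hat{\mathbf{x}} = W_2 \mathbf{h} + \mathbf{b}_2, \\
\hat{\rho}_j &= \frac{1}{m} \sum_{i=1}^{m} h_j\!\left(\mathbf{x}^{(i)}\right), \\
J &= \frac{1}{m} \sum_{i=1}^{m} \frac{1}{2} \left\lVert \hat{\mathbf{x}}^{(i)} - \mathbf{x}^{(i)} \right\rVert^2
   + \beta \sum_{j=1}^{k} \left[ \rho \log \frac{\rho}{\hat{\rho}_j}
   + (1 - \rho) \log \frac{1 - \rho}{1 - \hat{\rho}_j} \right]
\end{aligned}
```

Here k is the number of hidden units, which may exceed the input dimension. ρ is a small target average activation (for example 0.05), ρ̂_j is hidden unit j's actual average activation over the training examples, and β sets how strongly sparsity is enforced relative to reconstruction accuracy.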

Why Is It Being Used? What Challenges Are Being Addressed?

Sparse autoencoders are used to tackle:

- Feature Learning: Automatically learning meaningful features from raw, often unlabelled data, rather than relying on hand-engineered ones.

- High-Dimensional Data: Simplifying datasets with numerous variables, such as images or genomic data.

- Improved Generalisation: Encouraging models to focus on key patterns, which can reduce overfitting and improve the accuracy of downstream models.

These properties make sparse autoencoders well suited to applications in finance, healthcare, and government analytics.

How Is It Being Used?

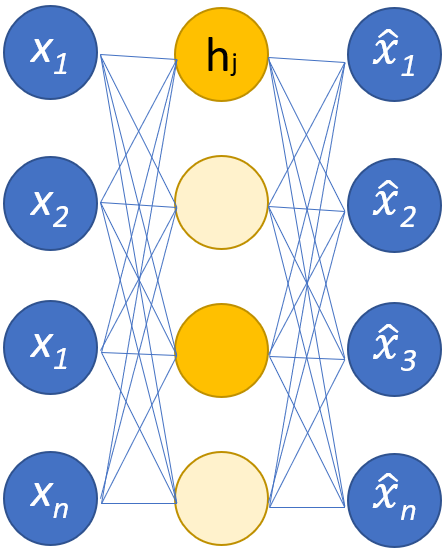

Sparse autoencoders work by:

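- Adding a Sparsity Constraint: Including a penalty term in the training loss, such as an L1 penalty on hidden activations or a KL-divergence penalty on each hidden unit's average activation, so that only a few hidden units are strongly active for any given input.

- Learning Sparse Representations: Encoding input data into a latent (hidden) representation during the encoder phase.

- Reconstructing Data: Decoding the latent representation back into the input space. Training minimises the reconstruction error plus the sparsity penalty, so the few active units must preserve the most important features.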
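The three steps above can be sketched in NumPy. This is a minimal, illustrative implementation, not a reference one. It assumes a sigmoid encoder, a linear decoder, a KL-divergence sparsity penalty with target activation rho, full-batch gradient descent and no weight decay. The function names (train_sparse_autoencoder, encode) and the hyperparameter values are hypothetical choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def kl_sparsity(rho, rho_hat):
    """Sum over hidden units of KL(rho || rho_hat_j) between Bernoulli distributions."""
    return np.sum(rho * np.log(rho / rho_hat)
                  + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))


def train_sparse_autoencoder(X, n_hidden, rho=0.05, beta=3.0, lr=0.1,
                             n_iters=2000, seed=0):
    """Hypothetical helper: full-batch gradient descent on half the squared
    reconstruction error (averaged over examples) + beta * KL sparsity penalty.
    Assumptions: sigmoid encoder, linear decoder, no weight decay."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W1 = rng.normal(0.0, 0.1, size=(n, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.1, size=(n_hidden, n))
    b2 = np.zeros(n)
    losses = []
    for _ in range(n_iters):
        # Encoder phase: hidden activations, shape (m, n_hidden).
        H = sigmoid(X @ W1 + b1)
        # Decoder phase: reconstruction, shape (m, n).
        X_hat = H @ W2 + b2
        # Average activation of each hidden unit over the batch.
        rho_hat = H.mean(axis=0)
        recon = 0.5 * np.sum((X_hat - X) ** 2) / m
        losses.append(recon + beta * kl_sparsity(rho, rho_hat))

        # Backpropagation of both loss terms.
        d_out = (X_hat - X) / m
        dW2 = H.T @ d_out
        db2 = d_out.sum(axis=0)
        # Derivative of the KL term w.r.t. rho_hat_j; each example
        # contributes 1/m of it to its own hidden activations.
        d_rho_hat = beta * (-rho / rho_hat + (1 - rho) / (1 - rho_hat))
        dH = d_out @ W2.T + d_rho_hat / m
        dZ1 = dH * H * (1 - H)  # sigmoid'(z) = h * (1 - h)
        dW1 = X.T @ dZ1
        db1 = dZ1.sum(axis=0)

        # Plain gradient-descent update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses


def encode(params, X):
    """Return the sparse hidden representation of X."""
    W1, b1, _, _ = params
    return sigmoid(X @ W1 + b1)


# Toy usage on random data in [0, 1]; the hidden layer (16) is larger than the input (8).
X = np.random.default_rng(1).random((200, 8))
params, losses = train_sparse_autoencoder(X, n_hidden=16)
codes = encode(params, X)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, mean activation: {codes.mean():.3f}")
```

The hidden layer here is deliberately overcomplete, so the only thing stopping it from simply copying the input is the sparsity penalty. After training, the mean hidden activation should have fallen from about 0.5 towards the target rho.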

Applications include:

- Feature Engineering: Extracting features for machine learning pipelines.

- Image Compression: Reducing the size of image datasets for storage or transmission.

- Pattern Recognition: Identifying key patterns in complex datasets.

Different Types

Sparse autoencoders come in a few forms:

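- Standard Sparse Autoencoders: A conventional autoencoder, typically with a single hidden layer, trained with a sparsity penalty on its hidden activations.

- Convolutional Sparse Autoencoders: Use convolutional encoder and decoder layers, which share weights across spatial positions, and apply the sparsity constraint to the resulting feature maps. They suit spatially structured data such as images.

- Stacked Sparse Autoencoders: Several sparse autoencoders trained one after another, each on the hidden representations produced by the previous one, to capture increasingly abstract, hierarchical features.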
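The stacked variant is commonly trained greedily, layer by layer. You train one sparse autoencoder, use its hidden activations as training data for the next, and keep only the encoders. The NumPy sketch below assumes a sigmoid encoder and a linear decoder. For brevity, it uses an L1 penalty on hidden activations in place of the KL penalty. The names train_layer, train_stack and encode_stack and all hyperparameters are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_layer(X, n_hidden, l1=0.01, lr=0.1, n_iters=1000, seed=0):
    """Hypothetical helper: train one sparse autoencoder layer (sigmoid encoder,
    linear decoder, L1 penalty on hidden activations) with full-batch gradient
    descent, and return only its encoder weights and biases."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W1 = rng.normal(0.0, 0.1, size=(n, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.1, size=(n_hidden, n))
    b2 = np.zeros(n)
    for _ in range(n_iters):
        H = sigmoid(X @ W1 + b1)
        X_hat = H @ W2 + b2
        d_out = (X_hat - X) / m
        # Sigmoid activations are positive, so the gradient of
        # l1 * mean_i sum_j H_ij with respect to each H_ij is l1 / m.
        dH = d_out @ W2.T + l1 / m
        dZ1 = dH * H * (1 - H)
        W2 -= lr * (H.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * (X.T @ dZ1)
        b1 -= lr * dZ1.sum(axis=0)
    return W1, b1


def train_stack(X, layer_sizes, **kwargs):
    """Greedy layer-wise training: each layer learns from the previous layer's codes."""
    encoders, inputs = [], X
    for size in layer_sizes:
        W, b = train_layer(inputs, size, **kwargs)
        encoders.append((W, b))
        inputs = sigmoid(inputs @ W + b)  # codes become the next layer's training data
    return encoders


def encode_stack(encoders, X):
    """Pass X through every trained encoder in turn."""
    for W, b in encoders:
        X = sigmoid(X @ W + b)
    return X


X = np.random.default_rng(1).random((200, 16))
encoders = train_stack(X, layer_sizes=[12, 6])
features = encode_stack(encoders, X)
print(features.shape)  # (200, 6)
```

In practice, the stacked encoder is often fine-tuned end to end afterwards, for example together with a classifier on top. That step is not shown here.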

Different Features

Key features of sparse autoencoders include:

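- Sparsity Constraints: Penalise hidden activity so that each input activates only a small number of hidden units, highlighting essential data features.

- Latent Space Representation: Encodes data into a representation that preserves critical information. It is often, but not necessarily, lower-dimensional than the input.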

- Flexibility: Can be applied to different data types, including text, images, and numerical data.
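Sparsity constraints are usually implemented as an extra term in the loss. The plain-Python sketch below compares two common choices. The first is an L1 penalty on activations. The second is a KL-divergence penalty that pulls each hidden unit's average activation towards a small target rho. The function names and the toy activation values are hypothetical, chosen only for illustration.

```python
import math


def l1_penalty(activations, weight):
    """weight * (sum of |activation| per example), averaged over examples."""
    return weight * sum(sum(abs(a) for a in row) for row in activations) / len(activations)


def kl_penalty(activations, rho, weight):
    """weight * sum over hidden units j of KL(rho || rho_hat_j), where rho_hat_j is
    unit j's mean activation; activations must lie strictly between 0 and 1."""
    m = len(activations)
    total = 0.0
    for j in range(len(activations[0])):
        rho_hat = sum(row[j] for row in activations) / m
        total += (rho * math.log(rho / rho_hat)
                  + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))
    return weight * total


# Toy hidden activations: 2 examples x 2 hidden units (hypothetical values).
dense = [[0.5, 0.5], [0.5, 0.5]]
at_target = [[0.05, 0.05], [0.05, 0.05]]
too_sparse = [[0.01, 0.01], [0.01, 0.01]]

for name, acts in [("dense", dense), ("at_target", at_target), ("too_sparse", too_sparse)]:
    print(f"{name:>10}: L1 = {l1_penalty(acts, 1.0):.3f}, KL = {kl_penalty(acts, 0.05, 1.0):.3f}")
```

The design difference shows up in the too_sparse case. L1 always rewards smaller activations, whereas the KL penalty is zero only when the average activation equals the target. It therefore also penalises units that fire less often than rho, which helps keep hidden units from switching off entirely.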

Different Software and Tools for Sparse Autoencoders

Sparse autoencoders can be implemented using:

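- TensorFlow: Offers tools for custom model design, including custom loss terms for implementing sparsity penalties.

- PyTorch: Provides dynamic support for neural network customisation, so a sparsity penalty can be added directly to the loss in the training loop.

- Keras: Simplifies implementation with pre-built layers. A layer's activity_regularizer argument (for example, an L1 regulariser) adds a sparsity penalty on that layer's activations.

- Scikit-learn: Has no dedicated autoencoder class. MLPRegressor can be trained to reconstruct its own input for small experiments, although it offers no penalty on hidden activations. DictionaryLearning implements the closely related technique of sparse coding.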

Three Example Applications in Australian Government Agencies

- Australian Bureau of Statistics (ABS):

  - Use Case: Using sparse autoencoders to simplify and compress large-scale census data for efficient analysis and storage.

- Geoscience Australia:

  - Use Case: Extracting key geological features from high-dimensional satellite imagery to improve land-use and environmental planning.

- Department of Health:

  - Use Case: Employing sparse autoencoders to identify critical biomarkers in genomic data for disease prediction and prevention.