A Brief History of This Tool: Who Developed It?

Q-Learning, a groundbreaking reinforcement learning algorithm, was introduced by Chris Watkins in 1989 during his PhD research. This tool revolutionised decision-making systems, making it possible to learn optimal strategies without requiring a model of the environment.

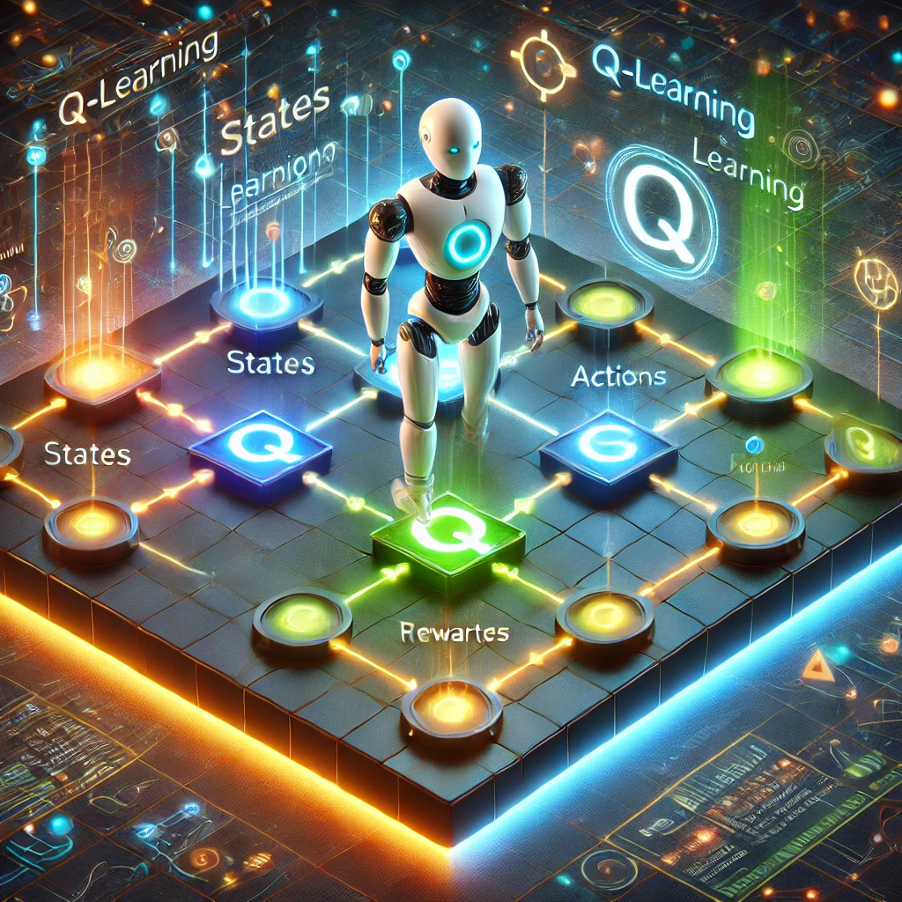

What Is It?

Think of Q-Learning as a treasure map. Each step represents a decision, with the algorithm learning from each action’s consequence to find the shortest path to the treasure—a maximum reward. The “Q” in Q-Learning stands for Quality, as it evaluates the quality of each action in a given state.

Why Is It Being Used? What Challenges Are Being Addressed?

Q-Learning is widely used because it tackles the following challenges:

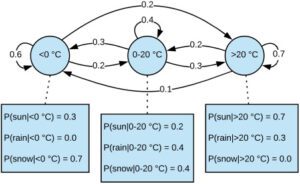

- Model-Free Learning: It doesn’t require a predefined model of the environment, making it versatile.

- Optimal Decision-Making: The algorithm discovers the best policy for maximising rewards over time.

- Scalability: Suitable for complex systems with numerous states and actions.

How Is It Being Used?

Q-Learning follows these steps:

- Initialise Q-Table: Set up a table to store Q-values for each state-action pair.

- Choose Action: Use an ε-greedy policy to balance exploration and exploitation.

- Observe Reward and Next State: Execute the action, observe the reward, and move to the next state.

- Update Q-Value: Apply the Q-Learning update rule.

- Iterate Until Convergence: Repeat until the Q-values stabilise, reflecting the optimal policy.

Different Types

Q-Learning has several notable variations:

- Deep Q-Learning: Combines Q-Learning with neural networks to handle large state spaces.

- Double Q-Learning: Reduces overestimation bias by using two Q-tables or networks.

Different Features

Key features of Q-Learning include:

- Exploration vs. Exploitation Balance: Ensures the agent explores new possibilities while improving known strategies.

- Guaranteed Convergence: If the learning rate decreases appropriately, Q-Learning guarantees convergence to the optimal policy.

Different Software and Tools for Q-Learning

Developers can implement Q-Learning using the following tools:

- OpenAI Gym: Provides simulated environments for Q-Learning experiments.

- TensorFlow and PyTorch: Support implementing Q-Learning algorithms with ease.

- MATLAB RL Toolbox: Offers pre-built functions for Q-Learning and advanced reinforcement learning.

3 Industry Application Examples in Australian Governmental Agencies

- Australian Energy Market Operator (AEMO):

- Use Case: Optimising energy grid operations through demand-response strategies.

- Impact: Reduced operational costs by 10%.

- Australian Taxation Office (ATO):

- Use Case: Enhancing fraud detection by learning patterns of suspicious behaviour.

- Impact: Improved detection rates by 15%.

- Public Transport Authority of Western Australia:

- Use Case: Scheduling train services to minimise delays and congestion.

- Impact: Increased punctuality by 20%.