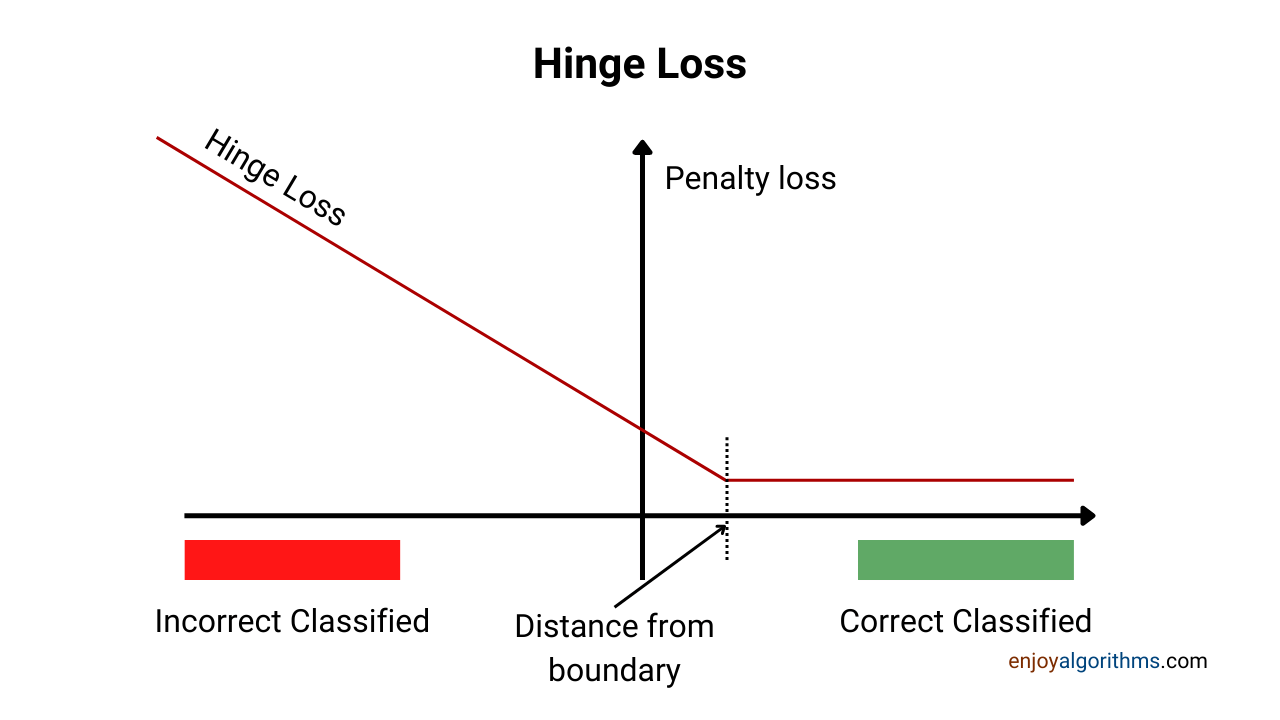

The Hinge cost function, introduced in the 1990s, is vital for improving classification models by maximising margins and penalising misclassifications. This blog explores its history, features, tools, and applications in Australian industries like healthcare and transportation.
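As a rough illustration of how the hinge cost rewards wide margins and penalises misclassifications, here is a minimal sketch in plain Python. The function name `hinge_loss` and the sample labels and scores are assumptions made up for this example; labels are taken to be +1 or -1 and scores are raw classifier outputs.

```python
def hinge_loss(labels, scores):
    """Mean hinge loss: max(0, 1 - y * s) averaged over all examples.

    labels: iterable of +1 / -1 class labels (assumed encoding).
    scores: iterable of raw classifier scores, one per label.
    """
    losses = [max(0.0, 1.0 - y * s) for y, s in zip(labels, scores)]
    return sum(losses) / len(losses)


# Hypothetical data: one confident correct prediction, one correct
# prediction inside the margin, and one misclassification.
example_labels = [1, -1, 1]
example_scores = [2.0, -0.5, -1.0]
example_loss = hinge_loss(example_labels, example_scores)
```

The first example costs nothing because it sits beyond the margin, the second is correct but still penalised for being too close to the boundary, and the third is penalised most because it lands on the wrong side.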

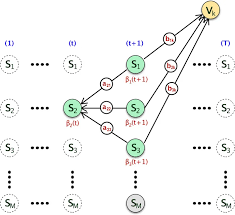

Hidden Markov Models (HMMs) are powerful tools for uncovering patterns in sequential data, with applications spanning healthcare, transport, and finance. This blog delves into their history, functionality, tools, and real-world impact in Australia.

A Brief History: Who Developed Hebb’s Rule? Hebb’s rule, a cornerstone of neural learning, was introduced in 1949 by Canadian psychologist Donald Hebb. Often considered the foundation of modern neural network research, Hebb’s rule has profoundly influenced fields like machine …

Gibbs sampling, a cornerstone of Bayesian statistics, enables efficient sampling from complex probability distributions. This blog explores its history, functionality, tools, and applications in Australian industries like health, statistics, and climate modelling.

Gaussian Mixture Models (GMMs) are a versatile tool for clustering and density estimation, effectively managing overlapping data distributions. This blog explores their history, functionality, tools, and applications in healthcare, traffic analysis, and environmental monitoring in Australia.

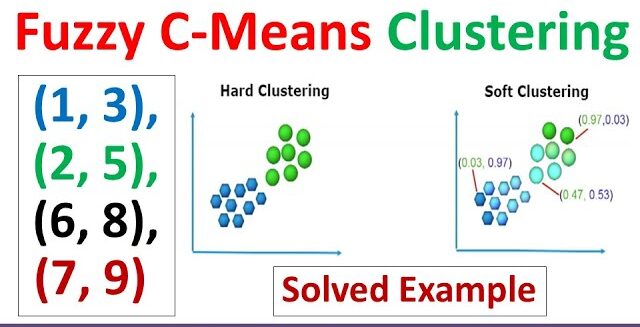

Fuzzy C-means (FCM) clustering is a flexible technique that assigns each data point to multiple clusters with varying degrees of membership. This blog explores its history, functionality, and real-world applications in fields like healthcare, environmental modelling, and education.
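To make the idea of soft cluster membership concrete, the sketch below computes the standard FCM membership update for a single one-dimensional point against fixed cluster centres. The function name `fcm_memberships`, the fuzzifier default `m=2.0`, and the sample values are assumptions for illustration only; a full FCM run would alternate this step with a centre update.

```python
def fcm_memberships(point, centres, m=2.0):
    """Membership of one 1-D point in each cluster (standard FCM update).

    u_i = 1 / sum_k (d_i / d_k) ** (2 / (m - 1)), where d_i is the
    distance from the point to centre i and m > 1 is the fuzzifier.
    """
    dists = [abs(point - c) for c in centres]

    # A point sitting exactly on one or more centres belongs fully to them.
    zeros = [i for i, d in enumerate(dists) if d == 0.0]
    if zeros:
        return [1.0 / len(zeros) if i in zeros else 0.0
                for i in range(len(centres))]

    exponent = 2.0 / (m - 1.0)
    return [1.0 / sum((d_i / d_k) ** exponent for d_k in dists)
            for d_i in dists]


# Hypothetical example: a point at 1.0 with centres at 0.0 and 4.0.
example_u = fcm_memberships(1.0, [0.0, 4.0])
```

The memberships always sum to one, and the point leans strongly towards the nearer centre without being assigned to it exclusively.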

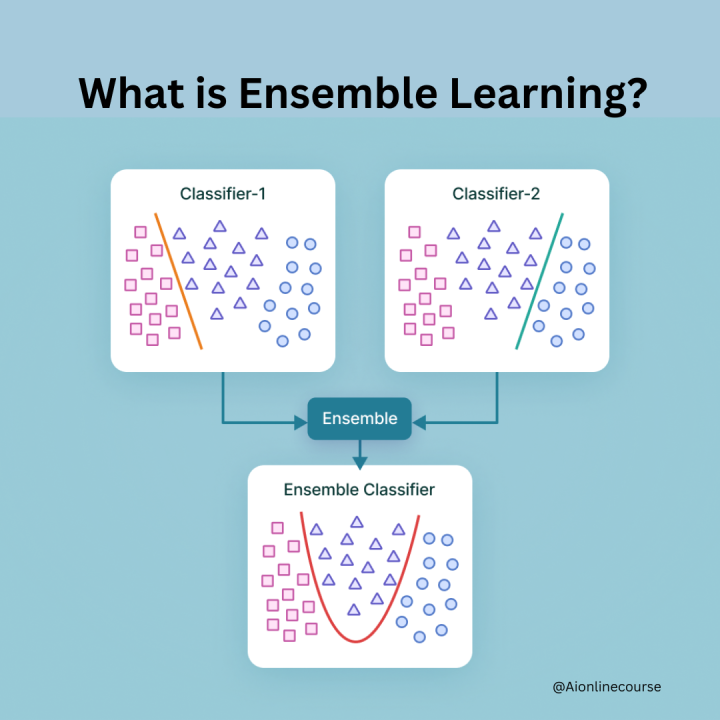

Ensemble learning enhances predictive accuracy by combining multiple models to reduce bias and variance and improve generalisation. Used in fields like healthcare, traffic management, and economic policy, it supports more reliable decision-making through techniques like bagging, boosting, and stacking.

Ensemble learning combines predictions from multiple models to improve accuracy, reduce overfitting, and handle complex data patterns. This blog explores its history, methods, and applications in Australian sectors like healthcare, traffic management, and education analytics.

A Brief History of the EM Algorithm Imagine trying to solve a jigsaw puzzle where some pieces are missing, but you still need to construct the full image. The Expectation-Maximization (EM) Algorithm, introduced in 1977 by Arthur Dempster, Nan Laird, …

The Backward Phase, a vital component of Hidden Markov Models (HMMs), computes the probability of the remaining observations given the current hidden state; combined with the forward pass, it yields the likelihood of observed sequences and the posterior over hidden states. Widely applied in fields like transportation, healthcare, and environmental science, it supports accurate predictive modelling.
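For readers who want to see the recursion behind the backward phase, here is a minimal sketch in plain Python. The function names and the two-state model parameters are hypothetical, chosen only to keep the arithmetic easy to follow; a practical implementation would also rescale or work in log space to avoid underflow on long sequences.

```python
def backward(obs, trans, emit):
    """Backward pass: beta[t][i] = P(obs[t+1:] | state i at time t).

    obs:   list of observation indices.
    trans: trans[i][j] = P(next state j | current state i).
    emit:  emit[j][o]  = P(observation o | state j).
    """
    n_states = len(trans)
    n_steps = len(obs)
    beta = [[0.0] * n_states for _ in range(n_steps)]
    beta[n_steps - 1] = [1.0] * n_states  # nothing left to explain at the end

    for t in range(n_steps - 2, -1, -1):
        for i in range(n_states):
            beta[t][i] = sum(
                trans[i][j] * emit[j][obs[t + 1]] * beta[t + 1][j]
                for j in range(n_states)
            )
    return beta


def sequence_likelihood(obs, init, trans, emit):
    """P(obs) obtained by combining beta[0] with the initial distribution."""
    beta = backward(obs, trans, emit)
    return sum(init[i] * emit[i][obs[0]] * beta[0][i]
               for i in range(len(init)))


# Hypothetical two-state model and a two-step observation sequence.
example_init = [0.5, 0.5]
example_trans = [[0.7, 0.3], [0.4, 0.6]]
example_emit = [[0.9, 0.1], [0.2, 0.8]]
example_obs = [0, 1]
example_beta = backward(example_obs, example_trans, example_emit)
example_p = sequence_likelihood(example_obs, example_init,
                                example_trans, example_emit)
```

Summing over all four possible state paths by hand gives the same likelihood, which is a quick sanity check that the recursion is doing what it claims.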