When evaluating a field of crops, focusing on the weakest areas helps reveal what is holding the harvest back, and addressing even the most challenging conditions improves the health of the entire field. Contrastive Pessimistic Likelihood Estimation (CPLE) operates similarly: it identifies …

A Brief History: Who Developed Inductive Learning?

Inductive learning, a fundamental concept in machine learning, has its roots in the principles of inductive reasoning studied by Aristotle. In the 20th century, pioneers such as Alan Turing and Tom Mitchell applied these principles to …

A Brief History of Label Spreading: Who Developed It?

Label spreading, like its sibling label propagation, grew out of graph theory and became integral to semi-supervised learning. Researchers sought to improve upon label propagation by introducing a …

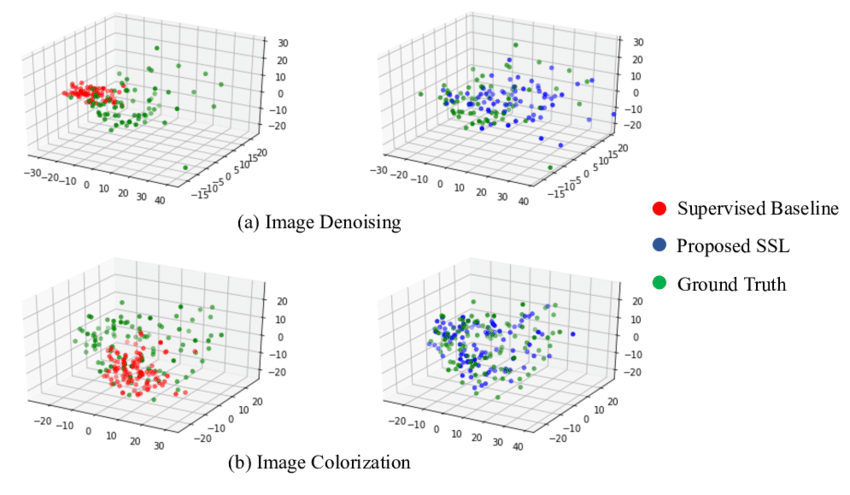

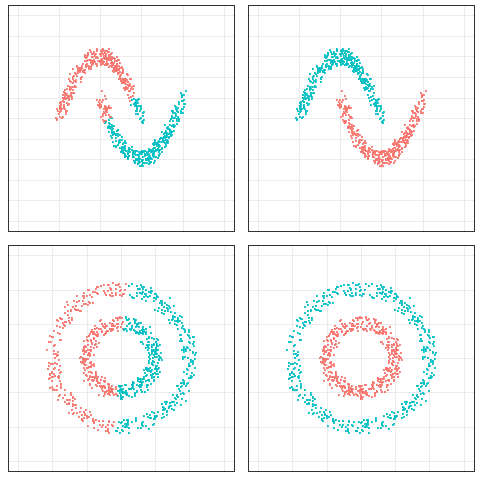

A Brief History: Who Developed the Manifold Assumption?

The manifold assumption, a foundational concept in semi-supervised learning (SSL), became widely recognized in the late 1990s and early 2000s. Researchers such as Sam Roweis, Lawrence Saul, and Joshua Tenenbaum contributed to its …

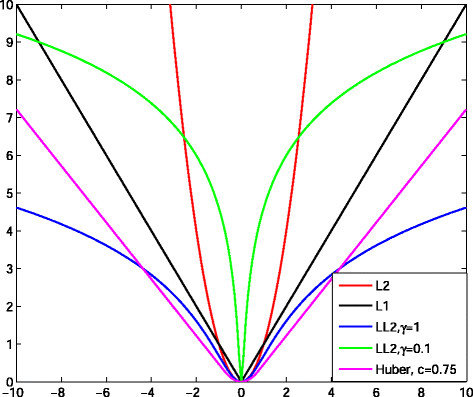

A Brief History: Who Developed the Huber Cost Function?

The Huber cost function, also known as Huber loss, was introduced by Peter J. Huber in 1964. Huber, a Swiss statistician, developed this function to create a robust method for regression …
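For reference, here is a minimal Python sketch of the Huber loss in its usual textbook form: quadratic for residuals whose magnitude is at most a threshold δ, and linear beyond it. The names `huber_loss` and `mean_huber` and the default `delta=1.0` are illustrative choices, not taken from any particular library.

```python
def huber_loss(residual: float, delta: float = 1.0) -> float:
    """Huber loss of one residual: quadratic inside [-delta, delta], linear outside."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    # Linear branch, offset so its value and slope match the quadratic branch at |r| = delta
    return delta * (a - 0.5 * delta)


def mean_huber(residuals: list[float], delta: float = 1.0) -> float:
    """Average Huber loss over a batch of residuals (hypothetical helper)."""
    return sum(huber_loss(r, delta) for r in residuals) / len(residuals)


# A large residual is penalized linearly rather than quadratically
print(huber_loss(0.5))  # 0.125
print(huber_loss(3.0))  # 2.5, versus 0.5 * 3.0**2 = 4.5 for half squared error
print(mean_huber([0.1, -0.2, 10.0]))
```

Because the two branches meet with the same value and slope at |r| = δ, the loss stays differentiable, while an outlier such as the residual 10.0 above contributes 9.5 instead of the 50 it would under half squared error.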

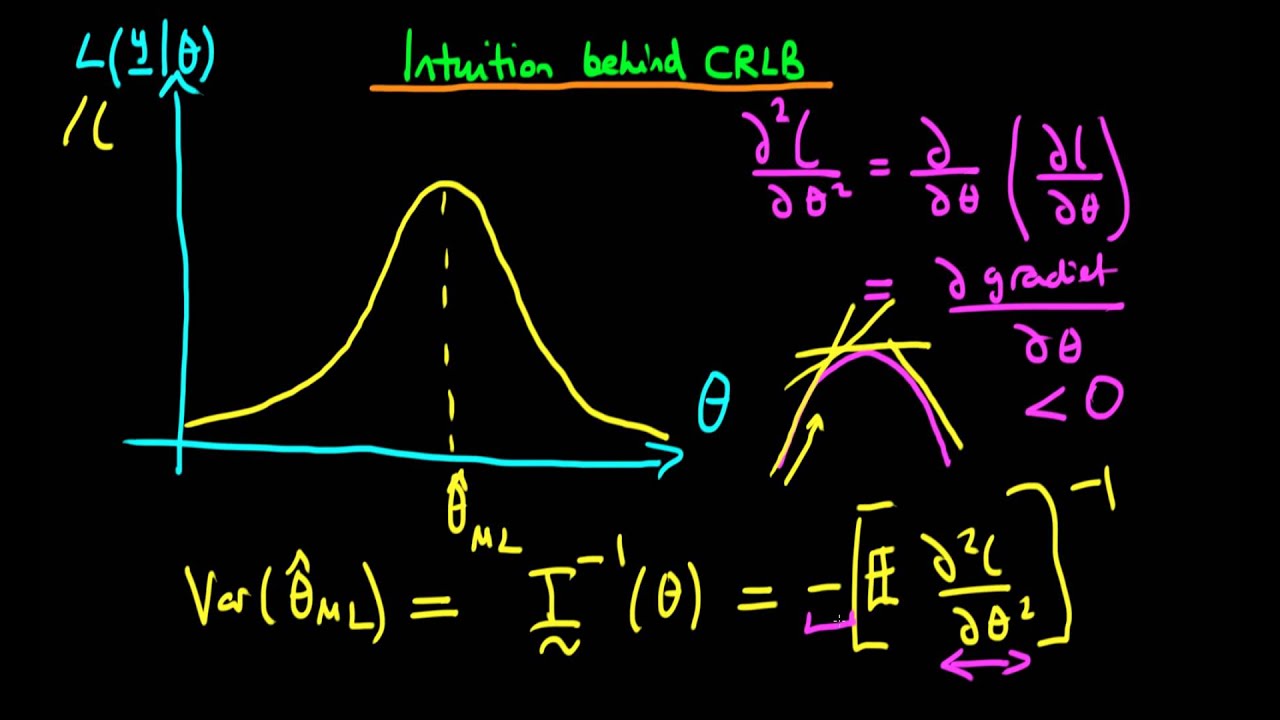

Imagine an archer aiming at a target: the sharpness of the arrow determines how closely it can hit the bullseye. The Cramér-Rao bound is like the sharpness of an estimator: it defines the theoretical lower limit of variance for an unbiased …
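For reference, this is the standard scalar form of the bound, stated under the usual regularity conditions (the support of $f(x;\theta)$ does not depend on $\theta$, and differentiation under the integral sign is allowed) for an unbiased estimator $\hat\theta$ built from $n$ i.i.d. observations with per-observation Fisher information $I(\theta)$:

$$
\operatorname{Var}(\hat\theta) \;\ge\; \frac{1}{n\,I(\theta)},
\qquad
I(\theta) = \mathbb{E}_\theta\!\left[\left(\frac{\partial}{\partial\theta}\log f(X;\theta)\right)^{2}\right].
$$

As a worked example, for $X \sim \mathcal{N}(\mu, \sigma^2)$ with $\sigma^2$ known, $\partial_\mu \log f(X;\mu) = (X-\mu)/\sigma^2$, so $I(\mu) = 1/\sigma^2$ and the bound is $\sigma^2/n$. The sample mean has exactly this variance, so it attains the bound.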

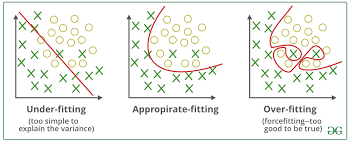

Imagine tuning a guitar: if the strings are too loose, the sound is flat and off-key. This is like underfitting, where a model is too simple to capture the patterns in the data. If the strings are too tight, the sound becomes sharp and …

A Brief History of Weighted Log Likelihood

Weighted log likelihood emerged from advances in statistics and machine learning, as statisticians recognized the need to account for the varying importance of data points in unbalanced datasets. Refined through years of research, it has …

A Brief History of Spectral Clustering: Who Developed It?

Spectral clustering was developed in the late 1990s and quickly became a cornerstone for analyzing data with non-linear structure. Combining graph theory and linear algebra, it offered a robust solution for datasets with intricate relationships. …

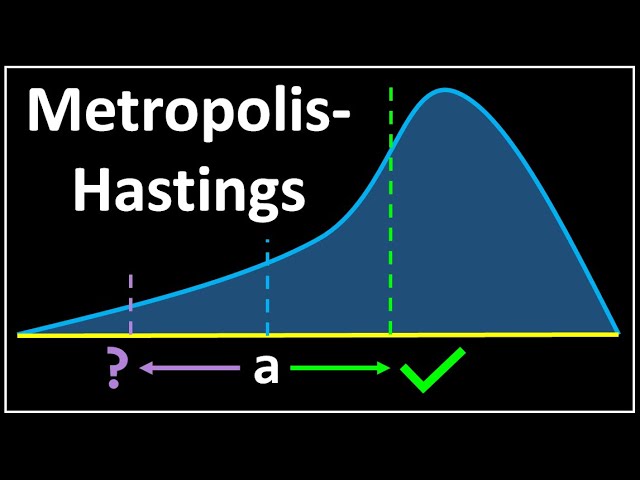

A Brief History of the Metropolis-Hastings Algorithm

The Metropolis-Hastings algorithm, a cornerstone of Bayesian computation, began its journey in 1953 with Nicholas Metropolis and his colleagues and was generalized in 1970 by W. K. Hastings. Initially devised for thermodynamic simulations, the algorithm has since …
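A minimal Python sketch of the random-walk variant of Metropolis-Hastings follows. It assumes a Gaussian proposal and, as an illustrative target, an unnormalized standard normal density. Because this proposal is symmetric, the Hastings correction $q(x \mid x')/q(x' \mid x)$ cancels and the acceptance probability reduces to Metropolis's original ratio $\min(1, p(x')/p(x))$. The names `metropolis_hastings`, `log_target`, `step`, and `seed` are hypothetical.

```python
import math
import random


def log_target(x: float) -> float:
    """Unnormalized log-density of a standard normal (illustrative target)."""
    return -0.5 * x * x


def metropolis_hastings(log_p, n_samples: int, x0: float = 0.0,
                        step: float = 1.0, seed: int = 0):
    """Random-walk Metropolis-Hastings; returns the chain and the acceptance rate."""
    rng = random.Random(seed)
    x, lp = x0, log_p(x0)
    samples, accepted = [], 0
    for _ in range(n_samples):
        # Symmetric Gaussian proposal centred on the current state
        proposal = x + rng.gauss(0.0, step)
        lp_prop = log_p(proposal)
        # Accept with probability min(1, p(x') / p(x)); the q terms cancel for a symmetric q
        if rng.random() < math.exp(min(0.0, lp_prop - lp)):
            x, lp = proposal, lp_prop
            accepted += 1
        samples.append(x)  # a rejected move repeats the current state
    return samples, accepted / n_samples


samples, rate = metropolis_hastings(log_target, 20_000, step=1.0, seed=42)
kept = samples[2_000:]  # discard burn-in
mean = sum(kept) / len(kept)
var = sum((s - mean) ** 2 for s in kept) / len(kept)
print(f"acceptance rate {rate:.2f}, mean {mean:.3f}, variance {var:.3f}")
```

Only ratios of the target density enter the acceptance test, so the normalizing constant never needs to be known. Discarding the first 2,000 draws as burn-in is an arbitrary choice for this illustration.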