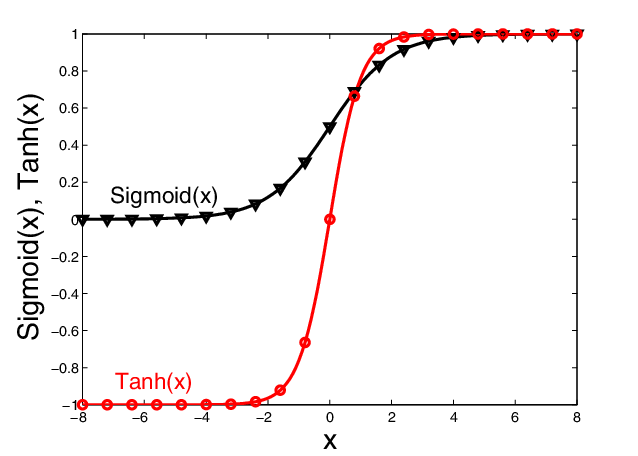

Sigmoid and tanh are foundational activation functions in neural networks. Both squash their inputs non-linearly into a bounded range, (0, 1) for sigmoid and (-1, 1) for tanh, which lets networks model complex relationships. They are used in classification, traffic management, and climate analysis, and remain relevant despite the vanishing gradient problem, in which gradients shrink towards zero for large-magnitude inputs.
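As a rough illustration of the vanishing gradient issue, the sketch below evaluates both functions and their derivatives at a few inputs. The function names are illustrative choices, not taken from any particular library.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative of sigmoid: s(x) * (1 - s(x)), at most 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2, at most 1."""
    t = math.tanh(x)
    return 1.0 - t * t


# Gradients are largest at 0 and shrink quickly as |x| grows.
for x in (0.0, 2.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.2e}  tanh'={tanh_grad(x):.2e}")
```

Because each layer multiplies the backpropagated signal by one of these derivatives, a stack of saturated units can drive the overall gradient close to zero.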

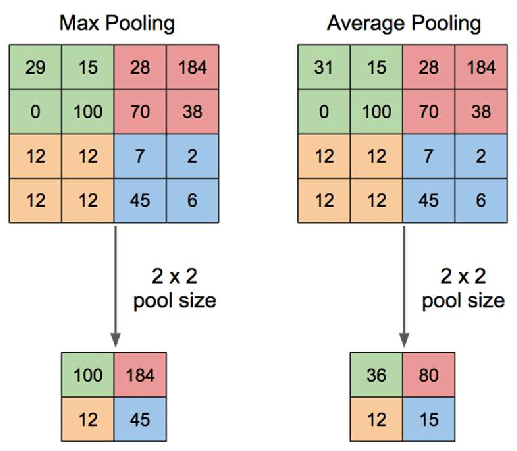

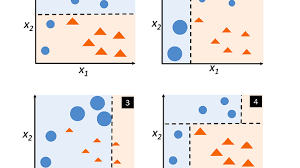

Pooling layers in convolutional neural networks (CNNs) downsample feature maps, which reduces dimensionality, improves tolerance to noise and small shifts, and makes feature extraction more robust. Used in applications such as medical imaging, traffic analysis, and satellite monitoring, they are an important source of computational efficiency.
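A minimal sketch of max pooling on a small feature map may make the downsampling concrete. The function name, the 2x2 window, and the sample values are assumptions chosen for illustration.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Slide a size x size window over a 2D list and keep each window's maximum."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 2],
    [0, 1, 9, 8],
    [2, 1, 7, 5],
]
print(max_pool2d(feature_map))  # [[6, 2], [2, 9]]
```

The 4x4 input becomes a 2x2 output, so later layers process a quarter of the values while the strongest activation in each region is kept.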

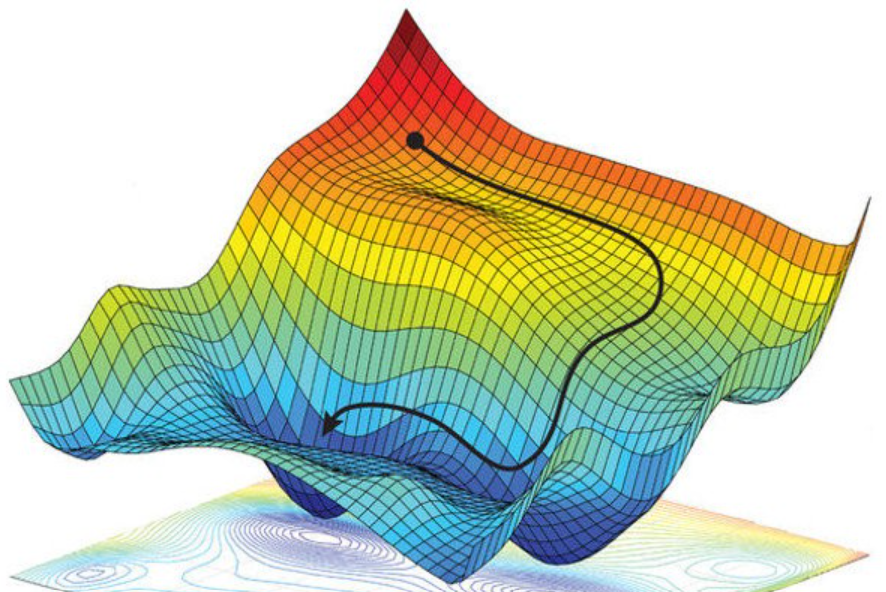

Optimisation algorithms search for the best available solution to complex problems across industries, from supply chain management to energy systems. In Australia, they are widely used in transport, energy, and healthcare to improve efficiency and resource allocation.

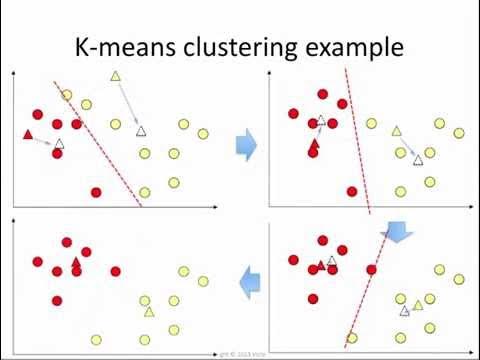

K-Means++, introduced in 2007 by Arthur and Vassilvitskii, improves K-Means clustering by choosing the initial centroids so that they are spread out across the data. Used in Australian public health, census analysis, and environmental insights, it typically leads to faster convergence and more reliable clusters than purely random initialisation.
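The seeding step can be sketched as follows: the first centroid is picked uniformly at random, and each later centroid is sampled with probability proportional to its squared distance from the nearest centroid already chosen. The function name and sample points are illustrative, and the sketch assumes the data contains at least `k` distinct points.

```python
import random


def kmeans_pp_init(points, k, seed=0):
    """Choose k initial centroids from points using K-Means++ style seeding."""
    rng = random.Random(seed)
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Squared distance from each point to its nearest chosen centroid.
        sq_dists = [
            min(sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids)
            for p in points
        ]
        # Far-away points are more likely to be picked; chosen points have weight 0.
        centroids.append(rng.choices(points, weights=sq_dists, k=1)[0])
    return centroids


points = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.1, 4.9), (10.0, 0.0), (9.8, 0.3)]
print(kmeans_pp_init(points, k=3))
```

The returned centroids would then be passed to an ordinary K-Means loop in place of a purely random start.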

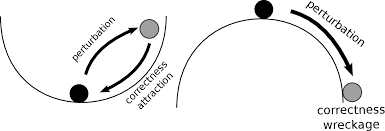

Gradient perturbation protects data privacy in AI by injecting noise into gradient updates during training, which can help organisations meet obligations under regulations such as GDPR and the Australian Privacy Act. Used in the healthcare, education, and energy sectors, it trades some model utility for stronger privacy.
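A simplified sketch of the idea is shown below. It assumes the common recipe of clipping the gradient norm before adding Gaussian noise, as in differentially private SGD; the clipping step, function name, and parameter names are assumptions rather than details from the text, and a full implementation would clip per-example gradients and track the privacy budget.

```python
import math
import random


def perturb_gradient(grad, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip a gradient vector to clip_norm, then add Gaussian noise to each entry."""
    rng = rng or random.Random()
    norm = math.sqrt(sum(g * g for g in grad))
    # Scale down only if the gradient is longer than clip_norm.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [g * scale for g in grad]
    # Noise standard deviation is tied to the clipping bound (assumed convention).
    sigma = noise_multiplier * clip_norm
    return [g + rng.gauss(0.0, sigma) for g in clipped]


rng = random.Random(42)
print(perturb_gradient([3.0, 4.0], clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

A larger `noise_multiplier` hides individual contributions more effectively but makes each update less accurate, which is the privacy and utility trade-off in practice.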

Adam, a powerful optimisation algorithm introduced in 2014, is widely used in deep learning for its adaptive learning rates and momentum integration. Its applications range from healthcare analytics to public transport planning, making it a cornerstone of modern AI.
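To show how the adaptive learning rate and momentum fit together, here is a minimal single-parameter sketch of the Adam update with bias correction. The function name, the quadratic test objective, and the learning rate of 0.1 are illustrative assumptions; the beta and epsilon values are the commonly quoted defaults.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimise a one-dimensional function given its gradient, using Adam updates."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1.0 - beta1) * g        # momentum: running mean of gradients
        v = beta2 * v + (1.0 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1.0 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1.0 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # step scaled by gradient magnitude
    return x


# Minimise f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(round(x_min, 3))
```

In a real network the same update is applied element-wise to every parameter, so each weight effectively gets its own step size.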

AdaBoost, introduced in 1995 by Freund and Schapire, combines many weak learners into a strong ensemble by reweighting the training examples so that each new learner concentrates on the cases earlier ones got wrong. Used in healthcare, traffic optimisation, and education, it remains a staple of machine learning practice.
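The sketch below shows one way this reweighting can look, using one-dimensional threshold rules (decision stumps) as the weak learners. The function names, the stump search, and the toy data set are assumptions made for illustration.

```python
import math


def train_adaboost(xs, ys, rounds=3):
    """Fit AdaBoost with threshold stumps. ys must contain labels -1 or +1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        best = None
        # Weak learner: predict polarity when x >= threshold, else -polarity.
        for thr in sorted(set(xs)):
            for pol in (1, -1):
                preds = [pol if x >= thr else -pol for x in xs]
                err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
                if best is None or err < best[0]:
                    best = (err, thr, pol, preds)
        err, thr, pol, preds = best
        err = min(max(err, 1e-10), 1.0 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, thr, pol))
        # Increase weights of misclassified examples, decrease the rest.
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * (pol if x >= thr else -pol) for a, thr, pol in ensemble)
    return 1 if score >= 0 else -1


xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
model = train_adaboost(xs, ys, rounds=3)
print([predict(model, x) for x in xs])
```

No single threshold can separate this data, but a weighted vote of a few stumps can, which is the sense in which weak learners are boosted.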

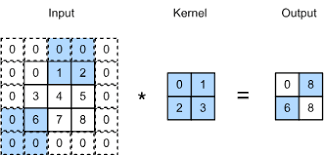

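Strides and padding are essential concepts in convolutional neural networks (CNNs), which were first applied to image recognition tasks in the 1980s. The stride sets how far the filter moves between positions, and padding adds values around the input border; together they control the size of the output.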
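The effect of both settings on output size follows the standard formula floor((n + 2p - k) / s) + 1 for input size n, kernel size k, stride s, and padding p. A small helper, with an illustrative name, makes it easy to check a few cases:

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Output length along one dimension of a convolution."""
    return (n + 2 * padding - kernel) // stride + 1


print(conv_output_size(32, kernel=3, stride=1, padding=1))  # 32: size preserved
print(conv_output_size(32, kernel=3, stride=2, padding=1))  # 16: halved by stride 2
print(conv_output_size(28, kernel=5, stride=1, padding=0))  # 24: shrinks without padding
```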

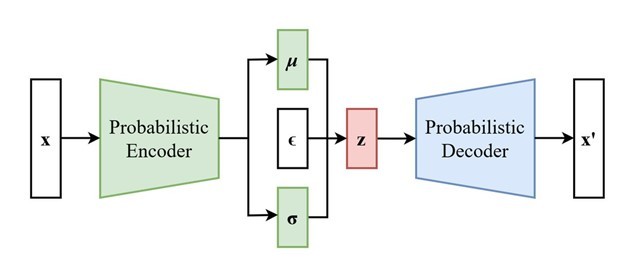

Variational Autoencoders (VAEs) are powerful tools that encode data into probabilistic latent spaces, enabling creative data generation and advanced applications like image synthesis and anomaly detection. Widely used across Australian sectors, they improve statistical modelling, satellite imagery, and health data analysis.
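Two pieces usually sit at the heart of a probabilistic latent space: sampling a latent vector from the encoder's predicted Gaussian, and penalising that Gaussian for straying from a standard normal prior. The sketch below assumes a diagonal Gaussian encoder output given as `mu` and `log_var`; those names and the sample values are illustrative, and the encoder and decoder networks are omitted.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, diag(exp(log_var))) and N(0, I)."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = reparameterize(mu, log_var, rng)
print(z, kl_to_standard_normal(mu, log_var))
```

Sampling new latent vectors and passing them through a trained decoder is what enables generation, while unusually poor reconstructions can flag anomalies.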

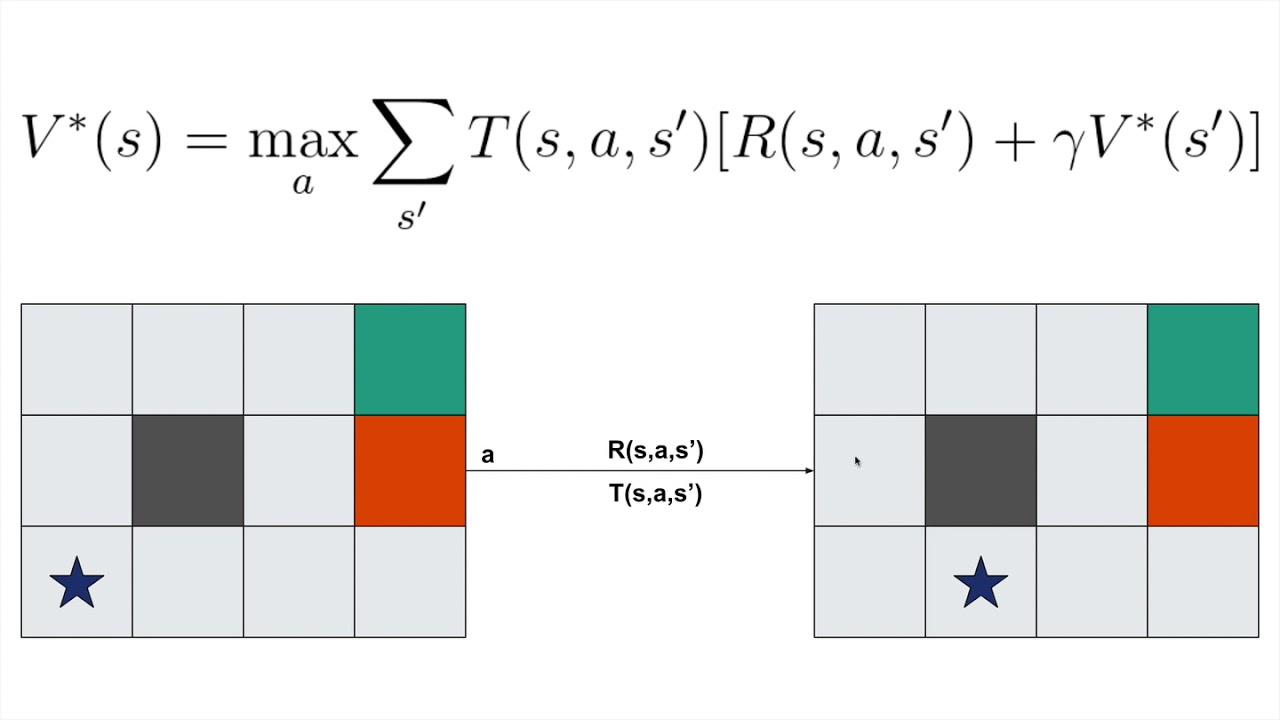

Value iteration, introduced by Richard Bellman in the 1950s, is a dynamic programming algorithm that optimises decision-making in structured environments. Widely used in reinforcement learning, it plays a pivotal role in policy derivation, resource allocation, and traffic optimisation.
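A minimal sketch on a three-state toy problem shows how repeated Bellman updates produce state values and a greedy policy. The transition table format, state names, and discount factor are assumptions made for the example.

```python
def value_iteration(transitions, gamma=0.9, tol=1e-8):
    """transitions[state][action] is a list of (probability, next_state, reward)."""
    values = {s: 0.0 for s in transitions}

    def action_value(outcomes):
        return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)

    while True:
        delta = 0.0
        for state, actions in transitions.items():
            if not actions:  # terminal state: value stays 0
                continue
            best = max(action_value(o) for o in actions.values())
            delta = max(delta, abs(best - values[state]))
            values[state] = best  # in-place update
        if delta < tol:
            break

    # Greedy policy: pick the action with the highest expected value.
    policy = {
        s: max(acts, key=lambda a: action_value(acts[a]))
        for s, acts in transitions.items()
        if acts
    }
    return values, policy


mdp = {
    "A": {"right": [(1.0, "B", 0.0)], "stay": [(1.0, "A", 0.0)]},
    "B": {"right": [(1.0, "goal", 1.0)], "left": [(1.0, "A", 0.0)]},
    "goal": {},
}
values, policy = value_iteration(mdp)
print(values)  # {'A': 0.9, 'B': 1.0, 'goal': 0.0}
print(policy)  # {'A': 'right', 'B': 'right'}
```

State A is worth 0.9 because the reward of 1 sits one discounted step beyond B, which is the kind of look-ahead the Bellman update propagates backwards.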