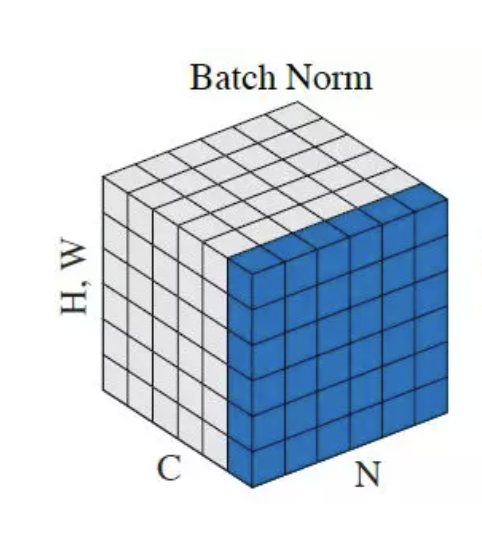

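Batch normalisation, introduced by Sergey Ioffe and Christian Szegedy in 2015, normalises each layer's inputs using the mean and variance of the current mini-batch and then applies a learned scale and shift. This enables faster training with higher learning rates, stabilises deep neural networks, and often improves overall model performance. It has become a standard tool in AI applications across industries, including healthcare, education, and environmental science.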
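
A minimal NumPy sketch of the training-mode forward pass may help show what is being normalised. It assumes a fully connected layer whose inputs have shape (batch, features). The function name `batch_norm_train` is illustrative, and the running averages that a real layer keeps for inference are omitted.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalisation for an array of shape (batch, features).

    Each feature is normalised with the mean and (biased) variance of the
    current mini-batch, then rescaled by gamma and shifted by beta, which are
    learned parameters in a real network.
    """
    mu = x.mean(axis=0)                     # per-feature mini-batch mean
    var = x.var(axis=0)                     # per-feature mini-batch variance
    x_hat = (x - mu) / np.sqrt(var + eps)   # zero mean, unit variance
    return gamma * x_hat + beta             # learned scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0).round(6), y.std(axis=0).round(6))
```

With `gamma` set to ones and `beta` to zeros, each output column has a mean close to 0 and a standard deviation close to 1. During training, the network learns `gamma` and `beta`, so it can partly undo the normalisation where that helps.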

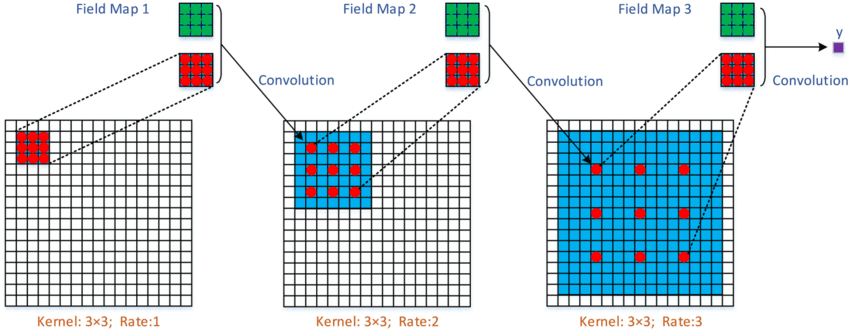

Atrous convolutions, also known as dilated convolutions, enhance convolutional neural networks by spacing the kernel's weights apart, which expands the receptive field without adding parameters or reducing spatial resolution. This makes them well suited to dense prediction on high-resolution images. Popularised around 2015 and 2016 by segmentation models such as DeepLab, they have since become a cornerstone of semantic segmentation, object detection, and geospatial analysis.
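
To make the receptive-field claim concrete, here is a small 1-D sketch in Python with NumPy. The helper `dilated_conv1d` is hypothetical, not a library function. A kernel of `k` weights with dilation rate `d` spans `d * (k - 1) + 1` input samples, so raising `d` widens the view while the number of weights stays at `k`.

```python
import numpy as np

def dilated_conv1d(x, w, dilation=1):
    """'Valid' 1-D dilated convolution, written as cross-correlation as most
    deep learning libraries do. Kernel taps are spaced `dilation` samples apart."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    k = len(w)
    span = dilation * (k - 1) + 1          # receptive field of one output
    n_out = len(x) - span + 1
    if n_out <= 0:
        raise ValueError("input is shorter than the dilated kernel's span")
    # Output i combines x[i], x[i + d], ..., x[i + (k - 1) * d].
    return np.array([np.dot(x[i:i + span:dilation], w) for i in range(n_out)])

x = np.arange(10)
w = np.ones(3)  # the same 3 weights for every dilation rate
for d in (1, 2, 4):
    y = dilated_conv1d(x, w, dilation=d)
    print(f"dilation={d}: receptive field={d * 2 + 1}, first output={y[0]}")
```

With this three-weight kernel, the first output sums `x[0], x[1], x[2]` at dilation 1, `x[0], x[2], x[4]` at dilation 2, and `x[0], x[4], x[8]` at dilation 4. That covers 3, 5, and 9 input samples respectively with the same three weights. Frameworks expose the rate as a parameter, for example `dilation` in PyTorch's `torch.nn.Conv2d`.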

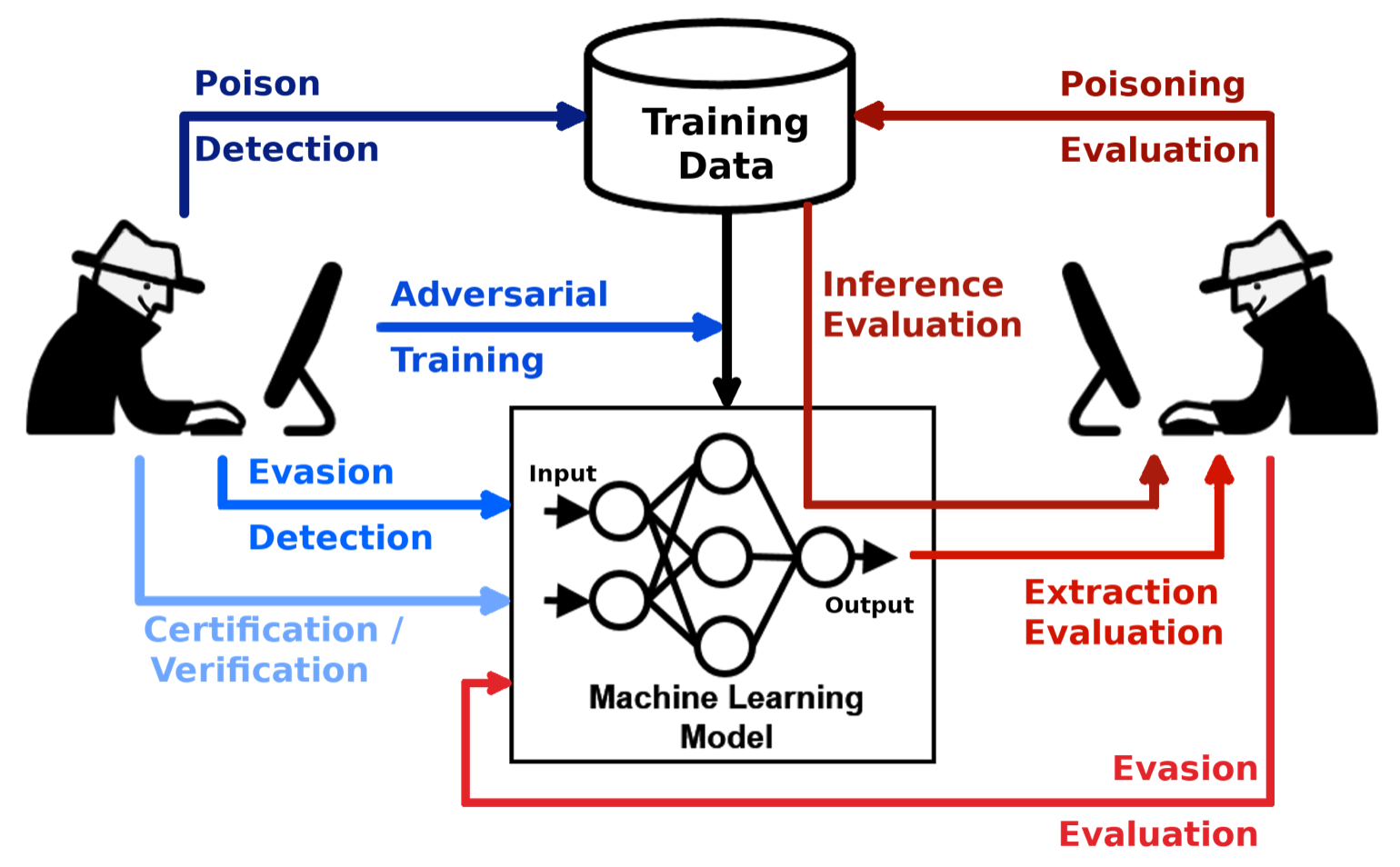

Adversarial training, brought to prominence in 2014 by Ian Goodfellow and colleagues, strengthens machine learning models against malicious inputs by exposing them to adversarial examples (inputs deliberately perturbed to cause errors) during training. This improves model security and robustness, making it important for applications in sensitive areas such as healthcare, finance, and defence.
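
The sketch below illustrates the idea on the simplest possible model, a logistic-regression classifier in NumPy. It uses the fast gradient sign method (FGSM) from Goodfellow et al.'s 2014 paper to generate adversarial examples. The model, the synthetic two-cluster data, and the values `eps=0.5`, `lr=0.1`, and `epochs=200` are assumptions chosen for illustration. Real systems apply the same loop to deep networks with automatic differentiation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(X, y, w, b, eps):
    """Fast gradient sign method for a logistic-regression model.

    For binary cross-entropy, the gradient of the loss with respect to an
    input x is (sigmoid(w.x + b) - y) * w. Each input is moved by eps along
    the sign of that gradient, the step that most increases a linear
    approximation of the loss when every feature may change by at most eps.
    """
    p = sigmoid(X @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)

def train(X, y, eps=0.0, lr=0.1, epochs=200):
    """Full-batch gradient descent. With eps > 0, every step also trains on
    FGSM examples generated against the current weights (adversarial training)."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        if eps > 0:
            X_adv = fgsm(X, y, w, b, eps)
            Xb, yb = np.vstack([X, X_adv]), np.concatenate([y, y])
        else:
            Xb, yb = X, y
        err = sigmoid(Xb @ w + b) - yb       # dLoss/dlogit for each example
        w -= lr * Xb.T @ err / len(yb)
        b -= lr * err.mean()
    return w, b

# Hypothetical two-cluster data set.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(3.0, 1.0, (100, 2)), rng.normal(-3.0, 1.0, (100, 2))])
y = np.concatenate([np.ones(100), np.zeros(100)])
w, b = train(X, y, eps=0.5)
```

Each training step weights the clean and adversarial losses equally. For a linear model like this one, the FGSM perturbation always raises the loss whenever the weights are non-zero, which is exactly the weakness adversarial training aims to reduce.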

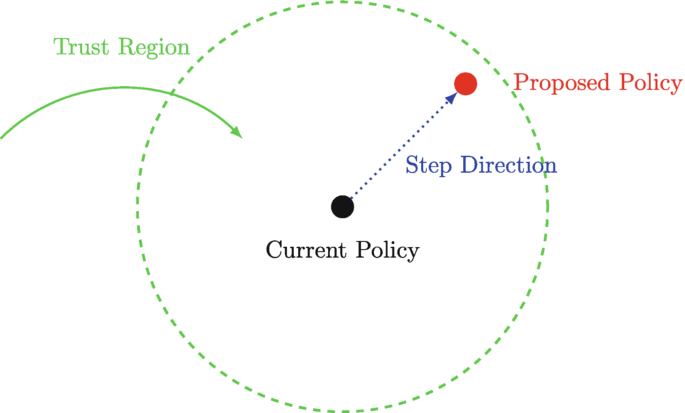

The Advanced Policy Estimation Algorithm (APEA), building on foundational reinforcement learning work, optimises decision-making in dynamic environments through adaptive and scalable policy learning. It enables smarter resource allocation, fraud detection, and environmental management across industries.

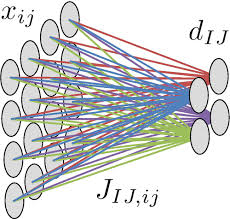

Advanced neural models, inspired by the human brain and shaped by pioneers including Geoffrey Hinton, Yann LeCun, and Yoshua Bengio, have revolutionised AI with architectures such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and Transformers. These models tackle challenges in complex data processing, pattern recognition, and adaptability, and are applied across diverse domains.

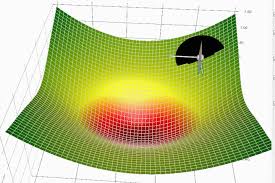

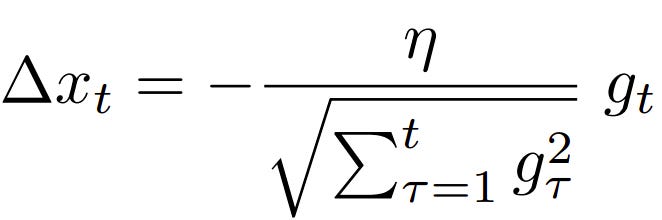

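AdaGrad, introduced by John Duchi, Elad Hazan, and Yoram Singer in 2011, is an adaptive optimisation algorithm that gives each parameter its own learning rate, scaled down by the accumulated sum of that parameter's past squared gradients. Rarely updated parameters therefore keep larger steps, which makes it particularly effective on sparse datasets. Because it adapts step sizes automatically, it reduces the need for manual learning-rate tuning and can speed up convergence in machine learning workflows.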
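
Here is a minimal NumPy sketch of the diagonal AdaGrad update. It assumes the caller supplies a gradient function. The names `adagrad` and `grad`, and the quadratic test problem, are illustrative. Each parameter accumulates `G += g * g` and steps by `lr * g / (sqrt(G) + eps)`, so parameters with a history of large gradients take smaller steps.

```python
import numpy as np

def adagrad(grad_fn, x0, lr=0.5, eps=1e-8, steps=500):
    """Diagonal AdaGrad: each parameter's step is divided by the square root
    of the running sum of its own squared gradients."""
    x = np.array(x0, dtype=float)
    G = np.zeros_like(x)                     # accumulated squared gradients
    for _ in range(steps):
        g = grad_fn(x)
        G += g * g
        x -= lr * g / (np.sqrt(G) + eps)     # per-parameter effective learning rate
    return x

def grad(x):
    """Gradient of the hypothetical ill-conditioned quadratic f(x) = x0^2 + 10 * x1^2."""
    return np.array([2.0 * x[0], 20.0 * x[1]])

print(adagrad(grad, [3.0, 3.0]))
```

Although the second coordinate is ten times more curved, both coordinates follow the same path towards 0. Multiplying a parameter's gradients by a constant also multiplies the square root of their accumulated squares by that constant, and the two cancel. The drawback is that `G` only ever grows, so the effective learning rate keeps shrinking.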

AdaDelta is an adaptive learning rate optimisation algorithm introduced by Matthew D. Zeiler in 2012 as an extension of AdaGrad. It replaces AdaGrad's ever-growing sum of squared gradients with exponentially decaying averages, so learning rates no longer shrink towards zero, and it removes the need to set a global learning rate by hand. It is widely used for its efficiency and stability.
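
The update from Zeiler's paper can be sketched in a few lines of NumPy. Both running averages decay at rate `rho`, and the step is the ratio of the root-mean-square of recent updates to that of recent gradients, so there is no global learning rate to choose. The function names and the values `rho=0.95` and `eps=1e-6` are assumptions for illustration.

```python
import numpy as np

def adadelta(grad_fn, x0, rho=0.95, eps=1e-6, steps=1000):
    """AdaDelta: step = -RMS[dx]_{t-1} / RMS[g]_t * g_t,
    where RMS[v] = sqrt(E[v^2] + eps) and E[.] are exponentially decaying averages."""
    x = np.array(x0, dtype=float)
    Eg2 = np.zeros_like(x)    # decaying average of squared gradients
    Edx2 = np.zeros_like(x)   # decaying average of squared updates
    for _ in range(steps):
        g = grad_fn(x)
        Eg2 = rho * Eg2 + (1 - rho) * g * g
        dx = -np.sqrt(Edx2 + eps) / np.sqrt(Eg2 + eps) * g
        Edx2 = rho * Edx2 + (1 - rho) * dx * dx
        x += dx
    return x

def grad_sq(x):
    """Gradient of the hypothetical test function f(x) = x^2."""
    return 2.0 * x

print(adadelta(grad_sq, [3.0]))
```

Because `Edx2` starts at zero, the first steps are on the order of `sqrt(eps)`, so AdaDelta warms up slowly. In effect, `eps` also acts as an initial step size.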

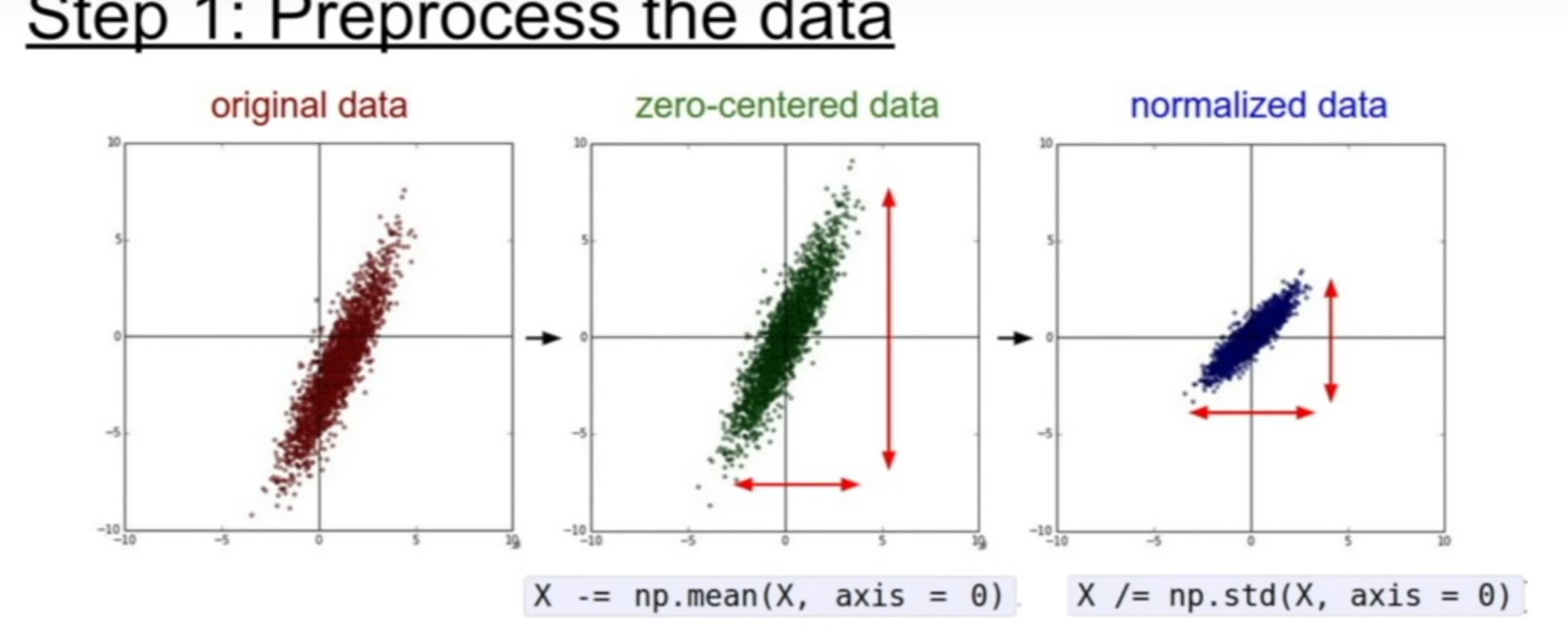

Zero-centring and whitening are fundamental pre-processing techniques in machine learning. Zero-centring subtracts each feature's mean so the data is centred at the origin. Whitening goes further, decorrelating the features and scaling them to unit variance, which removes redundancy between them. These methods can improve model accuracy and speed up training, and are widely used across industries such as health, environment, and transport in Australia.
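
The two steps can be sketched with NumPy using PCA whitening. This is one common choice; ZCA whitening, which rotates the result back to the original axes, is another. The function names, the mixing matrix used to create correlated synthetic data, and `eps` (a small constant guarding against division by near-zero eigenvalues) are illustrative assumptions.

```python
import numpy as np

def zero_centre(X):
    """Subtract each feature's mean so every column has mean zero."""
    return X - X.mean(axis=0)

def pca_whiten(X, eps=1e-8):
    """PCA whitening: rotate zero-centred data onto the eigenvectors of its
    covariance matrix, then divide each component by its standard deviation,
    so the result has (approximately) identity covariance."""
    Xc = zero_centre(X)
    cov = Xc.T @ Xc / Xc.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)   # cov is symmetric
    return Xc @ eigvecs / np.sqrt(eigvals + eps)

rng = np.random.default_rng(0)
mix = np.array([[2.0, 0.0, 0.0], [1.5, 0.5, 0.0], [0.3, 0.2, 1.0]])
X = rng.normal(size=(1000, 3)) @ mix + 10.0   # correlated features, non-zero mean
Xw = pca_whiten(X)
print(np.round(np.cov(Xw, rowvar=False, bias=True), 3))
```

The printed covariance is close to the identity matrix: the features now have zero mean, unit variance, and no correlation. For ZCA whitening, append `@ eigvecs.T` to the return expression in `pca_whiten`.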

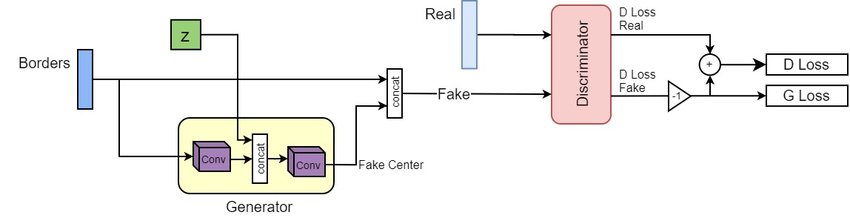

The Wasserstein GAN (WGAN), introduced in 2017 by Martin Arjovsky and collaborators, revolutionised GAN training by replacing the original GAN objective with an estimate of the Wasserstein (Earth Mover's) distance between real and generated data, which reduces training instability and mode collapse. Its applications range from generating realistic images to creating synthetic data, with significant impact globally and in Australia.
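
The practical advantage of the Wasserstein distance shows up in a one-dimensional version of the parallel-lines example from the WGAN paper, sketched here with NumPy. When real and generated samples sit at different points and do not overlap, the Jensen-Shannon divergence stays at log 2 however far apart they are. The Wasserstein-1 distance instead equals the gap, so it still tells the generator which way to move. The helper `wasserstein_1d` is illustrative: it computes the distance exactly for equal-size 1-D samples. A real WGAN instead estimates it with a critic network kept approximately 1-Lipschitz (by weight clipping in the original paper).

```python
import numpy as np

def wasserstein_1d(u, v):
    """Wasserstein-1 (Earth Mover's) distance between two equal-size 1-D samples
    with equal weights. In one dimension the optimal transport plan pairs the
    i-th smallest value of u with the i-th smallest value of v."""
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    if u.shape != v.shape:
        raise ValueError("this sketch assumes samples of equal size")
    return float(np.mean(np.abs(u - v)))

real = np.zeros(100)                  # "real" data: all mass at 0
for theta in (0.5, 1.0, 2.0):
    fake = np.full(100, theta)        # "generated" data: all mass at theta
    print(theta, wasserstein_1d(real, fake))
```

The distance grows in step with `theta`. For general 1-D samples, SciPy's `scipy.stats.wasserstein_distance` computes the same quantity.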

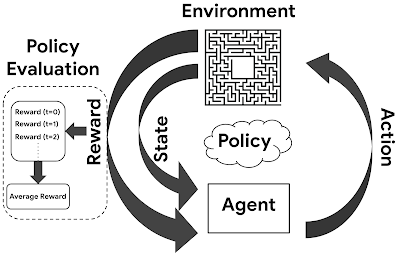

The concept of a “policy” in machine learning comes from reinforcement learning: a policy is the strategy an agent uses to choose actions, mapping each observed state to an action or to a probability distribution over actions. Well-designed policies make decision-making more efficient, adaptable, and scalable, with applications in autonomous vehicles, audits, and resource allocation in Australia.
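
A policy can be written down directly. The NumPy sketch below shows a tabular stochastic policy π(a | s), given by a softmax over per-state action preferences, alongside the deterministic greedy policy derived from the same table. The preference table `theta`, its size, and the function names are hypothetical. In practice the preferences would be learned, for example by a policy-gradient method.

```python
import numpy as np

def softmax_policy(theta, state):
    """Stochastic policy pi(a | s): a softmax over the action preferences theta[state]."""
    prefs = np.asarray(theta[state], dtype=float)
    z = np.exp(prefs - prefs.max())     # subtract max for numerical stability
    return z / z.sum()

def greedy_policy(theta, state):
    """Deterministic policy: always pick the highest-preference action."""
    return int(np.argmax(theta[state]))

def sample_action(theta, state, rng):
    """Draw an action from the stochastic policy."""
    probs = softmax_policy(theta, state)
    return int(rng.choice(len(probs), p=probs))

# Hypothetical preferences for 2 states x 3 actions.
theta = np.array([[1.0, 2.0, 0.5],
                  [0.0, 0.0, 0.0]])
rng = np.random.default_rng(0)
print(softmax_policy(theta, 0), greedy_policy(theta, 0), sample_action(theta, 0, rng))
```

In state 0 the greedy policy always picks action 1. The softmax policy picks it most often but still explores the other actions. In state 1, where all preferences are equal, every action has probability 1/3.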